Statistical Thinking in Python (Part 2)

- Course: DataCamp: Statistical Thinking in Python (Part 2)

- This notebook is a reproducible reference.

- The material is from the course

- I completed the exercises

- If you find the content beneficial, consider a DataCamp Subscription.

def create_dir_save_filedownloads and saves the required data (data/2020-06-01_statistical_thinking_2) and image (Images/2020-06-01_statistical_thinking_2) files.

Course Description

An understanding of statistical inference will be provided. Building on the foundations from Part 1, your ability to estimate parameters and conduct hypothesis tests using real-world data sets will be enhanced. Hands-on analysis, including the study of beak measurements of Darwin’s finches, will solidify your skills. By the end of the course, you’ll be equipped with advanced tools and practical experience to confidently approach and solve your own data inference challenges.

Synopsis

Parameter estimation by optimization

- Objective: Estimate probability distribution parameters to describe data.

- Key Concepts:

- Importance of parameter estimation in statistical inference.

- Use of probability distributions to model data.

- Methods:

- Normality check using Michelson’s speed of light data.

- Calculation of mean and standard deviation.

- Comparison of empirical and theoretical CDFs.

- Findings:

- Good parameter estimates provide a good fit between empirical and theoretical CDFs.

- Impact of poor parameter estimates on the accuracy of the CDF fit.

Bootstrap confidence intervals

- Objective: Estimate confidence intervals using bootstrap resampling.

- Key Concepts:

- Variability in parameter estimates.

- Interpretation of bootstrap confidence intervals.

- Methods:

- Implementation of the bootstrap method.

- Application to Michelson’s data for mean estimation.

- Findings:

- Bootstrap provides insight into the precision of parameter estimates.

- Confidence intervals help in understanding the range of plausible values.

Introduction to hypothesis testing

- Objective: Framework for making statistical decisions.

- Key Concepts:

- Null and alternative hypotheses.

- Test statistics and their role.

- P-values and significance levels.

- Methods:

- Explanation of hypothesis testing steps.

- Introduction to decision-making based on statistical tests.

- Findings:

- P-values quantify the evidence against the null hypothesis.

- Significance levels help determine the threshold for rejecting the null hypothesis.

Hypothesis test examples

- Objective: Practical application of hypothesis testing.

- Key Concepts:

- Type I and Type II errors.

- Interpretation of test results.

- Methods:

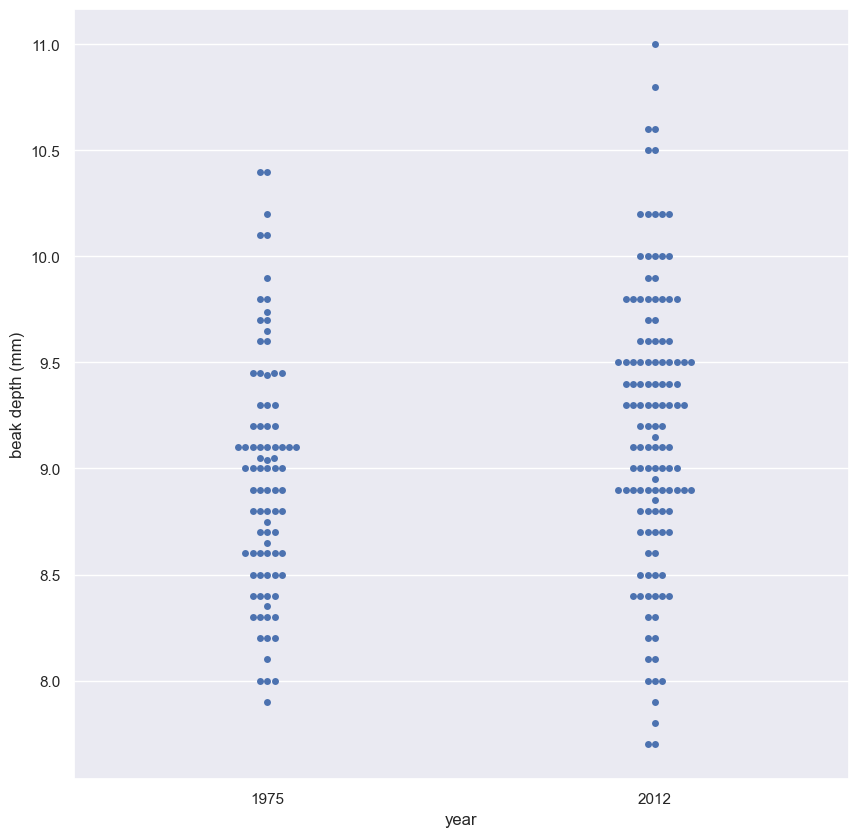

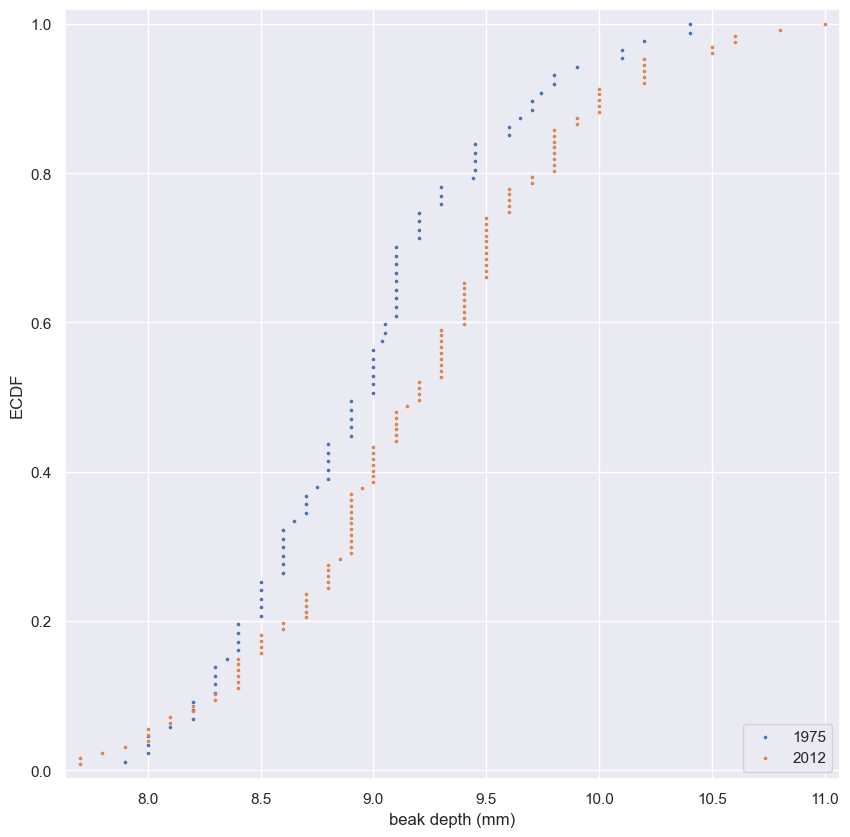

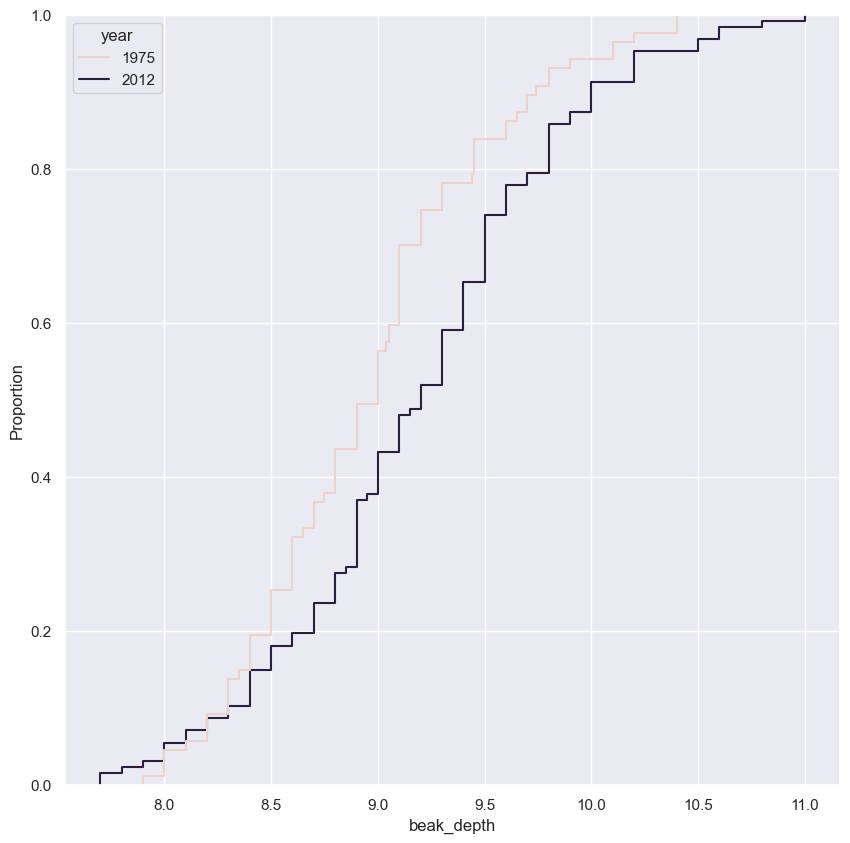

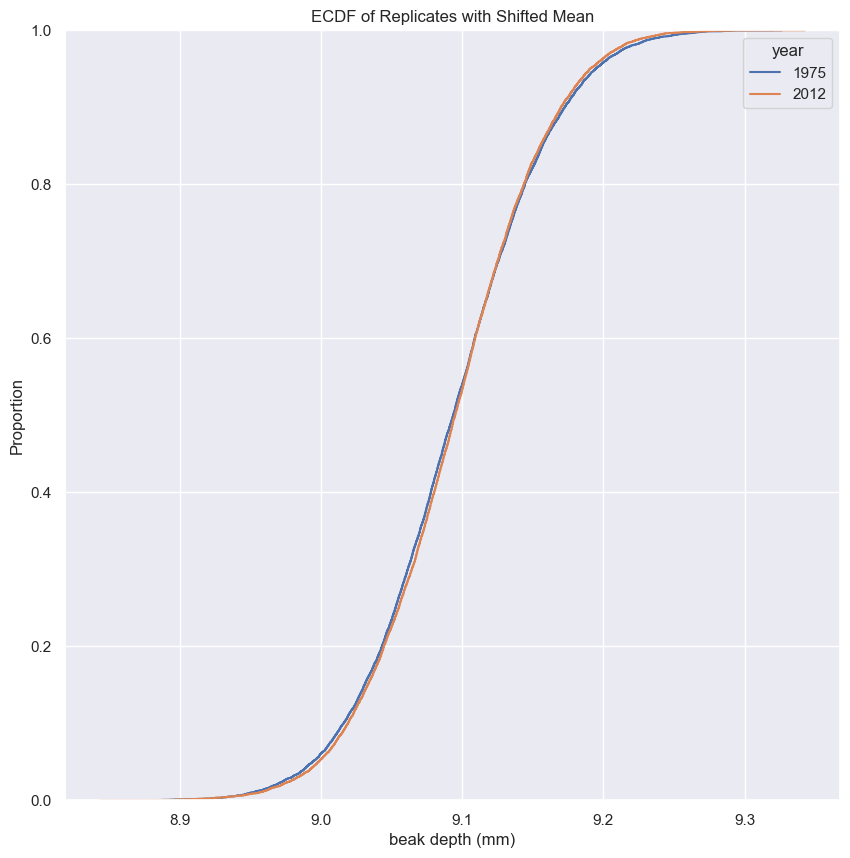

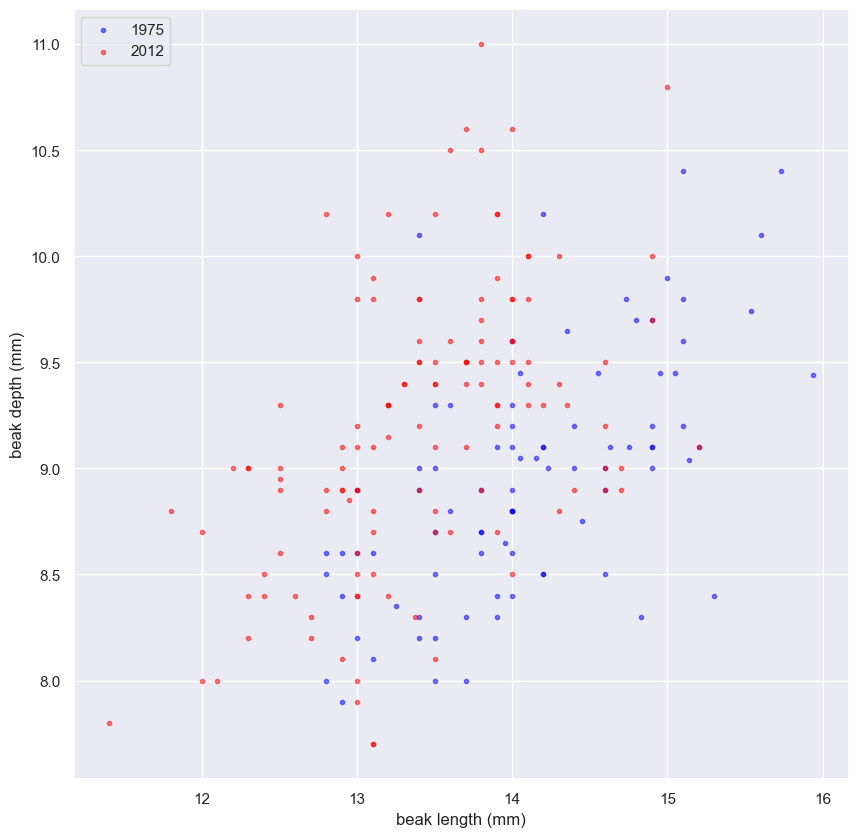

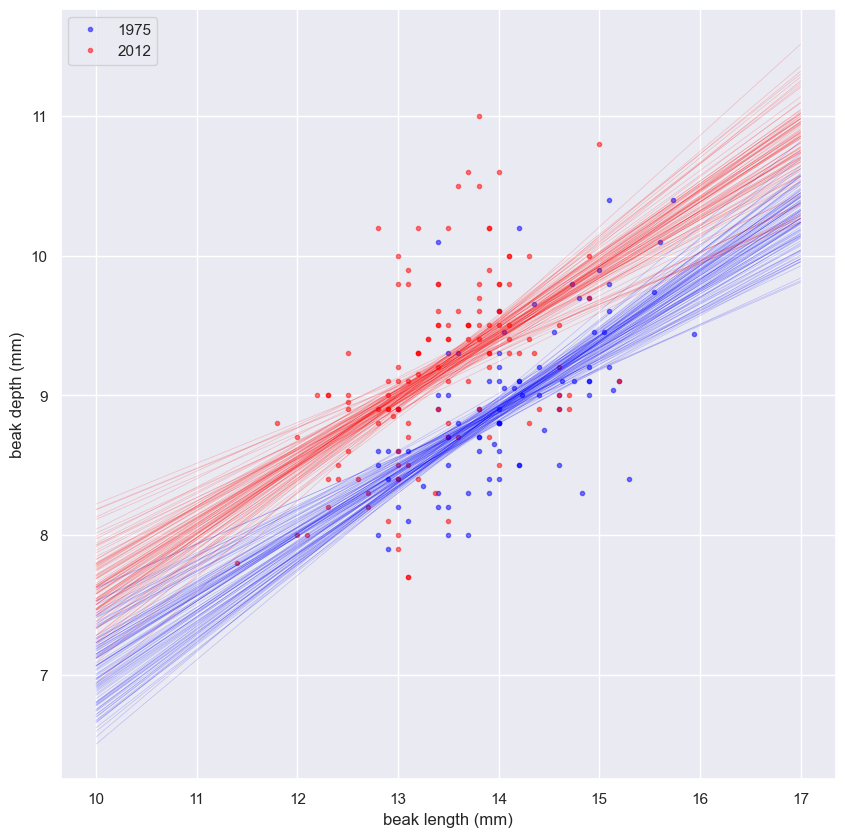

- Hypothesis tests on real datasets (e.g., finch beak data).

- Calculation and interpretation of p-values.

- Findings:

- Examples illustrate the application of hypothesis testing.

- Understanding errors in hypothesis testing helps in making informed decisions.

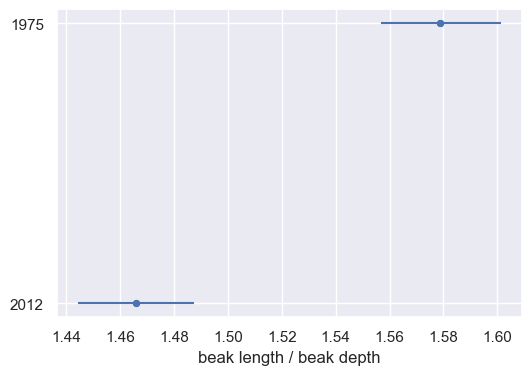

Case study

- Objective: Comprehensive analysis using learned methods.

- Key Concepts:

- Integration of parameter estimation, bootstrap, and hypothesis testing.

- Application to biological data (Darwin’s finches).

- Methods:

- Detailed analysis of finch beak measurements from different years.

- Use of statistical methods to interpret data.

- Findings:

- Combined methods provide a thorough understanding of data.

- Statistical analysis aids in drawing meaningful conclusions from biological studies.

Imports

1

2

3

4

5

6

7

8

9

import pandas as pd

import matplotlib.pyplot as plt

from matplotlib import collections as mc

import numpy as np

from scipy.stats import pearsonr

import seaborn as sns

from pathlib import Path

import requests

from datetime import datetime

1

import matplotlib as mpl

1

mpl.__version__

1

'3.8.4'

Pandas Configuration Options

1

2

3

pd.set_option('display.max_columns', 200)

pd.set_option('display.max_rows', 300)

pd.set_option('display.expand_frame_repr', True)

Matplotlib Configuration Options

1

2

3

4

plt.rcParams['figure.figsize'] = (10.0, 10.0)

# plt.style.use('seaborn-dark-palette')

plt.rcParams['axes.grid'] = True

plt.rcParams["patch.force_edgecolor"] = True

Functions

def create_dir_save_file

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

def create_dir_save_file(dir_path: Path, url: str):

"""

Check if the path exists and create it if it does not.

Check if the file exists and download it if it does not.

"""

if not dir_path.parents[0].exists():

dir_path.parents[0].mkdir(parents=True)

print(f'Directory Created: {dir_path.parents[0]}')

else:

print('Directory Exists')

if not dir_path.exists():

r = requests.get(url, allow_redirects=True)

open(dir_path, 'wb').write(r.content)

print(f'File Created: {dir_path.name}')

else:

print('File Exists')

1

2

data_dir = Path('data/2020-06-01_statistical_thinking_2')

images_dir = Path('Images/2020-06-01_statistical_thinking_2')

def ecdf

- empirical cumulative distribution function

- Computing the ECDF

1

2

3

4

5

6

7

8

9

10

11

12

def ecdf(data):

"""Compute ECDF for a one-dimensional array of measurements."""

# Number of data points: n

n = len(data)

# x-data for the ECDF: x

x = np.sort(data)

# y-data for the ECDF: y

y = np.arange(1, n+1) / n

return x, y

def pearson

- Pearson correlation coefficient

- Computing the Pearson correlation coefficient

1

2

3

4

5

6

7

def pearson_r(x, y):

"""Compute Pearson correlation coefficient between two arrays."""

# Compute correlation matrix: corr_mat

corr_mat = np.corrcoef(x, y)

# Return entry [0,1]

return corr_mat[0,1]

Datasets

1

2

3

4

5

6

7

8

9

10

11

12

13

14

anscombe = 'https://assets.datacamp.com/production/repositories/470/datasets/fe820c6cbe9bcf4060eeb9e31dd86aa04264153a/anscombe.csv'

bee_sperm = 'https://assets.datacamp.com/production/repositories/470/datasets/e427679d28d154934a6c087b2fa945bc7696db6d/bee_sperm.csv'

female_lit_fer = 'https://assets.datacamp.com/production/repositories/470/datasets/f1e7f8a98c18da5c60b625cb8af04c3217f4a5c3/female_literacy_fertility.csv'

finch_beaks_1975 = 'https://assets.datacamp.com/production/repositories/470/datasets/eb228490f7d823bfa6458b93db075ca5ccd3ec3d/finch_beaks_1975.csv'

finch_beaks_2012 = 'https://assets.datacamp.com/production/repositories/470/datasets/b28d5bf65e38460dca7b3c5c0e4d53bdfc1eb905/finch_beaks_2012.csv'

fortis_beak = 'https://assets.datacamp.com/production/repositories/470/datasets/532cb2fecd1bffb006c79a28f344af2290d643f3/fortis_beak_depth_heredity.csv'

frog_tongue = 'https://assets.datacamp.com/production/repositories/470/datasets/df6e0479c0f292ce9d2b951385f64df8e2a8e6ac/frog_tongue.csv'

basball = 'https://assets.datacamp.com/production/repositories/470/datasets/593c37a3588980e321b126e30873597620ca50b7/mlb_nohitters.csv'

scandens_beak = 'https://assets.datacamp.com/production/repositories/470/datasets/7ff772e1f4e99ed93685296063b6e604a334236d/scandens_beak_depth_heredity.csv'

sheffield_weather = 'https://assets.datacamp.com/production/repositories/470/datasets/129cba08c45749a82701fbe02180c5b69eb9adaf/sheffield_weather_station.csv'

nohitter_time = 'https://raw.githubusercontent.com/trenton3983/DataCamp/master/data/2020-06-01_statistical_thinking_2/nohitter_time.csv'

sol = 'https://assets.datacamp.com/production/repositories/469/datasets/df23780d215774ff90be0ea93e53f4fb5ebbade8/michelson_speed_of_light.csv'

votes = 'https://assets.datacamp.com/production/repositories/469/datasets/8fb59b9a99957c3b9b1c82b623aea54d8ccbcd9f/2008_all_states.csv'

swing = 'https://assets.datacamp.com/production/repositories/469/datasets/e079fddb581197780e1a7b7af2aeeff7242535f0/2008_swing_states.csv'

1

2

3

4

5

6

7

8

datasets = [anscombe, bee_sperm, female_lit_fer, finch_beaks_1975, finch_beaks_2012, fortis_beak, frog_tongue, basball, scandens_beak, sheffield_weather, nohitter_time, sol, votes, swing]

data_paths = list()

for data in datasets:

file_name = data.split('/')[-1].replace('?raw=true', '')

data_path = data_dir / file_name

create_dir_save_file(data_path, data)

data_paths.append(data_path)

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

Directory Exists

File Exists

Directory Exists

File Exists

Directory Exists

File Exists

Directory Exists

File Exists

Directory Exists

File Exists

Directory Exists

File Exists

Directory Exists

File Exists

Directory Exists

File Exists

Directory Exists

File Exists

Directory Exists

File Exists

Directory Exists

File Exists

Directory Exists

File Exists

Directory Exists

File Exists

Directory Exists

File Exists

DataFrames

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

df_bb = pd.read_csv(data_paths[7])

nohitter_times = pd.read_csv(data_paths[10])

sol = pd.read_csv(data_paths[11])

votes = pd.read_csv(data_paths[12])

swing = pd.read_csv(data_paths[13])

fem = pd.read_csv(data_paths[2])

anscombe = pd.read_csv(data_paths[0], header=[0, 1])

anscombe.columns = [f'{x[1]}_{x[0]}' for x in anscombe.columns] # reduce multi-level column name to single level

sheffield = pd.read_csv(data_paths[9], header=[8], sep='\\s+')

frogs = pd.read_csv(data_paths[6], header=[14])

frogs.date = pd.to_datetime(frogs.date, format='%Y_%m_%d')

baseball_df = pd.read_csv(data_paths[7])

baseball_df.date = pd.to_datetime(baseball_df.date, format='%Y%m%d')

baseball_df['days_since_last_nht'] = baseball_df.date.diff() # calculate days since last no-hitter

baseball_df['games_since_last_nht'] = baseball_df.game_number.diff().fillna(0) - 1 # calculate games since last no-hitter

bees = pd.read_csv(data_paths[1], header=[3])

finch_1975 = pd.read_csv(data_paths[3])

finch_1975['year'] = 1975

finch_1975.rename(columns={'Beak length, mm': 'beak_length', 'Beak depth, mm': 'beak_depth'}, inplace=True)

finch_2012 = pd.read_csv(data_paths[4])

finch_2012['year'] = 2012

finch_2012.rename(columns={'blength': 'beak_length', 'bdepth': 'beak_depth'}, inplace=True)

finch = pd.concat([finch_1975, finch_2012]).reset_index(drop=True)

Parameter estimation by optimization

When doing statistical inference, we speak the language of probability. A probability distribution that describes your data has parameters. So, a major goal of statistical inference is to estimate the values of these parameters, which allows us to concisely and unambiguously describe our data and draw conclusions from it. In this chapter, you will learn how to find the optimal parameters, those that best describe your data.

Optimal parameters

- Outcomes of measurements follow probability distributions defined by the story of how the data came to be.

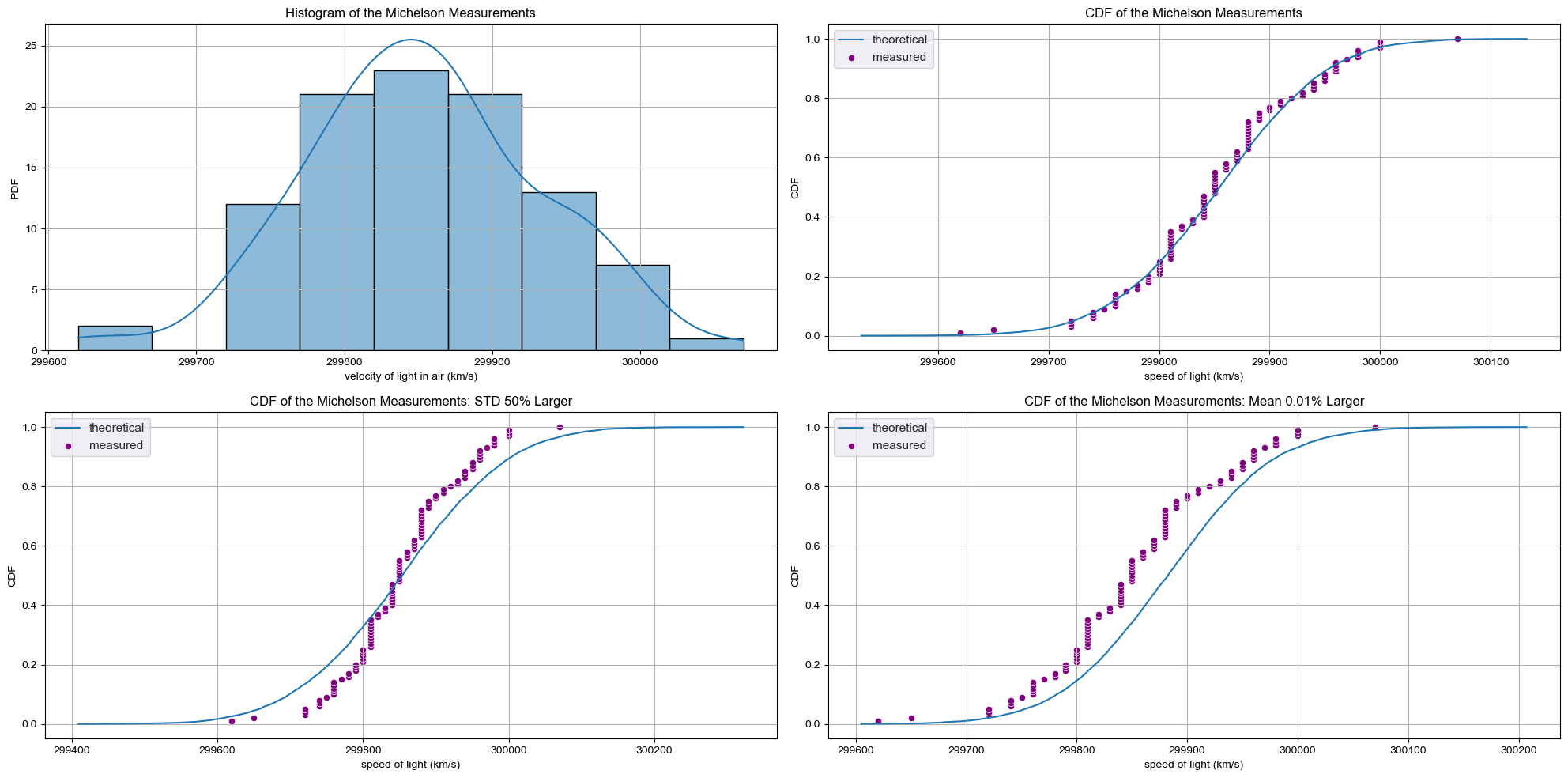

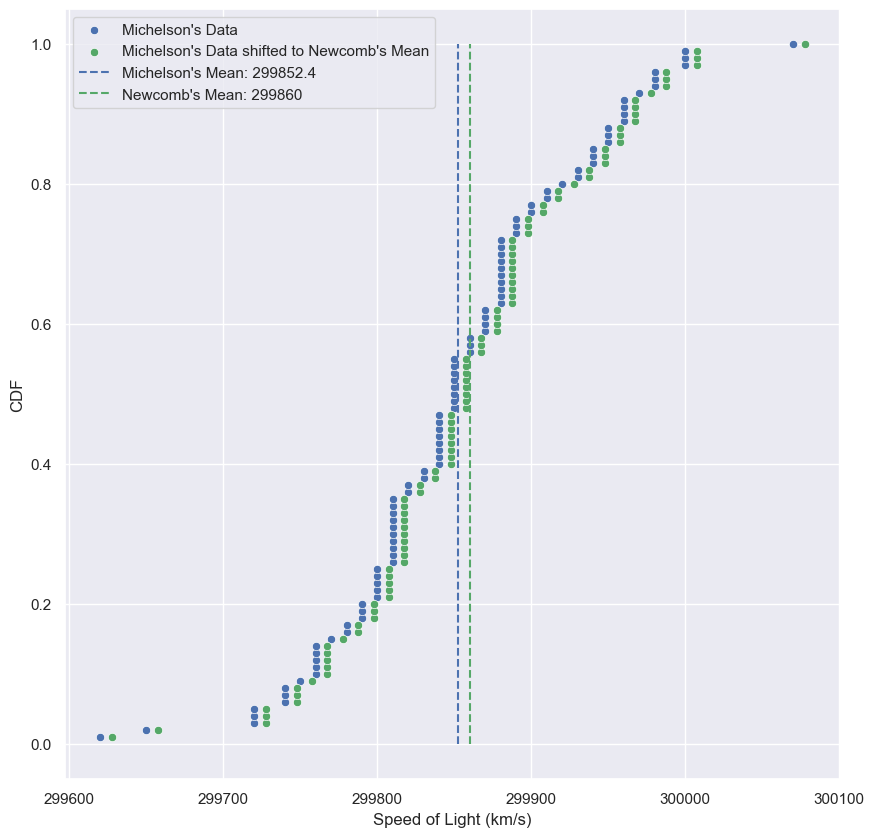

- When we looked at Michelson’s speed of light in air measurements, we assumed that the results were Normally distributed.

- We verified that both by looking at the PDF and the CDF, which was more effective becasue there is no binning bias.

Checking Normality of the Michelson data

- To compute the theoretical CDF by sampling, we pass two parameters to

np.random.normal - Calculate the mean and standard deviation (std) of the data

- The values chosen for these parameters are the mean and std of the data

CDF of the Michelson measurements

- The theoretical CDF overlays nicely with the empirical CDF.

- How did we know the mean and std calculated from the data were the appropriate values for the Normal parameters?

CDF with Bad Estimates

- We could have chosen others

- What if the std differs by 50%?

- The CDF no longer match.

- What if the main varies by just 0.01%?

Optimal Parameters

- If we believe the process that generates our data gives Normally distributed results, the set of parameters that brings the model, in this case, a Normal distribution, in closest agreement with the data, uses the mean and std computed directly from the data.

- Parameter values that bring the model in closest agreement with the data

- These are the optimal parameters.

- Remember though, the parameters are only optimal for the model chosen for the data.

Mass of MA Large mouth bass

- When your model is wrong, the optimal parameters are not really meaningful.

- Finding the optimal parameters is not always as easy as just computing the mean and std from the data.

- We’ll encounter this later in this chapter when we do linear regressions and we rely on built-in Numpy functions to find the optimal parameter for us.

Packages to do statistical inference

- There are great tolls in the Python ecosystem for doing statistical inference, including by optimization

- scipy.stats

- statsmodels

- In this course we focus on hacker statistics using Numpy.

- The same simple principle is applicable to a wide variety of statistical problems.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

f, axes = plt.subplots(2, 2, figsize=(20, 10), sharex=False)

# Plot 1

sns.histplot(sol['velocity of light in air (km/s)'], bins=9, kde=True, ax=axes[0, 0])

axes[0, 0].set_title('Histogram of the Michelson Measurements')

axes[0, 0].set_ylabel('PDF')

# Plot 2

mean = np.mean(sol['velocity of light in air (km/s)'])

std = np.std(sol['velocity of light in air (km/s)'])

samples = np.random.normal(mean, std, size=10000)

x, y = ecdf(sol['velocity of light in air (km/s)'])

x_theor, y_theor = ecdf(samples)

sns.set()

sns.lineplot(x=x_theor, y=y_theor, label='theoretical', ax=axes[0, 1])

sns.scatterplot(x=x, y=y, label='measured', color='purple', ax=axes[0, 1])

axes[0, 1].set_title('CDF of the Michelson Measurements')

axes[0, 1].set_xlabel('speed of light (km/s)')

axes[0, 1].set_ylabel('CDF')

# Plot 3

samples = np.random.normal(mean, std*1.5, size=10000)

x_theor3, y_theor3 = ecdf(samples)

sns.set()

sns.lineplot(x=x_theor3, y=y_theor3, label='theoretical', ax=axes[1, 0])

sns.scatterplot(x=x, y=y, label='measured', color='purple', ax=axes[1, 0])

axes[1, 0].set_title('CDF of the Michelson Measurements: STD 50% Larger')

axes[1, 0].set_xlabel('speed of light (km/s)')

axes[1, 0].set_ylabel('CDF')

ticks= axes[1, 0].get_xticks()

# Plot 4

samples = np.random.normal(mean*1.0001, std, size=10000)

x_theor4, y_theor4 = ecdf(samples)

sns.set()

sns.lineplot(x=x_theor4, y=y_theor4, label='theoretical', ax=axes[1, 1])

sns.scatterplot(x=x, y=y, label='measured', color='purple', ax=axes[1, 1])

axes[1, 1].set_title('CDF of the Michelson Measurements: Mean 0.01% Larger')

axes[1, 1].set_xlabel('speed of light (km/s)')

axes[1, 1].set_ylabel('CDF')

plt.tight_layout()

plt.show()

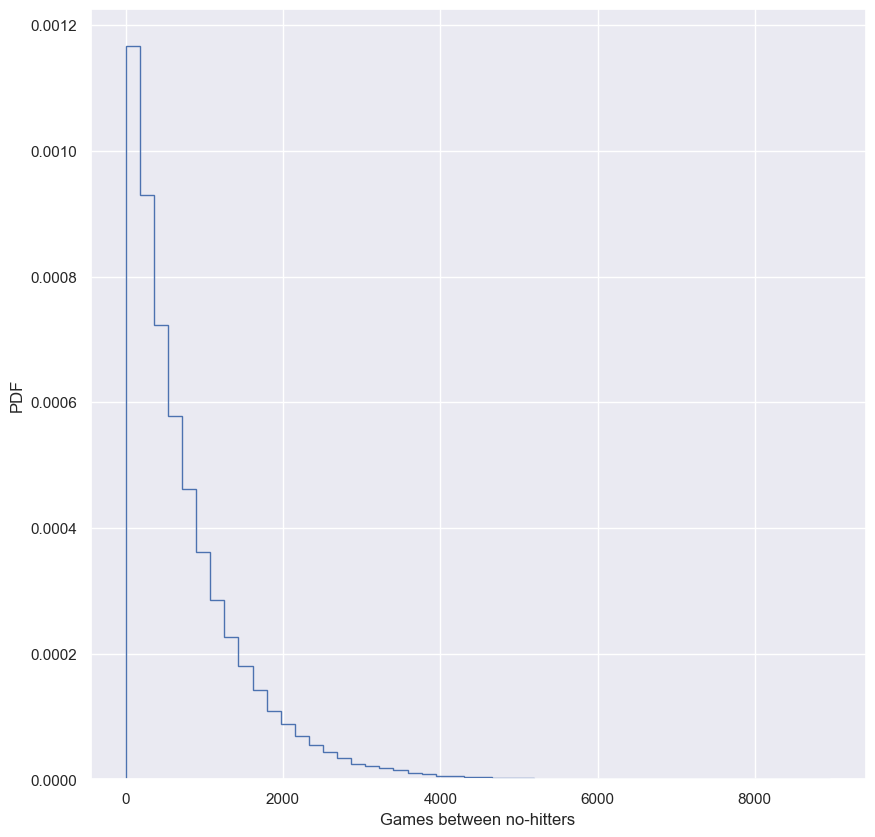

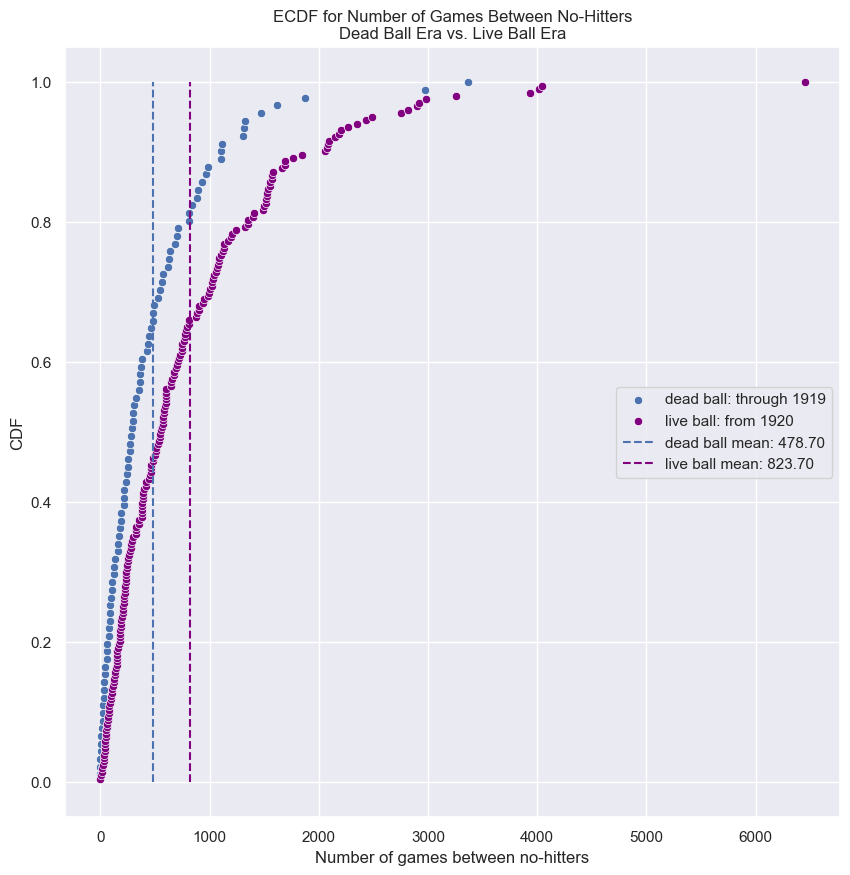

How often do we get no-hitters?

The number of games played between each no-hitter in the modern era (1901-2015) of Major League Baseball is stored in the array nohitter_times.

If you assume that no-hitters are described as a Poisson process, then the time between no-hitters is Exponentially distributed. As you have seen, the Exponential distribution has a single parameter, which we will call $\tau$, the typical interval time. The value of the parameter $\tau$ that makes the exponential distribution best match the data is the mean interval time (where time is in units of number of games) between no-hitters.

Compute the value of this parameter from the data. Then, use np.random.exponential() to “repeat” the history of Major League Baseball by drawing inter-no-hitter times from an exponential distribution with the $\tau$ you found and plot the histogram as an approximation to the PDF.

NumPy, pandas, matlotlib.pyplot, and seaborn have been imported for you as np, pd, plt, and sns, respectively.

Instructions

- Seed the random number generator with

42. - Compute the mean time (in units of number of games) between no-hitters.

- Draw 100,000 samples from an Exponential distribution with the parameter you computed from the mean of the inter-no-hitter times.

- Plot the theoretical PDF using

plt.hist(). Remember to use keyword argumentsbins=50,normed=True, andhisttype='step'. Be sure to label your axes. - Show your plot.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

# Seed random number generator

np.random.seed(42)

# Compute mean no-hitter time: tau

tau = np.mean(nohitter_times)

# Draw out of an exponential distribution with parameter tau: inter_nohitter_time

inter_nohitter_time = np.random.exponential(tau, 100000)

# Plot the PDF and label axes

_ = plt.hist(inter_nohitter_time, bins=50, density=True, histtype='step')

_ = plt.xlabel('Games between no-hitters')

_ = plt.ylabel('PDF')

# Show the plot

plt.show()

We see the typical shape of the Exponential distribution, going from a maximum at 0 and decaying to the right.

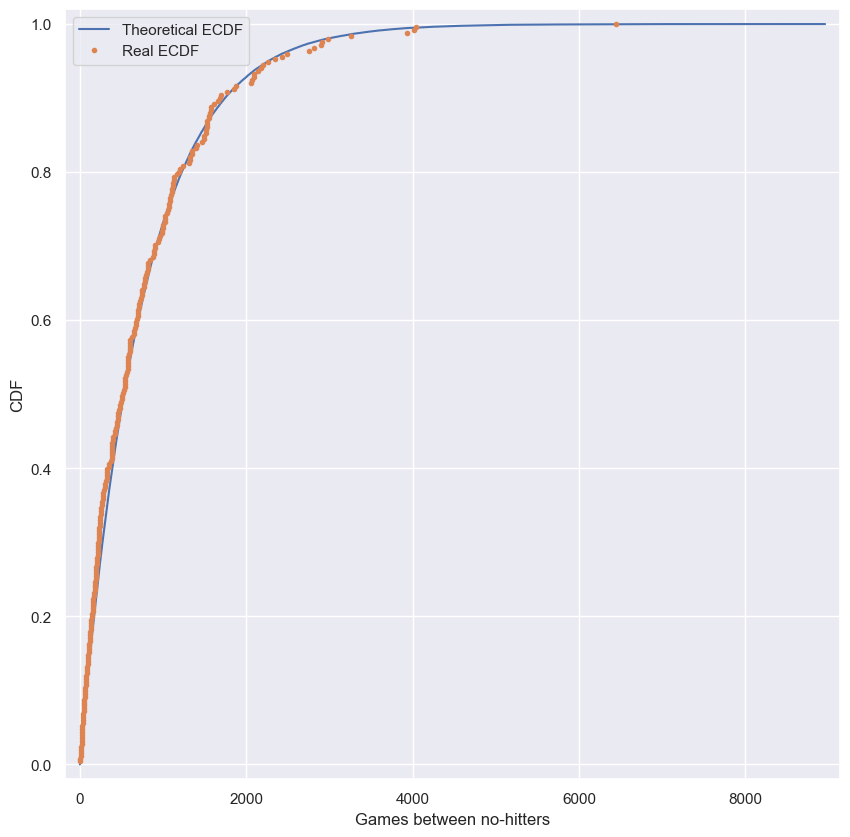

Do the data follow our story?

You have modeled no-hitters using an Exponential distribution. Create an ECDF of the real data. Overlay the theoretical CDF with the ECDF from the data. This helps you to verify that the Exponential distribution describes the observed data.

It may be helpful to remind yourself of the function you created in the previous course to compute the ECDF, as well as the code you wrote to plot it.

Instructions

- Compute an ECDF from the actual time between no-hitters (

nohitter_times). Use theecdf()function you wrote in the prequel course. - Create a CDF from the theoretical samples you took in the last exercise (

inter_nohitter_time). - Plot

x_theorandy_theoras a line usingplt.plot(). Then overlay the ECDF of the real dataxandyas points. To do this, you have to specify the keyword argumentsmarker = '.'andlinestyle = 'none'in addition toxandyinsideplt.plot(). - Set a 2% margin on the plot.

- Show the plot.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

# Create an ECDF from real data: x, y

x, y = ecdf(nohitter_times.nohitter_time)

# Create a CDF from theoretical samples: x_theor, y_theor

x_theor, y_theor = ecdf(inter_nohitter_time)

# Overlay the plots

plt.plot(x_theor, y_theor, label='Theoretical ECDF')

plt.plot(x, y, marker='.', linestyle='none', label='Real ECDF')

# Margins and axis labels

plt.margins(0.02)

plt.xlabel('Games between no-hitters')

plt.ylabel('CDF')

plt.legend()

# Show the plot

plt.show()

It looks like no-hitters in the modern era of Major League Baseball are Exponentially distributed. Based on the story of the Exponential distribution, this suggests that they are a random process; when a no-hitter will happen is independent of when the last no-hitter was.

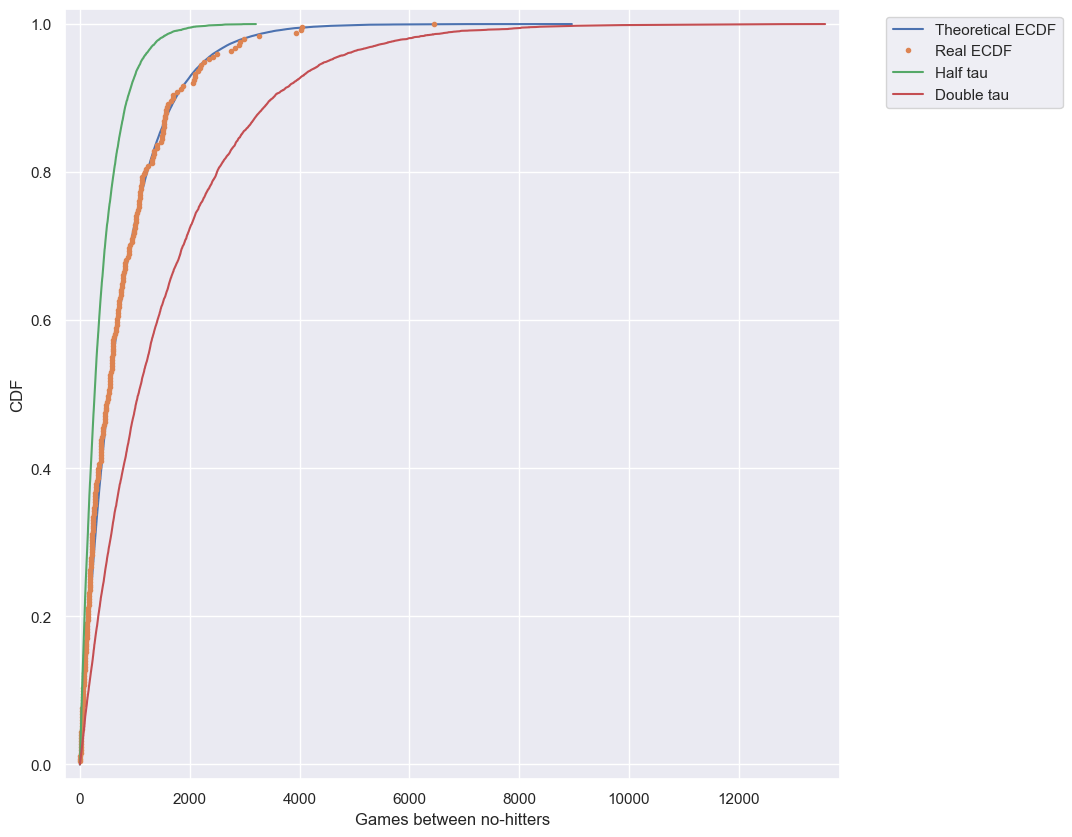

How is this parameter optimal?

Now sample out of an exponential distribution with $\tau$ being twice as large as the optimal $\tau$. Do it again for $\tau$ half as large. Make CDFs of these samples and overlay them with your data. You can see that they do not reproduce the data as well. Thus, the $\tau$ you computed from the mean inter-no-hitter times is optimal in that it best reproduces the data.

Note: In this and all subsequent exercises, the random number generator is pre-seeded for you to save you some typing.

Instructions

- Take

10000samples out of an Exponential distribution with parameter $\tau_{\frac{1}{2}} =$tau/2. - Take

10000samples out of an Exponential distribution with parameter $\tau_{2} =$2*tau. - Generate CDFs from these two sets of samples using your

ecdf()function. - Add these two CDFs as lines to your plot.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

# Plot the theoretical CDFs

plt.plot(x_theor, y_theor, label='Theoretical ECDF')

plt.plot(x, y, marker='.', linestyle='none', label='Real ECDF')

plt.margins(0.02)

plt.xlabel('Games between no-hitters')

plt.ylabel('CDF')

# Compute mean no-hitter time: tau

tau = np.mean(nohitter_times)

# Take samples with half tau: samples_half

samples_half = np.random.exponential(tau/2, 10000)

# Take samples with double tau: samples_double

samples_double = np.random.exponential(tau*2, 10000)

# Generate CDFs from these samples

x_half, y_half = ecdf(samples_half)

x_double, y_double = ecdf(samples_double)

# Plot these CDFs as lines

_ = plt.plot(x_half, y_half, label='Half tau')

_ = plt.plot(x_double, y_double, label='Double tau')

plt.legend(bbox_to_anchor=(1.05, 1), loc='upper left')

# Show the plot

plt.show()

Notice how the value of tau given by the mean matches the data best. In this way, tau is an optimal parameter.

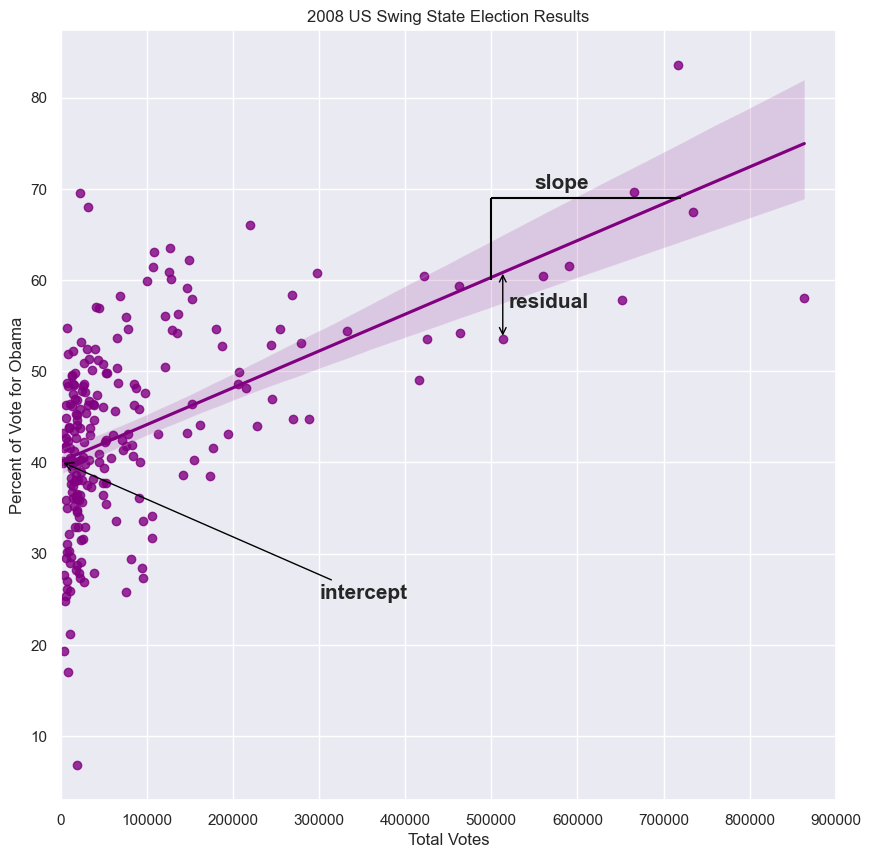

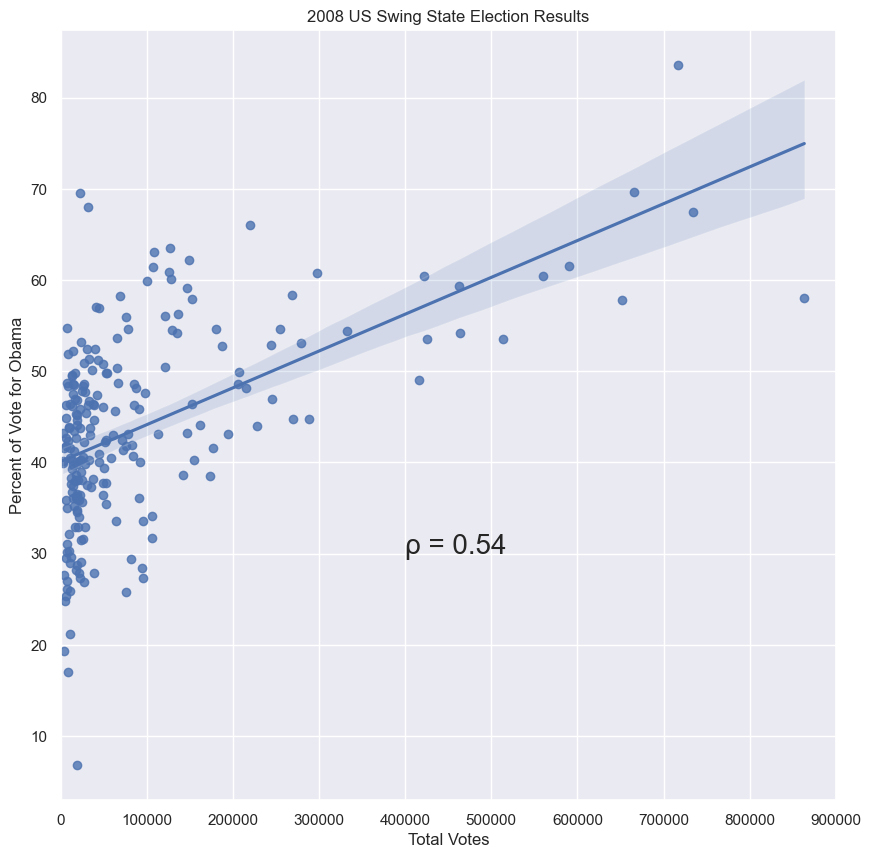

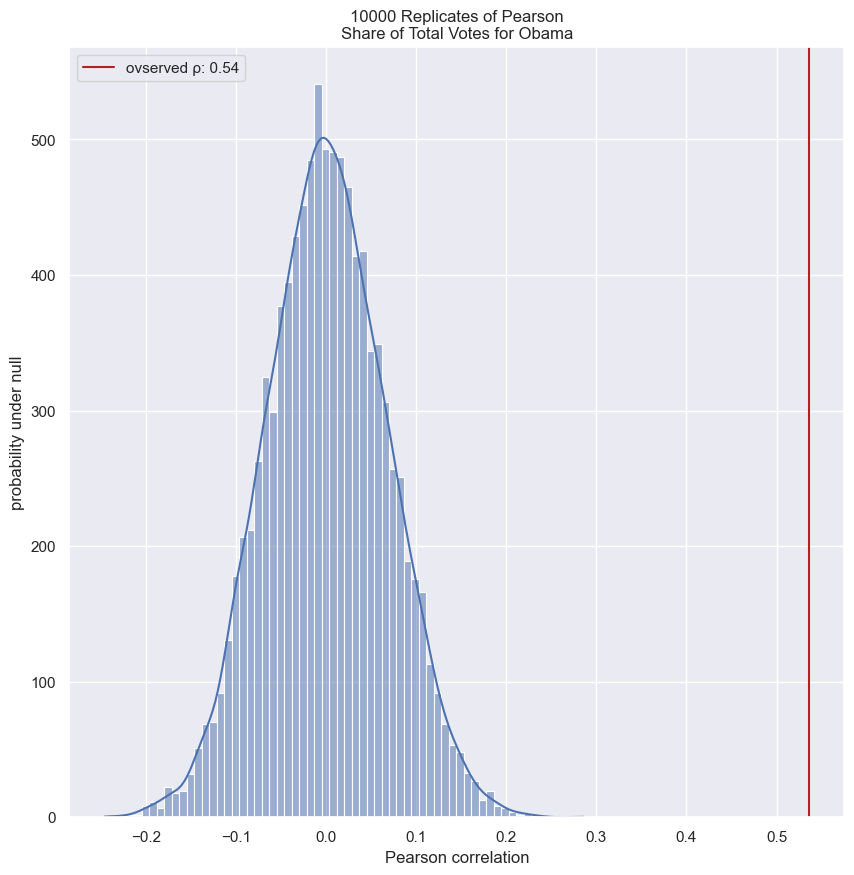

Linear regression by least squares

- Sometimes, two variables are related

- You may recall from the prequel course that we computed the Pearson correlation coefficient between Obama’s vote share in each county in swing states and the total vote count of the respective counties.

- The Pearson correlation coefficient is important to compute, be we might like to get a fuller understanding of how the data are related to each other.

- Specifically, we might suspect some underlying function give the data its shape.

- Often times, a linear function is appropriate to describe the data, and this is what we focus on in the course.

- The parameters of the function are slope and intercept.

- The slope sets how steep the line is, and the intercept sets where the line crosses the y-axis.

- How do we figure out which slope and intercept best describe the data?

- We want to choose the slope and intercept such that the data points collectively lie as close as possible to the line.

- This is easiest to think about by first considering one data point.

- The vertical distance between the data point and the line is called the residual

- In this case, the residual has a negative value because the data point lies below the line.

- Each data point has an associated residual.

Least squares

- The process of finding the parameters for which the sum of the squares of the residual is minimal, is called lease squares

- We define the line that is closest to the data to be the line for which the sum of the squares of all the residuals is minimal.

- There are many algorithms to do this in practice.

Lease squares with np.polyfit()

- We will use the np.polyfit(), which performs least squares analysis with polynomial functions.

- We can use it because a linear function is a first degree polynomial.

- The first two arguments to this function are the x and y data.

- The thirst argument is the degree of the polynomial you wish to fit; for linear functions, use 1.

- The function returns the slope and intercept of the best fit line.

- The slope tells us we get about 4 more percent votes for Obama for every 100,000 additional voters in a county.

1

2

3

4

5

slope, intercept = np.polyfit(swing.total_votes, swing.dem_share, 1)

print(slope)

>>> 4.0370717009465555e-05

print(intercept)

>>> 40.113911968641744

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

g = sns.regplot(x='total_votes', y='dem_share', data=swing, color='purple')

plt.xlim(0, 900000)

plt.ylabel('Percent of Vote for Obama')

plt.xlabel('Total Votes')

plt.title('2008 US Swing State Election Results')

plt.annotate('intercept', xy=(0, 40.1), weight='bold', xytext=(300000, 25),

fontsize=15, arrowprops=dict(arrowstyle="->", color='black'))

plt.text(550000, 70, 'slope', fontsize=15, weight='bold')

lc = mc.LineCollection([[(500000, 69), (720000, 69)], [(500000, 69), (500000, 60)]], color='black')

g.add_collection(lc)

plt.text(520000, 57, 'residual', fontsize=15, weight='bold')

plt.annotate('', xy=(513312, 53.59), xytext=(513312, 61), arrowprops=dict(arrowstyle="<->", color='black'))

plt.show()

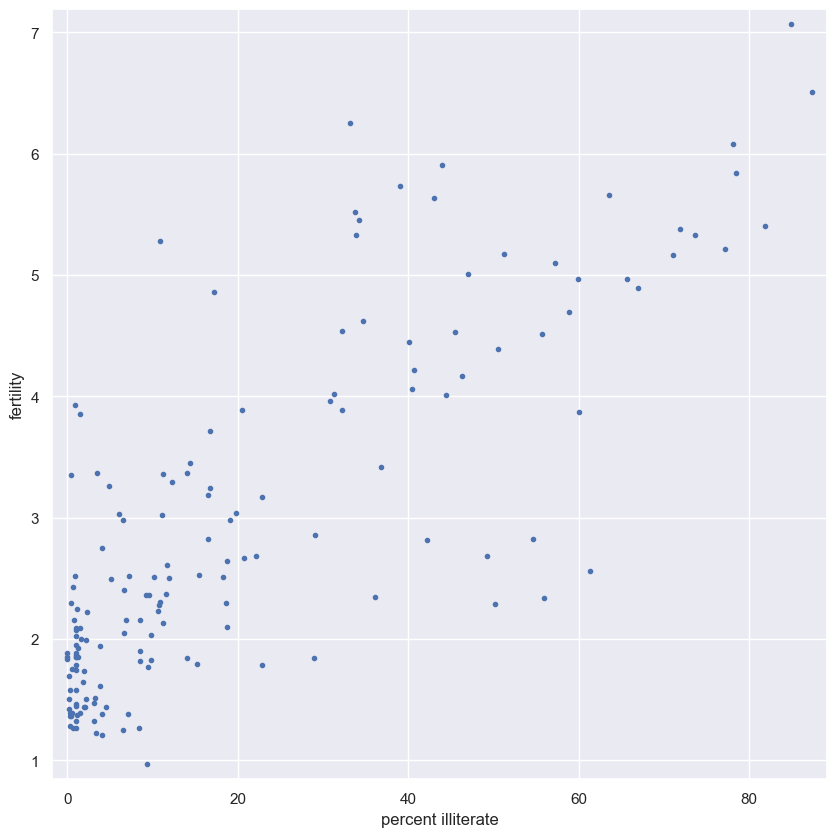

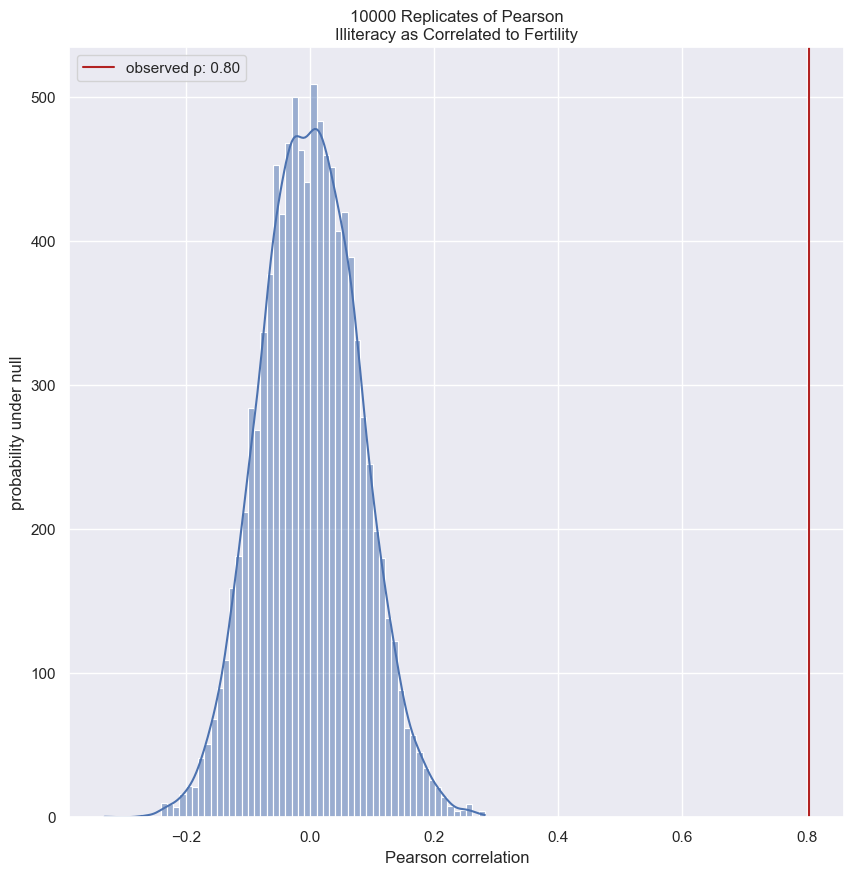

EDA of literacy/fertility data

In the next few exercises, we will look at the correlation between female literacy and fertility (defined as the average number of children born per woman) throughout the world. For ease of analysis and interpretation, we will work with the illiteracy rate.

It is always a good idea to do some EDA ahead of our analysis. To this end, plot the fertility versus illiteracy and compute the Pearson correlation coefficient. The Numpy array illiteracy has the illiteracy rate among females for most of the world’s nations. The array fertility has the corresponding fertility data.

Here, it may be useful to refer back to the function you wrote in the previous course to compute the Pearson correlation coefficient.

Instructions

- Plot

fertility(y-axis) versusilliteracy(x-axis) as a scatter plot. - Set a 2% margin.

- Compute and print the Pearson correlation coefficient between

illiteracyandfertility.

1

fem.head()

| Country | Continent | female literacy | fertility | population | |

|---|---|---|---|---|---|

| 0 | Chine | ASI | 90.5 | 1.769 | 1,324,655,000 |

| 1 | Inde | ASI | 50.8 | 2.682 | 1,139,964,932 |

| 2 | USA | NAM | 99.0 | 2.077 | 304,060,000 |

| 3 | Indonésie | ASI | 88.8 | 2.132 | 227,345,082 |

| 4 | Brésil | LAT | 90.2 | 1.827 | 191,971,506 |

1

2

illiteracy = 100 - fem['female literacy']

fertility = fem['fertility']

1

2

3

4

5

6

7

8

9

10

11

12

13

14

# Plot the illiteracy rate versus fertility

plt.plot(illiteracy, fertility, marker='.', linestyle='none')

# Set the margins and label axes

plt.margins(0.02)

plt.xlabel('percent illiterate')

plt.ylabel('fertility')

# Show the plot

plt.show()

# Show the Pearson correlation coefficient

print(pearson_r(illiteracy, fertility))

print(pearsonr(illiteracy, fertility))

1

2

0.8041324026815344

PearsonRResult(statistic=0.8041324026815345, pvalue=5.635092985976876e-38)

You can see the correlation between illiteracy and fertility by eye, and by the substantial Pearson correlation coefficient of 0.8. It is difficult to resolve in the scatter plot, but there are many points around near-zero illiteracy and about 1.8 children/woman.

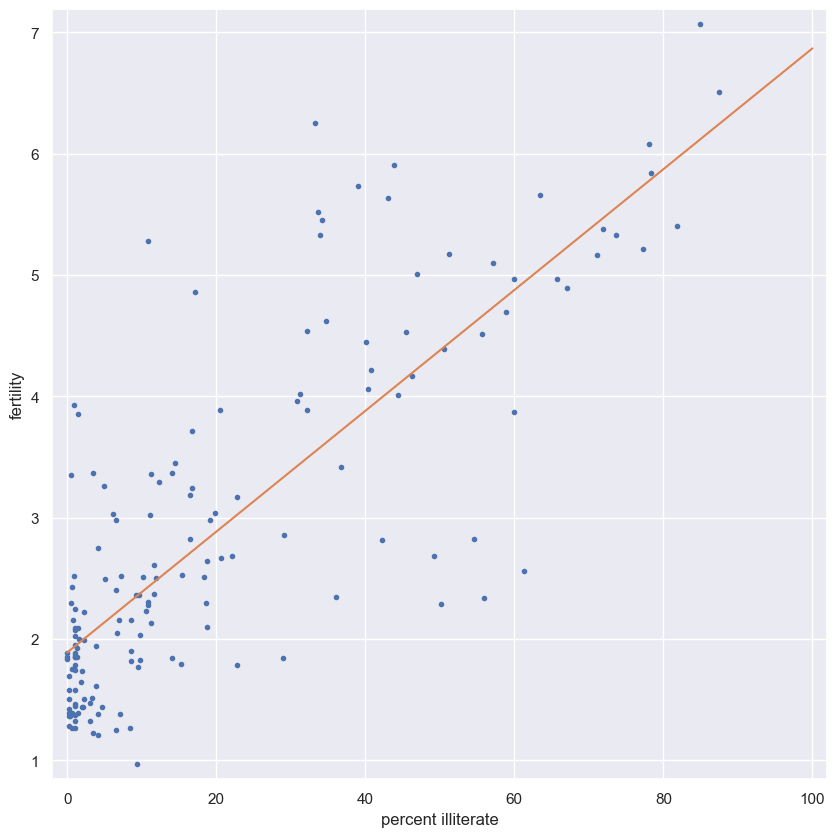

Linear regression

We will assume that fertility is a linear function of the female illiteracy rate. That is, $f=ai+b$, where a is the slope and b is the intercept. We can think of the intercept as the minimal fertility rate, probably somewhere between one and two. The slope tells us how the fertility rate varies with illiteracy. We can find the best fit line using np.polyfit().

Plot the data and the best fit line. Print out the slope and intercept. (Think: what are their units?)

Instructions

- Compute the slope and intercept of the regression line using

np.polyfit(). Remember,fertilityis on the y-axis andilliteracyon the x-axis. - Print out the slope and intercept from the linear regression.

- To plot the best fit line, create an array

xthat consists of 0 and 100 usingnp.array(). Then, compute the theoretical values ofybased on your regression parameters. I.e.,y = a * x + b. - Plot the data and the regression line on the same plot. Be sure to label your axes.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

# Plot the illiteracy rate versus fertility

plt.plot(illiteracy, fertility, marker='.', linestyle='none')

plt.margins(0.02)

plt.xlabel('percent illiterate')

plt.ylabel('fertility')

# Perform a linear regression using np.polyfit(): a, b

a, b = np.polyfit(illiteracy, fertility, 1)

# Print the results to the screen

print('slope =', a, 'children per woman / percent illiterate')

print('intercept =', b, 'children per woman')

# Make theoretical line to plot

x = np.array([0, 100])

y = a * x + b

# Add regression line to your plot

plt.plot(x, y)

# Draw the plot

plt.show()

1

2

slope = 0.04979854809063423 children per woman / percent illiterate

intercept = 1.888050610636557 children per woman

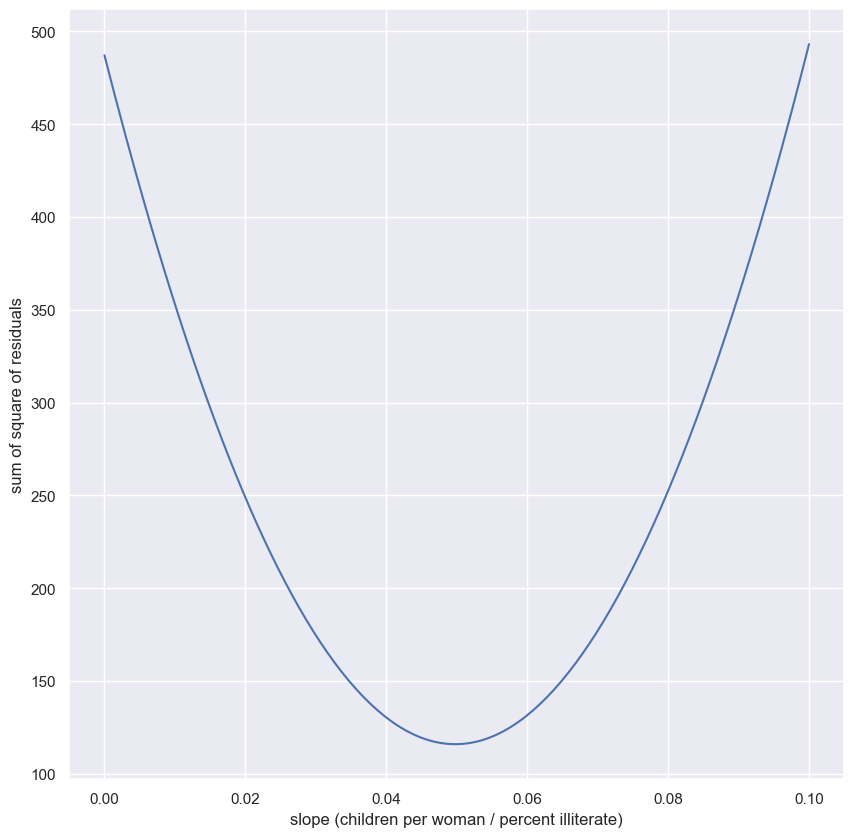

How is it optimal?

The function np.polyfit() that you used to get your regression parameters finds the optimal slope and intercept. It is optimizing the sum of the squares of the residuals, also known as RSS (for residual sum of squares). In this exercise, you will plot the function that is being optimized, the RSS, versus the slope parameter a. To do this, fix the intercept to be what you found in the optimization. Then, plot the RSS vs. the slope. Where is it minimal?

Instructions

- Specify the values of the slope to compute the RSS. Use

np.linspace()to get200points in the range between0and0.1. For example, to get100points in the range between0and0.5, you could usenp.linspace()like so:np.linspace(0, 0.5, 100). - Initialize an array,

rss, to contain the RSS usingnp.empty_like()and the array you created above. Theempty_like()function returns a new array with the same shape and type as a given array (in this case,a_vals). - Write a

forloop to compute the sum of RSS of the slope. Hint: the RSS is given bynp.sum((y_data - a * x_data - b)**2). The variablebyou computed in the last exercise is already in your namespace. Here,fertilityis they_dataandilliteracythex_data. - Plot the RSS (

rss) versus slope (a_vals).

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

# Specify slopes to consider: a_vals

a_vals = np.linspace(0, 0.1, 200)

# Initialize sum of square of residuals: rss

rss = np.empty_like(a_vals)

# Compute sum of square of residuals for each value of a_vals

for i, a in enumerate(a_vals):

rss[i] = np.sum((fertility - a*illiteracy - b)**2)

# Plot the RSS

plt.plot(a_vals, rss, '-')

plt.xlabel('slope (children per woman / percent illiterate)')

plt.ylabel('sum of square of residuals')

plt.show()

Notice that the minimum on the plot, that is the value of the slope that gives the minimum sum of the square of the residuals, is the same value you got when performing the regression.

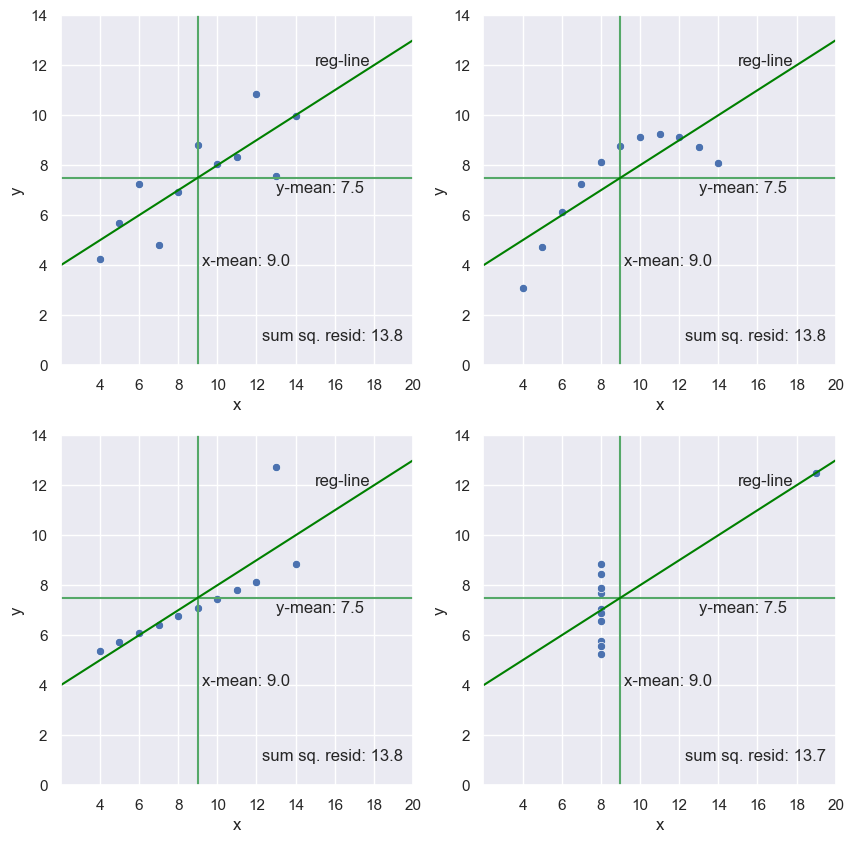

The importance of EDA: Anscombe’s quartet

- In 1973, statistician Francis Anscombe published a paper that contained for fictitious x-y data sets, plotted below.

- He uses these data sets to make an important point.

- The point becomes clear if we blindly go about doing parameter estimation on these data sets.

- Lets look at the mean x & y values of the four data sets.

- They’re all the same

- What if we do a linear regression on each of the data sets?

- They’re the same

- Some of the fits are less optimal than others

- Let’s look at the sum of the squares of the residuals.

- They’re basically all the same

- The data sets were constructed so this would happen.

- He was making a very important point

- There are already some powerful tolls for statistical inference.

- You can compute summary statistics and optimal parameters, including linear regression parameters, and by the end of the course, you’ll be able to construct confidence intervals which quantify uncertainty about the parameter estimates.

- These are crucial skills for any data analyst, no doubt

Look before you leap!

- This is a powerful reminder to do some graphical exploratory data analysis before you start computing and making judgments about your data.

Anscombe’s quartet

- For example, plot[0, 0] (

x_0, y_0) might be well modeled with a line, and the regression parameters will be meaningful. - The same is true of plot[1, 0] (

x_2, y_2), but the outlier throws off the slope and intercept - After doing EDA, you should look into what is causing that outlier

- Plot[1, 1] (

x_3, y_3) might also have a linear relationship between x, and y, but from the plot, you can conclude that you should try to acquire more data for intermediate x values to make sure that it does. - Plot[0, 1] (

x_1, y_1) is definitely not linear, and you need to choose another model. - Explore your data first!

1

anscombe

| x_0 | y_0 | x_1 | y_1 | x_2 | y_2 | x_3 | y_3 | |

|---|---|---|---|---|---|---|---|---|

| 0 | 10.0 | 8.04 | 10.0 | 9.14 | 10.0 | 7.46 | 8.0 | 6.58 |

| 1 | 8.0 | 6.95 | 8.0 | 8.14 | 8.0 | 6.77 | 8.0 | 5.76 |

| 2 | 13.0 | 7.58 | 13.0 | 8.74 | 13.0 | 12.74 | 8.0 | 7.71 |

| 3 | 9.0 | 8.81 | 9.0 | 8.77 | 9.0 | 7.11 | 8.0 | 8.84 |

| 4 | 11.0 | 8.33 | 11.0 | 9.26 | 11.0 | 7.81 | 8.0 | 8.47 |

| 5 | 14.0 | 9.96 | 14.0 | 8.10 | 14.0 | 8.84 | 8.0 | 7.04 |

| 6 | 6.0 | 7.24 | 6.0 | 6.13 | 6.0 | 6.08 | 8.0 | 5.25 |

| 7 | 4.0 | 4.26 | 4.0 | 3.10 | 4.0 | 5.39 | 19.0 | 12.50 |

| 8 | 12.0 | 10.84 | 12.0 | 9.13 | 12.0 | 8.15 | 8.0 | 5.56 |

| 9 | 7.0 | 4.82 | 7.0 | 7.26 | 7.0 | 6.42 | 8.0 | 7.91 |

| 10 | 5.0 | 5.68 | 5.0 | 4.74 | 5.0 | 5.73 | 8.0 | 6.89 |

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

for i in range(1, 5):

plt.subplot(2, 2, i)

g = sns.scatterplot(x=f'x_{i-1}', y=f'y_{i-1}', data=anscombe)

# x & y mean

x_mean = anscombe.loc[:, f'x_{i-1}'].mean()

y_mean = anscombe.loc[:, f'y_{i-1}'].mean()

plt.vlines(anscombe.loc[:, f'x_{i-1}'].mean(), 0, 14, color='g')

plt.text(9.2, 4, f'x-mean: {x_mean}')

plt.hlines(anscombe.loc[:, f'y_{i-1}'].mean(), 2, 20, color='g')

plt.text(13, 6.9, f'y-mean: {y_mean:0.1f}')

# regression line

slope, intercept = np.polyfit(anscombe[f'x_{i-1}'], anscombe[f'y_{i-1}'], 1)

y1 = slope*2 + intercept

y2 = slope*20 + intercept

lc = mc.LineCollection([[(2, y1), (20, y2)]], color='green')

g.add_collection(lc)

plt.text(15, 12, 'reg-line')

# sum of the squares of the residuals

A = np.vstack((anscombe.loc[:, f'x_{i-1}'], np.ones(11))).T

resid = np.linalg.lstsq(A, anscombe.loc[:, f'y_{i-1}'], rcond=-1)[1][0]

plt.text(12.3, 1, f'sum sq. resid: {resid:0.1f}')

plt.ylim(0, 14)

plt.xlim(2, 20)

plt.xticks(list(range(4, 22, 2)))

plt.ylabel('y')

plt.xlabel('x')

The importance of EDA

Why should exploratory data analysis be the first step in an analysis of data (after getting your data imported and cleaned, of course)?

Answer the question

- You can be protected from misinterpretation of the type demonstrated by Anscombe’s quartet.

- EDA provides a good starting point for planning the rest of your analysis.

- EDA is not really any more difficult than any of the subsequent analysis, so there is no excuse for not exploring the data.

- All of these reasons!

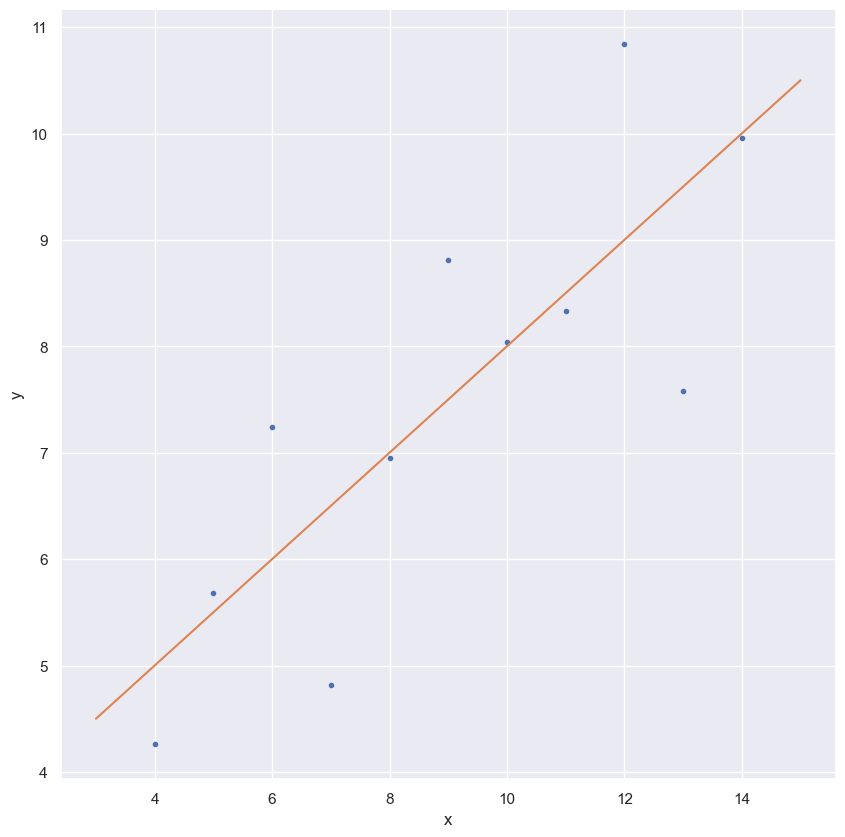

Linear regression on appropriate Anscombe data

For practice, perform a linear regression on the data set from Anscombe’s quartet that is most reasonably interpreted with linear regression.

Instructions

- Compute the parameters for the slope and intercept using

np.polyfit(). The Anscombe data are stored in the arraysxandy. - Print the slope

aand interceptb. - Generate theoretical $x$ and $y$ data from the linear regression. Your $x$ array, which you can create with

np.array(), should consist of3and15. To generate the $y$ data, multiply the slope byx_theorand add the intercept. - Plot the Anscombe data as a scatter plot and then plot the theoretical line. Remember to include the

marker='.'andlinestyle='none'keyword arguments in addition toxandywhen you plot the Anscombe data as a scatter plot. You do not need these arguments when plotting the theoretical line.

1

2

x = anscombe.x_0

y = anscombe.y_0

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

# Perform linear regression: a, b

a, b = np.polyfit(x, y, 1)

# Print the slope and intercept

print(a, b)

# Generate theoretical x and y data: x_theor, y_theor

x_theor = np.array([3, 15])

y_theor = a * x_theor + b

# Plot the Anscombe data and theoretical line

_ = plt.plot(x, y, marker='.', linestyle='none')

_ = plt.plot(x_theor, y_theor)

# Label the axes

plt.xlabel('x')

plt.ylabel('y')

# Show the plot

plt.show()

1

0.5000909090909095 3.0000909090909076

Linear regression on all Anscombe data

Now, to verify that all four of the Anscombe data sets have the same slope and intercept from a linear regression, you will compute the slope and intercept for each set. The data are stored in lists; anscombe_x = [x1, x2, x3, x4] and anscombe_y = [y1, y2, y3, y4], where, for example, x2 and y2 are the $x$ and $y$ values for the second Anscombe data set.

Instructions

- Write a

forloop to do the following for each Anscombe data set. - Compute the slope and intercept.

- Print the slope and intercept.

1

2

3

4

5

6

7

8

9

# Iterate through x,y pairs

for i in range(0, 4):

# Compute the slope and intercept: a, b

x = anscombe.loc[:, f'x_{i}']

y = anscombe.loc[:, f'y_{i}']

a, b = np.polyfit(x, y, 1)

# Print the result

print(f'x_{i} & y_{i} slope: {a:0.4f} intercept: {b:0.4f}')

1

2

3

4

x_0 & y_0 slope: 0.5001 intercept: 3.0001

x_1 & y_1 slope: 0.5000 intercept: 3.0009

x_2 & y_2 slope: 0.4997 intercept: 3.0025

x_3 & y_3 slope: 0.4999 intercept: 3.0017

Bootstrap confidence intervals

To “pull yourself up by your bootstraps” is a classic idiom meaning that you achieve a difficult task by yourself with no help at all. In statistical inference, you want to know what would happen if you could repeat your data acquisition an infinite number of times. This task is impossible, but can we use only the data we actually have to get close to the same result as an infinitude of experiments? The answer is yes! The technique to do it is aptly called bootstrapping. This chapter will introduce you to this extraordinarily powerful tool.

Generating bootstrap replicates

- In the prequel to this course we computed summary statistics of measurements, including the mean, median and standard deviation (std).

- Remember, we need to think probabilistically.

- What if we acquire the data again?

- Would we get the same mean? Probably not.

- In inference problems, it is rare that we are interested in the result from a single experiment or data acquisition.

- We want to say something more general.

- Michaelson was not interested in what the measured speed of light was in the specific 100 measurements conducted in the summer of 1879.

- He wanted to know what the speed of light actually is.

- Statistically speaking, that means he wanted to know what speed of light he would observe if he did the experiment over and over again an infinite number of times.

- Unfortunately, actually repeating the experiment lots and lots of times is just not possible.

- But, as hackers, we can simulate getting the data again.

- The idea is that we resample the data we have and recompute the summary statistic of interest, say the mean.

- To resample an array of measurements, we randomly select one entry and store it,

- Importantly, we replace the entry in the original array, or equivalently, we just don’t delete it.

- This is call sampling with replacement.

- The, we randomly select another one and store it.

- We do this n times, where n is the total number of measurements, five in this case.

- We then have a resampled array of data.

- Data:

[23.3, 27.1, 24.3, 25.7, 26.0] - Mean = 25.2

- Resampled Data:

[27.1, 26.0, 23.3, 25.7, 23.3] - Mean = 25.08

- Using this new resampled array, we compute the summary statistic and store the result.

- Resampling the speed of light data is as if we repeated Michelson’s set of measurements.

- We do this over and over again to get a large number of summary statistics from the resampled data sets.

- We can use these results to plot an ECDF, for example, to get a picture of the probability distribution describing the summary statistic.

- This process is an example of bootstrapping, which more generally is the use of resampled data to perform statistical inference.

- To make sure we have our terminology down, each resampled array is called a bootstrap sample

- A bootstrap replicate is the value of the summary statistic computed from the bootstrap sample.

- The name makes sense; it’s a simulated replica of the original data acquired by bootstrapping.

- Let’s look at how we can generate a bootstrap sample and compute a bootstrap replicate from it using Python.

- We’ll use Michelson’s measurements of the speed of light.

Resampling

- We’ll use

np.random.choiceto perform the resampling. - There is a

sizeargument so we can specify the sample size - The function does not delete an entry we it samples out of the array.

- With this, we can draw 100 samples out of the Michelson speed of light data.

- This is a bootstrap sample, since there we 100 data points and we are choosing 100 of them with replacement.

- Now that we have a bootstrap sample, we can compute a bootstrap replicate.

- We can pick whatever summary statistic we like.

- We’ll compute the mean, median and std.

- It’s as simple as treating the bootstrap sample as though it were a dataset.

1

np.random.choice(list(range(1, 6)), size=5)

1

array([4, 5, 4, 1, 3])

1

2

3

4

vel = sol['velocity of light in air (km/s)']

print(f'Mean: {vel.mean()}')

print(f'Median: {vel.median()}')

print(f'STD: {vel.std()}')

1

2

3

Mean: 299852.4

Median: 299850.0

STD: 79.01054781905178

1

2

3

4

bs_sample = np.random.choice(vel, size=100)

print(f'Mean: {bs_sample.mean()}')

print(f'Median: {np.median(bs_sample)}')

print(f'STD: {np.std(bs_sample)}')

1

2

3

Mean: 299855.0

Median: 299860.0

STD: 74.42445834535849

Getting the terminology down

Getting tripped up over terminology is a common cause of frustration in students. Unfortunately, you often will read and hear other data scientists using different terminology for bootstrap samples and replicates. This is even more reason why we need everything to be clear and consistent for this course. So, before going forward discussing bootstrapping, let’s get our terminology down. If we have a data set with $n$ repeated measurements, a bootstrap sample is an array of length $n$ that was drawn from the original data with replacement. What is a bootstrap replicate?

Answer the question

Just another name for a bootstrap sample.- A single value of a statistic computed from a bootstrap sample.

An actual repeat of the measurements.

Bootstrapping by hand

To help you gain intuition about how bootstrapping works, imagine you have a data set that has only three points, [-1, 0, 1]. How many unique bootstrap samples can be drawn (e.g., [-1, 0, 1] and [1, 0, -1] are unique), and what is the maximum mean you can get from a bootstrap sample? It might be useful to jot down the samples on a piece of paper.

(These are too few data to get meaningful results from bootstrap procedures, but this example is useful for intuition.)

Instructions

There are 3 unique samples, and the maximum mean is 0.There are 10 unique samples, and the maximum mean is 0.There are 10 unique samples, and the maximum mean is 1.There are 27 unique samples, and the maximum mean is 0.There are 27 unique samples, and the maximum mean is 1.

- Types to choose from?

n = 3 - Number of chosen?

r = 3 - Order is important.

- Repetition is allowed.

- Formula: $n^{r}$

There are 27 total bootstrap samples, and one of them, [1,1,1] has a mean of 1. Conversely, 7 of them have a mean of zero.

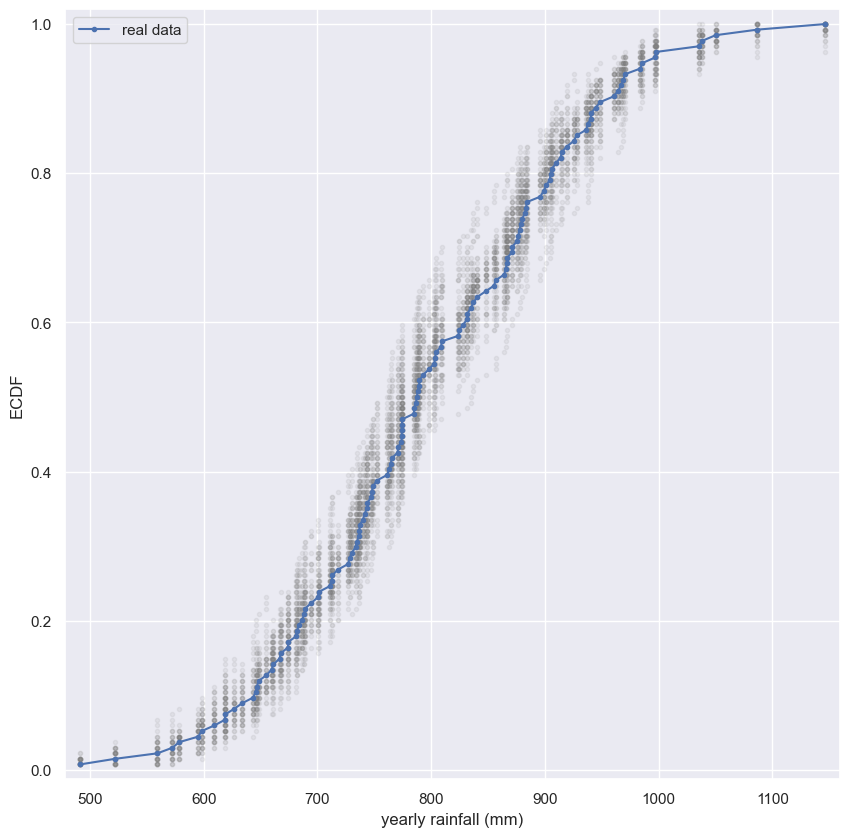

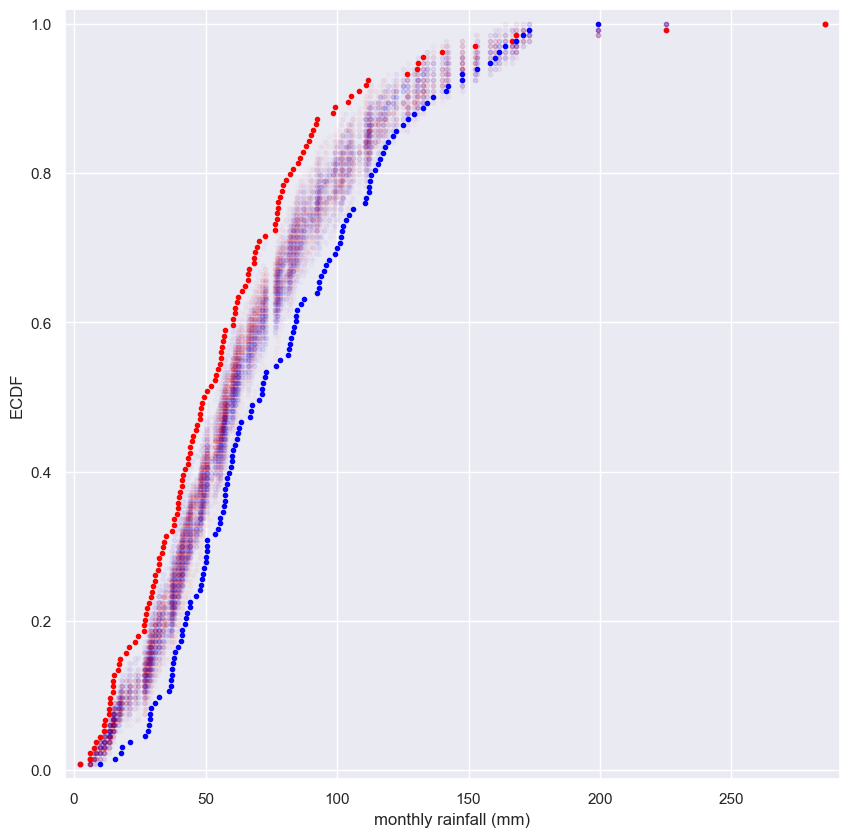

Visualizing bootstrap samples

In this exercise, you will generate bootstrap samples from the set of annual rainfall data measured at the Sheffield Weather Station in the UK from 1883 to 2015. The data are stored in the NumPy array rainfall in units of millimeters (mm). By graphically displaying the bootstrap samples with an ECDF, you can get a feel for how bootstrap sampling allows probabilistic descriptions of data.

Instructions

- Write a

forloop to acquire50bootstrap samples of the rainfall data and plot their ECDF. - Use

np.random.choice()to generate a bootstrap sample from the NumPy arrayrainfall. Be sure that thesizeof the resampled array islen(rainfall). - Use the function

ecdf()that you wrote in the prequel to this course to generate thexandyvalues for the ECDF of the bootstrap samplebs_sample. - Plot the ECDF values. Specify

color='gray'(to make gray dots) andalpha=0.1(to make them semi-transparent, since we are overlaying so many) in addition to themarker='.'andlinestyle='none'keyword arguments. - Use

ecdf()to generatexandyvalues for the ECDF of the originalrainfalldata available in the array rainfall. - Plot the ECDF values of the original data.

1

rainfall = sheffield.groupby('yyyy').agg({'rain': 'sum'})['rain'].tolist()

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

for _ in range(50):

# Generate bootstrap sample: bs_sample

bs_sample = np.random.choice(rainfall, size=len(rainfall))

# Compute and plot ECDF from bootstrap sample

x, y = ecdf(bs_sample)

_ = plt.plot(x, y, marker='.', linestyle='none',

color='gray', alpha=0.1)

# Compute and plot ECDF from original data

x, y = ecdf(rainfall)

_ = plt.plot(x, y, marker='.', label='real data')

# Make margins and label axes

plt.margins(0.02)

_ = plt.xlabel('yearly rainfall (mm)')

_ = plt.ylabel('ECDF')

plt.legend()

# Show the plot

plt.show()

Notice how the bootstrap samples give an idea of how the distribution of rainfalls is spread.

Bootstrap confidence intervals

- In the last video, we learned how to take a set of data, create a bootstrap sample, and then compute a bootstrap replicate of a given statistic.

- Since we will repeat the replicates over and over again, we can write a function to generate a bootstrap replicate.

- We will call the function

bootstrap_replicate_1d, since it works on 1-dimensional arrays. - We pass in the data and also a function that computes the statistic of interest.

- We could pass

np.meanornp.medianfor example. - Generating a replicate takes two steps.

- First, we choose entries out of the data array so that the bootstrap sample has the same number of entries as the original data.

- Then, we compute the statistic using the specified function

- If we call the function, we get a bootstrap replicate

def bootstrap_replicate_1d

1

2

3

4

def bootstrap_replicate_1d(data, func):

"""Generate bootstrap replicate of 1D data."""

bs_sample = np.random.choice(data, len(data))

return func(bs_sample)

1

bootstrap_replicate_1d(sol['velocity of light in air (km/s)'], np.mean)

1

299854.9

1

bootstrap_replicate_1d(sol['velocity of light in air (km/s)'], np.mean)

1

299859.0

Many bootstrap replicates

- How do we repeatedly get replicates?

- First, we have to initialize an array to store our bootstrap replicates.

- We will make 10,000 replicates, so we use

np.emptyto create the empty array. - Next, we write a

for-loopto generate a replicate and store it in thebs_replicatesarray. - Now that we have the replicates, we can make a histogram to see what we might expect to get for the mean of repeated measurements of the speed of light.

density=Truesets the height of the bars of the histogram such that the total area of the bars is equal to one.- This is call normalization, and we do it so that the histogram approximates a probability density function.

- The area under the PDF gives a probability.

- So, we have computed the approximate PDF of the mean speed of light we would expect to get if we performed the measurements again.

- If we repeat the experiment again and again, we are likely to only see the sample mean vary by about 30 km/s.

- Now it’s useful to summarize this result without having to resort to a graphical method like a histogram.

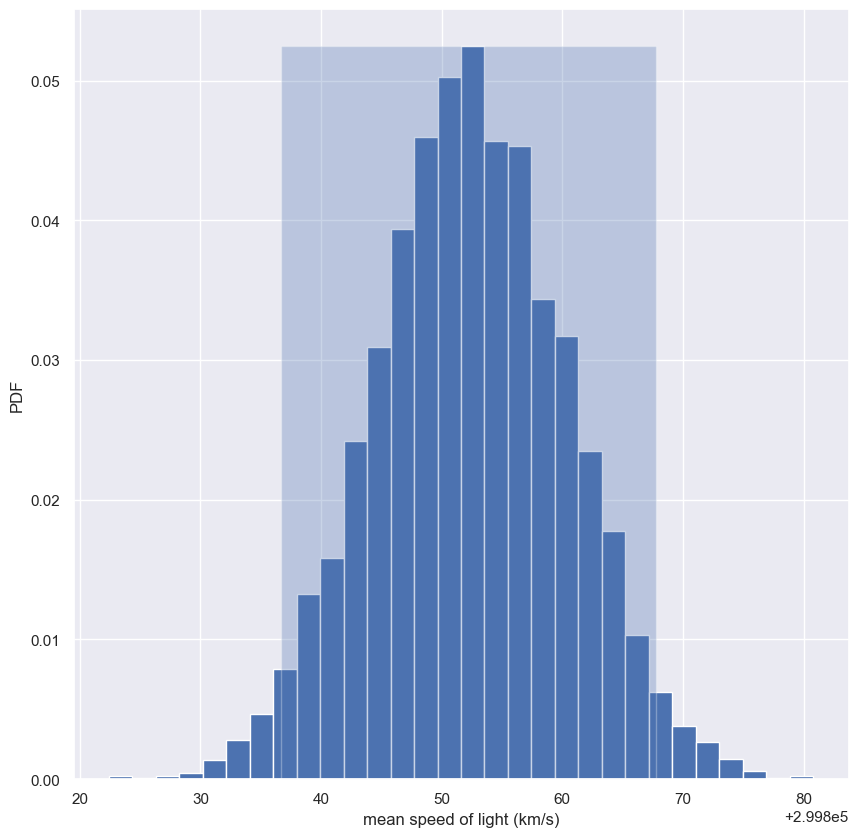

Confidence interval of a statistic

- To do this, we will compute the 95% confidence interval of the mean.

- The p% confidence interval is defined as follows

- If we repeated measurements over and over again, p% of the observed values would lie within the p% confidence interval.

- In our case, if we repeated the 100 measurements of the speed of light over and over again, 95% of the sample means would lie within the 95% confidence interval.

- By doing bootstrap replicas, we just “repeated” the experiment over and over again.

- We just use

np.perentileto compute the 2.5th and 97.5th percentiles to get the 95% confidence interval.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

bs_replicates = np.empty(10000)

for i in range(10000):

bs_replicates[i] = bootstrap_replicate_1d(sol['velocity of light in air (km/s)'], np.mean)

conf_int = np.percentile(bs_replicates, [2.5, 97.5])

print(conf_int)

n, bins, _ = plt.hist(bs_replicates, bins=30, density=True)

plt.fill_betweenx([0, n.max()], conf_int[0], conf_int[1], alpha=0.3)

plt.xlabel('mean speed of light (km/s)')

plt.ylabel('PDF')

plt.show()

1

[299836.7 299867.8]

Generating many bootstrap replicates

The function bootstrap_replicate_1d() from the video is available in your namespace. Now you’ll write another function, draw_bs_reps(data, func, size=1), which generates many bootstrap replicates from the data set. This function will come in handy for you again and again as you compute confidence intervals and later when you do hypothesis tests.

Instructions

- Define a function with call signature

draw_bs_reps(data, func, size=1). - Using

np.empty(), initialize an array calledbs_replicatesof sizesizeto hold all of the bootstrap replicates. - Write a

forloop that ranges oversizeand computes a replicate usingbootstrap_replicate_1d(). Refer to the exercise description above to see the function signature ofbootstrap_replicate_1d(). Store the replicate in the appropriate index ofbs_replicates. - Return the array of replicates

bs_replicates. This has already been done for you.

def draw_bs_reps

1

2

3

4

5

6

7

8

9

10

11

def draw_bs_reps(data, func, size=1):

"""Draw bootstrap replicates."""

# Initialize array of replicates: bs_replicates

bs_replicates = np.empty(size)

# Generate replicates

for i in range(size):

bs_replicates[i] = bootstrap_replicate_1d(data, func)

return bs_replicates

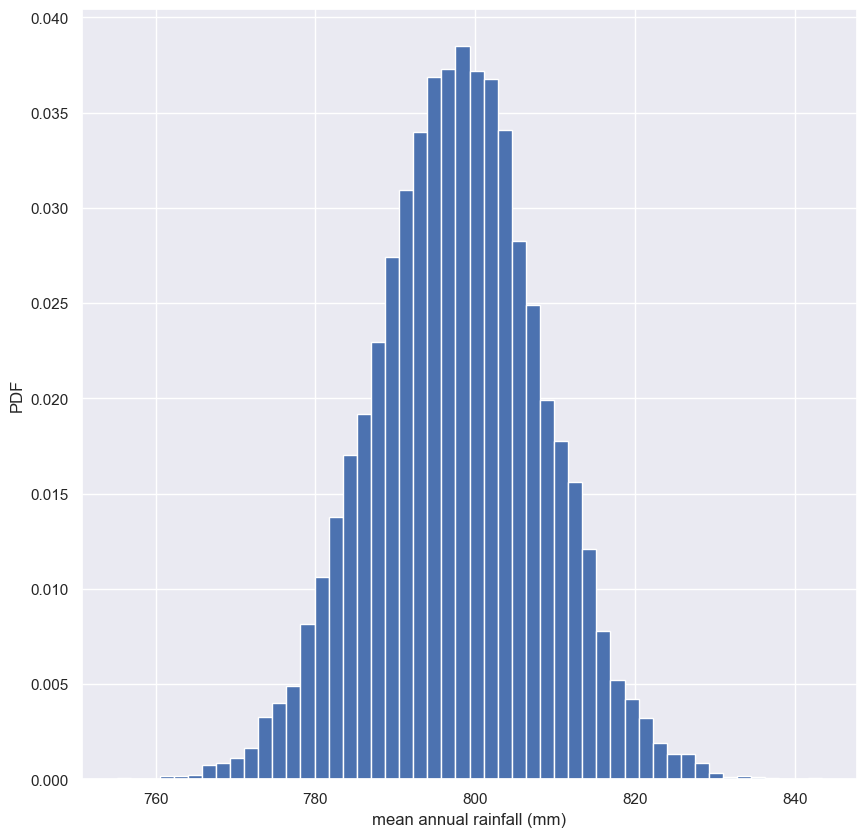

Bootstrap replicates of the mean and the SEM

In this exercise, you will compute a bootstrap estimate of the probability density function of the mean annual rainfall at the Sheffield Weather Station. Remember, we are estimating the mean annual rainfall we would get if the Sheffield Weather Station could repeat all of the measurements from 1883 to 2015 over and over again. This is a probabilistic estimate of the mean. You will plot the PDF as a histogram, and you will see that it is Normal.

In fact, it can be shown theoretically that under not-too-restrictive conditions, the value of the mean will always be Normally distributed. (This does not hold in general, just for the mean and a few other statistics.) The standard deviation of this distribution, called the standard error of the mean, or SEM, is given by the standard deviation of the data divided by the square root of the number of data points. I.e., for a data set, sem = np.std(data) / np.sqrt(len(data)). Using hacker statistics, you get this same result without the need to derive it, but you will verify this result from your bootstrap replicates.

The dataset has been pre-loaded for you into an array called rainfall.

Instructions

- Draw

10000bootstrap replicates of the mean annual rainfall using yourdraw_bs_reps()function and therainfallarray. Hint: Pass innp.meanforfuncto compute the mean. - As a reminder,

draw_bs_reps()accepts 3 arguments:data,func, andsize. - Compute and print the standard error of the mean of

rainfall. - The formula to compute this is

np.std(data) / np.sqrt(len(data)). - Compute and print the standard deviation of your bootstrap replicates

bs_replicates. - Make a histogram of the replicates using the

density=Truekeyword argument and50bins.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

# Take 10,000 bootstrap replicates of the mean: bs_replicates

bs_replicates = draw_bs_reps(rainfall, np.mean, 10000)

# Compute and print SEM

sem = np.std(rainfall) / np.sqrt(len(rainfall))

print(sem)

# Compute and print standard deviation of bootstrap replicates

bs_std = np.std(bs_replicates)

print(bs_std)

# Make a histogram of the results

_ = plt.hist(bs_replicates, bins=50, density=True)

_ = plt.xlabel('mean annual rainfall (mm)')

_ = plt.ylabel('PDF')

# Show the plot

plt.show()

1

2

10.635458130769608

10.55070953324053

Notice that the SEM we got from the known expression and the bootstrap replicates is the same and the distribution of the bootstrap replicates of the mean is Normal.

Confidence intervals of rainfall data

A confidence interval gives upper and lower bounds on the range of parameter values you might expect to get if we repeat our measurements. For named distributions, you can compute them analytically or look them up, but one of the many beautiful properties of the bootstrap method is that you can take percentiles of your bootstrap replicates to get your confidence interval. Conveniently, you can use the np.percentile() function.

Use the bootstrap replicates you just generated to compute the 95% confidence interval. That is, give the 2.5th and 97.5th percentile of your bootstrap replicates stored as bs_replicates. What is the 95% confidence interval?

(765, 776) mm/year- (780, 821) mm/year

(761, 817) mm/year(761, 841) mm/year

1

2

conf_int = np.percentile(bs_replicates, [2.5, 97.5])

print(conf_int)

1

[777.18011194 818.45406716]

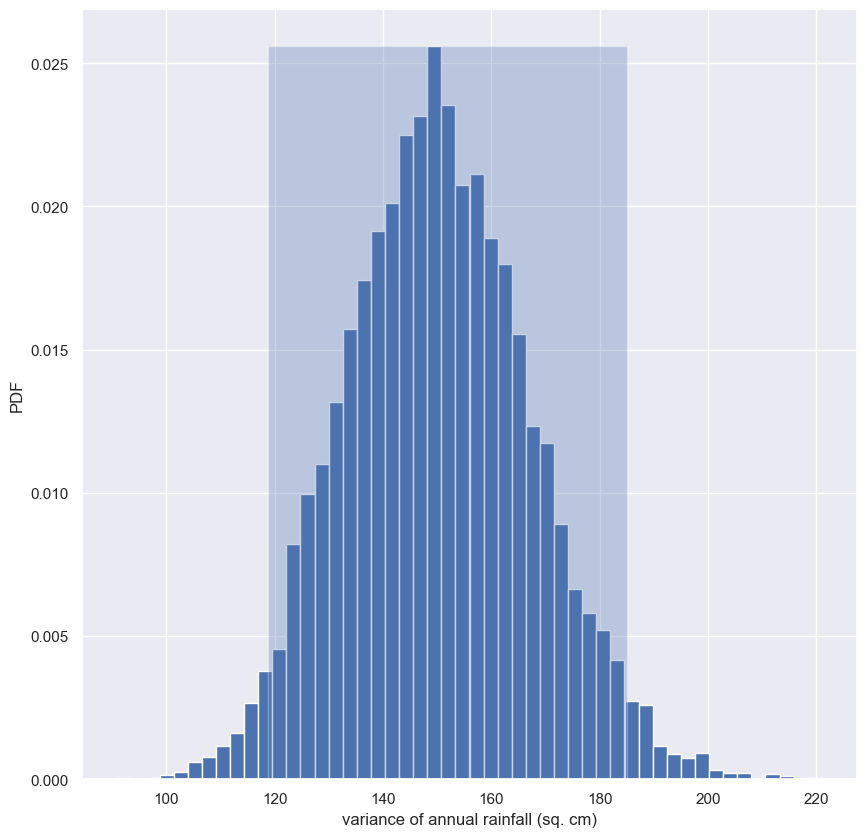

Bootstrap replicates of other statistics

We saw in a previous exercise that the mean is Normally distributed. This does not necessarily hold for other statistics, but no worry: as hackers, we can always take bootstrap replicates! In this exercise, you’ll generate bootstrap replicates for the variance of the annual rainfall at the Sheffield Weather Station and plot the histogram of the replicates.

Here, you will make use of the draw_bs_reps() function you defined a few exercises ago. It is provided below for your reference:

1

2

3

4

5

6

7

8

def draw_bs_reps(data, func, size=1):

"""Draw bootstrap replicates."""

# Initialize array of replicates

bs_replicates = np.empty(size)

# Generate replicates

for i in range(size):

bs_replicates[i] = bootstrap_replicate_1d(data, func)

return bs_replicates

Instructions

- Draw

10000bootstrap replicates of the variance in annual rainfall, stored in therainfalldataset, using yourdraw_bs_reps()function. Hint: Pass innp.varfor computing the variance. - Divide your variance replicates (

bs_replicates) by100to put the variance in units of square centimeters for convenience. - Make a histogram of

bs_replicatesusing thedensity=Truekeyword argument and50bins.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

# Generate 10,000 bootstrap replicates of the variance: bs_replicates

bs_replicates = draw_bs_reps(rainfall, np.var, 10000)

# Put the variance in units of square centimeters

bs_replicates = bs_replicates/100

conf_int = np.percentile(bs_replicates, [2.5, 97.5])

print(conf_int)

# Make a histogram of the results

n, bins, _ = plt.hist(bs_replicates, bins=50, density=True)

plt.fill_betweenx([0, n.max()], conf_int[0], conf_int[1], alpha=0.3)

plt.xlabel('variance of annual rainfall (sq. cm)')

plt.ylabel('PDF')

# Show the plot

plt.show()

1

[118.79130786 185.07380985]

This is not normally distributed, as it has a longer tail to the right. Note that you can also compute a confidence interval on the variance, or any other statistic, using np.percentile() with your bootstrap replicates.

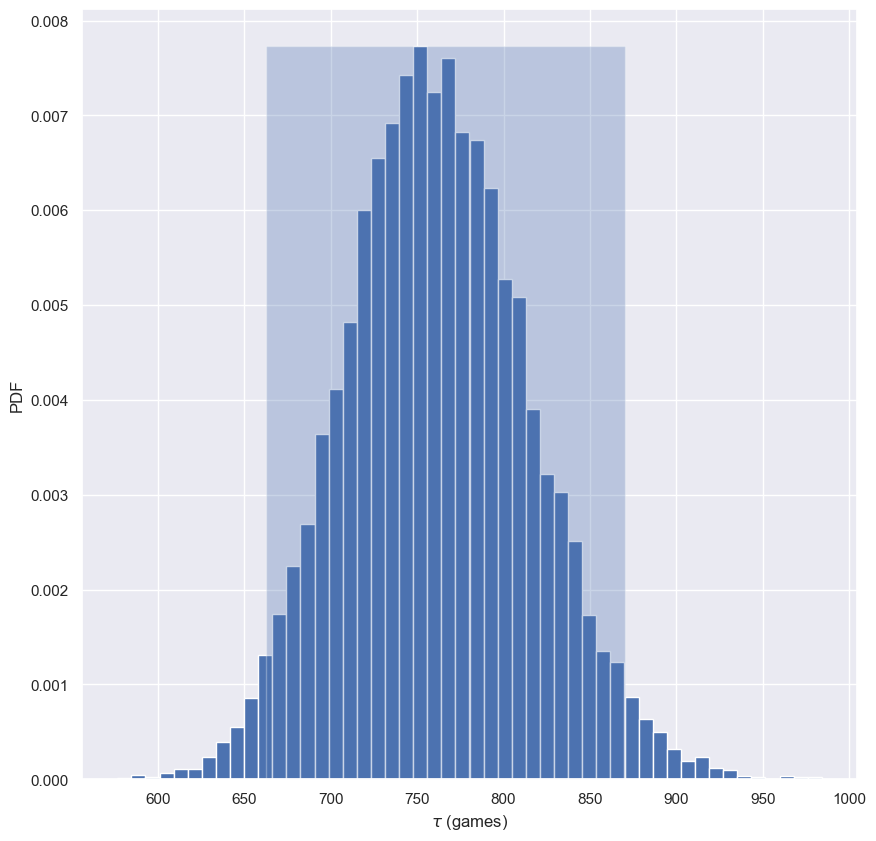

Confidence interval on the rate of no-hitters

Consider again the inter-no-hitter intervals for the modern era of baseball. Generate 10,000 bootstrap replicates of the optimal parameter $τ$. Plot a histogram of your replicates and report a 95% confidence interval.

Instructions

- Generate

10000bootstrap replicates of $τ$ from thenohitter_timesdata using yourdraw_bs_reps()function. Recall that the optimal $τ$ is calculated as the mean of the data. - Compute the 95% confidence interval using

np.percentile()and passing in two arguments: The arraybs_replicates, and the list of percentiles - in this case2.5and97.5. - Print the confidence interval.

- Plot a histogram of your bootstrap replicates.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

# Draw bootstrap replicates of the mean no-hitter time (equal to tau): bs_replicates

bs_replicates = draw_bs_reps(nohitter_times.nohitter_time, np.mean, 10000)

# Compute the 95% confidence interval: conf_int

conf_int = np.percentile(bs_replicates, [2.5, 97.5])

# Print the confidence interval

print('95% confidence interval =', conf_int, 'games')

# Plot the histogram of the replicates

n, bins, _ = plt.hist(bs_replicates, bins=50, density=True)

plt.fill_betweenx([0, n.max()], conf_int[0], conf_int[1], alpha=0.3)

plt.xlabel(r'$\tau$ (games)')

plt.ylabel('PDF')

# Show the plot

plt.show()

1

95% confidence interval = [662.58964143 870.47440239] games

This gives you an estimate of what the typical time between no-hitters is. It could be anywhere between 660 and 870 games.

Pairs bootstrap

- When we computed bootstrap confidence intervals on summary statistics, we did so nonparametrically.

- By this, I mean that we did not assume any model underlying the data; the estimates we done using the data alone.

- When we performed a linear least squares regression, however, we were using a linear model, which has two parameters, the slope and intercept.

- This was a parametric estimate.

- The optimal parameter values we compute for our parametric model are like other statistics, in that we get different values for them if we acquired the data again.

- We can perform bootstrap estimates to get confidence intervals on the slope and intercept as well.

- Remember: we need to think probabilistically.

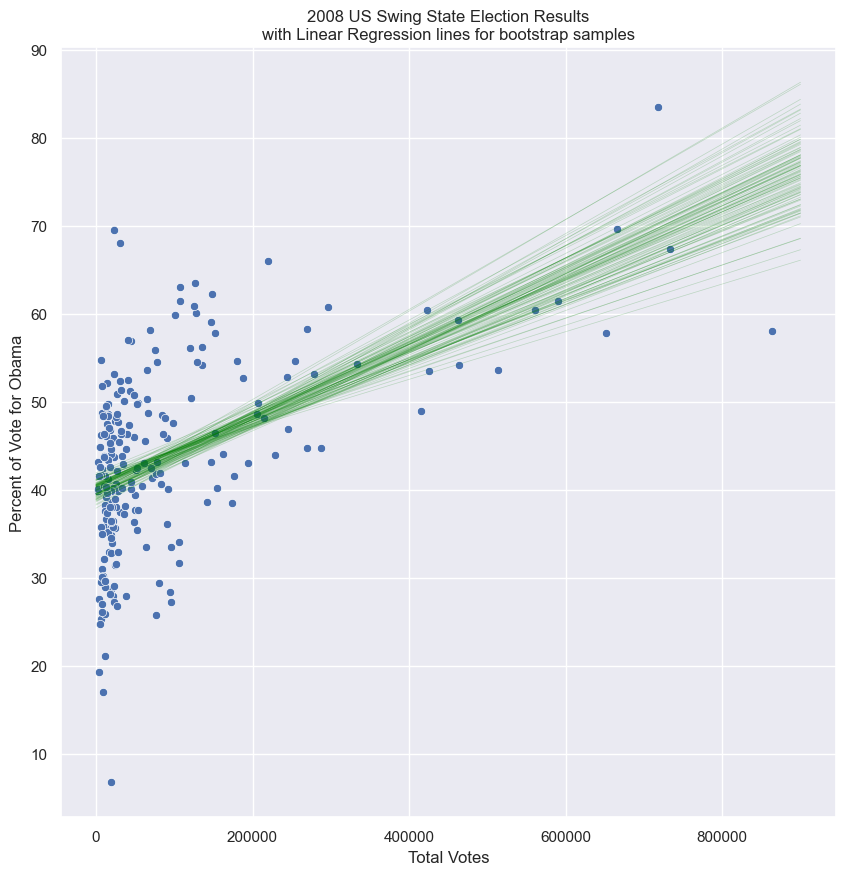

2008 US Swing State Election Results

- Let’s consider the swing state election data from the prequel course.

- What if we had the election again, under identical conditions?

- How would the slope and intercept change?

- This is kind of a tricky question; there are several ways to get bootstrap estimates of the confidence intervals on these parameters, each of which makes different assumptions about the data.

Pairs bootstrap for linear regression

- We will do a method that makes the least assumptions, called pairs bootstrap

- Since we cannot resample individual data because each county has two variables associated with it, the vote share for Obama and the total number of votes, we resample pairs.

- For the election data, we could randomly select a given county, and keep its total votes and Democratic share as a pair.

- So our bootstrap sample consists of a set (x, y) pair.

- We then compute the slope and intercept from this pairs bootstrap sample to get the bootstrap replicates.

- You can get confidence intervals from many bootstrap replicates of the slope and intercept, just like before.

Generating a pairs bootstrap sample

- Because

np.random.choicemust sample a 1D array, we will sample the indices of the data points. - We can generate the indices of a numpy array using the

np.arrangefunction, which gives an array of sequential integers. - We then sample the indices with replacement

- The bootstrap sample is generated by slicing out the respective values from the original data arrays.

- We can perform a linear regression using

np.polyfiton the pairs bootstrap sample to get a bootstrap replicate. - If we compare the result to the linear regression on the original data, they are close, but not equal.

- As we have seen before, you can use many of these replicates to generate bootstrap confidence intervals for the slope and intercept using

np.percentile. - You can also plot the lines you get from your bootstrap replicate to get a graphic idea how the regression line may change if the data were collected again.

- You’ll work through this whole procedure in the exercises.

- Always keep in mind that you are thinking probabilistically.

- Getting an optimal parameter values is the first step.

- Now, you are finding out how that parameter is likely to change upon repeated measurements.

1

np.arange(7)

1

array([0, 1, 2, 3, 4, 5, 6])

1

2

3

4

5

6

7

8

# random.choice by indices

inds = np.arange(len(swing.total_votes))

bs_inds = np.random.choice(inds, len(inds))

bs_total_votes = swing.total_votes[bs_inds].tolist()

bs_dem_share = swing.dem_share[bs_inds].tolist()

bs_slope, bs_intercept = np.polyfit(bs_total_votes, bs_dem_share, 1)

print(bs_slope, bs_intercept)

1

4.626047844746474e-05 40.39416987543208

1

2

3

4

# given the data in a pandas dataframe

bs_df_sample = swing[['total_votes', 'dem_share']].sample(len(swing.index), replace=True).copy()

np.polyfit(bs_df_sample.total_votes, bs_df_sample.dem_share, 1)

1

array([4.44451644e-05, 3.85875615e+01])

1

2

# linear regression of original data

np.polyfit(swing.total_votes, swing.dem_share, 1)

1

array([4.0370717e-05, 4.0113912e+01])

def bs_df_poly_1

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

def bs_df_poly_1(df: pd.DataFrame, size: int, x1: int, x2: int) -> list:

"""

Calculates a number of bootstrap samples determined by size

For each bs_df_sample, calculate the slope & intercept

For each slope & intercept, calculate the line y1 & y2 for a line segment

The returned list can be plotted with matplotlib.collections.LineCollection

df: two column dataframe with x & y

size: number of bootstrap samples

x1: first x-intercept

x2: second x_intercpt

returns a list of line coordinates [[(x1, y1), (x2, y2)], [(x1, y1), (x2, y2)]]

returns a list of slope intercepts

"""

bs_df_samples = [df.sample(len(df), replace=True) for _ in range(size)]

poly_samples = [np.polyfit(v.iloc[:, 0], v.iloc[:, 1], 1) for v in bs_df_samples]

collections = [[(x1, v[0]*x1 + v[1]), (x2, v[0]*x2 + v[1])] for v in poly_samples]

return poly_samples, collections

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

g = sns.scatterplot(x=swing.total_votes, y=swing.dem_share, )

polys, lines = bs_df_poly_1(swing[['total_votes', 'dem_share']], 100, 0, 900000)

# lines can be plotted with slope and intercept

x = np.array([0, 900000])

for poly in polys:

plt.plot(x, poly[0]*x + poly[1], linewidth=0.5, alpha=0.2, color='green')

# or lines can be plotted with a collection of lines

# lc = mc.LineCollection(lines, color='green', alpha=0.2)

# g.add_collection(lc)

plt.title('2008 US Swing State Election Results\nwith Linear Regression lines for bootstrap samples')

plt.xlabel('Total Votes')

plt.ylabel('Percent of Vote for Obama')

plt.show()

A function to do pairs bootstrap

As discussed in the video, pairs bootstrap involves resampling pairs of data. Each collection of pairs fit with a line, in this case using np.polyfit(). We do this again and again, getting bootstrap replicates of the parameter values. To have a useful tool for doing pairs bootstrap, you will write a function to perform pairs bootstrap on a set of x,y data.

Instructions

- Define a function with call signature

draw_bs_pairs_linreg(x, y, size=1)to perform pairs bootstrap estimates on linear regression parameters. - Use

np.arange()to set up an array of indices going from0tolen(x). These are what you will resample and use them to pick values out of thexandyarrays. - Use

np.empty()to initialize the slope and intercept replicate arrays to be of sizesize. - Write a

forloop to:- Resample the indices

inds. Usenp.random.choice()to do this. - Make new $x$ and $y$ arrays

bs_xandbs_yusing the the resampled indicesbs_inds. To do this, slicexandywithbs_inds. - Use

np.polyfit()on the new $x$ and $y$ arrays and store the computed slope and intercept.

- Resample the indices

- Return the pair bootstrap replicates of the slope and intercept.

def draw_bs_pairs_linreg

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

def draw_bs_pairs_linreg(x, y, size=1):

"""Perform pairs bootstrap for linear regression."""

# Set up array of indices to sample from: inds

inds = np.arange(len(x))

# Initialize replicates: bs_slope_reps, bs_intercept_reps

bs_slope_reps = np.empty(size)

bs_intercept_reps = np.empty(size)

# Generate replicates

for i in range(size):

bs_inds = np.random.choice(inds, size=len(inds))

bs_x, bs_y = x[bs_inds], y[bs_inds]

bs_slope_reps[i], bs_intercept_reps[i] = np.polyfit(bs_x, bs_y, 1)

return bs_slope_reps, bs_intercept_reps

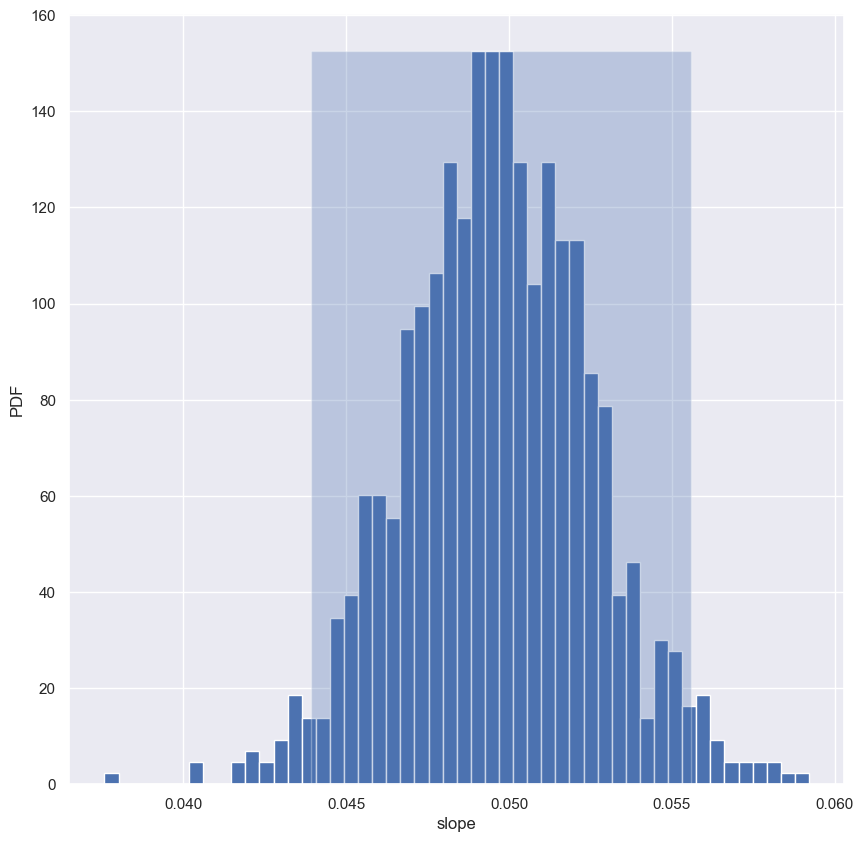

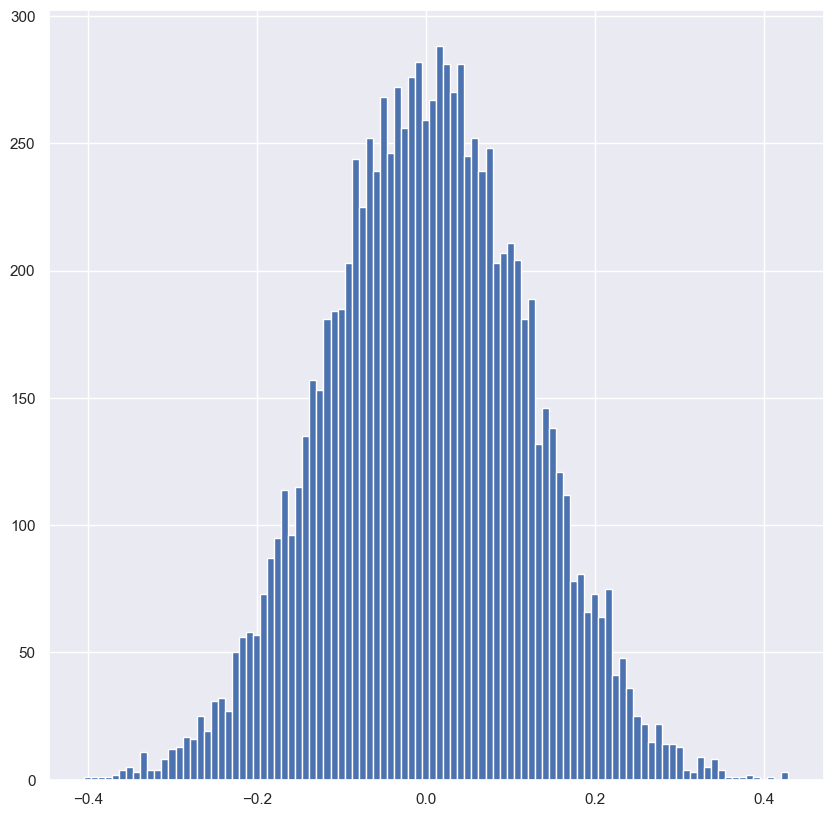

Pairs bootstrap of literacy/fertility data

Using the function you just wrote, perform pairs bootstrap to plot a histogram describing the estimate of the slope from the illiteracy/fertility data. Also report the 95% confidence interval of the slope. The data is available to you in the NumPy arrays illiteracy and fertility.

As a reminder, draw_bs_pairs_linreg() has a function signature of draw_bs_pairs_linreg(x, y, size=1), and it returns two values: bs_slope_reps and bs_intercept_reps.

Instructions

- Use your

draw_bs_pairs_linreg()function to take1000bootstrap replicates of the slope and intercept. The x-axis data isilliteracyand y-axis data isfertility. - Compute and print the 95% bootstrap confidence interval for the slope.

- Plot and show a histogram of the slope replicates. Be sure to label your axes.

1

2

3

4

5

6

7

8

9

10

11

12

13

# Generate replicates of slope and intercept using pairs bootstrap

bs_slope_reps, bs_intercept_reps = draw_bs_pairs_linreg(illiteracy, fertility, 1000)

# Compute and print 95% CI for slope

conf_int = np.percentile(bs_slope_reps, [2.5, 97.5])

print(conf_int)

# Plot the histogram

n, bins, _ = plt.hist(bs_slope_reps, bins=50, density=True)

plt.fill_betweenx([0, n.max()], conf_int[0], conf_int[1], alpha=0.3)

plt.xlabel('slope')

plt.ylabel('PDF')

plt.show()

1

[0.04392444 0.05557051]

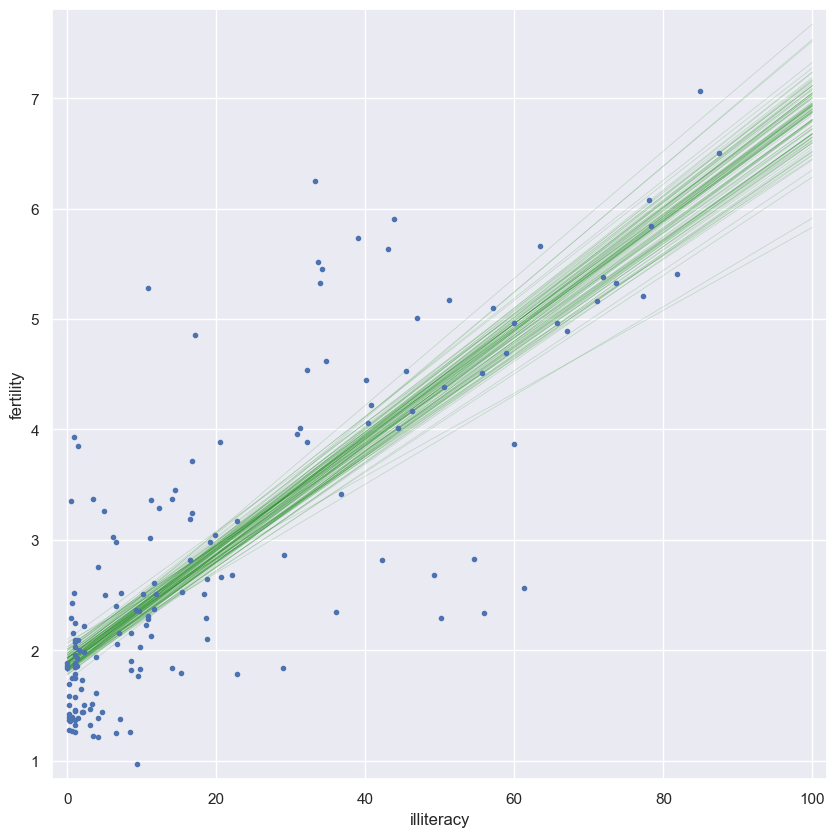

Plotting bootstrap regressions

A nice way to visualize the variability we might expect in a linear regression is to plot the line you would get from each bootstrap replicate of the slope and intercept. Do this for the first 100 of your bootstrap replicates of the slope and intercept (stored as bs_slope_reps and bs_intercept_reps).

Instructions

- Generate an array of $x$-values consisting of

0and100for the plot of the regression lines. Use thenp.array()function for this. - Write a

forloop in which you plot a regression line with a slope and intercept given by the pairs bootstrap replicates. Do this for100lines. - When plotting the regression lines in each iteration of the

forloop, recall the regression equationy = a*x + b. Here,aisbs_slope_reps[i]andbisbs_intercept_reps[i]. - Specify the keyword arguments

linewidth=0.5,alpha=0.2, andcolor='red'in your call toplt.plot(). - Make a scatter plot with

illiteracyon the x-axis andfertilityon the y-axis. Remember to specify themarker='.'andlinestyle='none'keyword arguments. - Label the axes, set a 2% margin, and show the plot.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

# Generate array of x-values for bootstrap lines: x

x = np.array([0, 100])

# Plot the bootstrap lines

for i in range(0, 100):

_ = plt.plot(x, bs_slope_reps[i]*x + bs_intercept_reps[i], linewidth=0.5, alpha=0.2, color='green')

# Plot the data

_ = plt.plot(illiteracy, fertility, marker='.', linestyle='none')

# Label axes, set the margins, and show the plot

_ = plt.xlabel('illiteracy')

_ = plt.ylabel('fertility')

plt.margins(0.02)

plt.show()

Introduction to hypothesis testing

You now know how to define and estimate parameters given a model. But the question remains: how reasonable is it to observe your data if a model is true? This question is addressed by hypothesis tests. They are the icing on the inference cake. After completing this chapter, you will be able to carefully construct and test hypotheses using hacker statistics.

Formulating and simulating a hypothesis

- When we studied linear regression, we assumed a linear model for how the data are generated and then estimated the parameters that are defined by that model.

- But, how do we assess how reasonable it is that our observed data are actually described by the model?

- This is the realm of hypothesis testing.

- Let’s start by thinking about a simpler scenario.

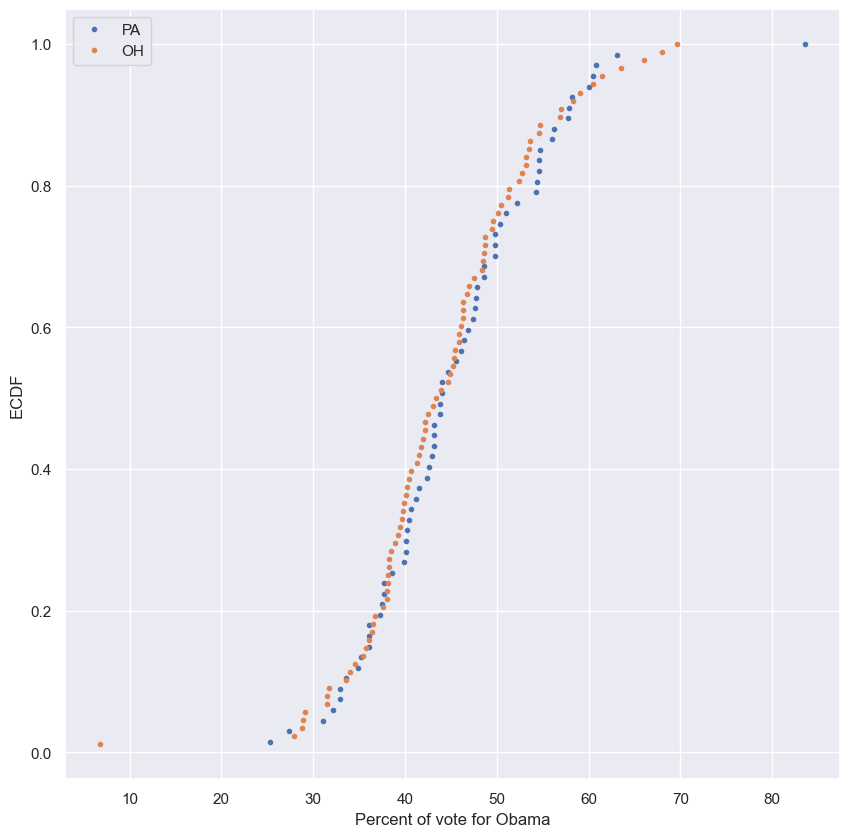

Consider the following

- Ohio and Pennsylvania are similar states.

- They’re neighbors and they both have liberal urban counties and also lots of rural conservative counties.

- I hypothesize that country-level voting in these two states have identical probability distributions.

- We have voting data to help test this hypothesis.

- Stated more concretely, we’re going to assess how reasonable the observed data are assuming the hypothesis is true.

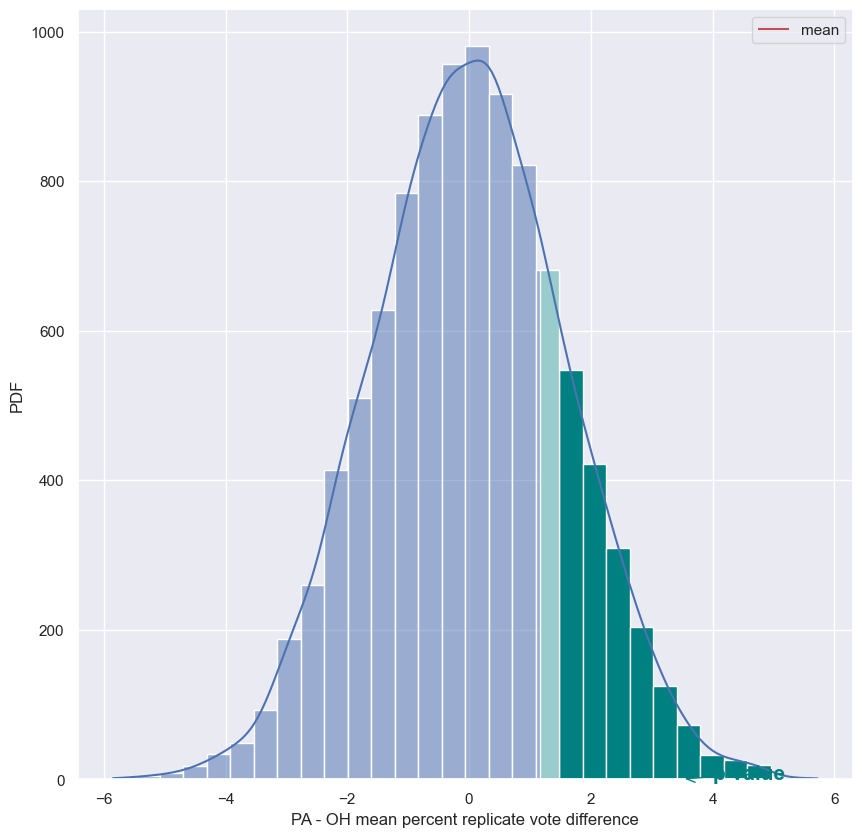

Null hypothesis

- The hypothesis we are testing is typically called the null hypothesis.

- We might start by just plotting the two ECDFs of county-level votes.

- Based on the graph below, it’s pretty tough to make a judgment.

- Pennsylvania seems to be slightly more toward Obama in the middle part of the ECDFs, but not by much.

- We can’t really draw a conclusion here.

- We could just compare some summary statistics.

- An answer is still not clear.

- The means and medians of the two states are really close, and the standard deviations are almost identical.

- Eyeballing the data is not enough to make a determination.

- To resolve this issue, we can simulate what the data would look like if the country-level voting trends in the two states were identically distributed.

- We can do this by putting the Democratic share of the vote for all of Pennsylvania’s 67 counties and Ohio’s 88 counties together.

- We then ignore what state they belong to.

- Next, randomly scramble the ordering of the counties.

- We then re-label the first 67 to be Pennsylvania and the remaining to be Ohio.

- We we just redid the election as if there was no difference between Pennsylvania and Ohio.

Permutation

- This technique, of scrambling the order of an array, is called permutation.

- It is at the heart of simulating a null hypothesis were we assume two quantities are identically distributed.

1

swing.head()

| state | county | total_votes | dem_votes | rep_votes | dem_share | |

|---|---|---|---|---|---|---|

| 0 | PA | Erie County | 127691 | 75775 | 50351 | 60.08 |

| 1 | PA | Bradford County | 25787 | 10306 | 15057 | 40.64 |

| 2 | PA | Tioga County | 17984 | 6390 | 11326 | 36.07 |

| 3 | PA | McKean County | 15947 | 6465 | 9224 | 41.21 |

| 4 | PA | Potter County | 7507 | 2300 | 5109 | 31.04 |

1

2

3

4

5

pa_dem_share = swing.dem_share[swing.state == 'PA']

oh_dem_share = swing.dem_share[swing.state == 'OH']

pa_x, pa_y = ecdf(pa_dem_share)

oh_x, oh_y = ecdf(oh_dem_share)

1

2

3

4

5

6

plt.plot(pa_x, pa_y, marker='.', linestyle='none', label='PA')

plt.plot(oh_x, oh_y, marker='.', linestyle='none', label='OH')

plt.xlabel('Percent of vote for Obama')

plt.ylabel('ECDF')

plt.legend()

plt.show()

1

2

df = pd.DataFrame({'PA': pa_dem_share.reset_index(drop=True), 'OH': oh_dem_share.reset_index(drop=True)})

df.head()

| PA | OH | |

|---|---|---|

| 0 | 60.08 | 56.94 |

| 1 | 40.64 | 50.46 |

| 2 | 36.07 | 65.99 |

| 3 | 41.21 | 45.88 |

| 4 | 31.04 | 42.23 |

1

2

3

pa_oh_stats = df.agg(['mean', 'median', 'std'])

pa_oh_stats['PA - OH'] = pa_oh_stats.PA - pa_oh_stats.OH

pa_oh_stats

| PA | OH | PA - OH | |

|---|---|---|---|

| mean | 45.476418 | 44.318182 | 1.158236 |

| median | 44.030000 | 43.675000 | 0.355000 |

| std | 9.803028 | 9.895699 | -0.092671 |

Generating a permutation sample

- Make a single array with all the counties.

- Use

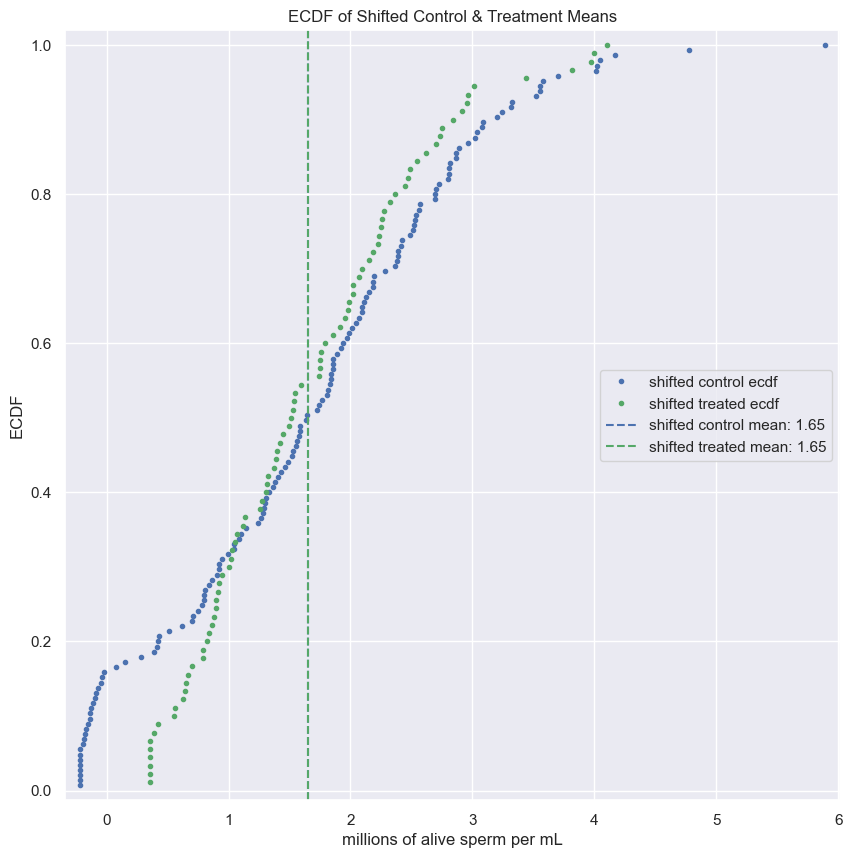

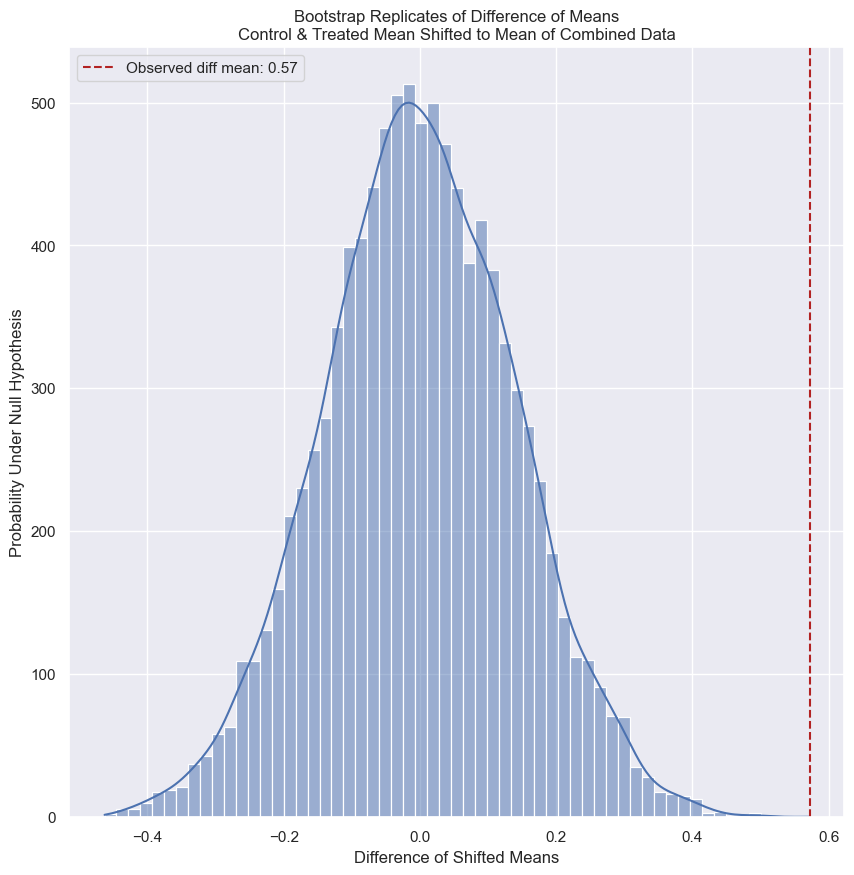

np.random.permutationto permute the entries of the array. - Get the permutation samples by assigning the first 67 to be labeled Pennsylvania and the last 88 to be labeled Ohio