- Course: DataCamp: Introduction to Databases in Python

- This notebook was created as a reproducible reference.

- Most of the material is from the course; however, code updates have been introduced for compatibility with SQLAlchemy v2.0.29.

- I completed the exercises.

- If you find the content beneficial, consider a DataCamp Subscription.

- Tested with:

- pandas version: 2.2.1

- matplotlib version: 3.8.4

- SQLAlchemy version: 2.0.29

- PyMySQL version: 1.0.2

| import pandas as pd

import matplotlib.pyplot as plt

from sqlalchemy import create_engine, Table, MetaData, select, and_, desc, func, case, cast, Float

from sqlalchemy import Column, String, Integer, Boolean, insert, update, delete, inspect, text

from sqlalchemy.orm import Session

import pymysql

from pprint import pprint as pp

import csv

|

| pd.set_option('display.max_columns', 200)

pd.set_option('display.max_rows', 300)

pd.set_option('display.expand_frame_repr', True)

|

Data Files Location

Data File Objects

| census_csv_data = 'data/intro_to_databases_in_python/census.csv'

census_sql_data = 'sqlite:///data/intro_to_databases_in_python/census.sqlite'

employees_sql_data = 'sqlite:///data/intro_to_databases_in_python/employees.sqlite'

|

Introduction to Databases in Python

Summary

- Introduction

- Discuss the role of database management in modern software development.

- Introduce SQLAlchemy as a powerful tool for database interactions in Python.

- Setting Up Your Environment

- Explanation of how to set up a local SQLite database for development and testing purposes.

- Core Functionalities of SQLAlchemy

- Detailed instructions on creating an engine to connect to various types of databases.

- Examples of executing raw SQL statements through SQLAlchemy to perform database operations.

- Using SQLAlchemy ORM (Object-Relational Mapping)

- Definition and benefits of using ORM over traditional SQL queries.

- Guide to defining database schemas with Python classes and mapping them to database tables.

- Techniques for manipulating data in tables using ORM, including adding, deleting, and updating records.

- Session Management and Transactions

- Explanation of session management in SQLAlchemy for handling database operations.

- Detailed process for adding records to the database, committing transactions to save changes, and rolling back transactions in case of errors.

- Querying Data

- Introduction to constructing queries using SQLAlchemy ORM, focusing on simplicity and power.

- Advanced querying techniques, including joining tables, filtering results, and using subqueries for complex data retrieval.

- Integrating SQLAlchemy with SQLite

- Configuration steps to integrate SQLAlchemy with SQLite, a lightweight relational database.

- Practical examples and code snippets for performing create, read, update, and delete (CRUD) operations in SQLite using SQLAlchemy.

Course Description

In this Python SQL course, you’ll learn the basics of using Structured Query Language (SQL) with Python. This will be useful since whether you like it or not, databases are ubiquitous and, as a data scientist, you’ll need to interact with them constantly. The Python SQL toolkit SQLAlchemy provides an accessible and intuitive way to query, build & write to SQLite, MySQL and Postgresql databases (among many others), all of which you will encounter in the daily life of a data scientist.

Basics of Relational Databases

In this chapter, you will become acquainted with the fundamentals of Relational Databases and the Relational Model. You will learn how to connect to a database and then interact with it by writing basic SQL queries, both in raw SQL as well as with SQLAlchemy, which provides a Pythonic way of interacting with databases.

Introduction to Databases

A database consists of tables

Table consists of columns and rows
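
These terms can be made concrete with a minimal sketch using SQLAlchemy Core and an in-memory SQLite database. The `people` table and its two sample rows are made up for illustration and are not part of the course data.

```python
from sqlalchemy import Column, Integer, MetaData, String, Table, create_engine, insert, select

# In-memory SQLite database: nothing is written to disk.
engine = create_engine("sqlite:///:memory:")
metadata = MetaData()

# A table is defined by its columns (a name and a type for each).
people = Table(
    "people",
    metadata,
    Column("state", String(30)),
    Column("age", Integer),
)
metadata.create_all(engine)

# Each dictionary becomes one row of the table.
with engine.begin() as conn:
    conn.execute(
        insert(people),
        [{"state": "Illinois", "age": 0}, {"state": "Texas", "age": 1}],
    )
    rows = conn.execute(select(people)).fetchall()

column_names = people.columns.keys()
print(column_names)
print(rows)
```

The database holds one table, the table has two columns, and each inserted dictionary comes back as one row.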

Exercises

Relational Model

Which of the following is not part of the relational model?

Answer the question

- Tables

- Columns

- Rows

- Dimensions

- Relationships

Connecting to a Database

Meet SQLAlchemy

- Two Main Pieces

- Core (Relational Model focused)

- ORM (User Data Model focused)

There are many types of databases

- SQLite

- PostgreSQL

- MySQL

- MS SQL

- Oracle

- Many more

Connecting to a database

| from sqlalchemy import create_engine

engine = create_engine('sqlite:///census_nyc.sqlite')

connection = engine.connect()

|

- Engine: common interface to the database from SQLAlchemy

- Connection string: All the details required to find the database (and login, if necessary)

A word on connection strings

- ‘sqlite:///census_nyc.sqlite’

- Driver+Dialect Filename
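
A small sketch of how the pieces of a connection string surface on the engine. It assumes an in-memory SQLite database (`:memory:`) instead of the course file so that it runs anywhere. The general URL shape is `dialect+driver://username:password@host:port/database`; for SQLite the driver part is usually omitted and SQLAlchemy falls back to its default.

```python
from sqlalchemy import create_engine

# No driver is named in the URL, so SQLAlchemy picks its default SQLite driver.
engine = create_engine("sqlite:///:memory:")

dialect_name = engine.dialect.name  # the database backend
driver_name = engine.driver         # the Python DBAPI driver in use
database = engine.url.database      # file path, or ":memory:" here

print(dialect_name, driver_name, database)
```

Creating the engine does not open a connection yet; that happens on the first call that needs one, such as `engine.connect()`.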

What’s in your database?

- Before querying your database, you’ll want to know what is in it: what the tables are, for example:

| from sqlalchemy import create_engine

engine = create_engine('sqlite:///census_nyc.sqlite')

print(engine.table_names())

Out: ['census', 'state_fact']

|

Reflection

- Reflection reads database and builds SQLAlchemy Table objects

| from sqlalchemy import MetaData, Table

metadata = MetaData()

census = Table('census', metadata, autoload=True, autoload_with=engine)

print(repr(census))

Out:

Table('census', MetaData(bind=None), Column('state',

VARCHAR(length=30), table=<census>), Column('sex',

VARCHAR(length=1), table=<census>), Column('age', INTEGER(),

table=<census>), Column('pop2000', INTEGER(), table=<census>),

Column('pop2008', INTEGER(), table=<census>), schema=None)

|

Exercises

Engines and Connection Strings

Alright, it’s time to create your first engine! An engine is just a common interface to a database, and the information it requires to connect to one is contained in a connection string, such as sqlite:///census_nyc.sqlite. Here, sqlite is the database driver, while census_nyc.sqlite is a SQLite file contained in the local directory.

You can learn a lot more about connection strings in the SQLAlchemy documentation.

Your job in this exercise is to create an engine that connects to a local SQLite file named census.sqlite. Then, print the names of the tables it contains using the .table_names() method. Note that when you just want to print the table names, you do not need to use engine.connect() after creating the engine.

Instructions

- Import create_engine from the sqlalchemy module.

- Using the create_engine() function, create an engine for a local file named census.sqlite with sqlite as the driver. Be sure to enclose the connection string within quotation marks.

- Print the output from the .table_names() method on the engine.

| # Import create_engine - at top of notebook

# Create an engine that connects to the census.sqlite file: engine

engine = create_engine(census_sql_data)

# Create an inspector object

inspector = inspect(engine)

# Use the inspector to list the table names

table_names = inspector.get_table_names()

print(table_names)

|

| ['census', 'state_fact']

|

Autoloading Tables from a Database

SQLAlchemy can be used to automatically load tables from a database using something called reflection. Reflection is the process of reading the database and building the metadata based on that information. It’s the opposite of creating a Table by hand and is very useful for working with existing databases. To perform reflection, you need to import the Table object from the SQLAlchemy package. Then, you use this Table object to read your table from the engine and autoload the columns. Using the Table object in this manner is a lot like passing arguments to a function. For example, to autoload the columns with the engine, you have to specify the keyword arguments autoload=True and autoload_with=engine to Table().

In this exercise, your job is to reflect the census table available on your engine into a variable called census. The metadata has already been loaded for you using MetaData() and is available in the variable metadata.

Instructions

- Import the Table object from sqlalchemy.

- Reflect the census table by using the Table object with the arguments:

- The name of the table as a string (‘census’).

- The metadata, contained in the variable metadata.

- autoload=True

- The engine to autoload with - in this case, engine.

- Print the details of census using the repr() function.

2024-04-19 Update Notes

The course code Table('census', metadata, autoload=True, autoload_with=engine) fails on SQLAlchemy 2.0. The autoload parameter of the Table constructor was deprecated in version 1.4 and is no longer accepted in 2.0. The autoload_with parameter is still supported, and passing it on its own is enough to reflect a table.

Alternatively, every table in the database can be reflected at once with metadata.reflect(engine), after which each table is available through the metadata.tables dictionary.

The code below first reflects all tables from the connected database into the metadata object with metadata.reflect(engine). It then retrieves a specific table (in this case, ‘census’) with autoload_with=engine and without the removed autoload parameter. This pattern works in both SQLAlchemy 1.4 and 2.0.

| # Import Table - at top of Notebook

# Create a MetaData instance

metadata = MetaData()

# Reflect an existing table by using the Table constructor and MetaData.reflect

metadata.reflect(engine)

census = Table('census', metadata, autoload_with=engine)

# Now you can print out the structure of the table

print(repr(census))

|

| Table('census', MetaData(), Column('state', VARCHAR(length=30), table=<census>), Column('sex', VARCHAR(length=1), table=<census>), Column('age', INTEGER(), table=<census>), Column('pop2000', INTEGER(), table=<census>), Column('pop2008', INTEGER(), table=<census>), schema=None)

|

Viewing Table Details

Great job reflecting the census table! Now you can begin to learn more about the columns and structure of your table. It is important to get an understanding of your database by examining the column names. This can be done by using the .columns attribute and accessing the .keys() method. For example, census.columns.keys() would return a list of column names of the census table.

Following this, we can use the metadata container to find out more details about the reflected table such as the columns and their types. For example, table objects are stored in the metadata.tables dictionary, so you can get the metadata of your census table with metadata.tables[‘census’]. This is similar to your use of the repr() function on the census table from the previous exercise.
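
As a rough sketch of inspecting column names and types through the metadata container: the example below builds a small stand-in `census` table in an in-memory SQLite database (an assumption, so that the code runs without the course file), reflects it, and then reads each column's name and type.

```python
from sqlalchemy import Column, Integer, MetaData, String, Table, create_engine

engine = create_engine("sqlite:///:memory:")

# Build a small stand-in table so there is something to reflect.
setup = MetaData()
Table(
    "census",
    setup,
    Column("state", String(30)),
    Column("sex", String(1)),
    Column("age", Integer),
)
setup.create_all(engine)

# Reflect into a fresh MetaData, as you would with an existing database.
metadata = MetaData()
metadata.reflect(bind=engine)
census = metadata.tables["census"]

# Each Column object carries its name and its database type.
column_types = {col.name: str(col.type) for col in census.columns}
print(column_types)
```

The same loop works on the real census table once it has been reflected.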

Instructions

- Reflect the census table as you did in the previous exercise using the Table() function.

- Print a list of column names of the census table by applying the .keys() method to census.columns.

- Print the details of the census table using the metadata.tables dictionary along with the repr() function. To do this, first access the ‘census’ key of the metadata.tables dictionary, and place this inside the provided repr() function.

| # Reflect the census table from the engine: census

census = Table('census', metadata, autoload_with=engine)

# Print the column names

census.columns.keys()

|

| ['state', 'sex', 'age', 'pop2000', 'pop2008']

|

| # Print full table metadata

repr(metadata.tables['census'])

|

| "Table('census', MetaData(), Column('state', VARCHAR(length=30), table=<census>), Column('sex', VARCHAR(length=1), table=<census>), Column('age', INTEGER(), table=<census>), Column('pop2000', INTEGER(), table=<census>), Column('pop2008', INTEGER(), table=<census>), schema=None)"

|

Introduction to SQL

SQL Statements

- Select, Insert, Update & Delete data

- Create & Alter data

Basic SQL querying

| ● SELECT column_name FROM table_name

● SELECT pop2008 FROM People

● SELECT * FROM People

|

Basic SQL querying

| from sqlalchemy import create_engine

engine = create_engine('sqlite:///census_nyc.sqlite')

connection = engine.connect()

stmt = 'SELECT * FROM people'

result_proxy = connection.execute(stmt)

results = result_proxy.fetchall()

|

ResultProxy vs ResultSet

| In [5]: result_proxy = connection.execute(stmt)

In [6]: results = result_proxy.fetchall()

|

Handling ResultSets

| first_row = results[0]

print(first_row)

Out: ('Illinois', 'M', 0, 89600, 95012)

print(first_row.keys())

Out: ['state', 'sex', 'age', 'pop2000', 'pop2008']

print(first_row.state)

Out: 'Illinois'

|

SQLAlchemy to Build Queries

- Provides a Pythonic way to build SQL statements

- Hides differences between backend database types
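
One way to see how SQLAlchemy hides backend differences is to compile the same statement for two dialects. The sketch below is an illustration rather than course material: it uses a hand-written stand-in `census` table and string concatenation, which SQLite and MySQL spell differently. No database connection or driver is needed just to compile.

```python
from sqlalchemy import Column, MetaData, String, Table, select
from sqlalchemy.dialects import mysql, sqlite

metadata = MetaData()
census = Table(
    "census",
    metadata,
    Column("state", String(30)),
    Column("sex", String(1)),
)

# One Python expression: concatenate two string columns.
stmt = select(census.c.state + census.c.sex)

# The same statement rendered for two different backends.
sqlite_sql = str(stmt.compile(dialect=sqlite.dialect()))
mysql_sql = str(stmt.compile(dialect=mysql.dialect()))

print(sqlite_sql)
print(mysql_sql)
```

SQLite gets the `||` operator while MySQL gets a `concat()` call, yet the Python code that built the statement is identical.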

SQLAlchemy querying

| from sqlalchemy import Table, MetaData, select

metadata = MetaData()

census = Table('census', metadata, autoload_with=engine)

stmt = select(census)

results = connection.execute(stmt).fetchall()

|

SQLAlchemy Select Statement

- Requires a list of one or more Tables or Columns

- Using a table will select all the columns in it

| stmt = select(census)

print(stmt)

Out: 'SELECT * from CENSUS'

|

Exercises

Selecting data from a Table: raw SQL

Using what we just learned about SQL and applying the .execute() method on our connection, we can leverage a raw SQL query to query all the records in our census table. The object returned by the .execute() method is a ResultProxy. On this ResultProxy, we can then use the .fetchall() method to get our results - that is, the ResultSet.

In this exercise, you’ll use a traditional SQL query. In the next exercise, you’ll move to SQLAlchemy and begin to understand its advantages. Go for it!

Instructions

- Build a SQL statement to query all the columns from census and store it in stmt. Note that your SQL statement must be a string.

- Use the .execute() and .fetchall() methods on connection and store the result in results. Remember that .execute() comes before .fetchall() and that stmt needs to be passed to .execute().

- Print results.

2024-04-22 Update Notes

As of SQLAlchemy 2.0, the .execute() method of a connection no longer accepts a plain Python string; passing one raises an ObjectNotExecutableError. The plain-string form was already deprecated in version 1.4. Raw SQL must instead be wrapped in the text() construct. text() also supports named bound parameters such as :state, which keep user-supplied values out of the SQL string and so help prevent SQL injection attacks.

In this revised code, text() is imported from sqlalchemy (at the top of the notebook) and is used to wrap the SQL statement. This ensures that SQLAlchemy treats the string as an executable SQL expression.
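
A minimal sketch of text() with bound parameters. It assumes an in-memory SQLite database with a two-column stand-in `census` table and two made-up rows, so it does not depend on the course file.

```python
from sqlalchemy import create_engine, text

engine = create_engine("sqlite:///:memory:")

with engine.begin() as conn:
    conn.execute(text("CREATE TABLE census (state TEXT, age INTEGER)"))
    conn.execute(
        text("INSERT INTO census (state, age) VALUES (:state, :age)"),
        [{"state": "Illinois", "age": 0}, {"state": "New York", "age": 1}],
    )

    # The value is passed separately from the SQL text
    # instead of being formatted into the string.
    stmt = text("SELECT state, age FROM census WHERE state = :state")
    rows = conn.execute(stmt, {"state": "New York"}).fetchall()

print(rows)
```

Only the New York row comes back, and the filter value never becomes part of the SQL string itself.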

| engine = create_engine(census_sql_data)

connection = engine.connect()

|

| # Build select statement for census table: stmt

stmt = text('SELECT * FROM census')

# Execute the statement and fetch the results: results

results = connection.execute(stmt).fetchall()

results[:5]

|

| [('Illinois', 'M', 0, 89600, 95012),

('Illinois', 'M', 1, 88445, 91829),

('Illinois', 'M', 2, 88729, 89547),

('Illinois', 'M', 3, 88868, 90037),

('Illinois', 'M', 4, 91947, 91111)]

|

Selecting data from a Table with SQLAlchemy

It’s now time to build your first select statement using SQLAlchemy. SQLAlchemy provides a nice “Pythonic” way of interacting with databases. So rather than dealing with the differences between specific dialects of traditional SQL such as MySQL or PostgreSQL, you can leverage the Pythonic framework of SQLAlchemy to streamline your workflow and more efficiently query your data. For this reason, it is worth learning even if you may already be familiar with traditional SQL.

In this exercise, you’ll once again build a statement to query all records from the census table. This time, however, you’ll make use of the select() function of the sqlalchemy module. This function requires a list of tables or columns as the only required argument.

Table and MetaData have already been imported. The metadata is available as metadata and the connection to the database as connection.

Instructions

- Import select from the sqlalchemy module.

- Reflect the census table. This code is already written for you.

- Create a query using the select() function to retrieve the census table. To do so, pass a list to select() containing a single element: census.

- Print stmt to see the actual SQL query being created. This code has been written for you.

- Using the provided print() function, print all the records from the census table. To do this:

- Use the .execute() method on connection with stmt as the argument to retrieve the ResultProxy.

- Use .fetchall() on connection.execute(stmt) to retrieve the ResultSet.

2024-04-22 Update Note

Since SQLAlchemy 1.4, select() takes tables or columns as positional arguments, for example select(census) or select(census.columns.state, census.columns.age). The older form that wrapped them in a list, select([census]), was deprecated in 1.4 and is no longer accepted in 2.0.

The code below therefore passes the table directly to select(), without enclosing it in brackets. This is the calling style that runs without errors on SQLAlchemy 2.0.
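
The positional calling style can be sketched without any database at all, since printing a statement only renders SQL. The `census` table below is declared by hand as a stand-in for the reflected one; `census.c` is the short alias for `census.columns`.

```python
from sqlalchemy import Column, Integer, MetaData, String, Table, select

metadata = MetaData()
census = Table(
    "census",
    metadata,
    Column("state", String(30)),
    Column("sex", String(1)),
    Column("age", Integer),
)

# Passing the table selects every column.
all_columns = select(census)

# Passing individual columns selects only those.
two_columns = select(census.c.state, census.c.age)

print(all_columns)
print(two_columns)
```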

| # Import select - at top of Notebook

engine = create_engine(census_sql_data)

connection = engine.connect()

metadata = MetaData()

metadata.reflect(engine)

# Access the 'census' table

census = metadata.tables['census']

# Build select statement for census table: stmt

stmt = select(census)

# Print the emitted statement to see the SQL emitted

print(stmt)

|

| SELECT census.state, census.sex, census.age, census.pop2000, census.pop2008

FROM census

|

| # Execute the statement and print the results

results = connection.execute(stmt).fetchall()

results[:5]

|

| [('Illinois', 'M', 0, 89600, 95012),

('Illinois', 'M', 1, 88445, 91829),

('Illinois', 'M', 2, 88729, 89547),

('Illinois', 'M', 3, 88868, 90037),

('Illinois', 'M', 4, 91947, 91111)]

|

Handling a ResultSet

Recall the differences between a ResultProxy and a ResultSet:

- ResultProxy: The object returned by the .execute() method. It can be used in a variety of ways to get the data returned by the query.

- ResultSet: The actual data asked for in the query when using a fetch method such as .fetchall() on a ResultProxy.

This separation between the ResultSet and ResultProxy allows us to fetch as much or as little data as we desire.

Once we have a ResultSet, we can use Python to access all the data within it by column name and by list style indexes. For example, you can get the first row of the results by using results[0]. With that first row then assigned to a variable first_row, you can get data from the first column by either using first_row[0] or by column name. The course wrote the latter as first_row[‘column_name’]; in SQLAlchemy 2.0 a row behaves like a named tuple, so use first_row.column_name or first_row._mapping[‘column_name’] instead. You’ll now practice exactly this using the ResultSet you obtained from the census table in the previous exercise. It is stored in the variable results. Enjoy!
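
The different ways of reading a value out of a row can be sketched as follows. The query selects literal values, so no table is needed; the in-memory SQLite engine is an assumption made to keep the example self-contained.

```python
from sqlalchemy import create_engine, text

engine = create_engine("sqlite:///:memory:")

with engine.connect() as conn:
    result = conn.execute(text("SELECT 'Illinois' AS state, 'M' AS sex, 0 AS age"))
    first_row = result.fetchone()

by_index = first_row[0]                  # position in the row
by_attribute = first_row.state           # named-tuple style access
by_key = first_row._mapping["state"]     # dictionary style access
as_dict = dict(first_row._mapping)       # whole row as a dictionary

print(by_index, by_attribute, by_key, as_dict)
```

All three access styles return the same value. The `._mapping` view is the SQLAlchemy 1.4 and 2.0 replacement for indexing a row directly with a column name.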

Instructions

- Extract the first row of results and assign it to the variable first_row.

- Print the value of the first column in first_row.

- Print the value of the ‘state’ column in first_row.

| # Get the first row of the results by using an index: first_row

first_row = results[0]

# Print the first row of the results

print(first_row)

# Print the first column of the first row by using an index

print(first_row[0])

# Print the 'state' column of the first row by using its name

# print(first_row['state']) # this does not work

# attribute access does work

print(first_row.state)

|

| ('Illinois', 'M', 0, 89600, 95012)

Illinois

Illinois

|

| # Create an SQLAlchemy Engine instance. This engine manages connections to the database.

# The `census_sql_data` should be a database URL that specifies database dialect and connection arguments.

engine = create_engine(census_sql_data)

# Create a session. This session establishes and maintains all conversations with the database.

# It represents a 'holding zone' for all the objects which you've loaded or associated with it during its lifespan.

session = Session(bind=engine)

# Create a MetaData instance. MetaData is a container object that keeps together many different features of a database (or multiple databases).

metadata = MetaData()

# Reflect the database schema into MetaData. This loads table definitions from the database automatically.

# The `bind=engine` argument tells MetaData which engine to use for connection.

metadata.reflect(bind=engine)

# Access the 'census' table object from the metadata. This dictionary-style access allows you to get a Table object.

# Each Table object is a member of the MetaData collection.

census = metadata.tables['census']

# Build a SELECT statement. `select(census)` constructs a simple query that selects all columns from the 'census' table.

stmt = select(census)

# Execute the SELECT statement using the session. This sends the SQL statement to the database and returns a result object.

result = session.execute(stmt)

# Fetch the first row of the result. `fetchone()` retrieves the next row of a query result set, returning a single sequence, or None if no more rows are available.

first_row = result.fetchone()

|

| # Print the type of the first row to verify it's an instance of `Row`, which allows indexed and attribute access (keyed access goes through `._mapping`).

print(type(first_row))

# Print the column names available in the result. This helps verify the structure of the returned rows and what columns can be accessed.

print(result.keys())

# Print the first row to see the data that has been fetched.

print(first_row)

# Print the value of the 'state' column accessed using attribute access. This is an alternative access method provided by SQLAlchemy for convenience.

print(first_row.state)

|

| <class 'sqlalchemy.engine.row.Row'>

RMKeyView(['state', 'sex', 'age', 'pop2000', 'pop2008'])

('Illinois', 'M', 0, 89600, 95012)

Illinois

|

Coming up Next…

- Beef up your SQL querying skills

- Learn how to extract all types of useful information from your databases using SQLAlchemy

- Learn how to create and write to relational databases

- Deep dive into the US census dataset

Applying Filtering, Ordering and Grouping to Queries

In this chapter, you will build on the database knowledge you began acquiring in the previous chapter by writing more nuanced queries that allow you to filter, order, and count your data, all within the Pythonic framework provided by SQLAlchemy!

Filtering and Targeted Data

Where Clauses

| stmt = select(census)

stmt = stmt.where(census.columns.state == 'California')

results = connection.execute(stmt).fetchall()

for result in results:

    print(result.state, result.age)

Out:

California 0

California 1

California 2

California 3

California 4

Calif

|

- Restrict data returned by a query based on boolean conditions

- Compare a column against a value or another column

- Often used comparisons: ‘==’, ‘<=’, ‘>=’, or ‘!=’
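
The two kinds of comparison listed above, column against value and column against column, might look like this in practice. The sketch uses an in-memory SQLite database and three rows whose figures are copied from the census output shown earlier in these notes; the reduced four-column table is an assumption for brevity.

```python
from sqlalchemy import Column, Integer, MetaData, String, Table, create_engine, insert, select

engine = create_engine("sqlite:///:memory:")
metadata = MetaData()
census = Table(
    "census",
    metadata,
    Column("state", String(30)),
    Column("age", Integer),
    Column("pop2000", Integer),
    Column("pop2008", Integer),
)
metadata.create_all(engine)

with engine.begin() as conn:
    conn.execute(insert(census), [
        {"state": "Illinois", "age": 0, "pop2000": 89600, "pop2008": 95012},
        {"state": "Illinois", "age": 4, "pop2000": 91947, "pop2008": 91111},
        {"state": "New York", "age": 0, "pop2000": 126237, "pop2008": 128088},
    ])

    # Column compared against a value.
    illinois = conn.execute(
        select(census).where(census.c.state == "Illinois")
    ).fetchall()

    # Column compared against another column.
    shrinking = conn.execute(
        select(census).where(census.c.pop2008 < census.c.pop2000)
    ).fetchall()

print(len(illinois), [(r.state, r.age) for r in shrinking])
```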

Expressions

- Provide more complex conditions than simple operators

- Eg. in_(), like(), between()

- Many more in documentation

- Available as method on a Column
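
A short sketch of the three column methods named above. The table, the ages, and the helper function `states_matching` are made up for illustration, and the database is an in-memory SQLite instance.

```python
from sqlalchemy import Column, Integer, MetaData, String, Table, create_engine, insert, select

engine = create_engine("sqlite:///:memory:")
metadata = MetaData()
census = Table("census", metadata, Column("state", String(30)), Column("age", Integer))
metadata.create_all(engine)


def states_matching(conn, condition):
    """Return the sorted state names of the rows that satisfy `condition`."""
    stmt = select(census.c.state).where(condition)
    return sorted(row.state for row in conn.execute(stmt))


with engine.begin() as conn:
    conn.execute(insert(census), [
        {"state": "New York", "age": 21},
        {"state": "New Jersey", "age": 50},
        {"state": "California", "age": 37},
        {"state": "Texas", "age": 5},
    ])

    in_list = states_matching(conn, census.c.state.in_(["California", "Texas"]))
    starts_with_new = states_matching(conn, census.c.state.like("New%"))
    age_20_to_40 = states_matching(conn, census.c.age.between(20, 40))

print(in_list, starts_with_new, age_20_to_40)
```

Each method returns an expression object, so it can be passed to where() exactly like a simple comparison.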

| stmt = select(census)

stmt = stmt.where(census.columns.state.startswith('New'))

for result in connection.execute(stmt):

    print(result.state, result.pop2000)

Out:

New Jersey 56983

New Jersey 56686

New Jersey 57011

...

|

Conjunctions

- Allow us to have multiple criteria in a where clause

- Eg. and_(), not_(), or_()

| from sqlalchemy import or_

stmt = select(census)

stmt = stmt.where(or_(census.columns.state == 'California',

                      census.columns.state == 'New York'))

for result in connection.execute(stmt):

    print(result.state, result.sex)

Out:

New York M

…

California F

|
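
As a side note to the conjunction functions, SQLAlchemy expressions also support the Python operators `&`, `|` and `~` as shorthand for and_(), or_() and not_(). The sketch below compares the two spellings on a hand-declared stand-in `census` table; no database is needed because the statements are only rendered.

```python
from sqlalchemy import Column, Integer, MetaData, String, Table, and_, not_, select

metadata = MetaData()
census = Table(
    "census",
    metadata,
    Column("state", String(30)),
    Column("sex", String(1)),
    Column("age", Integer),
)

# Function style.
with_functions = select(census).where(
    and_(census.c.state == "California", not_(census.c.sex == "M"))
)

# Operator style: each comparison needs its own parentheses,
# because & and ~ bind more tightly than == in Python.
with_operators = select(census).where(
    (census.c.state == "California") & ~(census.c.sex == "M")
)

print(with_functions)
print(with_operators)
```

Both statements render to the same SQL, so the choice between them is a matter of readability.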

Exercises

Connecting to a PostgreSQL Database

In these exercises, you will be working with real databases hosted on the cloud via Amazon Web Services (AWS)!

Let’s begin by connecting to a PostgreSQL database. When connecting to a PostgreSQL database, many prefer to use the psycopg2 database driver, as it supports practically all of PostgreSQL’s features efficiently and is the default driver for the PostgreSQL dialect in SQLAlchemy.

You might recall from Chapter 1 that we use the create_engine() function and a connection string to connect to a database.

There are four components to the connection string in this exercise: the dialect and driver (‘postgresql+psycopg2://’), followed by the username and password (‘student:datacamp’), followed by the host and port (‘@postgresql.csrrinzqubik.us-east-1.rds.amazonaws.com:5432/’), and finally, the database name (‘census’). You will have to pass this string as an argument to create_engine() in order to connect to the database.
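
To check how SQLAlchemy splits such a connection string into its parts, the URL can be parsed with make_url() without connecting to anything. This is a sketch for inspection only: it needs neither network access nor an installed psycopg2, and it makes no claim that the course database is still reachable.

```python
from sqlalchemy.engine import make_url

url = make_url(
    "postgresql+psycopg2://student:datacamp"
    "@postgresql.csrrinzqubik.us-east-1.rds.amazonaws.com:5432/census"
)

backend = url.get_backend_name()   # dialect
driver = url.get_driver_name()     # DBAPI driver
username = url.username
host = url.host
port = url.port
database = url.database

print(backend, driver, username, host, port, database)
```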

Instructions

- Import create_engine from sqlalchemy.

- Create an engine to the census database by concatenating the following strings:

- ‘postgresql+psycopg2://’

- ‘student:datacamp’

- ‘@postgresql.csrrinzqubik.us-east-1.rds.amazonaws.com’

- ‘:5432/census’

- Use the .table_names() method on engine to print the table names.

| # Use with local file

engine = create_engine(census_sql_data)

connection = engine.connect()

metadata = MetaData()

metadata.reflect(bind=engine)

census = metadata.tables['census']

|

| # Create an inspector object from the engine

inspector = inspect(engine)

# Use the inspector to list the table names

table_names = inspector.get_table_names()

print(table_names)

|

| ['census', 'state_fact']

|

| # Create an engine to the census database - exercise

# engine = create_engine('postgresql+psycopg2://student:datacamp@postgresql.csrrinzqubik.us-east-1.rds.amazonaws.com:5432/census')

# Use the .table_names() method on the engine to print the table names

# print(engine.table_names()) # this no longer works

|

Filter data selected from a Table - Simple

Having connected to the database, it’s now time to practice filtering your queries!

As mentioned in the video, a where() clause is used to filter the data that a statement returns. For example, to select all the records from the census table where the sex is Female (or ‘F’) we would do the following:

select(census).where(census.columns.sex == ‘F’)

In addition to == we can use basically any python comparison operator (such as <=, !=, etc) in the where() clause.

Instructions

- Select all records from the census table by passing in census as a list to select().

- Append a where clause to stmt to return only the records with a state of ‘New York’.

- Execute the statement stmt using .execute() and retrieve the results using .fetchall().

- Iterate over results and print the age, sex and pop2008 columns from each record. For example, you can print out the age of result with result.age.

| # Create a select query: stmt

stmt = select(census)

# Add a where clause to filter the results to only those for New York

stmt = stmt.where(census.columns.state == 'New York')

# Execute the query to retrieve all the data returned: results

results = connection.execute(stmt).fetchall()

# Loop over the results and print the age, sex, and pop2008

for i, result in enumerate(results):

    if i < 7:

        print(result.age, result.sex, result.pop2008)

|

| 0 M 128088

1 M 125649

2 M 121615

3 M 120580

4 M 122482

5 M 121205

6 M 120089

|

| [('New York', 'M', 0, 126237, 128088),

('New York', 'M', 1, 124008, 125649),

('New York', 'M', 2, 124725, 121615),

('New York', 'M', 3, 126697, 120580),

('New York', 'M', 4, 131357, 122482),

('New York', 'M', 5, 133095, 121205),

('New York', 'M', 6, 134203, 120089)]

|

Filter data selected from a Table - Expressions

In addition to standard Python comparators, we can also use methods such as in_() to create more powerful where() clauses. You can see a full list of expressions in the SQLAlchemy Documentation.

We’ve already created a list of some of the most densely populated states.

Instructions

- Select all records from the census table by passing it in as a list to select().

- Append a where clause to return all the records with a state in the states list. Use in_(states) on census.columns.state to do this.

- Loop over the ResultProxy connection.execute(stmt) and print the state and pop2000 columns from each record.

| stmt = stmt.where(census.columns.state.startswith('New'))

for result in connection.execute(stmt):

    print(result.state, result.pop2000)

|

| New York 126237

New York 124008

New York 124725

New York 126697

New York 131357

New York 133095

New York 134203

New York 137986

New York 139455

New York 142454

New York 145621

New York 138746

New York 135565

New York 132288

New York 132388

New York 131959

New York 130189

New York 132566

New York 132672

New York 133654

New York 132121

New York 126166

New York 123215

New York 121282

New York 118953

New York 123151

New York 118727

New York 122359

New York 128651

New York 140687

New York 149558

New York 139477

New York 138911

New York 139031

New York 145440

New York 156168

New York 153840

New York 152078

New York 150765

New York 152606

New York 159345

New York 148628

New York 147892

New York 144195

New York 139354

New York 141953

New York 131875

New York 128767

New York 125406

New York 124155

New York 125955

New York 118542

New York 118532

New York 124418

New York 95025

New York 92652

New York 90096

New York 95340

New York 83273

New York 77213

New York 77054

New York 72212

New York 70967

New York 66461

New York 64361

New York 64385

New York 58819

New York 58176

New York 57310

New York 57057

New York 57761

New York 53775

New York 53568

New York 51263

New York 48440

New York 46702

New York 43508

New York 40730

New York 37950

New York 35774

New York 32453

New York 26803

New York 25041

New York 21687

New York 18873

New York 88366

New York 120355

New York 118219

New York 119577

New York 121029

New York 125247

New York 128227

New York 128428

New York 131161

New York 133646

New York 135746

New York 138287

New York 131904

New York 129028

New York 126571

New York 125682

New York 125409

New York 122770

New York 123978

New York 125307

New York 127956

New York 129184

New York 124575

New York 123701

New York 124108

New York 122624

New York 127474

New York 123033

New York 128125

New York 134795

New York 146832

New York 152973

New York 144001

New York 143930

New York 144653

New York 151147

New York 159228

New York 159999

New York 157911

New York 156103

New York 159284

New York 163331

New York 155353

New York 153688

New York 151615

New York 146774

New York 148318

New York 139802

New York 138062

New York 134107

New York 134399

New York 136630

New York 130843

New York 130196

New York 136064

New York 106579

New York 104847

New York 101857

New York 108406

New York 94346

New York 88584

New York 88932

New York 82899

New York 82172

New York 77171

New York 76032

New York 76498

New York 70465

New York 71088

New York 70847

New York 71377

New York 74378

New York 70611

New York 70513

New York 69156

New York 68042

New York 68410

New York 64971

New York 61287

New York 58911

New York 56865

New York 54553

New York 46381

New York 45599

New York 40525

New York 37436

New York 226378

|

| states = ['New York', 'California', 'Texas']

# Create a query for the census table: stmt

stmt = select(census)

# Append a where clause to match all the states in_ the list states

stmt = stmt.where(census.columns.state.in_(states))

# Loop over the ResultProxy and print the state and its population in 2000

for i, result in enumerate(connection.execute(stmt)):

    if i < 7:

        print(result.state, result.pop2000)

|

| New York 126237

New York 124008

New York 124725

New York 126697

New York 131357

New York 133095

New York 134203

|

Filter data selected from a Table - Advanced

You’re really getting the hang of this! SQLAlchemy also allows users to use conjunctions such as and_(), or_(), and not_() to build more complex filtering. For example, we can get a set of records for people in New York who are 21 or 37 years old with the following code:

| select(census).where(and_(census.columns.state == 'New York',

or_(census.columns.age == 21,

census.columns.age == 37)))

|
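The conjunctions described above can be tried without the course database. The following is a minimal sketch, assuming a small hypothetical `people` table in an in-memory SQLite database (the table name and rows are invented for illustration and are not part of the census data):

```python
from sqlalchemy import (Column, Integer, MetaData, String, Table, and_,
                        create_engine, insert, not_, or_, select)

# Hypothetical stand-in for the census table, held in memory
engine = create_engine("sqlite:///:memory:")
metadata = MetaData()
people = Table(
    "people", metadata,
    Column("state", String(30)),
    Column("sex", String(1)),
    Column("age", Integer),
)
metadata.create_all(engine)

with engine.begin() as conn:
    conn.execute(insert(people), [
        {"state": "New York", "sex": "F", "age": 21},
        {"state": "New York", "sex": "M", "age": 37},
        {"state": "New York", "sex": "M", "age": 40},
        {"state": "California", "sex": "F", "age": 21},
    ])

# state is New York AND (age is 21 OR age is 37)
ny_stmt = select(people).where(
    and_(people.columns.state == "New York",
         or_(people.columns.age == 21, people.columns.age == 37))
)
# NOT (sex == 'M'); equivalent to writing sex != 'M'
not_male_stmt = select(people).where(not_(people.columns.sex == "M"))

with engine.connect() as conn:
    ny_matches = conn.execute(ny_stmt).fetchall()
    non_male = conn.execute(not_male_stmt).fetchall()

print(ny_matches)
print(non_male)
```

Nesting `or_()` inside `and_()` mirrors the parentheses you would write in SQL, which keeps the precedence explicit.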

Instructions

- Import and_ from the sqlalchemy module.

- Select all records from the census table.

- Append a where clause to filter all the records whose state is ‘California’, and whose sex is not ‘M’.

- Iterate over the ResultProxy and print the age and sex columns from each record.

| # Build a query for the census table: stmt

stmt = select(census)

# Append a where clause to select only non-male records from California using and_

# The state of California with a non-male sex

stmt = stmt.where(and_(census.columns.state == 'California',

census.columns.sex != 'M'))

# Loop over the ResultProxy printing the age and sex

for i, result in enumerate(connection.execute(stmt)):

    if i < 7:

        print(result.age, result.sex)

|

| 0 F

1 F

2 F

3 F

4 F

5 F

6 F

|

Ordering Query Results

Order by Clauses

- Allows us to control the order in which records are returned in the query results

- Available as a method on statements order_by()

| print(results[:10])

Out: [('Illinois',), …]

stmt = select(census.columns.state)

stmt = stmt.order_by(census.columns.state)

results = connection.execute(stmt).fetchall()

print(results[:10])

Out: [('Alabama',), …]

|

Order by Descending

- Wrap the column with desc() in the order_by() clause

Order by Multiple

- Just separate multiple columns with a comma

- Orders completely by the first column

- Then if there are duplicates in the first column, orders by the second column

- repeat until all columns are ordered

| print(results)

Out:

('Alabama', 'M')

stmt = select(census.columns.state, census.columns.sex)

stmt = stmt.order_by(census.columns.state, census.columns.sex)

results = connection.execute(stmt).first()

print(results)

Out:

('Alabama', 'F')

('Alabama', 'F')

…

('Alabama', 'M')

|

Exercises

| # Use with local file

engine = create_engine(census_sql_data)

connection = engine.connect()

metadata = MetaData()

metadata.reflect(engine)

# Reflect the 'census' table via engine

census = Table('census', metadata, autoload_with=engine)

|

Ordering by a Single Column

To sort the result output by a field, we use the .order_by() method. By default, the .order_by() method sorts from lowest to highest on the supplied column. You just have to pass in the name of the column you want sorted to .order_by().

In the video, for example, Jason used stmt.order_by(census.columns.state) to sort the result output by the state column.

Instructions

- Select all records of the state column from the census table. To do this, pass census.columns.state directly to select(); SQLAlchemy 2.x takes columns as positional arguments rather than as a list.

- Append an .order_by() to sort the result output by the state column.

- Execute stmt using the .execute() method on connection and retrieve all the results using .fetchall().

- Print the first 10 rows of results.

| # Build a query to select the state column: stmt

stmt = select(census.columns.state)

# Order stmt by the state column

stmt = stmt.order_by(census.columns.state)

# Execute the query and store the results: results

results = connection.execute(stmt).fetchall()

# Print the first 10 results

results[:10]

|

| [('Alabama',),

('Alabama',),

('Alabama',),

('Alabama',),

('Alabama',),

('Alabama',),

('Alabama',),

('Alabama',),

('Alabama',),

('Alabama',)]

|

Ordering in Descending Order by a Single Column

You can also use .order_by() to sort from highest to lowest by wrapping a column in the desc() function. Although you haven’t seen this function in action, it generalizes what you have already learned.

Pass desc() (for “descending”) inside an .order_by() with the name of the column you want to sort by. For instance, stmt.order_by(desc(table.columns.column_name)) sorts column_name in descending order.

Instructions

- Import desc from the sqlalchemy module.

- Select all records of the state column from the census table.

- Append an .order_by() to sort the result output by the state column in descending order. Save the result as rev_stmt.

- Execute rev_stmt using connection.execute() and fetch all the results with .fetchall(). Save them as rev_results.

- Print the first 10 rows of rev_results.

| # Build a query to select the state column: stmt

stmt = select(census.columns.state)

# Order stmt by state in descending order: rev_stmt

rev_stmt = stmt.order_by(desc(census.columns.state))

# Execute the query and store the results: rev_results

rev_results = connection.execute(rev_stmt).fetchall()

# Print the first 10 rev_results

rev_results[:10]

|

| [('Wyoming',),

('Wyoming',),

('Wyoming',),

('Wyoming',),

('Wyoming',),

('Wyoming',),

('Wyoming',),

('Wyoming',),

('Wyoming',),

('Wyoming',)]

|

Ordering by Multiple Columns

We can pass multiple arguments to the .order_by() method to order by multiple columns. In fact, we can also sort in ascending or descending order for each individual column. Each column in the .order_by() method is fully sorted from left to right. This means that the first column is completely sorted, and then within each matching group of values in the first column, it’s sorted by the next column in the .order_by() method. This process is repeated until all the columns in the .order_by() are sorted.

Instructions

- Select all records of the state and age columns from the census table.

- Use .order_by() to sort the output of the state column in ascending order and age in descending order. (NOTE: desc is already imported).

- Execute stmt using the .execute() method on connection and retrieve all the results using .fetchall().

- Print the first 20 results.

| # Build a query to select state and age: stmt

stmt = select(census.columns.state, census.columns.age)

# Append order by to ascend by state and descend by age

stmt = stmt.order_by(census.columns.state, desc(census.columns.age))

# Execute the statement and store all the records: results

results = connection.execute(stmt).fetchall()

# Print the first 20 results

results[:20]

|

| [('Alabama', 85),

('Alabama', 85),

('Alabama', 84),

('Alabama', 84),

('Alabama', 83),

('Alabama', 83),

('Alabama', 82),

('Alabama', 82),

('Alabama', 81),

('Alabama', 81),

('Alabama', 80),

('Alabama', 80),

('Alabama', 79),

('Alabama', 79),

('Alabama', 78),

('Alabama', 78),

('Alabama', 77),

('Alabama', 77),

('Alabama', 76),

('Alabama', 76)]

|
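To see the left-to-right sorting on data small enough to check by eye, here is a minimal sketch. It assumes a hypothetical `ages` table in an in-memory SQLite database; the rows are invented and deliberately inserted out of order:

```python
from sqlalchemy import (Column, Integer, MetaData, String, Table,
                        create_engine, desc, insert, select)

engine = create_engine("sqlite:///:memory:")
metadata = MetaData()
# Hypothetical table used only for this illustration
ages = Table("ages", metadata,
             Column("state", String(30)),
             Column("age", Integer))
metadata.create_all(engine)

with engine.begin() as conn:
    conn.execute(insert(ages), [
        {"state": "Texas", "age": 30},
        {"state": "Alabama", "age": 10},
        {"state": "Texas", "age": 50},
        {"state": "Alabama", "age": 85},
    ])

# state ascending first, then age descending within each state
stmt = select(ages.columns.state, ages.columns.age).order_by(
    ages.columns.state, desc(ages.columns.age))

with engine.connect() as conn:
    ordered = [tuple(row) for row in conn.execute(stmt)]

print(ordered)
# [('Alabama', 85), ('Alabama', 10), ('Texas', 50), ('Texas', 30)]
```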

Counting, Summing and Grouping Data

SQL Functions

- E.g. Count, Sum

- from sqlalchemy import func

- More efficient than processing in Python

- Aggregate data

Sum Example

| from sqlalchemy import func

stmt = select(func.sum(census.columns.pop2008))

results = connection.execute(stmt).scalar()

print(results)

Out: 302876613

|

Group by

- Allows us to group rows by common values

| stmt = select(census.columns.sex, func.sum(census.columns.pop2008))

stmt = stmt.group_by(census.columns.sex)

results = connection.execute(stmt).fetchall()

print(results)

Out: [('F', 153959198), ('M', 148917415)]

|

- Supports multiple columns to group by with a pattern similar to order_by()

- Requires all selected columns to be grouped or aggregated by a function

Group by Multiple

| stmt = select(census.columns.sex, census.columns.age, func.sum(census.columns.pop2008))

stmt = stmt.group_by(census.columns.sex, census.columns.age)

results = connection.execute(stmt).fetchall()

print(results)

Out:

[('F', 0, 2105442), ('F', 1, 2087705), ('F', 2, 2037280), ('F', 3,

2012742), ('F', 4, 2014825), ('F', 5, 1991082), ('F', 6, 1977923),

('F', 7, 2005470), ('F', 8, 1925725), …

|

Handling ResultSets from Functions

- SQLAlchemy auto generates “column names” for functions in the ResultSet

- The column names are often func_# such as count_1

- Replace them with the label() method

Using label()

| print(results[0]._mapping.keys())

Out: RMKeyView(['sex', 'sum_1'])

stmt = select(census.columns.sex, func.sum(census.columns.pop2008).label('pop2008_sum'))

stmt = stmt.group_by(census.columns.sex)

results = connection.execute(stmt).fetchall()

print(results[0]._mapping.keys())

Out: RMKeyView(['sex', 'pop2008_sum'])

|
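Under SQLAlchemy 2.x the column names can be read either from the result object or from a row's `_mapping` view. The sketch below shows both alongside `label()`, assuming a hypothetical `pop` table in an in-memory SQLite database with invented figures:

```python
from sqlalchemy import (Column, Integer, MetaData, String, Table,
                        create_engine, func, insert, select)

engine = create_engine("sqlite:///:memory:")
metadata = MetaData()
# Hypothetical table used only for this illustration
pop = Table("pop", metadata,
            Column("sex", String(1)),
            Column("pop2008", Integer))
metadata.create_all(engine)

with engine.begin() as conn:
    conn.execute(insert(pop), [
        {"sex": "F", "pop2008": 10},
        {"sex": "F", "pop2008": 5},
        {"sex": "M", "pop2008": 7},
    ])

# label() replaces the generated column name with a readable one
stmt = (
    select(pop.columns.sex,
           func.sum(pop.columns.pop2008).label("pop2008_sum"))
    .group_by(pop.columns.sex)
    .order_by(pop.columns.sex)
)

with engine.connect() as conn:
    result = conn.execute(stmt)
    result_keys = list(result.keys())   # names from the result object
    rows = result.fetchall()

row_keys = list(rows[0]._mapping.keys())  # names from a single row
print(result_keys, row_keys, rows[0].pop2008_sum)
```

The label also becomes an attribute on each row, so `rows[0].pop2008_sum` reads more clearly than positional indexing.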

Exercises

| # Use with local file

engine = create_engine(census_sql_data)

connection = engine.connect()

metadata = MetaData()

metadata.reflect(engine)

# Reflect the 'census' table via engine

census = Table('census', metadata, autoload_with=engine)

|

Counting Distinct Data

As mentioned in the video, SQLAlchemy’s func module provides access to built-in SQL functions that can make operations like counting and summing faster and more efficient.

In the video, Jason used func.sum() to get a sum of the pop2008 column of census as shown below:

| select(func.sum(census.columns.pop2008))

|

If instead you want to count the number of values in pop2008, you could use func.count() like this:

| select(func.count(census.columns.pop2008))

|

Furthermore, if you only want to count the distinct values of pop2008, you can use the .distinct() method:

| select(func.count(census.columns.pop2008.distinct()))

|

In this exercise, you will practice using func.count() and .distinct() to get a count of the distinct number of states in census.

So far, you’ve seen .fetchall() and .first() used on a ResultProxy to get the results. The ResultProxy also has a method called .scalar() for getting just the value of a query that returns only one row and column.

This can be very useful when you are querying for just a count or sum.
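As a small illustration of `.scalar()` combined with `func.count()` and `.distinct()`, here is a sketch that assumes a hypothetical `visits` table in an in-memory SQLite database (names and rows are invented):

```python
from sqlalchemy import (Column, MetaData, String, Table,
                        create_engine, func, insert, select)

engine = create_engine("sqlite:///:memory:")
metadata = MetaData()
# Hypothetical table used only for this illustration
visits = Table("visits", metadata, Column("state", String(30)))
metadata.create_all(engine)

with engine.begin() as conn:
    conn.execute(insert(visits), [
        {"state": "Texas"},
        {"state": "Texas"},
        {"state": "Ohio"},
        {"state": "Ohio"},
    ])

count_all = select(func.count(visits.columns.state))
count_distinct = select(func.count(visits.columns.state.distinct()))

with engine.connect() as conn:
    # scalar() returns the single value instead of a list of rows
    total_rows = conn.execute(count_all).scalar()
    distinct_states = conn.execute(count_distinct).scalar()

print(total_rows, distinct_states)  # 4 2
```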

Instructions

- Build a select statement to count the distinct values in the state field of census.

- Execute stmt to get the count and store the results as distinct_state_count.

- Print the value of distinct_state_count.

| # Build a query to count the distinct states values: stmt

stmt = select(func.count(census.columns.state.distinct()))

# Execute the query and store the scalar result: distinct_state_count

distinct_state_count = connection.execute(stmt).scalar()

# Print the distinct_state_count

distinct_state_count

|

Count of Records by State

Often, we want a count of the records that share a particular value in some column, for example the number of records per state. The .group_by() method handles this type of query: pass it a column, and use an aggregate function such as sum() or count() in the select statement. Much like .order_by(), .group_by() accepts multiple columns as arguments.

Instructions

- Import func from sqlalchemy.

- Build a select statement to get the value of the state field and a count of the values in the age field, and store it as stmt.

- Use the .group_by() method to group the statement by the state column.

- Execute stmt using the connection to get the count and store the results as results.

- Print the keys/column names of the results. The course used results[0].keys(); with SQLAlchemy 2.x, call .keys() on the result object instead, as the code below does.

| # Create an SQLAlchemy Engine instance. This engine manages connections to the database.

# The `census_sql_data` should be a database URL that specifies database dialect and connection arguments.

engine = create_engine(census_sql_data)

# Create a session. This session establishes and maintains all conversations with the database.

# It represents a 'holding zone' for all the objects which you've loaded or associated with it during its lifespan.

session = Session(bind=engine)

# Create a MetaData instance. MetaData is a container object that keeps together many different features of a database (or multiple databases).

metadata = MetaData()

# Reflect the database schema into MetaData. This loads table definitions from the database automatically.

# The `bind=engine` argument tells MetaData which engine to use for connection.

metadata.reflect(bind=engine)

# Access the 'census' table object from the metadata. This dictionary-style access allows you to get a Table object.

# Each Table object is a member of the MetaData collection.

census = metadata.tables['census']

# Build a SELECT statement that returns each state together with a count of its age values.

stmt = select(census.columns.state, func.count(census.columns.age))

# Group stmt by state

stmt = stmt.group_by(census.columns.state)

# Execute the SELECT statement using the session. This sends the SQL statement to the database and returns a result object.

result = session.execute(stmt)

rows = result.fetchall()

# Take the first row from the list returned by fetchall(); rows[0] is a Row object.

first_row = rows[0]

|

| # Print the column names available in the result. This helps verify the structure of the returned rows and what columns can be accessed.

print(result.keys())

# Print some rows

print(rows[:5])

# Print the type of the first row to verify it's an instance of `Row`, which allows both indexed and keyed access.

print(type(first_row))

print(first_row.state)

print(first_row.count_1)

|

| RMKeyView(['state', 'count_1'])

[('Alabama', 172), ('Alaska', 172), ('Arizona', 172), ('Arkansas', 172), ('California', 172)]

<class 'sqlalchemy.engine.row.Row'>

Alabama

172

|

result = session.execute(stmt) is not the same as result = connection.execute(stmt).fetchall()

type(session.execute(stmt)) → sqlalchemy.engine.cursor.CursorResult

type(connection.execute(stmt).fetchall()) → list

Determining the Population Sum by State

To avoid confusion with query result column names like count_1, we can use the .label() method to provide a name for the resulting column. It is appended to the function call we are using, and its argument is the name we want to use.

We can pair func.sum() with .group_by() to get a sum of the population by State and use the label() method to name the output.

We can also create the func.sum() expression before using it in the select statement. We do it the same way we would inside the select statement and store it in a variable. Then we use that variable in the select statement where the func.sum() would normally be.

Instructions

- Import func from sqlalchemy.

- Build an expression to calculate the sum of the values in the pop2008 field labeled as ‘population’.

- Build a select statement to get the value of the state field and the sum of the values in pop2008.

- Group the statement by state using a .group_by() method.

- Execute stmt using the connection to get the sums and store the results as results.

- Print the keys/column names of the results. The course used results[0].keys(); with SQLAlchemy 2.x, call results.keys() on the result object, as the code below does.

| # Build an expression to calculate the sum of pop2008 labeled as population

pop2008_sum = func.sum(census.columns.pop2008).label('population')

# Build a query to select the state and sum of pop2008: stmt

stmt = select(census.columns.state, pop2008_sum)

# Group stmt by state

stmt = stmt.group_by(census.columns.state)

# Execute the statement and store all the records: results

results = connection.execute(stmt)

# Print the keys/column names of the results returned

print(results.keys())

rows = results.fetchall()

# Print results

rows[:5]

|

| RMKeyView(['state', 'population'])

[('Alabama', 4649367),

('Alaska', 664546),

('Arizona', 6480767),

('Arkansas', 2848432),

('California', 36609002)]

|

SQLAlchemy and Pandas for Visualization

SQLAlchemy and Pandas

- DataFrame can take a SQLAlchemy ResultSet

- Make sure to set the DataFrame columns to the ResultSet keys

DataFrame Example

| import pandas as pd

df = pd.DataFrame(results)

df.columns = results[0].keys()

print(df)

Out:

sex pop2008_sum

0 F 2105442

1 F 2087705

2 F 2037280

3 F 2012742

4 F 2014825

5 F 1991082

|
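The slide code above reflects the older API. A version that should run on SQLAlchemy 2.x takes the column names from the result object instead. This is a minimal sketch, assuming pandas is installed and using a hypothetical `census_demo` table in an in-memory SQLite database with invented figures:

```python
import pandas as pd
from sqlalchemy import (Column, Integer, MetaData, String, Table,
                        create_engine, desc, func, insert, select)

engine = create_engine("sqlite:///:memory:")
metadata = MetaData()
# Hypothetical table used only for this illustration
demo = Table("census_demo", metadata,
             Column("state", String(30)),
             Column("pop2008", Integer))
metadata.create_all(engine)

with engine.begin() as conn:
    conn.execute(insert(demo), [
        {"state": "Texas", "pop2008": 20},
        {"state": "Texas", "pop2008": 4},
        {"state": "Alaska", "pop2008": 1},
    ])

population = func.sum(demo.columns.pop2008).label("population")
stmt = (
    select(demo.columns.state, population)
    .group_by(demo.columns.state)
    .order_by(desc(population))
)

with engine.connect() as conn:
    result = conn.execute(stmt)
    # Read the column names from the result object before fetching rows
    column_names = list(result.keys())
    df = pd.DataFrame(result.fetchall(), columns=column_names)

print(df)
```

Passing `columns=` at construction avoids a second step to rename the default integer column labels.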

Graphing

- We can graph just like we would normally

Graphing Example

| import matplotlib.pyplot as plt

df[10:20].plot.barh()

plt.show()

|

Exercises

| # Use with local file

engine = create_engine(census_sql_data)

connection = engine.connect()

metadata = MetaData()

metadata.reflect(bind=engine)

census = metadata.tables['census']

# Build an expression to calculate the sum of pop2008 labeled as population

pop2008_sum = func.sum(census.columns.pop2008).label('population')

# Build a query to select the state and sum of pop2008: stmt

stmt = select(census.columns.state, pop2008_sum)

stmt = stmt.order_by(desc(pop2008_sum))

# Group stmt by state

stmt = stmt.group_by(census.columns.state)

# Execute the statement and store all the records: results

results = connection.execute(stmt)

rows = results.fetchall()

# Print results

rows[:5]

|

| [('California', 36609002),

('Texas', 24214127),

('New York', 19465159),

('Florida', 18257662),

('Illinois', 12867077)]

|

SQLAlchemy ResultProxy and Pandas DataFrames

We can feed a ResultProxy directly into a pandas DataFrame, which is the workhorse of many Data Scientists in PythonLand. Jason demonstrated this in the video. In this exercise, you’ll follow exactly the same approach to convert a ResultProxy into a DataFrame.

Instructions

- Import pandas as pd.

- Create a DataFrame df using pd.DataFrame() on the ResultProxy results.

- Set the columns of the DataFrame to the result's column names. The course used results[0].keys(); with SQLAlchemy 2.x, the code below passes columns=results.keys() from the result object instead.

- Print the DataFrame.

| # Create a DataFrame from the results: df

df = pd.DataFrame(rows, columns=results.keys())

# Print the Dataframe

df.head()

|

| | state | population |
|---|---|---|
| 0 | California | 36609002 |
| 1 | Texas | 24214127 |
| 2 | New York | 19465159 |
| 3 | Florida | 18257662 |
| 4 | Illinois | 12867077 |

From SQLAlchemy results to a Graph

We can also take advantage of pandas and Matplotlib to build figures of our data. Remember that data visualization is essential for both exploratory data analysis and communication of your data!

Instructions

- Import matplotlib.pyplot as plt.

- Create a DataFrame df using pd.DataFrame() on the provided results.

- Set the columns of the DataFrame to the result's column names. The course used results[0].keys(); with SQLAlchemy 2.x, the code below passes columns=results.keys() from the result object instead.

- Print the DataFrame df.

- Use the plot.bar() method on df to create a bar plot of the results.

- Display the plot with plt.show().

| df = pd.DataFrame(rows, columns=results.keys())

# Print the DataFrame

df.head()

|

| | state | population |
|---|---|---|
| 0 | California | 36609002 |
| 1 | Texas | 24214127 |
| 2 | New York | 19465159 |
| 3 | Florida | 18257662 |
| 4 | Illinois | 12867077 |

| # Plot the DataFrame

ax = df.plot(x='state', kind='barh', figsize=(7, 10))

|

Advanced SQLAlchemy Queries

Herein, you will learn to perform advanced - and incredibly useful - queries that will enable you to interact with your data in powerful ways.

Calculating Values in a Query

Math Operators

- addition +

- subtraction -

- multiplication *

- division /

- modulus %

- Work differently on different data types

Calculating Difference

| stmt = select(census.columns.age, (census.columns.pop2008 - census.columns.pop2000).label('pop_change'))

stmt = stmt.group_by(census.columns.age)

stmt = stmt.order_by(desc('pop_change'))

stmt = stmt.limit(5)

results = connection.execute(stmt).fetchall()

print(results)

Out: [(61, 52672), (85, 51901), (54, 50808), (58, 45575), (60, 44915)]

|

Case Statement

- Used to treat data differently based on a condition

- Accepts a list of conditions to match and a column to return if the condition matches

- The list of conditions ends with an else clause to determine what to do when a record doesn’t match any prior conditions

Case Example

| from sqlalchemy import case

stmt = select(func.sum(case((census.columns.state == 'New York', census.columns.pop2008), else_=0)))

results = connection.execute(stmt).fetchall()

print(results)

Out:[(19465159,)]

|

Cast Statement

- Converts data to another type

- Useful for converting

- integers to floats for division

- strings to dates and times

- Accepts a column or expression and the target Type

Percentage Example

| from sqlalchemy import case, cast, Float

stmt = select((func.sum(case((census.columns.state == 'New York', census.columns.pop2008), else_=0)) /

cast(func.sum(census.columns.pop2008), Float) * 100).label('ny_percent'))

results = connection.execute(stmt).fetchall()

print(results)

Out: [(Decimal('6.4267619765'),)]

|

Examples

Connecting to a MySQL Database

Before you jump into the calculation exercises, let’s begin by connecting to our database. Recall that in the last chapter you connected to a PostgreSQL database. Now, you’ll connect to a MySQL database, for which many prefer to use the pymysql database driver, which, like psycopg2 for PostgreSQL, you have to install prior to use.

This connection string is going to start with ‘mysql+pymysql://’, indicating which dialect and driver you’re using to establish the connection. The dialect block is followed by the ‘username:password’ combo. Next, you specify the host and port with the following ‘@host:port/’. Finally, you wrap up the connection string with the ‘database_name’.

Now you’ll practice connecting to a MySQL database: it will be the same census database that you have already been working with. One of the great things about SQLAlchemy is that, after connecting, it abstracts over the type of database it has connected to and you can write the same SQLAlchemy code, regardless!
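The pieces of the connection string described above can also be assembled with SQLAlchemy's `URL.create()` rather than by string concatenation. The sketch below only builds and prints the URL; it does not open a connection. The host name and password are placeholders, not the course's real server:

```python
from sqlalchemy.engine import URL

# Placeholder credentials and host, for illustration only
url = URL.create(
    drivername="mysql+pymysql",   # dialect + driver
    username="student",
    password="secret",
    host="db.example.com",
    port=3306,
    database="census",
)

# hide_password=False renders the full string that create_engine() would accept
connection_string = url.render_as_string(hide_password=False)
print(connection_string)
# mysql+pymysql://student:secret@db.example.com:3306/census

print(url.get_backend_name(), url.get_driver_name())  # mysql pymysql
```

Building the URL from parts also takes care of escaping special characters in the password, which is easy to get wrong when concatenating by hand.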

Instructions

- Import the create_engine function from the sqlalchemy library.

- Create an engine to the census database by concatenating the following strings and passing them to create_engine():

- ‘mysql+pymysql://’ (the dialect and driver).

- ‘student:datacamp’ (the username and password).

- ‘@courses.csrrinzqubik.us-east-1.rds.amazonaws.com:3306/’ (the host and port).

- ‘census’ (the database name).

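- Print the table names. The course used engine.table_names(); that method is not available in SQLAlchemy 2.x, so the code below uses inspect(engine).get_table_names() instead.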

| # Use with local file

engine2 = create_engine(census_sql_data)

# Create an inspector object from the engine

inspector = inspect(engine2)

# Use the inspector to list the table names

table_names = inspector.get_table_names()

print('Engine Table Names: \n', table_names)

connection = engine2.connect()

metadata = MetaData()

metadata.reflect(bind=engine2)

census = metadata.tables['census']

print('\nCensus:')

census

|

| Engine Table Names:

['census', 'state_fact']

Census:

Table('census', MetaData(), Column('state', VARCHAR(length=30), table=<census>), Column('sex', VARCHAR(length=1), table=<census>), Column('age', INTEGER(), table=<census>), Column('pop2000', INTEGER(), table=<census>), Column('pop2008', INTEGER(), table=<census>), schema=None)

|

| # Use for remote connection

# Create an engine to the census database

engine = create_engine('mysql+pymysql://student:datacamp@courses.csrrinzqubik.us-east-1.rds.amazonaws.com:3306/census')

inspector = inspect(engine)

table_names = inspector.get_table_names()

# Print the table names

print('Engine Table Names: \n', table_names)

|

| Engine Table Names:

['census', 'state_fact']

|

| # Copy and run as code to use with remote engine

connection = engine.connect()

metadata = MetaData()

census = Table('census', metadata, autoload_with=engine)

print('\nCensus:')

census

|

Calculating a Difference between Two Columns

Often, you’ll need to perform math operations as part of a query, such as if you wanted to calculate the change in population from 2000 to 2008. For math operations on numbers, the operators in SQLAlchemy work the same way as they do in Python.

You can use these operators to perform addition (+), subtraction (-), multiplication (*), division (/), and modulus (%) operations. Note: They behave differently when used with non-numeric column types.

Let’s now find the top 5 states by population growth between 2000 and 2008.

Instructions

- Define a select statement called stmt to return:

- i) The state column of the census table (census.columns.state).

- ii) The difference in population count between 2008 (census.columns.pop2008) and 2000 (census.columns.pop2000) labeled as ‘pop_change’.

- Group the statement by census.columns.state.

- Order the statement by population change (‘pop_change’) in descending order. Do so by passing it desc(‘pop_change’).

- Use the .limit() method on the statement to return only 5 records.

- Execute the statement and fetchall() the records.

- The print statement has already been written for you. Hit ‘Submit Answer’ to view the results!

| # Build query to return state names by population difference from 2008 to 2000: stmt

stmt = select(census.columns.state, (census.columns.pop2008 - census.columns.pop2000).label('pop_change'))

# Append group by for the state: stmt

stmt = stmt.group_by(census.columns.state)

# Append order by for pop_change descendingly: stmt

stmt = stmt.order_by(desc('pop_change'))

# Return only 5 results: stmt

stmt = stmt.limit(5)

# Use connection to execute the statement and fetch all results

results = connection.execute(stmt).fetchall()

# Print the state and population change for each record

for result in results:

    print(f'{result.state}:{result.pop_change}')

|

| Texas:40137

California:35406

Florida:21954

Arizona:14377

Georgia:13357

|
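The query above groups by state but leaves pop2008 - pop2000 outside any aggregate function, so on SQLite each group appears to report the difference from a single row rather than a state total. If the goal is the total change per state, one option is to wrap the difference in `func.sum()`. This is a sketch under that assumption, using a hypothetical `census_demo` table in an in-memory SQLite database with invented figures:

```python
from sqlalchemy import (Column, Integer, MetaData, String, Table,
                        create_engine, desc, func, insert, select)

engine = create_engine("sqlite:///:memory:")
metadata = MetaData()
# Hypothetical table used only for this illustration
demo = Table("census_demo", metadata,
             Column("state", String(30)),
             Column("pop2000", Integer),
             Column("pop2008", Integer))
metadata.create_all(engine)

with engine.begin() as conn:
    conn.execute(insert(demo), [
        {"state": "Texas", "pop2000": 100, "pop2008": 150},
        {"state": "Texas", "pop2000": 200, "pop2008": 260},
        {"state": "California", "pop2000": 300, "pop2008": 340},
        {"state": "California", "pop2000": 50, "pop2008": 60},
        {"state": "Ohio", "pop2000": 10, "pop2008": 5},
    ])

# Sum the per-row differences so every row in a state contributes
pop_change = func.sum(
    demo.columns.pop2008 - demo.columns.pop2000).label("pop_change")

stmt = (
    select(demo.columns.state, pop_change)
    .group_by(demo.columns.state)
    .order_by(desc("pop_change"))
    .limit(2)
)

with engine.connect() as conn:
    top_two = [tuple(row) for row in conn.execute(stmt)]

print(top_two)  # [('Texas', 110), ('California', 50)]
```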

Determining the Overall Percentage of Females

It’s possible to combine functions and operators in a single select statement as well. These combinations can be exceptionally handy when we want to calculate percentages or averages, and we can also use the case() expression to operate on data that meets specific criteria while not affecting the query as a whole. The case() expression accepts a list of conditions to match and the column to return if the condition matches, followed by an else_ if none of the conditions match. We can wrap this entire expression in any function or math operation we like.

Often when performing integer division, we want to get a float back. While some databases will do this automatically, you can use the cast() function to convert an expression to a particular type.

Instructions

- Import case, cast, and Float from sqlalchemy.

- Build an expression female_pop2000 to calculate female population in 2000. To achieve this:

- Use case() inside func.sum().

- The first argument of case() is a (condition, value) tuple; SQLAlchemy 2.x takes these tuples positionally rather than wrapped in a list. The tuple contains:

- i) A boolean checking that census.columns.sex is equal to ‘F’.

- ii) The column census.columns.pop2000.

- The second argument is the else_ condition, which should be set to 0.

- Calculate the total population in 2000 and use cast() to convert it to Float.

- Build a query to calculate the percentage of females in 2000. To do this, divide female_pop2000 by total_pop2000 and multiply by 100.

- Execute the query and print percent_female.

| # import case, cast and Float from sqlalchemy - at top of notebook

# Build an expression to calculate female population in 2000

female_pop2000 = func.sum(case((census.columns.sex == 'F', census.columns.pop2000), else_=0))

# Cast an expression to calculate total population in 2000 to Float

total_pop2000 = cast(func.sum(census.columns.pop2000), Float)

# Build a query to calculate the percentage of females in 2000: stmt

stmt = select(female_pop2000 / total_pop2000 * 100)

# Execute the query and store the scalar result: percent_female

percent_female = connection.execute(stmt).scalar()

percent_female

|
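The same case() and cast() pattern can be checked on figures small enough to verify by hand. This is a minimal sketch, assuming a hypothetical `census_demo` table in an in-memory SQLite database; the populations are invented so that females make up exactly a quarter of the total:

```python
from sqlalchemy import (Column, Float, Integer, MetaData, String, Table,
                        case, cast, create_engine, func, insert, select)

engine = create_engine("sqlite:///:memory:")
metadata = MetaData()
# Hypothetical table used only for this illustration
demo = Table("census_demo", metadata,
             Column("sex", String(1)),
             Column("pop2000", Integer))
metadata.create_all(engine)

with engine.begin() as conn:
    conn.execute(insert(demo), [
        {"sex": "F", "pop2000": 30},
        {"sex": "M", "pop2000": 70},
        {"sex": "F", "pop2000": 20},
        {"sex": "M", "pop2000": 80},
    ])

# Count pop2000 only where sex is 'F'; every other row contributes 0
female_pop = func.sum(
    case((demo.columns.sex == "F", demo.columns.pop2000), else_=0))
# Cast the denominator to Float so the division is not integer division
total_pop = cast(func.sum(demo.columns.pop2000), Float)

stmt = select((female_pop / total_pop * 100).label("percent_female"))

with engine.connect() as conn:
    raw_value = conn.execute(stmt).scalar()

# The value may come back as a float or a Decimal depending on backend
# and types, so normalize it before comparing or printing
percent_female = float(raw_value)
print(percent_female)  # 25.0
```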

SQL Relationships

Relationships

- Allow us to avoid duplicate data

- Make it easy to change things in one place

- Useful to break out information from a table we don’t need very often

Relationships

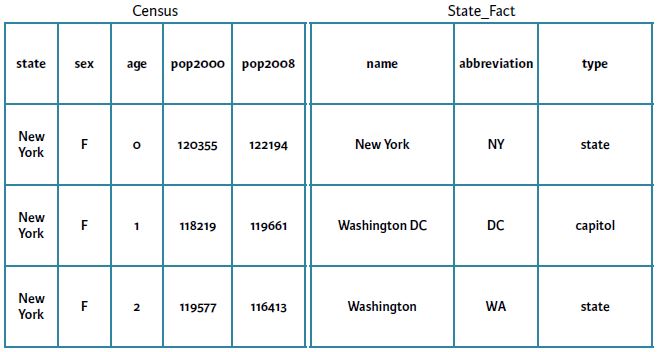

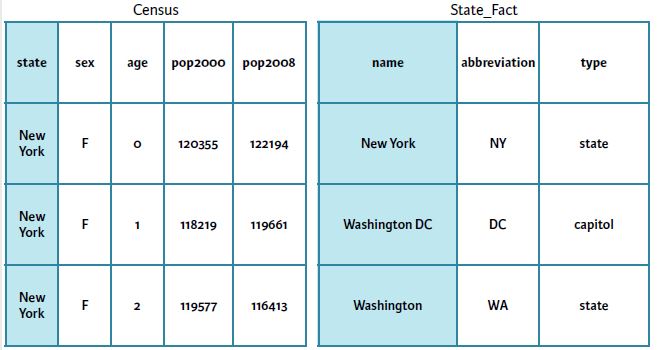

Automatic Joins

| stmt = select(census.columns.pop2008, state_fact.columns.abbreviation)

results = connection.execute(stmt).fetchall()

print(results)

Out:

[(95012, u'IL'),

(95012, u'NJ'),

(95012, u'ND'),

(95012, u'OR'),

(95012, u'DC'),

(95012, u'WI'),

…

|

Join

- Accepts a Table and an optional expression that explains how the two tables are related

- The expression is not needed if the relationship is predefined and available via reflection

- Comes immediately after the select() clause and prior to any where(), order_by or group_by() clauses

Select_from

- Used to replace the default, derived FROM clause with a join

- Wraps the join() clause

Select_from Example

| stmt = select(func.sum(census.columns.pop2000))

stmt = stmt.select_from(census.join(state_fact))

stmt = stmt.where(state_fact.columns.circuit_court == '10')

result = connection.execute(stmt).scalar()

print(result)

Out: 14945252

|

Joining Tables without Predefined Relationship

- Join accepts a Table and an optional expression that explains how the two tables are related

- Will only join on data that match between the two columns

- Avoid joining on columns of different types

Select_from Example

| stmt = select(func.sum(census.columns.pop2000))

stmt = stmt.select_from(census.join(state_fact, census.columns.state == state_fact.columns.name))

stmt = stmt.where(state_fact.columns.census_division_name == 'East South Central')

result = connection.execute(stmt).scalar()

print(result)

Out: 16982311

|
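The explicit-condition join above can be reproduced on a toy schema. The sketch below assumes two hypothetical tables, `census_demo` and `state_fact_demo`, in an in-memory SQLite database; no foreign key is defined, so the join condition is passed to `join()` by hand:

```python
from sqlalchemy import (Column, Integer, MetaData, String, Table,
                        create_engine, func, insert, select)

engine = create_engine("sqlite:///:memory:")
metadata = MetaData()
# Hypothetical tables used only for this illustration
census_demo = Table("census_demo", metadata,
                    Column("state", String(30)),
                    Column("pop2000", Integer))
state_fact_demo = Table("state_fact_demo", metadata,
                        Column("name", String(30)),
                        Column("census_division_name", String(30)))
metadata.create_all(engine)

with engine.begin() as conn:
    conn.execute(insert(census_demo), [
        {"state": "Alabama", "pop2000": 100},
        {"state": "Alabama", "pop2000": 50},
        {"state": "Kentucky", "pop2000": 30},
        {"state": "Texas", "pop2000": 500},
    ])
    conn.execute(insert(state_fact_demo), [
        {"name": "Alabama", "census_division_name": "East South Central"},
        {"name": "Kentucky", "census_division_name": "East South Central"},
        {"name": "Texas", "census_division_name": "West South Central"},
    ])

# Join on matching state names, then filter on a column of the second table
joined = census_demo.join(
    state_fact_demo,
    census_demo.columns.state == state_fact_demo.columns.name)

stmt = (
    select(func.sum(census_demo.columns.pop2000))
    .select_from(joined)
    .where(state_fact_demo.columns.census_division_name
           == "East South Central")
)

with engine.connect() as conn:
    division_pop = conn.execute(stmt).scalar()

print(division_pop)  # 180
```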

Examples

Automatic Joins with an Established Relationship

If you have two tables that already have an established relationship, you can automatically use that relationship by just adding the columns we want from each table to the select statement. Recall that Jason constructed the following query:

```python
stmt = select(census.columns.pop2008, state_fact.columns.abbreviation)
```

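in order to join the census and state_fact tables and select the pop2008 column from the first and the abbreviation column from the second. In this case, the census and state_fact tables had a predefined relationship: the state column of the former corresponded to the name column of the latter. Note that the state_fact table reflected below shows no foreign key, so under SQLAlchemy 2.0 this statement pairs every census row with every state_fact row (a Cartesian product), which is what the SAWarning in this exercise's output reports; an explicit join() is needed to actually match rows.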

In this exercise, you’ll use the same predefined relationship to select the pop2000 and abbreviation columns!

Instructions

- Build a statement to join the census and state_fact tables and select the pop2000 column from the first and the abbreviation column from the second.

- Execute the statement to get the first result and save it as result.

- Hit ‘Submit Answer’ to loop over the keys of the result object, and print the key and value for each!

```python
# SQLAlchemy 2.0 removed the autoload flag; autoload_with alone triggers reflection
state_fact = Table('state_fact', metadata, autoload_with=engine2)
state_fact
```

```
Table('state_fact', MetaData(), Column('id', VARCHAR(length=256), table=<state_fact>), Column('name', VARCHAR(length=256), table=<state_fact>), Column('abbreviation', VARCHAR(length=256), table=<state_fact>), Column('country', VARCHAR(length=256), table=<state_fact>), Column('type', VARCHAR(length=256), table=<state_fact>), Column('sort', VARCHAR(length=256), table=<state_fact>), Column('status', VARCHAR(length=256), table=<state_fact>), Column('occupied', VARCHAR(length=256), table=<state_fact>), Column('notes', VARCHAR(length=256), table=<state_fact>), Column('fips_state', VARCHAR(length=256), table=<state_fact>), Column('assoc_press', VARCHAR(length=256), table=<state_fact>), Column('standard_federal_region', VARCHAR(length=256), table=<state_fact>), Column('census_region', VARCHAR(length=256), table=<state_fact>), Column('census_region_name', VARCHAR(length=256), table=<state_fact>), Column('census_division', VARCHAR(length=256), table=<state_fact>), Column('census_division_name', VARCHAR(length=256), table=<state_fact>), Column('circuit_court', VARCHAR(length=256), table=<state_fact>), schema=None)
```

```python
# Build a statement to join census and state_fact tables: stmt
stmt = select(census.columns.pop2000, state_fact.columns.abbreviation)

# Execute the statement and get the first result: result
result = connection.execute(stmt)

# Fetch the first row
row = result.fetchone()

# Loop over the keys in the result object and print the key and value
for key in result.keys():
    print(key, getattr(row, key))
```

```
pop2000 89600
abbreviation IL
C:\Users\trenton\AppData\Local\Temp\ipykernel_54724\3953660652.py:5: SAWarning: SELECT statement has a cartesian product between FROM element(s) "census" and FROM element "state_fact". Apply join condition(s) between each element to resolve.
  result = connection.execute(stmt)
```
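The SAWarning above asks for a join condition between the two tables. One possible way to supply it, and to read the first row by key, is sketched below. It assumes hypothetical in-memory tables (`census_demo`, `state_fact_demo`) with made-up rows, and uses `Row._mapping` as an alternative to `getattr(row, key)`:

```python
from sqlalchemy import (Column, Integer, MetaData, String, Table,
                        create_engine, insert, select)

engine = create_engine("sqlite:///:memory:")
metadata = MetaData()

# Hypothetical stand-ins for the course tables
census_demo = Table(
    "census_demo", metadata,
    Column("state", String),
    Column("pop2000", Integer),
)
state_fact_demo = Table(
    "state_fact_demo", metadata,
    Column("name", String),
    Column("abbreviation", String),
)
metadata.create_all(engine)

with engine.begin() as conn:
    conn.execute(insert(census_demo), [{"state": "Illinois", "pop2000": 89600}])
    conn.execute(insert(state_fact_demo), [
        {"name": "Illinois", "abbreviation": "IL"},
        {"name": "Oregon", "abbreviation": "OR"},
    ])

# Same two columns as the exercise, but with an explicit join condition
stmt = (
    select(census_demo.c.pop2000, state_fact_demo.c.abbreviation)
    .select_from(
        census_demo.join(
            state_fact_demo, census_demo.c.state == state_fact_demo.c.name
        )
    )
)

with engine.connect() as conn:
    row = conn.execute(stmt).first()
    # Row._mapping exposes the row as a read-only mapping of key -> value
    pairs = dict(row._mapping)

for key, value in pairs.items():
    print(key, value)
```

Because the ON clause ties each census row to its own state, the "Oregon" row never pairs with the Illinois population figure.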

Joins

If you aren’t selecting columns from both tables, or the two tables don’t have a defined relationship, you can still use the .join() method on a table to join it with another table and get extra data related to your query. join() takes the table object you want to join as the first argument and a condition that indicates how the tables are related as the second argument. Finally, you use the .select_from() method on the select statement to wrap the join clause. For example, in the video, Jason executed the following code to join the census table to the state_fact table such that the state column of the census table corresponded to the name column of the state_fact table.

1

2