Introduction and Setup

Synopsis

The code cells below have been updated for pandas v2.2.2, so some code differs slightly from the associated instructions; these differences are minor.

Here is a concise synopsis of each major section within the document:

Preparing Data

- Discusses various techniques for importing multiple files into DataFrames.

- Focuses on how to use Indexes to share information between DataFrames.

- Covers essential commands for reading data files, such as pd.read_csv(), and highlights their importance for subsequent merging tasks.

Concatenating Data

- Explores database-style operations to append and concatenate DataFrames using real-world datasets.

- Describes methods like .append() and .concat(), which stack rows or join DataFrames along an axis.

- Some instructions reference .append(), which was removed in pandas 2.0 in favor of pd.concat().

- Emphasizes the handling of indices during the concatenation process and introduces the concept of hierarchical indexing.

Merging Data

- Provides an in-depth look at merging techniques in pandas, including different types of joins (left, right, inner, outer).

- Explains the use of the merge() function to align rows using one or more columns.

- Discusses ordered merging, which is particularly useful when dealing with columns that have a natural ordering, like dates.

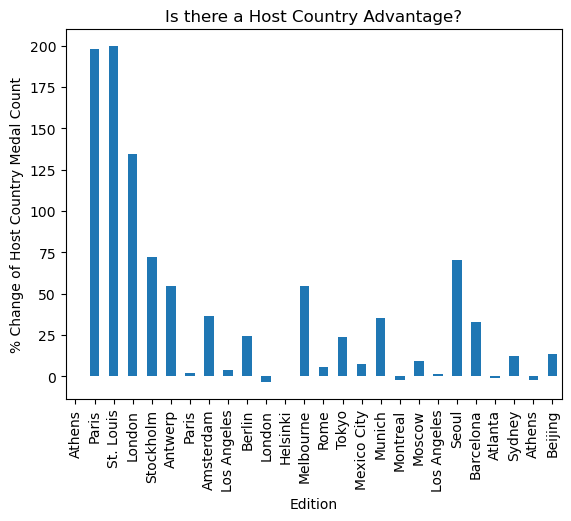

Case Study - Summer Olympics

- Applies previously discussed DataFrame skills to analyze Olympic medal data, integrating lessons from both the current and prior pandas courses.

- Uses the dataset of Summer Olympic medalists from 1896 to 2008 to showcase data manipulation capabilities in pandas.

Each section builds on the knowledge from the previous one, culminating in a practical case study that uses all the DataFrame manipulation techniques discussed.

Imports

```python
import pandas as pd
import matplotlib.pyplot as plt
import numpy as np
from pprint import pprint as pp
import csv
from pathlib import Path
import yfinance as yf
```

```python
print(f'Pandas Version: {pd.__version__}')
print(f'Matplotlib Version: {plt.matplotlib.__version__}')
print(f'Numpy Version: {np.__version__}')
print(f'Yahoo Finance Version: {yf.__version__}')
```

```
Pandas Version: 2.2.2
Matplotlib Version: 3.8.4
Numpy Version: 1.26.4
Yahoo Finance Version: 0.2.38
```

Pandas Configuration Options

```python
pd.set_option('display.max_columns', 200)
pd.set_option('display.max_rows', 300)
pd.set_option('display.expand_frame_repr', True)
```

Data Files Location

Data File Objects

```python
data = Path.cwd() / 'data' / 'merging-dataframes-with-pandas'
auto_fuel_file = data / 'auto_fuel_efficiency.csv'
baby_1881_file = data / 'baby_names1881.csv'
baby_1981_file = data / 'baby_names1981.csv'
exch_rates_file = data / 'exchange_rates.csv'
gdp_china_file = data / 'gdp_china.csv'
gdp_usa_file = data / 'gdp_usa.csv'
oil_price_file = data / 'oil_price.csv'
pitts_file = data / 'pittsburgh_weather_2013.csv'
sales_feb_hardware_file = data / 'sales-feb-Hardware.csv'
sales_feb_service_file = data / 'sales-feb-Service.csv'
sales_feb_software_file = data / 'sales-feb-Software.csv'
sales_jan_2015_file = data / 'sales-jan-2015.csv'
sales_feb_2015_file = data / 'sales-feb-2015.csv'
sales_mar_2015_file = data / 'sales-mar-2015.csv'
sp500_file = data / 'sp500.csv'
so_bronze_file = data / 'summer_olympics_Bronze.csv'
so_bronze5_file = data / 'summer_olympics_bronze_top5.csv'
so_gold_file = data / 'summer_olympics_Gold.csv'
so_gold5_file = data / 'summer_olympics_gold_top5.csv'
so_silver_file = data / 'summer_olympics_Silver.csv'
so_silver5_file = data / 'summer_olympics_silver_top5.csv'
so_all_medalists_file = data / 'summer_olympics_medalists 1896 to 2008 - ALL MEDALISTS.tsv'
so_editions_file = data / 'summer_olympics_medalists 1896 to 2008 - EDITIONS.tsv'
so_ioc_codes_file = data / 'summer_olympics_medalists 1896 to 2008 - IOC COUNTRY CODES.csv'
```

Course Description

As a Data Scientist, you’ll often find that the data you need is not in a single file. It may be spread across a number of text files, spreadsheets, or databases. You want to be able to import the data of interest as a collection of DataFrames and figure out how to combine them to answer your central questions. This course is all about the act of combining, or merging, DataFrames, an essential part of any working Data Scientist’s toolbox. You’ll hone your pandas skills by learning how to organize, reshape, and aggregate multiple data sets to answer your specific questions.

Preparing Data

In this chapter, you’ll learn about different techniques you can use to import multiple files into DataFrames. Having imported your data into individual DataFrames, you’ll then learn how to share information between DataFrames using their Indexes. Understanding how Indexes work is essential for merging DataFrames later in the course.

Reading multiple data files

- pd.read_csv() for CSV files

  - df = pd.read_csv(filepath)

  - dozens of optional input parameters

- Other data import tools:

  - pd.read_excel()

  - pd.read_html()

  - pd.read_json()

Loading separate files

```python
import pandas as pd

dataframe0 = pd.read_csv('sales-jan-2015.csv')
dataframe1 = pd.read_csv('sales-feb-2015.csv')
```

Using a loop

```python
filenames = ['sales-jan-2015.csv', 'sales-feb-2015.csv']

dataframes = []
for f in filenames:
    dataframes.append(pd.read_csv(f))
```

Using a comprehension

```python
filenames = ['sales-jan-2015.csv', 'sales-feb-2015.csv']
dataframes = [pd.read_csv(f) for f in filenames]
```

Using glob

```python
from glob import glob

filenames = glob('sales*.csv')
dataframes = [pd.read_csv(f) for f in filenames]
```
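One detail the glob example leaves implicit: glob() returns matches in an arbitrary, filesystem-dependent order. The sketch below assumes two tiny hypothetical sales files written to a temporary directory (their contents are made up) and sorts the matches so the list of DataFrames always comes out in the same order.

```python
import tempfile
from glob import glob
from pathlib import Path

import pandas as pd

# Write two tiny, hypothetical sales files into a throwaway directory
tmp = Path(tempfile.mkdtemp())
(tmp / 'sales-jan-2015.csv').write_text('Company,Units\nHooli,3\nInitech,7\n')
(tmp / 'sales-feb-2015.csv').write_text('Company,Units\nHooli,5\n')

# sorted() makes the order deterministic instead of filesystem-dependent
filenames = sorted(glob(str(tmp / 'sales*.csv')))
dataframes = [pd.read_csv(f) for f in filenames]

for f, df in zip(filenames, dataframes):
    print(Path(f).name, df.shape)
```

Note that sorted() gives alphabetical order, so 'sales-feb' lands before 'sales-jan'; if chronological order matters, sort with a key that parses the month instead.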

Exercises

Reading DataFrames from multiple files

When data is spread among several files, you usually invoke pandas’ read_csv() (or a similar data import function) multiple times to load the data into several DataFrames.

The data files for this example have been derived from a list of Olympic medals awarded between 1896 & 2008 compiled by the Guardian.

The column labels of each DataFrame are NOC, Country, & Total where NOC is a three-letter code for the name of the country and Total is the number of medals of that type won (bronze, silver, or gold).

Instructions

- Import pandas as pd.

- Read the file 'Bronze.csv' into a DataFrame called bronze.

- Read the file 'Silver.csv' into a DataFrame called silver.

- Read the file 'Gold.csv' into a DataFrame called gold.

- Print the first 5 rows of the DataFrame gold. This has been done for you, so hit 'Submit Answer' to see the results.

```python
# Read 'Bronze.csv' into a DataFrame: bronze
bronze = pd.read_csv(so_bronze_file)

# Read 'Silver.csv' into a DataFrame: silver
silver = pd.read_csv(so_silver_file)

# Read 'Gold.csv' into a DataFrame: gold
gold = pd.read_csv(so_gold_file)

# Print the first five rows of gold
gold.head()
```

| | NOC | Country | Total |
|---|---|---|---|
| 0 | USA | United States | 2088.0 |
| 1 | URS | Soviet Union | 838.0 |
| 2 | GBR | United Kingdom | 498.0 |
| 3 | FRA | France | 378.0 |
| 4 | GER | Germany | 407.0 |

Reading DataFrames from multiple files in a loop

As you saw in the video, loading data from multiple files into DataFrames is more efficient in a loop or a list comprehension.

Notice that this approach is not restricted to working with CSV files. That is, even if your data comes in other formats, as long as pandas has a suitable data import function, you can apply a loop or comprehension to generate a list of DataFrames imported from the source files.

Here, you’ll continue working with The Guardian’s Olympic medal dataset.

Instructions

- Create a list of file names called filenames with three strings 'Gold.csv', 'Silver.csv', & 'Bronze.csv'. This has been done for you.

- Use a for loop to create another list called dataframes containing the three DataFrames loaded from filenames:

- Iterate over filenames.

- Read each CSV file in filenames into a DataFrame and append it to dataframes by using pd.read_csv() inside a call to .append().

- Print the first 5 rows of the first DataFrame of the list dataframes. This has been done for you, so hit ‘Submit Answer’ to see the results.

```python
# Create the list of file names: filenames
filenames = [so_bronze_file, so_silver_file, so_gold_file]

# Create the list of three DataFrames: dataframes
dataframes = []
for filename in filenames:
    dataframes.append(pd.read_csv(filename))

# Print top 5 rows of 1st DataFrame in dataframes
dataframes[0].head()
```

| | NOC | Country | Total |
|---|---|---|---|
| 0 | USA | United States | 1052.0 |
| 1 | URS | Soviet Union | 584.0 |
| 2 | GBR | United Kingdom | 505.0 |
| 3 | FRA | France | 475.0 |
| 4 | GER | Germany | 454.0 |
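The same loop or comprehension works with any pandas reader. As a minimal sketch, assuming the medal tables arrived as JSON records instead of CSV (the two in-memory payloads are hypothetical stand-ins for files, reusing the USA and Soviet Union bronze and silver totals shown above), only the reader function changes:

```python
from io import StringIO

import pandas as pd

# Hypothetical JSON payloads standing in for files on disk
sources = [
    StringIO('[{"NOC": "USA", "Total": 1052.0}, {"NOC": "URS", "Total": 584.0}]'),
    StringIO('[{"NOC": "USA", "Total": 1195.0}, {"NOC": "URS", "Total": 627.0}]'),
]

# Same comprehension pattern as with read_csv; only the reader changes
dataframes = [pd.read_json(src, orient='records') for src in sources]
print(dataframes[1])
```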

Combining DataFrames from multiple data files

In this exercise, you’ll combine the three DataFrames from earlier exercises - gold, silver, & bronze - into a single DataFrame called medals. The approach you’ll use here is clumsy. Later on in the course, you’ll see various powerful methods that are frequently used in practice for concatenating or merging DataFrames.

Remember, the column labels of each DataFrame are NOC, Country, and Total, where NOC is a three-letter code for the name of the country and Total is the number of medals of that type won.

Instructions

- Construct a copy of the DataFrame gold called medals using the .copy() method.

- Create a list called new_labels with entries 'NOC', 'Country', & 'Gold'. This is the same as the column labels from gold with the column label 'Total' replaced by 'Gold'.

- Rename the columns of medals by assigning new_labels to medals.columns.

- Create new columns 'Silver' and 'Bronze' in medals using silver['Total'] & bronze['Total'].

- Print the top 5 rows of the final DataFrame medals. This has been done for you, so hit ‘Submit Answer’ to see the result!

```python
# Make a copy of gold: medals
medals = gold.copy()

# Create list of new column labels: new_labels
new_labels = ['NOC', 'Country', 'Gold']

# Rename the columns of medals using new_labels
medals.columns = new_labels

# Add columns 'Silver' & 'Bronze' to medals
medals['Silver'] = silver['Total']
medals['Bronze'] = bronze['Total']

# Print the head of medals
medals.head()
```

| | NOC | Country | Gold | Silver | Bronze |
|---|---|---|---|---|---|
| 0 | USA | United States | 2088.0 | 1195.0 | 1052.0 |
| 1 | URS | Soviet Union | 838.0 | 627.0 | 584.0 |
| 2 | GBR | United Kingdom | 498.0 | 591.0 | 505.0 |
| 3 | FRA | France | 378.0 | 461.0 | 475.0 |
| 4 | GER | Germany | 407.0 | 350.0 | 454.0 |
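The column assignments above give correct results only because gold, silver, and bronze list the countries in the same row order: assigning a Series to a column aligns on index labels, and with the default integer index those labels are just row positions. A small sketch with two-row excerpts of the medal tables, deliberately listed in different orders, shows what goes wrong and how indexing by NOC fixes it:

```python
import pandas as pd

# Two-row excerpts of the medal tables, with the rows in different orders
gold = pd.DataFrame({'NOC': ['USA', 'URS'], 'Total': [2088.0, 838.0]})
silver = pd.DataFrame({'NOC': ['URS', 'USA'], 'Total': [627.0, 1195.0]})

# Default integer index: label 0 is USA in gold but URS in silver,
# so USA silently receives the Soviet Union's silver count
wrong = gold.copy()
wrong['Silver'] = silver['Total']

# Aligning on NOC instead puts each count next to the right country
right = gold.set_index('NOC')
right['Silver'] = silver.set_index('NOC')['Total']

print(wrong, right, sep='\n\n')
```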

```python
del bronze, silver, gold, dataframes, medals
```

Reindexing DataFrames

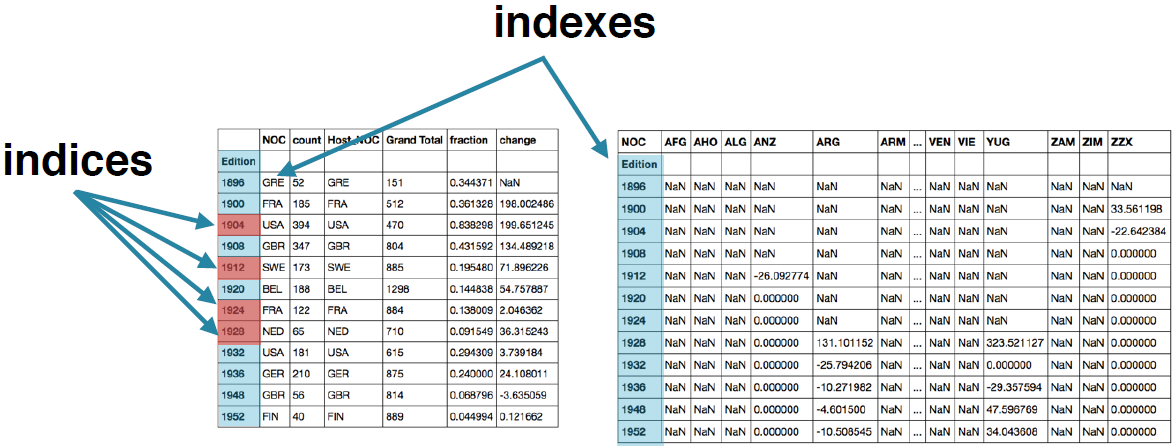

“Indexes” vs. “Indices”

- indices: many index labels within Index data structures

- indexes: many pandas Index data structures

Importing weather data

```python
import pandas as pd

w_mean = pd.read_csv('quarterly_mean_temp.csv', index_col='Month')
w_max = pd.read_csv('quarterly_max_temp.csv', index_col='Month')
```

Examining the data

```python
print(w_mean)
```

```
       Mean TemperatureF
Month
Apr            61.956044
Jan            32.133333
Jul            68.934783
Oct            43.434783
```

```python
print(w_max)
```

```
       Max TemperatureF
Month
Jan                  68
Apr                  89
Jul                  91
Oct                  84
```

The DataFrame indexes

```python
print(w_mean.index)
print(w_max.index)
print(type(w_mean.index))
```

```
Index(['Apr', 'Jan', 'Jul', 'Oct'], dtype='object', name='Month')
Index(['Jan', 'Apr', 'Jul', 'Oct'], dtype='object', name='Month')
<class 'pandas.core.indexes.base.Index'>
```

Using .reindex()

```python
ordered = ['Jan', 'Apr', 'Jul', 'Oct']
w_mean2 = w_mean.reindex(ordered)
print(w_mean2)
```

```
       Mean TemperatureF
Month
Jan            32.133333
Apr            61.956044
Jul            68.934783
Oct            43.434783
```

Using .sort_index()

```python
w_mean2.sort_index()
```

```
       Mean TemperatureF
Month
Apr            61.956044
Jan            32.133333
Jul            68.934783
Oct            43.434783
```

Reindex from a DataFrame Index

```python
w_mean.reindex(w_max.index)
```

```
       Mean TemperatureF
Month
Jan            32.133333
Apr            61.956044
Jul            68.934783
Oct            43.434783
```

Reindexing with missing labels

```python
w_mean3 = w_mean.reindex(['Jan', 'Apr', 'Dec'])
print(w_mean3)
```

```
       Mean TemperatureF
Month
Jan            32.133333
Apr            61.956044
Dec                  NaN
```
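By default, labels missing from the original Index come back as NaN. A small sketch, rebuilding w_mean inline from the values printed above, shows the fill_value parameter of .reindex() substituting a placeholder instead:

```python
import pandas as pd

# w_mean rebuilt from the values printed above
w_mean = pd.DataFrame(
    {'Mean TemperatureF': [61.956044, 32.133333, 68.934783, 43.434783]},
    index=pd.Index(['Apr', 'Jan', 'Jul', 'Oct'], name='Month'),
)

# Labels absent from the original index become NaN by default...
with_nan = w_mean.reindex(['Jan', 'Apr', 'Dec'])

# ...or take an explicit placeholder via fill_value
with_fill = w_mean.reindex(['Jan', 'Apr', 'Dec'], fill_value=0.0)

print(with_nan, with_fill, sep='\n\n')
```

Pick the placeholder carefully: 0.0 is a real Fahrenheit temperature, so for this data NaN is usually the more honest marker.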

Reindex from a DataFrame Index

```python
w_max.reindex(w_mean3.index)
```

```
       Max TemperatureF
Month
Jan                68.0
Apr                89.0
Dec                 NaN
```

```python
w_max.reindex(w_mean3.index).dropna()
```

```
       Max TemperatureF
Month
Jan                68.0
Apr                89.0
```
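Reindexing and then dropping NaN rows is one way to keep only the labels two Indexes share. The sketch below, with w_max rebuilt inline from the values above, compares it with filtering the labels first and selecting them with .loc; the names labels, present, via_reindex, and via_loc are illustrative.

```python
import pandas as pd

# w_max rebuilt from the values printed above
w_max = pd.DataFrame(
    {'Max TemperatureF': [68, 89, 91, 84]},
    index=pd.Index(['Jan', 'Apr', 'Jul', 'Oct'], name='Month'),
)
labels = ['Jan', 'Apr', 'Dec']

# Route 1: reindex (Dec -> NaN, column upcast to float), then drop the NaN row
via_reindex = w_max.reindex(labels).dropna()

# Route 2: keep only labels that exist, then select them with .loc
present = [lab for lab in labels if lab in w_max.index]
via_loc = w_max.loc[present]

print(via_reindex, via_loc, sep='\n\n')
```

Two differences are worth knowing: the reindex route upcasts the integer column to float because of the temporary NaN, and its dropna() would also discard rows that were already NaN in the original data.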

Order matters

```python
w_max.reindex(w_mean.index)
```

```
       Max TemperatureF
Month
Apr                  89
Jan                  68
Jul                  91
Oct                  84
```

```python
w_mean.reindex(w_max.index)
```

```
       Mean TemperatureF
Month
Jan            32.133333
Apr            61.956044
Jul            68.934783
Oct            43.434783
```

Exercises

Sorting DataFrame with the Index & columns

It is often useful to rearrange the sequence of the rows of a DataFrame by sorting. You don’t have to implement these yourself; the principal methods for doing this are .sort_index() and .sort_values().

In this exercise, you’ll use these methods with a DataFrame of temperature values indexed by month names. You’ll sort the rows alphabetically using the Index and numerically using a column. Notice, for this data, the original ordering is probably most useful and intuitive: the purpose here is for you to understand what the sorting methods do.

Instructions

- Read 'monthly_max_temp.csv' into a DataFrame called weather1 with 'Month' as the index.

- Sort the index of weather1 in alphabetical order using the .sort_index() method and store the result in weather2.

- Sort the index of weather1 in reverse alphabetical order by specifying the additional keyword argument ascending=False inside .sort_index().

- Use the .sort_values() method to sort weather1 in increasing numerical order according to the values of the column 'Max TemperatureF'.

```python
monthly_max_temp = {'Month': ['Jan', 'Feb', 'Mar', 'Apr', 'May', 'Jun', 'Jul', 'Aug', 'Sep', 'Oct', 'Nov', 'Dec'],
                    'Max TemperatureF': [68, 60, 68, 84, 88, 89, 91, 86, 90, 84, 72, 68]}
```

```python
# Read 'monthly_max_temp.csv' into a DataFrame: weather1
# weather1 = pd.read_csv('monthly_max_temp.csv', index_col='Month')
weather1 = pd.DataFrame.from_dict(monthly_max_temp)
weather1.set_index('Month', inplace=True)

# Print the head of weather1
print(weather1.head())

# Sort the index of weather1 in alphabetical order: weather2
weather2 = weather1.sort_index()

# Print the head of weather2
print(weather2.head())

# Sort the index of weather1 in reverse alphabetical order: weather3
weather3 = weather1.sort_index(ascending=False)

# Print the head of weather3
print(weather3.head())

# Sort weather1 numerically using the values of 'Max TemperatureF': weather4
weather4 = weather1.sort_values(by='Max TemperatureF')

# Print the head of weather4
print(weather4.head())
```

```
       Max TemperatureF
Month
Jan                  68
Feb                  60
Mar                  68
Apr                  84
May                  88
       Max TemperatureF
Month
Apr                  84
Aug                  86
Dec                  68
Feb                  60
Jan                  68
       Max TemperatureF
Month
Sep                  90
Oct                  84
Nov                  72
May                  88
Mar                  68
       Max TemperatureF
Month
Feb                  60
Jan                  68
Mar                  68
Dec                  68
Nov                  72
```
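Alphabetical order is rarely what you want for month names. Assuming pandas 1.1 or later (where .sort_index() gained a key argument), the sketch below uses a five-month excerpt of the temperatures above and sorts chronologically by mapping each abbreviation to a month number; month_number is a hypothetical helper, not part of the dataset.

```python
import pandas as pd

# Five-month excerpt of the monthly maxima above, deliberately out of order
weather1 = pd.DataFrame(
    {'Max TemperatureF': [84, 68, 60, 91, 68]},
    index=pd.Index(['Apr', 'Jan', 'Feb', 'Jul', 'Mar'], name='Month'),
)

# Hypothetical helper: month abbreviation -> month number
month_number = {m: i for i, m in enumerate(
    ['Jan', 'Feb', 'Mar', 'Apr', 'May', 'Jun',
     'Jul', 'Aug', 'Sep', 'Oct', 'Nov', 'Dec'], start=1)}

# key= transforms the index before comparing, so the sort is chronological
chronological = weather1.sort_index(key=lambda idx: idx.map(month_number))

# kind='stable' keeps ties (Jan and Mar are both 68) in their current order
by_temp = chronological.sort_values('Max TemperatureF', kind='stable')

print(chronological, by_temp, sep='\n\n')
```

The default sort_values() algorithm is not guaranteed to be stable, so ties such as Jan, Mar, and Dec in weather4 above may appear in any order; kind='stable' makes the tie order predictable.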

Reindexing DataFrame from a list

Sorting methods are not the only way to change DataFrame Indexes. There is also the .reindex() method.

In this exercise, you’ll reindex a DataFrame of quarterly-sampled mean temperature values to contain monthly samples (this is an example of upsampling or increasing the rate of samples, which you may recall from the pandas Foundations course).

The original data has the first month’s abbreviation of the quarter (three-month interval) on the Index, namely Apr, Jan, Jul, and Oct. This data has been loaded into a DataFrame called weather1 and has been printed in its entirety in the IPython Shell. Notice it has only four rows (corresponding to the first month of each quarter) and that the rows are not sorted chronologically.

You’ll initially use a list of all twelve month abbreviations and subsequently apply the .ffill() method to forward-fill the null entries when upsampling. This list of month abbreviations has been pre-loaded as year.

Instructions

- Reorder the rows of weather1 using the .reindex() method with the list year as the argument, which contains the abbreviations for each month.

- Reorder the rows of weather1 just as you did above, this time chaining the .ffill() method to replace the null values with the last preceding non-null value.

```python
monthly_max_temp = {'Month': ['Jan', 'Apr', 'Jul', 'Oct'],
                    'Max TemperatureF': [32.13333, 61.956044, 68.934783, 43.434783]}

weather1 = pd.DataFrame.from_dict(monthly_max_temp)
weather1.set_index('Month', inplace=True)
weather1
```

| Month | Max TemperatureF |
|---|---|
| Jan | 32.133330 |
| Apr | 61.956044 |
| Jul | 68.934783 |
| Oct | 43.434783 |

```python
year = ['Jan', 'Feb', 'Mar', 'Apr', 'May', 'Jun', 'Jul', 'Aug', 'Sep', 'Oct', 'Nov', 'Dec']

# Reindex weather1 using the list year: weather2
weather2 = weather1.reindex(year)

# Print weather2
weather2
```

| Month | Max TemperatureF |
|---|---|
| Jan | 32.133330 |
| Feb | NaN |
| Mar | NaN |
| Apr | 61.956044 |
| May | NaN |
| Jun | NaN |
| Jul | 68.934783 |
| Aug | NaN |
| Sep | NaN |
| Oct | 43.434783 |
| Nov | NaN |
| Dec | NaN |

```python
# Reindex weather1 using the list year with forward-fill: weather3
weather3 = weather1.reindex(year).ffill()

# Print weather3
weather3
```

| Month | Max TemperatureF |
|---|---|
| Jan | 32.133330 |
| Feb | 32.133330 |
| Mar | 32.133330 |
| Apr | 61.956044 |
| May | 61.956044 |
| Jun | 61.956044 |
| Jul | 68.934783 |
| Aug | 68.934783 |
| Sep | 68.934783 |
| Oct | 43.434783 |
| Nov | 43.434783 |
| Dec | 43.434783 |
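.reindex() also accepts method='ffill', which fills each new label from the nearest preceding label of the original index. That lookup requires the original index to be monotonic, and the abbreviations Jan, Apr, Jul, Oct are not in alphabetical order, which is one reason to chain .ffill() instead. The sketch below relabels the quarters with month numbers (a hypothetical change of index made for illustration) so the two routes can be compared, and shows the error with the month names:

```python
import pandas as pd

# Quarterly means from above, indexed by month number instead of name
quarterly = pd.DataFrame(
    {'Mean TemperatureF': [32.133333, 61.956044, 68.934783, 43.434783]},
    index=pd.Index([1, 4, 7, 10], name='Month'),
)
months = range(1, 13)

# Route 1: reindex first (gaps become NaN), then forward-fill the gaps
chained = quarterly.reindex(months).ffill()

# Route 2: let reindex fill as it goes; needs a monotonic original index
filled = quarterly.reindex(months, method='ffill')
print(chained.equals(filled))

# With the unsorted month names, method='ffill' raises a ValueError
named = quarterly.set_axis(['Jan', 'Apr', 'Jul', 'Oct'])
raised = False
try:
    named.reindex(['Jan', 'Feb', 'Mar'], method='ffill')
except ValueError as err:
    raised = True
    print('ValueError:', err)
```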

Reindexing DataFrame using another DataFrame Index

Another common technique is to reindex a DataFrame using the Index of another DataFrame. The DataFrame .reindex() method can accept the Index of a DataFrame or Series as input. You can access the Index of a DataFrame with its .index attribute.

The Baby Names Dataset from data.gov summarizes counts of names (with genders) from births registered in the US since 1881. In this exercise, you will start with two baby-names DataFrames names_1981 and names_1881 loaded for you.

The DataFrames names_1981 and names_1881 both have a MultiIndex with levels name and gender giving unique labels to counts in each row. If you’re interested in seeing how the MultiIndexes were set up, names_1981 and names_1881 were read in using the following commands:

```python
names_1981 = pd.read_csv('names1981.csv', header=None, names=['name', 'gender', 'count'], index_col=(0, 1))
names_1881 = pd.read_csv('names1881.csv', header=None, names=['name', 'gender', 'count'], index_col=(0, 1))
```

As you can see by looking at their shapes, which have been printed in the IPython Shell, the DataFrame corresponding to 1981 births is much larger, reflecting the greater diversity of names in 1981 as compared to 1881.

Your job here is to use the DataFrame .reindex() and .dropna() methods to make a DataFrame common_names counting names from 1881 that were still popular in 1981.

Instructions

- Create a new DataFrame common_names by reindexing names_1981 using the Index of the DataFrame names_1881 of older names.

- Print the shape of the new common_names DataFrame. This has been done for you. It should be the same as that of names_1881.

- Drop the rows of common_names that have null counts using the .dropna() method. These rows correspond to names that fell out of fashion between 1881 & 1981.

- Print the shape of the reassigned common_names DataFrame. This has been done for you, so hit ‘Submit Answer’ to see the result!

```python
names_1981 = pd.read_csv(baby_1981_file, header=None, names=['name', 'gender', 'count'], index_col=(0, 1))
names_1981.head()
```

| name | gender | count |
|---|---|---|
| Jennifer | F | 57032 |
| Jessica | F | 42519 |
| Amanda | F | 34370 |
| Sarah | F | 28162 |
| Melissa | F | 28003 |

```python
names_1881 = pd.read_csv(baby_1881_file, header=None, names=['name', 'gender', 'count'], index_col=(0, 1))
names_1881.head()
```

| name | gender | count |
|---|---|---|
| Mary | F | 6919 |
| Anna | F | 2698 |
| Emma | F | 2034 |
| Elizabeth | F | 1852 |
| Margaret | F | 1658 |

```python
# Reindex names_1981 with index of names_1881: common_names
common_names = names_1981.reindex(names_1881.index)

# Print shape of common_names
common_names.shape
```

```python
# Drop rows with null counts: common_names
common_names = common_names.dropna()

# Print shape of new common_names
common_names.shape
```

| name | gender | count |
|---|---|---|
| Mary | F | 11030.0 |
| Anna | F | 5182.0 |
| Emma | F | 532.0 |
| Elizabeth | F | 20168.0 |
| Margaret | F | 2791.0 |
| Minnie | F | 56.0 |
| Ida | F | 206.0 |
| Annie | F | 973.0 |
| Bertha | F | 209.0 |
| Alice | F | 745.0 |
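The reindex-then-dropna pattern works the same way on a MultiIndex. The sketch below uses three-row excerpts: the Mary, Anna, and Jennifer counts come from the tables above, while ('Zylphia', 'F') and its count are a hypothetical 1881 name with no 1981 entry. An Index intersection is shown as an alternative that reaches the same rows without the intermediate NaNs.

```python
import pandas as pd

# Excerpts keyed by (name, gender); Zylphia and its count are hypothetical
names_1881 = pd.DataFrame(
    {'count': [6919, 2698, 5]},
    index=pd.MultiIndex.from_tuples(
        [('Mary', 'F'), ('Anna', 'F'), ('Zylphia', 'F')], names=['name', 'gender']),
)
names_1981 = pd.DataFrame(
    {'count': [57032, 11030, 5182]},
    index=pd.MultiIndex.from_tuples(
        [('Jennifer', 'F'), ('Mary', 'F'), ('Anna', 'F')], names=['name', 'gender']),
)

# Reindex on the 1881 labels (Zylphia -> NaN), then drop names absent in 1981
common_names = names_1981.reindex(names_1881.index).dropna()

# Alternative: intersect the two MultiIndexes first, then select those rows
shared = names_1881.index.intersection(names_1981.index)
common_alt = names_1981.loc[shared]

print(common_names, common_alt, sep='\n\n')
```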

```python
del weather1, weather2, weather3, weather4, common_names, names_1881, names_1981
```

Arithmetic with Series & DataFrames

Loading weather data

```python
import pandas as pd

weather = pd.read_csv('pittsburgh2013.csv', index_col='Date', parse_dates=True)
weather.loc['2013-7-1':'2013-7-7', 'PrecipitationIn']
```

```
Date
2013-07-01    0.18
2013-07-02    0.14
2013-07-03    0.00
2013-07-04    0.25
2013-07-05    0.02
2013-07-06    0.06
2013-07-07    0.10
Name: PrecipitationIn, dtype: float64
```

Scalar multiplication

```python
weather.loc['2013-07-01':'2013-07-07', 'PrecipitationIn'] * 2.54
```

```
Date
2013-07-01    0.4572
2013-07-02    0.3556
2013-07-03    0.0000
2013-07-04    0.6350
2013-07-05    0.0508
2013-07-06    0.1524
2013-07-07    0.2540
Name: PrecipitationIn, dtype: float64
```

Absolute temperature range

```python
week1_range = weather.loc['2013-07-01':'2013-07-07', ['Min TemperatureF', 'Max TemperatureF']]
print(week1_range)
```

```
            Min TemperatureF  Max TemperatureF
Date
2013-07-01                66                79
2013-07-02                66                84
2013-07-03                71                86
2013-07-04                70                86
2013-07-05                69                86
2013-07-06                70                89
2013-07-07                70                77
```

Average temperature

```python
week1_mean = weather.loc['2013-07-01':'2013-07-07', 'Mean TemperatureF']
print(week1_mean)
```

```
Date
2013-07-01    72
2013-07-02    74
2013-07-03    78
2013-07-04    77
2013-07-05    76
2013-07-06    78
2013-07-07    72
Name: Mean TemperatureF, dtype: int64
```

Relative temperature range

```python
week1_range / week1_mean
```

```
RuntimeWarning: Cannot compare type 'Timestamp' with type 'str', sort order is
undefined for incomparable objects
  return this.join(other, how=how, return_indexers=return_indexers)

            2013-07-01 00:00:00  2013-07-02 00:00:00  2013-07-03 00:00:00  \
Date
2013-07-01                  NaN                  NaN                  NaN
2013-07-02                  NaN                  NaN                  NaN
2013-07-03                  NaN                  NaN                  NaN
2013-07-04                  NaN                  NaN                  NaN
2013-07-05                  NaN                  NaN                  NaN
2013-07-06                  NaN                  NaN                  NaN
2013-07-07                  NaN                  NaN                  NaN

            2013-07-04 00:00:00  2013-07-05 00:00:00  2013-07-06 00:00:00  \
Date
2013-07-01                  NaN                  NaN                  NaN
...                         ...
```

Relative temperature range

```python
week1_range.divide(week1_mean, axis='rows')
```

```
            Min TemperatureF  Max TemperatureF
Date
2013-07-01          0.916667          1.097222
2013-07-02          0.891892          1.135135
2013-07-03          0.910256          1.102564
2013-07-04          0.909091          1.116883
2013-07-05          0.907895          1.131579
2013-07-06          0.897436          1.141026
2013-07-07          0.972222          1.069444
```
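The all-NaN result from / happens because a DataFrame-by-Series operator matches the Series index against the DataFrame's columns. The compact sketch below uses two days of the Pittsburgh temperatures (dates kept as plain strings, an assumption made for brevity) to show both behaviors; axis='index' is equivalent to the axis='rows' used above.

```python
import pandas as pd

# Two days of the week1 data from above, with string dates for brevity
week = pd.DataFrame(
    {'Min TemperatureF': [66, 66], 'Max TemperatureF': [79, 84]},
    index=['2013-07-01', '2013-07-02'],
)
mean = pd.Series([72, 74], index=week.index, name='Mean TemperatureF')

# '/' aligns the Series index with the DataFrame *columns*: no labels match,
# so the result has the union of both label sets as columns, all NaN
misaligned = week / mean

# .div(..., axis='index') aligns the Series with the rows instead
relative = week.div(mean, axis='index')

print(misaligned, relative, sep='\n\n')
```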

Percentage changes

```python
week1_mean.pct_change() * 100
```

```
Date
2013-07-01         NaN
2013-07-02    2.777778
2013-07-03    5.405405
2013-07-04   -1.282051
2013-07-05   -1.298701
2013-07-06    2.631579
2013-07-07   -7.692308
Name: Mean TemperatureF, dtype: float64
```
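.pct_change() compares each value with the one before it, so the percentage change at row t is 100 * (x[t] / x[t-1] - 1), and the first row has no predecessor and is NaN. The sketch below rebuilds week1_mean from the values printed above and checks .pct_change() against a hand-written version using .shift(1):

```python
import numpy as np
import pandas as pd

# week1_mean rebuilt from the values printed above
week1_mean = pd.Series([72, 74, 78, 77, 76, 78, 72],
                       index=pd.date_range('2013-07-01', periods=7),
                       name='Mean TemperatureF')

pct = week1_mean.pct_change() * 100

# Same computation by hand: divide by the previous row, subtract 1
by_hand = (week1_mean / week1_mean.shift(1) - 1) * 100

print(np.allclose(pct, by_hand, equal_nan=True))
```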

Bronze Olympic medals

```python
# squeeze('columns') turns the one-column DataFrame into a Series
# (read_csv's squeeze=True argument was removed in pandas 2.0)
bronze = pd.read_csv('bronze_top5.csv', index_col=0).squeeze('columns')
print(bronze)
```

```
Country
United States     1052.0
Soviet Union       584.0
United Kingdom     505.0
France             475.0
Germany            454.0
Name: Total, dtype: float64
```

Silver Olympic medals

```python
silver = pd.read_csv('silver_top5.csv', index_col=0).squeeze('columns')
print(silver)
```

```
Country
United States     1195.0
Soviet Union       627.0
United Kingdom     591.0
France             461.0
Italy              394.0
Name: Total, dtype: float64
```

Gold Olympic medals

```python
gold = pd.read_csv('gold_top5.csv', index_col=0).squeeze('columns')
print(gold)
```

```
Country
United States     2088.0
Soviet Union       838.0
United Kingdom     498.0
Italy              460.0
Germany            407.0
Name: Total, dtype: float64
```

Adding bronze, silver

```python
bronze + silver
```

```
Country
France             936.0
Germany              NaN
Italy                NaN
Soviet Union      1211.0
United Kingdom    1096.0
United States     2247.0
Name: Total, dtype: float64
```

```python
print(bronze['United States'])
print(silver['United States'])
```

```
1052.0
1195.0
```

Using the .add() method

```python
bronze.add(silver)
```

```
Country
France             936.0
Germany              NaN
Italy                NaN
Soviet Union      1211.0
United Kingdom    1096.0
United States     2247.0
Name: Total, dtype: float64
```

Using a fill_value

```python
bronze.add(silver, fill_value=0)
```

```
Country
France             936.0
Germany            454.0
Italy              394.0
Soviet Union      1211.0
United Kingdom    1096.0
United States     2247.0
Name: Total, dtype: float64
```

Adding bronze, silver, gold

```python
bronze + silver + gold
```

```
Country
France               NaN
Germany              NaN
Italy                NaN
Soviet Union      2049.0
United Kingdom    1594.0
United States     4335.0
Name: Total, dtype: float64
```

Chaining .add()

```python
bronze.add(silver, fill_value=0).add(gold, fill_value=0)
```

```
Country
France             936.0
Germany            861.0
Italy              854.0
Soviet Union      2049.0
United Kingdom    1594.0
United States     4335.0
Name: Total, dtype: float64
```
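Chaining .add() gets repetitive with more than a few Series. The sketch below rebuilds the three top-5 medal Series from the values shown above and uses functools.reduce to fold add(..., fill_value=0) over a list; pd.concat(..., axis=1).sum(axis=1) is shown as an alternative that aligns everything at once.

```python
from functools import reduce

import pandas as pd

# Top-5 medal Series rebuilt from the values printed above
bronze = pd.Series([1052.0, 584.0, 505.0, 475.0, 454.0],
                   index=['United States', 'Soviet Union', 'United Kingdom', 'France', 'Germany'])
silver = pd.Series([1195.0, 627.0, 591.0, 461.0, 394.0],
                   index=['United States', 'Soviet Union', 'United Kingdom', 'France', 'Italy'])
gold = pd.Series([2088.0, 838.0, 498.0, 460.0, 407.0],
                 index=['United States', 'Soviet Union', 'United Kingdom', 'Italy', 'Germany'])

# Fold .add(..., fill_value=0) across any number of Series
total = reduce(lambda acc, s: acc.add(s, fill_value=0), [bronze, silver, gold])

# Alternative: outer-align side by side, then sum each row (NaN is skipped)
total_alt = pd.concat([bronze, silver, gold], axis=1).sum(axis=1)

print(total)
```

sum(axis=1) skips NaN, so a label missing from every Series would come out as 0 rather than NaN; pass min_count=1 if you need the NaN preserved.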

Exercises

Adding unaligned DataFrames

The DataFrames january and february, which have been printed in the IPython Shell, represent the sales a company made in the corresponding months.

The Indexes in both DataFrames are called Company, identifying which company bought that quantity of units. The column Units is the number of units sold.

If you were to add these two DataFrames by executing the command total = january + february, how many rows would the resulting DataFrame have? Try this in the IPython Shell and find out for yourself.

```python
jan_dict = {'Company': ['Acme Corporation', 'Hooli', 'Initech', 'Mediacore', 'Streeplex'],
            'Units': [19, 17, 20, 10, 13]}
feb_dict = {'Company': ['Acme Corporation', 'Hooli', 'Mediacore', 'Vandelay Inc'],
            'Units': [15, 3, 12, 25]}

january = pd.DataFrame.from_dict(jan_dict)
january.set_index('Company', inplace=True)
print(january)

february = pd.DataFrame.from_dict(feb_dict)
february.set_index('Company', inplace=True)
print('\n', february, '\n')

print(january + february)
```

```
                  Units
Company
Acme Corporation     19
Hooli                17
Initech              20
Mediacore            10
Streeplex            13

                   Units
Company
Acme Corporation     15
Hooli                 3
Mediacore            12
Vandelay Inc         25

                  Units
Company
Acme Corporation   34.0
Hooli              20.0
Initech             NaN
Mediacore          22.0
Streeplex           NaN
Vandelay Inc        NaN
```
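With +, the result has one row per company that appears in either month (six rows), but only companies present in both months get a number; the other three are NaN. The sketch below rebuilds january and february from the dictionaries above and shows .add() with fill_value=0 treating a missing month as zero units instead:

```python
import pandas as pd

# january and february rebuilt from the dictionaries above
january = pd.DataFrame(
    {'Units': [19, 17, 20, 10, 13]},
    index=pd.Index(['Acme Corporation', 'Hooli', 'Initech', 'Mediacore', 'Streeplex'],
                   name='Company'),
)
february = pd.DataFrame(
    {'Units': [15, 3, 12, 25]},
    index=pd.Index(['Acme Corporation', 'Hooli', 'Mediacore', 'Vandelay Inc'],
                   name='Company'),
)

# The row labels are the union of both indexes; a company missing from
# one month is treated as having sold 0 units that month
total = january.add(february, fill_value=0)
print(total)
print(len(total))
```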

In this exercise, you’ll work with weather data pulled from wunderground.com. The DataFrame weather has been pre-loaded along with pandas as pd. It has 365 rows (observed each day of the year 2013 in Pittsburgh, PA) and 22 columns reflecting different weather measurements each day.

You’ll subset a collection of columns related to temperature measurements in degrees Fahrenheit, convert them to degrees Celsius, and relabel the columns of the new DataFrame to reflect the change of units.

Remember, ordinary arithmetic operators (like +, -, *, and /) broadcast scalar values to conforming DataFrames when combining scalars & DataFrames in arithmetic expressions. Broadcasting also works with pandas Series and NumPy arrays.

Instructions

- Create a new DataFrame temps_f by extracting the columns 'Min TemperatureF', 'Mean TemperatureF', & 'Max TemperatureF' from weather. To do this, pass the relevant columns as a list to weather[].

- Create a new DataFrame temps_c from temps_f using the formula (temps_f - 32) * 5/9.

- Rename the columns of temps_c to replace 'F' with 'C' using the .str.replace('F', 'C') method on temps_c.columns.

- Print the first 5 rows of DataFrame temps_c. This has been done for you, so hit ‘Submit Answer’ to see the result!

```python
weather = pd.read_csv(pitts_file)
weather.set_index('Date', inplace=True)
weather.head(3)
```

| Date | Max TemperatureF | Mean TemperatureF | Min TemperatureF | Max Dew PointF | MeanDew PointF | Min DewpointF | Max Humidity | Mean Humidity | Min Humidity | Max Sea Level PressureIn | Mean Sea Level PressureIn | Min Sea Level PressureIn | Max VisibilityMiles | Mean VisibilityMiles | Min VisibilityMiles | Max Wind SpeedMPH | Mean Wind SpeedMPH | Max Gust SpeedMPH | PrecipitationIn | CloudCover | Events | WindDirDegrees |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 2013-1-1 | 32 | 28 | 21 | 30 | 27 | 16 | 100 | 89 | 77 | 30.10 | 30.01 | 29.94 | 10 | 6 | 2 | 10 | 8 | NaN | 0.0 | 8 | Snow | 277 |
| 2013-1-2 | 25 | 21 | 17 | 14 | 12 | 10 | 77 | 67 | 55 | 30.27 | 30.18 | 30.08 | 10 | 10 | 10 | 14 | 5 | NaN | 0.0 | 4 | NaN | 272 |
| 2013-1-3 | 32 | 24 | 16 | 19 | 15 | 9 | 77 | 67 | 56 | 30.25 | 30.21 | 30.16 | 10 | 10 | 10 | 17 | 8 | 26.0 | 0.0 | 3 | NaN | 229 |

```python
# Extract selected columns from weather as new DataFrame: temps_f
temps_f = weather[['Min TemperatureF', 'Mean TemperatureF', 'Max TemperatureF']]

# Convert temps_f to celsius: temps_c
temps_c = (temps_f - 32) * 5/9

# Rename 'F' in column names with 'C': temps_c.columns
temps_c.columns = temps_c.columns.str.replace('F', 'C')

# Print first 5 rows of temps_c
temps_c.head()
```

| Date | Min TemperatureC | Mean TemperatureC | Max TemperatureC |
|---|---|---|---|
| 2013-1-1 | -6.111111 | -2.222222 | 0.000000 |
| 2013-1-2 | -8.333333 | -6.111111 | -3.888889 |
| 2013-1-3 | -8.888889 | -4.444444 | 0.000000 |
| 2013-1-4 | -2.777778 | -2.222222 | -1.111111 |
| 2013-1-5 | -3.888889 | -1.111111 | 1.111111 |
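.str.replace('F', 'C') replaces every uppercase F in a label, not just the unit suffix. It works here because no other label contains an F, but a regex anchored at the end of the string is safer. The sketch below uses two days of the temperatures above; the label 'Feels LikeF' is hypothetical and exists only to show the difference.

```python
import pandas as pd

# Two days of Fahrenheit temperatures from the weather table above
temps_f = pd.DataFrame(
    {'Min TemperatureF': [21, 17], 'Mean TemperatureF': [28, 21], 'Max TemperatureF': [32, 25]},
    index=['2013-1-1', '2013-1-2'],
)

# Scalars broadcast across every cell of the DataFrame
temps_c = (temps_f - 32) * 5 / 9

# Replace only a trailing 'F' so an 'F' elsewhere in a label is left alone
temps_c.columns = temps_c.columns.str.replace(r'F$', 'C', regex=True)

# Contrast on a hypothetical label that contains a second 'F'
labels = pd.Index(['Max TemperatureF', 'Feels LikeF'])
naive = labels.str.replace('F', 'C')
anchored = labels.str.replace(r'F$', 'C', regex=True)

print(temps_c, list(naive), list(anchored), sep='\n\n')
```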

Computing percentage growth of GDP

Your job in this exercise is to compute the yearly percent-change of US GDP (Gross Domestic Product) since 2008.

The data has been obtained from the Federal Reserve Bank of St. Louis and is available in the file GDP.csv, which contains quarterly data; you will resample it to annual sampling and then compute the annual growth of GDP. For a refresher on resampling, check out the relevant material from pandas Foundations.

Instructions

- Read the file 'GDP.csv' into a DataFrame called gdp.

- Use parse_dates=True and index_col='DATE'.

- Create a DataFrame post2008 by slicing gdp such that it comprises all rows from 2008 onward.

- Print the last 8 rows of the slice post2008. This has been done for you. This data has quarterly frequency, so the indices are separated by three-month intervals.

- Create the DataFrame yearly by resampling the slice post2008 by year. Remember, you need to chain .resample() (using the alias 'A' for annual frequency; pandas 2.2 deprecates 'A' in favor of 'YE', which the code below uses) with some kind of aggregation; you will use the aggregation method .last() to select the last element when resampling.

- Compute the percentage growth of the resampled DataFrame yearly with .pct_change() * 100.


| # Read 'GDP.csv' into a DataFrame: gdp

gdp = pd.read_csv(gdp_usa_file, parse_dates=True, index_col='DATE')

gdp.head()

|

| VALUE |

|---|

| DATE | |

|---|

| 1947-01-01 | 243.1 |

|---|

| 1947-04-01 | 246.3 |

|---|

| 1947-07-01 | 250.1 |

|---|

| 1947-10-01 | 260.3 |

|---|

| 1948-01-01 | 266.2 |

|---|


| # Slice all the gdp data from 2008 onward: post2008

post2008 = gdp.loc['2008-01-01':]

# Print the last 8 rows of post2008

post2008.tail(8)

|

| VALUE |

|---|

| DATE | |

|---|

| 2014-07-01 | 17569.4 |

|---|

| 2014-10-01 | 17692.2 |

|---|

| 2015-01-01 | 17783.6 |

|---|

| 2015-04-01 | 17998.3 |

|---|

| 2015-07-01 | 18141.9 |

|---|

| 2015-10-01 | 18222.8 |

|---|

| 2016-01-01 | 18281.6 |

|---|

| 2016-04-01 | 18436.5 |

|---|


| # Resample post2008 by year, keeping last(): yearly

# 'YE' (year end) replaces the 'A' alias, which pandas 2.2 deprecates

yearly = post2008.resample('YE').last()

# Print yearly

yearly

|

| VALUE |

|---|

| DATE | |

|---|

| 2008-12-31 | 14549.9 |

|---|

| 2009-12-31 | 14566.5 |

|---|

| 2010-12-31 | 15230.2 |

|---|

| 2011-12-31 | 15785.3 |

|---|

| 2012-12-31 | 16297.3 |

|---|

| 2013-12-31 | 16999.9 |

|---|

| 2014-12-31 | 17692.2 |

|---|

| 2015-12-31 | 18222.8 |

|---|

| 2016-12-31 | 18436.5 |

|---|


| # Compute percentage growth of yearly: yearly['growth']

yearly['growth'] = yearly['VALUE'].pct_change() * 100

# Print yearly again

yearly

|

| VALUE | growth |

|---|

| DATE | | |

|---|

| 2008-12-31 | 14549.9 | NaN |

|---|

| 2009-12-31 | 14566.5 | 0.114090 |

|---|

| 2010-12-31 | 15230.2 | 4.556345 |

|---|

| 2011-12-31 | 15785.3 | 3.644732 |

|---|

| 2012-12-31 | 16297.3 | 3.243524 |

|---|

| 2013-12-31 | 16999.9 | 4.311144 |

|---|

| 2014-12-31 | 17692.2 | 4.072377 |

|---|

| 2015-12-31 | 18222.8 | 2.999062 |

|---|

| 2016-12-31 | 18436.5 | 1.172707 |

|---|
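.pct_change() divides each value by the previous one and subtracts 1, so multiplying by 100 gives percent growth. The sketch below checks this against the first three year-end values, which are copied from the yearly table above. It compares .pct_change() with the same formula written out using .shift().

```python
import pandas as pd

# Year-end GDP values copied from the yearly table above (2008-2010 only)
yearly = pd.DataFrame(
    {'VALUE': [14549.9, 14566.5, 15230.2]},
    index=pd.to_datetime(['2008-12-31', '2009-12-31', '2010-12-31']),
)

# Built-in: value_t / value_{t-1} - 1, scaled to percent
auto = yearly['VALUE'].pct_change() * 100

# The same quantity written out explicitly with shift()
manual = (yearly['VALUE'] / yearly['VALUE'].shift(1) - 1) * 100

print(pd.DataFrame({'auto': auto, 'manual': manual}))
```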

Converting currency of stocks

In this exercise, stock prices in US Dollars for the S&P 500 in 2015 have been obtained from Yahoo Finance. The files sp500.csv for sp500 and exchange.csv for the exchange rates are both provided to you.

Using the daily exchange rate to Pounds Sterling, your task is to convert both the Open and Close column prices.

Instructions

- Read the DataFrames sp500 and exchange from the files 'sp500.csv' and 'exchange.csv', respectively.

- Use parse_dates=True and index_col='Date'.

- Extract the columns 'Open' and 'Close' from the DataFrame sp500 as a new DataFrame dollars and print the first 5 rows.

- Construct a new DataFrame pounds by converting US dollars to British pounds. Use the .multiply() method of dollars with exchange['GBP/USD'] and axis='rows'.

- Print the first 5 rows of the new DataFrame pounds. This has been done for you.


| # Read 'sp500.csv' into a DataFrame: sp500

sp500 = pd.read_csv(sp500_file, parse_dates=True, index_col='Date')

sp500.head()

|

| Open | High | Low | Close | Adj Close | Volume | tkr |

|---|

| Date | | | | | | | |

|---|

| 1980-01-02 | 0.0 | 108.430000 | 105.290001 | 105.760002 | 105.760002 | 40610000 | ^gspc |

|---|

| 1980-01-03 | 0.0 | 106.080002 | 103.260002 | 105.220001 | 105.220001 | 50480000 | ^gspc |

|---|

| 1980-01-04 | 0.0 | 107.080002 | 105.089996 | 106.519997 | 106.519997 | 39130000 | ^gspc |

|---|

| 1980-01-07 | 0.0 | 107.800003 | 105.800003 | 106.809998 | 106.809998 | 44500000 | ^gspc |

|---|

| 1980-01-08 | 0.0 | 109.290001 | 106.290001 | 108.949997 | 108.949997 | 53390000 | ^gspc |

|---|


| # Read 'exchange.csv' into a DataFrame: exchange

exchange = pd.read_csv(exch_rates_file, parse_dates=True, index_col='Date')

exchange.head()

|

| GBP/USD |

|---|

| Date | |

|---|

| 2015-01-02 | 0.65101 |

|---|

| 2015-01-05 | 0.65644 |

|---|

| 2015-01-06 | 0.65896 |

|---|

| 2015-01-07 | 0.66344 |

|---|

| 2015-01-08 | 0.66151 |

|---|


| # Subset 'Open' & 'Close' columns from sp500: dollars

dollars = sp500[['Open', 'Close']]

# Print the head of dollars

dollars.head()

|

| Open | Close |

|---|

| Date | | |

|---|

| 1980-01-02 | 0.0 | 105.760002 |

|---|

| 1980-01-03 | 0.0 | 105.220001 |

|---|

| 1980-01-04 | 0.0 | 106.519997 |

|---|

| 1980-01-07 | 0.0 | 106.809998 |

|---|

| 1980-01-08 | 0.0 | 108.949997 |

|---|


| # Convert dollars to pounds: pounds

pounds = dollars.multiply(exchange['GBP/USD'], axis='rows')

# Print the head of pounds

pounds.head()

|

| Open | Close |

|---|

| Date | | |

|---|

| 1980-01-02 | NaN | NaN |

|---|

| 1980-01-03 | NaN | NaN |

|---|

| 1980-01-04 | NaN | NaN |

|---|

| 1980-01-07 | NaN | NaN |

|---|

| 1980-01-08 | NaN | NaN |

|---|
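The all-NaN head of pounds is an alignment effect, not a bug in .multiply(). This copy of sp500.csv starts in 1980 (see its head), while exchange.csv starts on 2015-01-02. With axis='rows', pandas matches the exchange-rate Series to dollars by date and leaves NaN wherever a date has no rate. The sketch below uses two hypothetical price rows, only one of which has a matching rate.

```python
import pandas as pd

# Hypothetical prices: the 1980 row has no matching exchange rate
dollars = pd.DataFrame(
    {'Open': [50.0, 100.0], 'Close': [60.0, 110.0]},
    index=pd.to_datetime(['1980-01-02', '2015-01-02']),
)

# GBP/USD rate for 2015-01-02, copied from the exchange table above
rate = pd.Series([0.65101], index=pd.to_datetime(['2015-01-02']), name='GBP/USD')

# axis='rows' matches the Series to dollars by date; unmatched dates become NaN
pounds = dollars.multiply(rate, axis='rows')
print(pounds)
```

In the notebook, you could avoid the empty rows by slicing to the dates that have rates first (for example dollars.loc['2015']) or by calling pounds.dropna() afterwards. Either is a suggestion, not part of the original exercise.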


| del january, february, feb_dict, jan_dict, weather, temps_f, temps_c, gdp, post2008, yearly, sp500, exchange, dollars, pounds

|

Concatenating Data

Having learned how to import multiple DataFrames and share information using Indexes, in this chapter you’ll learn how to perform database-style operations to combine DataFrames. In particular, you’ll learn about appending and concatenating DataFrames while working with a variety of real-world datasets.

Appending & concatenating Series

append()

- .append(): Series & DataFrame method (deprecated in pandas 1.4 and removed in 2.0; use pd.concat() instead)

- Invocation:

- s1.append(s2)

- Stacks rows of s2 below s1

- Method for Series & DataFrames

concat()

- concat(): pandas module function

- Invocation:

- pd.concat([s1, s2, s3])

- Can stack row-wise or column-wise

concat() & .append()

- Equivalence of concat() & .append():

- result1 = pd.concat([s1, s2, s3])

- result2 = s1.append(s2).append(s3)

- result1 == result2 elementwise
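Because .append() no longer exists in pandas 2.x, the equivalence above can only be checked today by nesting pd.concat() calls as a stand-in for the chained .append(). The sketch below uses hypothetical Series s1, s2 and s3.

```python
import pandas as pd

s1 = pd.Series(['a', 'b'])
s2 = pd.Series(['c'])
s3 = pd.Series(['d', 'e'])

# One call stacks all three Series in order
result1 = pd.concat([s1, s2, s3])

# Nested pairwise calls stand in for the removed s1.append(s2).append(s3)
result2 = pd.concat([pd.concat([s1, s2]), s3])

print(result1.equals(result2))  # True
print(list(result1.index))      # [0, 1, 0, 0, 1]
```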

Series of US states


| import pandas as pd

northeast = pd.Series(['CT', 'ME', 'MA', 'NH', 'RI', 'VT', 'NJ', 'NY', 'PA'])

south = pd.Series(['DE', 'FL', 'GA', 'MD', 'NC', 'SC', 'VA', 'DC', 'WV', 'AL', 'KY', 'MS', 'TN', 'AR', 'LA', 'OK', 'TX'])

midwest = pd.Series(['IL', 'IN', 'MN', 'MO', 'NE', 'ND', 'SD', 'IA', 'KS', 'MI', 'OH', 'WI'])

# The source cuts this list off after 'MT'; it is closed here with only the states shown

west = pd.Series(['AZ', 'CO', 'ID', 'MT'])

|

Using .append()


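| # Series.append() was removed in pandas 2.0; the legacy call was east = northeast.append(south)

east = pd.concat([northeast, south])

print(east)

0 CT

1 ME

2 MA

3 NH

4 RI

5 VT

6 NJ

7 NY

8 PA

0 DE

1 FL

2 GA

3 MD

4 NC

5 SC

6 VA

7 DC

8 WV

9 AL

10 KY

11 MS

12 TN

13 AR

14 LA

15 OK

16 TX

dtype: object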

|

The appended Index


| print(east.index)

Index([0, 1, 2, 3, 4, 5, 6, 7, 8, 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16], dtype='int64')

print(east.loc[3])

3 NH

3 MD

dtype: object

|
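Duplicate labels like the two entries for 3 are easy to miss. The sketch below reuses the northeast and south Series from above and checks Index.is_unique. It also shows that passing verify_integrity=True to pd.concat() raises a ValueError instead of creating the duplicates. This is an optional safeguard that the original slides do not use.

```python
import pandas as pd

northeast = pd.Series(['CT', 'ME', 'MA', 'NH', 'RI', 'VT', 'NJ', 'NY', 'PA'])
south = pd.Series(['DE', 'FL', 'GA', 'MD', 'NC', 'SC', 'VA', 'DC', 'WV',
                   'AL', 'KY', 'MS', 'TN', 'AR', 'LA', 'OK', 'TX'])

# Both Series have a default 0-based index, so labels 0-8 occur twice
east = pd.concat([northeast, south])
print(east.index.is_unique)   # False
print(east.loc[3].tolist())   # ['NH', 'MD']

# verify_integrity=True raises instead of silently creating duplicate labels
integrity_error = None
try:
    pd.concat([northeast, south], verify_integrity=True)
except ValueError as err:
    integrity_error = err
print(integrity_error)
```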

Using .reset_index()


| # Legacy (pandas < 2.0): new_east = northeast.append(south).reset_index(drop=True)

new_east = pd.concat([northeast, south]).reset_index(drop=True)

print(new_east.head(11))

0 CT

1 ME

2 MA

3 NH

4 RI

5 VT

6 NJ

7 NY

8 PA

9 DE

10 FL

dtype: object

print(new_east.index)

RangeIndex(start=0, stop=26, step=1)

|

Using concat()


| east = pd.concat([northeast, south])

print(east.head(11))

0 CT

1 ME

2 MA

3 NH

4 RI

5 VT

6 NJ

7 NY

8 PA

0 DE

1 FL

dtype: object

print(east.index)

Index([0, 1, 2, 3, 4, 5, 6, 7, 8, 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16], dtype='int64')

|

Using ignore_index


| new_east = pd.concat([northeast, south], ignore_index=True)

print(new_east.head(11))

0 CT

1 ME

2 MA

3 NH

4 RI

5 VT

6 NJ

7 NY

8 PA

9 DE

10 FL

dtype: object

print(new_east.index)

RangeIndex(start=0, stop=26, step=1)

|

Exercises

Appending Series with nonunique Indices

The Series bronze and silver, which have been printed in the IPython Shell, represent the 5 countries that won the most bronze and silver Olympic medals respectively between 1896 & 2008. The Indexes of both Series are called Country and the values are the corresponding number of medals won.

If you were to run the command combined = bronze.append(silver), how many rows would combined have? And how many rows would combined.loc['United States'] return? Find out for yourself by running these commands in the IPython Shell.

Instructions

Possible Answers

- combined has 5 rows and combined.loc['United States'] is empty (0 rows).

- combined has 10 rows and combined.loc['United States'] has 2 rows.

- combined has 6 rows and combined.loc['United States'] has 1 row.

- combined has 5 rows and combined.loc['United States'] has 2 rows.


| bronze = pd.read_csv(so_bronze5_file, index_col=0)

bronze

|

| Total |

|---|

| Country | |

|---|

| United States | 1052.0 |

|---|

| Soviet Union | 584.0 |

|---|

| United Kingdom | 505.0 |

|---|

| France | 475.0 |

|---|

| Germany | 454.0 |

|---|


| silver = pd.read_csv(so_silver5_file, index_col=0)

silver

|

| Total |

|---|

| Country | |

|---|

| United States | 1195.0 |

|---|

| Soviet Union | 627.0 |

|---|

| United Kingdom | 591.0 |

|---|

| France | 461.0 |

|---|

| Italy | 394.0 |

|---|


| combined = pd.concat([bronze, silver])

combined

|

| Total |

|---|

| Country | |

|---|

| United States | 1052.0 |

|---|

| Soviet Union | 584.0 |

|---|

| United Kingdom | 505.0 |

|---|

| France | 475.0 |

|---|

| Germany | 454.0 |

|---|

| United States | 1195.0 |

|---|

| Soviet Union | 627.0 |

|---|

| United Kingdom | 591.0 |

|---|

| France | 461.0 |

|---|

| Italy | 394.0 |

|---|


| combined.loc['United States']

|

| Total |

|---|

| Country | |

|---|

| United States | 1052.0 |

|---|

| United States | 1195.0 |

|---|

Appending pandas Series

In this exercise, you’ll load sales data from the months January, February, and March into DataFrames. Then, you’ll extract Series with the 'Units' column from each and append them together with method chaining using .append().

To check that the stacking worked, you’ll print slices from these Series, and finally, you’ll add the result to figure out the total units sold in the first quarter.

Instructions

- Read the files 'sales-jan-2015.csv', 'sales-feb-2015.csv' and 'sales-mar-2015.csv' into the DataFrames jan, feb, and mar, respectively.

- Use parse_dates=True and index_col='Date'.

- Extract the 'Units' column of jan, feb, and mar to create the Series jan_units, feb_units, and mar_units, respectively.

- Construct the Series quarter1 by appending feb_units to jan_units and then appending mar_units to the result. Use chained calls to the .append() method to do this. In pandas 2.x, which removed .append(), pass all three Series to pd.concat() instead.

- Verify that quarter1 has the individual Series stacked vertically. To do this:

  - Print the slice containing rows from jan 27, 2015 to feb 2, 2015.

  - Print the slice containing rows from feb 26, 2015 to mar 7, 2015.

- Compute and print the total number of units sold from the Series quarter1. This has been done for you.


| # Load 'sales-jan-2015.csv' into a DataFrame: jan

jan = pd.read_csv(sales_jan_2015_file, parse_dates=True, index_col='Date')

# Load 'sales-feb-2015.csv' into a DataFrame: feb

feb = pd.read_csv(sales_feb_2015_file, parse_dates=True, index_col='Date')

# Load 'sales-mar-2015.csv' into a DataFrame: mar

mar = pd.read_csv(sales_mar_2015_file, parse_dates=True, index_col='Date')

# Extract the 'Units' column from jan: jan_units

jan_units = jan['Units']

# Extract the 'Units' column from feb: feb_units

feb_units = feb['Units']

# Extract the 'Units' column from mar: mar_units

mar_units = mar['Units']

# Append feb_units and then mar_units to jan_units: quarter1

quarter1 = pd.concat([jan_units, feb_units, mar_units])

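# Sort by date before slicing: partial-string slicing on an unsorted DatetimeIndex can raise a KeyError

# Print the first slice from quarter1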

display(quarter1.sort_index().loc['jan 27, 2015':'feb 2, 2015'])

# Print the second slice from quarter1

display(quarter1.sort_index().loc['feb 26, 2015':'mar 7, 2015'])

# Compute & print total sales in quarter1

display(quarter1.sum())

|


| Date

2015-01-27 07:11:55 18

2015-02-02 08:33:01 3

2015-02-02 20:54:49 9

Name: Units, dtype: int64

Date

2015-02-26 08:57:45 4

2015-02-26 08:58:51 1

2015-03-06 02:03:56 17

2015-03-06 10:11:45 17

Name: Units, dtype: int64

642

|
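The cells above call .sort_index() before slicing by date. The sketch below uses hypothetical units; only the 18, 3 and 9 values come from the output above. It illustrates two points. First, concatenation keeps every row, so the total equals the sum of the parts. Second, sorting gives a monotonic index that date-string slicing can rely on.

```python
import pandas as pd

# Hypothetical units; the 18, 3 and 9 entries mirror rows in the output above
jan_units = pd.Series([5, 18], index=pd.to_datetime(['2015-01-30 12:00', '2015-01-27 07:11']), name='Units')
feb_units = pd.Series([3, 9], index=pd.to_datetime(['2015-02-02 08:33', '2015-02-02 20:54']), name='Units')

# jan_units is deliberately out of date order
quarter = pd.concat([jan_units, feb_units])

# Sorting first gives the monotonic index that date-string slicing relies on
window = quarter.sort_index().loc['2015-01-27':'2015-02-02']
print(window)

# Concatenation keeps every row, so the total equals the sum of the parts
total = quarter.sum()
print(total, jan_units.sum() + feb_units.sum())
```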

Concatenating pandas Series along row axis

Having learned how to append Series, you’ll now learn how to achieve the same result by concatenating Series instead. You’ll continue to work with the sales data you’ve seen previously. This time, the DataFrames jan, feb, and mar have been pre-loaded.

Your job is to use pd.concat() with a list of Series to achieve the same result that you would get by chaining calls to .append().

You may be wondering about the difference between pd.concat() and pandas’ .append() method. One way to think of the difference is that .append() is a specific case of concatenation, while pd.concat() gives you more flexibility, as you’ll see in later exercises. Note that .append() was deprecated in pandas 1.4 and removed in 2.0, so pd.concat() is now the only option of the two.

Instructions

- Create an empty list called units. This has been done for you.

- Use a for loop to iterate over [jan, feb, mar]:

  - In each iteration of the loop, append the 'Units' column of each DataFrame to units.

- Concatenate the Series contained in the list units into a longer Series called quarter1 using pd.concat().

  - Specify the keyword argument axis='rows' to stack the Series vertically.

- Verify that quarter1 has the individual Series stacked vertically by printing slices. This has been done for you.


| # Initialize empty list: units

units = []

# Build the list of Series

for month in [jan, feb, mar]:

    units.append(month.Units)

# Concatenate the list: quarter1

quarter1 = pd.concat(units, axis='rows')

# Print slices from quarter1

print(quarter1.sort_index().loc['jan 27, 2015':'feb 2, 2015'])

print(quarter1.sort_index().loc['feb 26, 2015':'mar 7, 2015'])

|


| Date

2015-01-27 07:11:55 18

2015-02-02 08:33:01 3

2015-02-02 20:54:49 9

Name: Units, dtype: int64

Date

2015-02-26 08:57:45 4

2015-02-26 08:58:51 1

2015-03-06 02:03:56 17

2015-03-06 10:11:45 17

Name: Units, dtype: int64

|


| del bronze, silver, combined, jan, feb, mar, jan_units, feb_units, mar_units, quarter1

|

Appending & concatenating DataFrames

Loading population data


| import pandas as pd

pop1 = pd.read_csv('population_01.csv', index_col=0)

pop2 = pd.read_csv('population_02.csv', index_col=0)

|


| pop1_data = {'Zip Code ZCTA': [66407, 72732, 50579, 46421], '2010 Census Population': [479, 4716, 2405, 30670]}

pop2_data = {'Zip Code ZCTA': [12776, 76092, 98360, 49464], '2010 Census Population': [2180, 26669, 12221, 27481]}

pop1 = pd.DataFrame.from_dict(pop1_data)

pop1.set_index('Zip Code ZCTA', drop=True, inplace=True)

pop2 = pd.DataFrame.from_dict(pop2_data)

pop2.set_index('Zip Code ZCTA', drop=True, inplace=True)

|

Examining population data

| 2010 Census Population |

|---|

| Zip Code ZCTA | |

|---|

| 66407 | 479 |

|---|

| 72732 | 4716 |

|---|

| 50579 | 2405 |

|---|

| 46421 | 30670 |

|---|

| 2010 Census Population |

|---|

| Zip Code ZCTA | |

|---|

| 12776 | 2180 |

|---|

| 76092 | 26669 |

|---|

| 98360 | 12221 |

|---|

| 49464 | 27481 |

|---|


| print(type(pop1), pop1.shape)

print(type(pop2), pop2.shape)

|


| <class 'pandas.core.frame.DataFrame'> (4, 1)

<class 'pandas.core.frame.DataFrame'> (4, 1)

|

Appending population DataFrames


| pd.concat([pop1, pop2])

|

| 2010 Census Population |

|---|

| Zip Code ZCTA | |

|---|

| 66407 | 479 |

|---|

| 72732 | 4716 |

|---|

| 50579 | 2405 |

|---|

| 46421 | 30670 |

|---|

| 12776 | 2180 |

|---|

| 76092 | 26669 |

|---|

| 98360 | 12221 |

|---|

| 49464 | 27481 |

|---|


| print(pop1.index.name, pop1.columns)

print(pop2.index.name, pop2.columns)

|


| Zip Code ZCTA Index(['2010 Census Population'], dtype='object')

Zip Code ZCTA Index(['2010 Census Population'], dtype='object')

|

Population & unemployment data


| population = pd.read_csv('population_00.csv', index_col=0)

unemployment = pd.read_csv('unemployment_00.csv', index_col=0)

|


| pop_data = {'Zip Code ZCTA': [57538, 59916, 37660, 2860], '2010 Census Population': [322, 130, 40038, 45199]}

emp_data = {'Zip': [2860, 46167, 1097, 80808], 'unemployment': [0.11, 0.02, 0.33, 0.07], 'participants': [34447, 4800, 42, 4310]}

population = pd.DataFrame.from_dict(pop_data)

population.set_index('Zip Code ZCTA', drop=True, inplace=True)

unemployment = pd.DataFrame.from_dict(emp_data)

unemployment.set_index('Zip', drop=True, inplace=True)

|

| 2010 Census Population |

|---|

| Zip Code ZCTA | |

|---|

| 57538 | 322 |

|---|

| 59916 | 130 |

|---|

| 37660 | 40038 |

|---|

| 2860 | 45199 |

|---|

| unemployment | participants |

|---|

| Zip | | |

|---|

| 2860 | 0.11 | 34447 |

|---|

| 46167 | 0.02 | 4800 |

|---|

| 1097 | 0.33 | 42 |

|---|

| 80808 | 0.07 | 4310 |

|---|

Appending population & unemployment


| pd.concat([population, unemployment], sort=True)

|

| 2010 Census Population | participants | unemployment |

|---|

| 57538 | 322.0 | NaN | NaN |

|---|

| 59916 | 130.0 | NaN | NaN |

|---|

| 37660 | 40038.0 | NaN | NaN |

|---|

| 2860 | 45199.0 | NaN | NaN |

|---|

| 2860 | NaN | 34447.0 | 0.11 |

|---|

| 46167 | NaN | 4800.0 | 0.02 |

|---|

| 1097 | NaN | 42.0 | 0.33 |

|---|

| 80808 | NaN | 4310.0 | 0.07 |

|---|

Repeated index labels

Zip code 2860 appears in both population and unemployment, so the concatenated result shown above contains the label 2860 twice: once with the census population and once with the unemployment figures.

Concatenating rows

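- With axis=0 (the default), pd.concat([population, unemployment], axis=0, sort=True) gives the same result as the legacy population.append(unemployment, sort=True); DataFrame.append() was removed in pandas 2.0.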


| pd.concat([population, unemployment], axis=0, sort=True)

|

| 2010 Census Population | participants | unemployment |

|---|

| 57538 | 322.0 | NaN | NaN |

|---|

| 59916 | 130.0 | NaN | NaN |

|---|

| 37660 | 40038.0 | NaN | NaN |

|---|

| 2860 | 45199.0 | NaN | NaN |

|---|

| 2860 | NaN | 34447.0 | 0.11 |

|---|

| 46167 | NaN | 4800.0 | 0.02 |

|---|

| 1097 | NaN | 42.0 | 0.33 |

|---|

| 80808 | NaN | 4310.0 | 0.07 |

|---|

Concatenating columns


| pd.concat([population, unemployment], axis=1, sort=True)

|

| 2010 Census Population | unemployment | participants |

|---|

| 1097 | NaN | 0.33 | 42.0 |

|---|

| 2860 | 45199.0 | 0.11 | 34447.0 |

|---|

| 37660 | 40038.0 | NaN | NaN |

|---|

| 46167 | NaN | 0.02 | 4800.0 |

|---|

| 57538 | 322.0 | NaN | NaN |

|---|

| 59916 | 130.0 | NaN | NaN |

|---|

| 80808 | NaN | 0.07 | 4310.0 |

|---|
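By default, concatenating with axis=1 performs an outer join on the row labels. The sketch below reuses the toy population and unemployment data from above. It contrasts the default with join='inner', which keeps only the labels present in both frames (here just zip code 2860).

```python
import pandas as pd

# Same toy data as the population/unemployment example above
population = pd.DataFrame(
    {'2010 Census Population': [322, 130, 40038, 45199]},
    index=pd.Index([57538, 59916, 37660, 2860], name='Zip Code ZCTA'),
)
unemployment = pd.DataFrame(
    {'unemployment': [0.11, 0.02, 0.33, 0.07], 'participants': [34447, 4800, 42, 4310]},
    index=pd.Index([2860, 46167, 1097, 80808], name='Zip'),
)

# Default join='outer': union of row labels, NaN where a frame has no row
outer = pd.concat([population, unemployment], axis=1, sort=True)

# join='inner': keep only row labels present in every frame
inner = pd.concat([population, unemployment], axis=1, join='inner')

print(outer.shape, inner.shape)
print(inner)
```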


| del pop1_data, pop2_data, pop1, pop2, pop_data, emp_data, population, unemployment

|

Exercises

Appending DataFrames with ignore_index

In this exercise, you’ll use the Baby Names Dataset (from data.gov) again. This time, both DataFrames names_1981 and names_1881 are loaded without specifying an Index column (so the default Indexes for both are RangeIndexes).

You’ll combine the two into a DataFrame combined_names. The exercise was written for the DataFrame .append() method; because .append() was removed in pandas 2.0, the code below uses pd.concat() instead. To distinguish rows from the original two DataFrames, you’ll add a 'year' column to each with the year (1881 or 1981 in this case). In addition, you’ll specify ignore_index=True so that the index values are not used along the concatenation axis. The resulting axis will instead be labeled 0, 1, ..., n-1. This is useful when the concatenation axis does not carry meaningful indexing information.

Instructions

- Create a 'year' column in the DataFrames names_1881 and names_1981, with values of 1881 and 1981, respectively. Recall that assigning a scalar value to a DataFrame column broadcasts that value throughout.

- Create a new DataFrame called combined_names by appending the rows of names_1981 underneath the rows of names_1881. Specify the keyword argument ignore_index=True to make a new RangeIndex of unique integers for each row.

- Print the shapes of all three DataFrames. This has been done for you.

- Extract all rows from combined_names that have the name 'Morgan'. To do this, use the .loc[] accessor with an appropriate filter. The relevant column of combined_names here is 'name'.
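The sketch below shows what ignore_index=True changes. It uses tiny stand-ins for names_1881 and names_1981, built from rows that appear in this section's output; the full files are not reproduced.

```python
import pandas as pd

# Tiny stand-ins built from rows shown in this section's output
names_1881 = pd.DataFrame({'name': ['Morgan'], 'gender': ['M'], 'count': [23]})
names_1981 = pd.DataFrame({'name': ['Jennifer', 'Morgan', 'Morgan'],
                           'gender': ['F', 'F', 'M'],
                           'count': [57032, 1769, 766]})

# Assigning a scalar broadcasts it down the new column
names_1881['year'] = 1881
names_1981['year'] = 1981

# Without ignore_index the original RangeIndex labels are kept (0 repeats)
kept = pd.concat([names_1881, names_1981])
print(list(kept.index))   # [0, 0, 1, 2]

# With ignore_index=True the result gets a fresh 0..n-1 RangeIndex
fresh = pd.concat([names_1881, names_1981], ignore_index=True)
print(list(fresh.index))  # [0, 1, 2, 3]

# Boolean filter with .loc, as in the exercise
morgan = fresh.loc[fresh['name'] == 'Morgan']
print(morgan)
```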


| names_1881 = pd.read_csv(baby_1881_file, header=None, names=['name', 'gender', 'count'])

names_1981 = pd.read_csv(baby_1981_file, header=None, names=['name', 'gender', 'count'])

|

| name | gender | count |

|---|

| 0 | Jennifer | F | 57032 |

|---|

| 1 | Jessica | F | 42519 |

|---|

| 2 | Amanda | F | 34370 |

|---|

| 3 | Sarah | F | 28162 |

|---|

| 4 | Melissa | F | 28003 |

|---|


| # Add 'year' column to names_1881 and names_1981

names_1881['year'] = 1881

names_1981['year'] = 1981

# Append names_1981 after names_1881 with ignore_index=True: combined_names

combined_names = pd.concat([names_1881, names_1981], ignore_index=True, sort=False)

# Print shapes of names_1981, names_1881, and combined_names

print(names_1981.shape)

print(names_1881.shape)

print(combined_names.shape)

# Print all rows that contain the name 'Morgan'

combined_names.loc[combined_names['name'] == 'Morgan']

|


| (19455, 4)

(1935, 4)

(21390, 4)

|

| name | gender | count | year |

|---|

| 1283 | Morgan | M | 23 | 1881 |

|---|

| 2096 | Morgan | F | 1769 | 1981 |

|---|

| 14390 | Morgan | M | 766 | 1981 |

|---|

Concatenating pandas DataFrames along column axis

The function pd.concat() can concatenate DataFrames horizontally as well as vertically (vertical is the default). To make the DataFrames stack horizontally, you have to specify the keyword argument axis=1 or axis='columns'.

In this exercise, you’ll use weather data with maximum and mean daily temperatures sampled at different rates (quarterly versus monthly). You’ll concatenate the two DataFrames side by side, aligning rows on their shared Month index. Where rows are missing in the coarser (quarterly) DataFrame, null values are inserted in the concatenated DataFrame. This corresponds to an outer join, which you will explore in more detail in later exercises.

The files 'quarterly_max_temp.csv' and 'monthly_mean_temp.csv' have been pre-loaded into the DataFrames weather_max and weather_mean respectively, and pandas has been imported as pd.

Instructions

- Create a new DataFrame called weather by concatenating the DataFrames weather_max and weather_mean horizontally.

  - Pass the DataFrames to pd.concat() as a list and specify the keyword argument axis=1 to stack them horizontally.

- Print the new DataFrame weather.


| weather_mean_data = {'Mean TemperatureF': [53.1, 70., 34.93548387, 28.71428571, 32.35483871, 72.87096774, 70.13333333, 35., 62.61290323, 39.8, 55.4516129 , 63.76666667],

'Month': ['Apr', 'Aug', 'Dec', 'Feb', 'Jan', 'Jul', 'Jun', 'Mar', 'May', 'Nov', 'Oct', 'Sep']}

weather_max_data = {'Max TemperatureF': [68, 89, 91, 84], 'Month': ['Jan', 'Apr', 'Jul', 'Oct']}

weather_mean = pd.DataFrame.from_dict(weather_mean_data)

weather_mean.set_index('Month', inplace=True, drop=True)

weather_max = pd.DataFrame.from_dict(weather_max_data)

weather_max.set_index('Month', inplace=True, drop=True)

|

| Max TemperatureF |

|---|

| Month | |

|---|

| Jan | 68 |

|---|

| Apr | 89 |

|---|

| Jul | 91 |

|---|

| Oct | 84 |

|---|

| Mean TemperatureF |

|---|

| Month | |

|---|

| Apr | 53.100000 |

|---|

| Aug | 70.000000 |

|---|

| Dec | 34.935484 |

|---|

| Feb | 28.714286 |

|---|

| Jan | 32.354839 |

|---|

| Jul | 72.870968 |

|---|

| Jun | 70.133333 |

|---|

| Mar | 35.000000 |

|---|

| May | 62.612903 |

|---|

| Nov | 39.800000 |

|---|

| Oct | 55.451613 |

|---|

| Sep | 63.766667 |

|---|


| # Concatenate weather_max and weather_mean horizontally: weather

weather = pd.concat([weather_max, weather_mean], axis=1, sort=True)

# Print weather

weather

|

| Max TemperatureF | Mean TemperatureF |

|---|

| Month | | |

|---|

| Apr | 89.0 | 53.100000 |

|---|

| Aug | NaN | 70.000000 |

|---|

| Dec | NaN | 34.935484 |

|---|

| Feb | NaN | 28.714286 |

|---|

| Jan | 68.0 | 32.354839 |

|---|

| Jul | 91.0 | 72.870968 |

|---|

| Jun | NaN | 70.133333 |

|---|

| Mar | NaN | 35.000000 |

|---|

| May | NaN | 62.612903 |

|---|

| Nov | NaN | 39.800000 |

|---|

| Oct | 84.0 | 55.451613 |

|---|

| Sep | NaN | 63.766667 |

|---|

Reading multiple files to build a DataFrame

It is often convenient to build a large DataFrame by parsing many files as DataFrames and concatenating them all at once. You’ll do this here with three files, but, in principle, this approach can be used to combine data from dozens or hundreds of files.

Here, you’ll work with DataFrames compiled from The Guardian’s Olympic medal dataset.

pandas has been imported as pd and two lists have been pre-loaded: An empty list called medals, and medal_types, which contains the strings 'bronze', 'silver', and 'gold'.

Instructions

- Iterate over medal_types in the for loop.

- Inside the for loop:

  - Create file_name using string interpolation with the loop variable medal. This has been done for you. The expression "%s_top5.csv" % medal evaluates to a string with the value of medal replacing %s in the format string. The code below uses the equivalent f-string with the local file names summer_olympics_<medal>_top5.csv.

  - Create the list of column names called columns. This has been done for you.

  - Read file_name into a DataFrame called medal_df. Specify the keyword arguments header=0, index_col='Country', and names=columns to get the correct row and column Indexes.

  - Append medal_df to medals using the list .append() method. The code below collects them in medal_list.

- Concatenate the list of DataFrames horizontally (using axis='columns') to create a single DataFrame called medals. Print it in its entirety.
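The sketch below is a self-contained version of the read-in-a-loop-then-concatenate pattern. It writes three small hypothetical CSV files to a temporary directory, each with two countries and values copied from the medal tables in this notebook, so it runs without the course data. The temporary folder stands in for the notebook's data path.

```python
import tempfile
from pathlib import Path

import pandas as pd

# Hypothetical two-country versions of the three files (totals copied from this notebook)
csv_text = {
    'bronze': 'Country,Total\nUnited States,1052.0\nFrance,475.0\n',
    'silver': 'Country,Total\nUnited States,1195.0\nItaly,394.0\n',
    'gold': 'Country,Total\nUnited States,2088.0\nItaly,460.0\n',
}

with tempfile.TemporaryDirectory() as tmp:
    folder = Path(tmp)
    for medal, text in csv_text.items():
        (folder / f'summer_olympics_{medal}_top5.csv').write_text(text)

    medal_list = []
    for medal in ['bronze', 'silver', 'gold']:
        file_name = folder / f'summer_olympics_{medal}_top5.csv'
        # names= replaces the header row so the value column is named after the medal
        medal_df = pd.read_csv(file_name, header=0, index_col='Country', names=['Country', medal])
        medal_list.append(medal_df)

    # axis='columns' places the frames side by side, aligned on Country
    medals = pd.concat(medal_list, axis='columns', sort=True)

print(medals)
```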


| top_five = data.glob('*_top5.csv')

for file in top_five:

print(file)

|


| D:\users\trenton\Dropbox\PythonProjects\DataCamp\data\merging-dataframes-with-pandas\summer_olympics_bronze_top5.csv

D:\users\trenton\Dropbox\PythonProjects\DataCamp\data\merging-dataframes-with-pandas\summer_olympics_gold_top5.csv

D:\users\trenton\Dropbox\PythonProjects\DataCamp\data\merging-dataframes-with-pandas\summer_olympics_silver_top5.csv

|


| medal_types = ['bronze', 'silver', 'gold']

medal_list = list()

for medal in medal_types:

    # Create the file name: file_name

    file_name = data / f'summer_olympics_{medal}_top5.csv'

    # Create list of column names: columns

    columns = ['Country', medal]

    # Read file_name into a DataFrame: medal_df

    medal_df = pd.read_csv(file_name, header=0, index_col='Country', names=columns)

    # Append medal_df to medal_list

    medal_list.append(medal_df)

# Concatenate medals horizontally: medals

medals = pd.concat(medal_list, axis='columns', sort=True)

# Print medals

medals

|

| bronze | silver | gold |

|---|

| Country | | | |

|---|

| France | 475.0 | 461.0 | NaN |

|---|

| Germany | 454.0 | NaN | 407.0 |

|---|

| Italy | NaN | 394.0 | 460.0 |

|---|

| Soviet Union | 584.0 | 627.0 | 838.0 |

|---|

| United Kingdom | 505.0 | 591.0 | 498.0 |

|---|

| United States | 1052.0 | 1195.0 | 2088.0 |

|---|


| del names_1881, names_1981, combined_names, weather_mean_data, weather_max_data, weather_mean, weather_max, weather, top_five, medals, medal_list

|

Concatenation, keys & MultiIndexes

Loading rainfall data


| import pandas as pd

file1 = 'q1_rainfall_2013.csv'

rain2013 = pd.read_csv(file1, index_col='Month', parse_dates=True)

file2 = 'q1_rainfall_2014.csv'

rain2014 = pd.read_csv(file2, index_col='Month', parse_dates=True)

|


| rain_2013_data = {'Month': ['Jan', 'Feb', 'Mar'], 'Precipitation': [0.096129, 0.067143, 0.061613]}

rain_2014_data = {'Month': ['Jan', 'Feb', 'Mar'], 'Precipitation': [0.050323, 0.082143, 0.070968]}

rain2013 = pd.DataFrame.from_dict(rain_2013_data)

rain2013.set_index('Month', inplace=True)

rain2014 = pd.DataFrame.from_dict(rain_2014_data)

rain2014.set_index('Month', inplace=True)

|

Examining rainfall data

| Precipitation |

|---|

| Month | |

|---|

| Jan | 0.096129 |

|---|

| Feb | 0.067143 |

|---|

| Mar | 0.061613 |

|---|

| Precipitation |

|---|

| Month | |

|---|

| Jan | 0.050323 |

|---|

| Feb | 0.082143 |

|---|

| Mar | 0.070968 |

|---|

Concatenating rows


| pd.concat([rain2013, rain2014], axis=0)

|

| Precipitation |

|---|

| Month | |

|---|

| Jan | 0.096129 |

|---|

| Feb | 0.067143 |

|---|

| Mar | 0.061613 |

|---|

| Jan | 0.050323 |

|---|

| Feb | 0.082143 |

|---|

| Mar | 0.070968 |

|---|

Using multi-index on rows


| rain1314 = pd.concat([rain2013, rain2014], keys=[2013, 2014], axis=0)

rain1314

|

| | Precipitation |

|---|

| Month | |

|---|

| 2013 | Jan | 0.096129 |

|---|

| Feb | 0.067143 |

|---|

| Mar | 0.061613 |

|---|

| 2014 | Jan | 0.050323 |

|---|

| Feb | 0.082143 |

|---|

| Mar | 0.070968 |

|---|

Accessing a multi-index

| Precipitation |

|---|

| Month | |

|---|

| Jan | 0.050323 |

|---|

| Feb | 0.082143 |

|---|

| Mar | 0.070968 |

|---|
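The table under 'Accessing a multi-index' matches the 2014 block of rain1314. The exact call is not shown. The sketch below assumes it was an outer-level selection such as rain1314.loc[2014] and rebuilds the frames from the rainfall values above.

```python
import pandas as pd

# Rainfall values copied from the tables above
months = pd.Index(['Jan', 'Feb', 'Mar'], name='Month')
rain2013 = pd.DataFrame({'Precipitation': [0.096129, 0.067143, 0.061613]}, index=months)
rain2014 = pd.DataFrame({'Precipitation': [0.050323, 0.082143, 0.070968]}, index=months)

# keys= adds an outer index level recording which frame each row came from
rain1314 = pd.concat([rain2013, rain2014], keys=[2013, 2014], axis=0)

# Selecting an outer label drops that level and returns the inner frame
print(rain1314.loc[2014])

# A single value needs a label for both levels, passed as a tuple
print(rain1314.loc[(2014, 'Feb'), 'Precipitation'])
```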

Concatenating columns


| rain1314 = pd.concat([rain2013, rain2014], axis='columns')

rain1314

|

| Precipitation | Precipitation |

|---|

| Month | | |

|---|

| Jan | 0.096129 | 0.050323 |

|---|

| Feb | 0.067143 | 0.082143 |

|---|

| Mar | 0.061613 | 0.070968 |

|---|

Using a multi-index on columns


| rain1314 = pd.concat([rain2013, rain2014], keys=[2013, 2014], axis='columns')

rain1314

|

| 2013 | 2014 |

|---|

| Precipitation | Precipitation |

|---|

| Month | | |

|---|

| Jan | 0.096129 | 0.050323 |

|---|

| Feb | 0.067143 | 0.082143 |

|---|

| Mar | 0.061613 | 0.070968 |

|---|

| Precipitation |

|---|

| Month | |

|---|

| Jan | 0.096129 |

|---|

| Feb | 0.067143 |

|---|

| Mar | 0.061613 |

|---|

pd.concat() with dict


| rain_dict = {2013: rain2013, 2014: rain2014}

rain1314 = pd.concat(rain_dict, axis='columns')

rain1314

|

| 2013 | 2014 |

|---|

| Precipitation | Precipitation |

|---|

| Month | | |

|---|

| Jan | 0.096129 | 0.050323 |

|---|

| Feb | 0.067143 | 0.082143 |

|---|

| Mar | 0.061613 | 0.070968 |

|---|
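The untitled single-column table after the 'Using a multi-index on columns' example matches the 2013 block of rain1314, which is what selecting an outer column label returns. The exact call is not shown. The sketch below assumes rain1314[2013]. It also confirms that the dict form of pd.concat() produces the same frame as passing keys= explicitly.

```python
import pandas as pd

# Rainfall values copied from the tables above
months = pd.Index(['Jan', 'Feb', 'Mar'], name='Month')
rain2013 = pd.DataFrame({'Precipitation': [0.096129, 0.067143, 0.061613]}, index=months)
rain2014 = pd.DataFrame({'Precipitation': [0.050323, 0.082143, 0.070968]}, index=months)

# keys= on the column axis puts a year label above each 'Precipitation' column
by_year = pd.concat([rain2013, rain2014], keys=[2013, 2014], axis='columns')

# Selecting an outer column label returns that year's sub-frame
print(by_year[2013])

# With a dict, the dict keys play the role of keys=
from_dict = pd.concat({2013: rain2013, 2014: rain2014}, axis='columns')
print(from_dict.equals(by_year))
```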


| del rain_2013_data, rain_2014_data, rain2013, rain2014, rain1314

|

Exercises

Concatenating vertically to get MultiIndexed rows

When stacking a sequence of DataFrames vertically, it is sometimes desirable to construct a MultiIndex that records which DataFrame each row came from. You can do this by specifying the keys parameter in the call to pd.concat(), which generates a hierarchical index with the labels from keys as the outermost index level. As a result, you don’t have to rename the columns of each DataFrame as you load it; you only need to specify the index column.

Here, you’ll continue working with DataFrames compiled from The Guardian’s Olympic medal dataset. Once again, pandas has been imported as pd and two lists have been pre-loaded: An empty list called medals, and medal_types, which contains the strings 'bronze', 'silver', and 'gold'.

Instructions

- Within the for loop:

  - Read file_name into a DataFrame called medal_df. Specify the index to be 'Country'.

  - Append medal_df to medals.

- Concatenate the list of DataFrames into a single DataFrame called medals. Be sure to use the keyword argument keys=['bronze', 'silver', 'gold'] to create a vertically stacked DataFrame with a MultiIndex.

- Print the new DataFrame medals. This has been done for you.
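With keys=, the outer index level records which file each row came from. The sketch below uses two-country stand-ins for the medal files, with values copied from the tables above. It shows selection on each level: .loc on the outer medal label and .xs() on the inner Country level.

```python
import pandas as pd

# Two-country stand-ins for the three medal files (totals copied from the tables above)
countries = pd.Index(['United States', 'Soviet Union'], name='Country')
bronze = pd.DataFrame({'Total': [1052.0, 584.0]}, index=countries)
silver = pd.DataFrame({'Total': [1195.0, 627.0]}, index=countries)
gold = pd.DataFrame({'Total': [2088.0, 838.0]}, index=countries)

# keys= labels each block of rows with its medal type (outer index level)
medals = pd.concat([bronze, silver, gold], keys=['bronze', 'silver', 'gold'])

# Outer level: every row that came from one file
print(medals.loc['gold'])

# Inner level: one country across all medal types
print(medals.xs('United States', level='Country'))
```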


| medal_types = ['bronze', 'silver', 'gold']

medal_list = list()

for medal in medal_types:

    # Create the file name: file_name

    file_name = data / f'summer_olympics_{medal}_top5.csv'

    # Read file_name into a DataFrame: medal_df

    medal_df = pd.read_csv(file_name, index_col='Country')

    # Append medal_df to medal_list

    medal_list.append(medal_df)

# Concatenate medals: medals

medals = pd.concat(medal_list, keys=['bronze', 'silver', 'gold'])

# Print medals in entirety

print(medals)

|


| Total

Country

bronze United States 1052.0