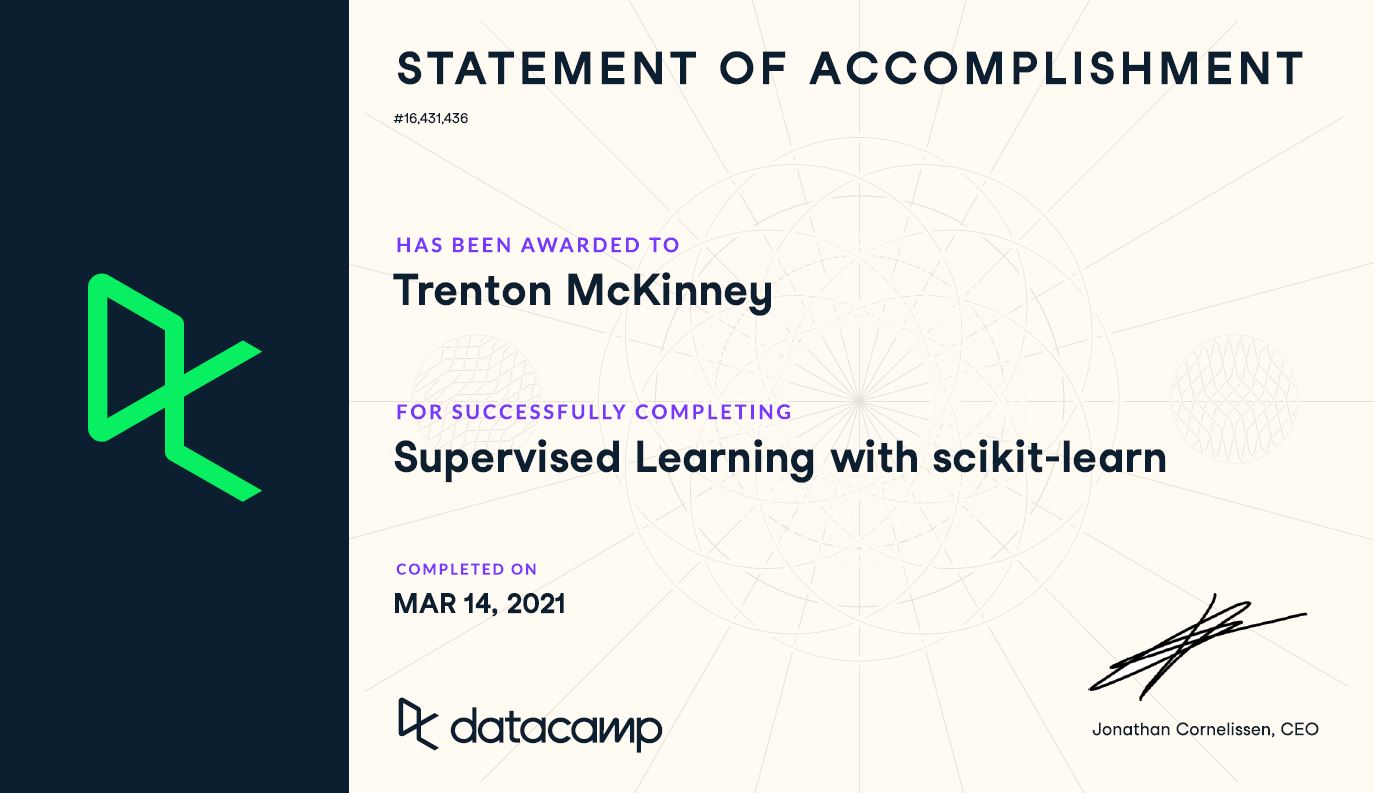

Supervised Learning with scikit-learn

- Course: DataCamp: Supervised Learning with scikit-learn

- This notebook was created as a reproducible reference.

- The material is from the course

- The course website uses

scikit-learn v0.19.2,pandas v0.19.2, andnumpy v1.17.4

- The course website uses

- If you find the content beneficial, consider a DataCamp Subscription.

- I added a function (

create_dir_save_file) to automatically download and save the required data (data/2020-10-14_supervised_learning_sklearn) and image (Images/2020-10-14_supervised_learning_sklearn) files. - Package Versions:

- Pandas version: 2.2.1

- Matplotlib version: 3.8.1

- Seaborn version: 0.13.2

- SciPy version: 1.12.0

- Scikit-Learn version: 1.3.2

- NumPy version: 1.26.4

Synopsis

This post covers the essentials of supervised machine learning using scikit-learn in Python. Designed for those looking to enhance their understanding of predictive modeling and data science, the guide offers practical insights and hands-on examples with real-world datasets.

Key Highlights:

- Introduction to Supervised Learning: Learn the fundamentals of supervised learning and its various applications.

- Working with Real-world Data: Gain skills in handling and preparing different types of data for effective modeling.

- Building Predictive Models: Detailed guidance on creating and training predictive models using scikit-learn.

- Model Tuning and Evaluation: Explore methods to fine-tune model parameters and evaluate their performance accurately.

- Practical Examples: Engage with comprehensive examples and case studies that illustrate the concepts discussed.

This guide aims to equip you with the necessary tools to implement supervised learning algorithms and make data-driven decisions effectively.

Course Description

Machine learning is the field that teaches machines and computers to learn from existing data to make predictions on new data: Will a tumor be benign or malignant? Which of your customers will take their business elsewhere? Is a particular email spam? In this course, you’ll learn how to use Python to perform supervised learning, an essential component of machine learning. You’ll learn how to build predictive models, tune their parameters, and determine how well they will perform with unseen data—all while using real world datasets. You’ll be using scikit-learn, one of the most popular and user-friendly machine learning libraries for Python.

Imports

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

import pandas as pd

import numpy as np

from pprint import pprint as pp

from itertools import combinations

import requests

from pathlib import Path

import seaborn as sns

import matplotlib.pyplot as plt

from scipy.stats import randint

from matplotlib.colors import ListedColormap

from sklearn.datasets import load_iris, load_digits

from sklearn.neighbors import KNeighborsClassifier

from sklearn.model_selection import train_test_split, cross_val_score, GridSearchCV, RandomizedSearchCV

from sklearn.linear_model import LinearRegression, Ridge, Lasso, LogisticRegression, ElasticNet

from sklearn.metrics import mean_squared_error, confusion_matrix, classification_report, roc_curve, precision_recall_curve, roc_auc_score, accuracy_score

from sklearn.tree import DecisionTreeClassifier

from sklearn.pipeline import make_pipeline

from sklearn.preprocessing import StandardScaler

from sklearn.impute import SimpleImputer

from sklearn.pipeline import Pipeline

from sklearn.svm import SVC

from sklearn.preprocessing import scale, StandardScaler

1

2

import warnings

warnings.simplefilter("ignore")

Configuration Options

1

2

3

4

5

pd.set_option('display.max_columns', 200)

pd.set_option('display.max_rows', 300)

pd.set_option('display.expand_frame_repr', True)

# plt.style.use('ggplot')

plt.rcParams["patch.force_edgecolor"] = True

Functions

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

def create_dir_save_file(dir_path: Path, url: str):

"""

Check if the path exists and create it if it does not.

Check if the file exists and download it if it does not.

"""

if not dir_path.parents[0].exists():

dir_path.parents[0].mkdir(parents=True)

print(f'Directory Created: {dir_path.parents[0]}')

else:

print('Directory Exists')

if not dir_path.exists():

r = requests.get(url, allow_redirects=True)

open(dir_path, 'wb').write(r.content)

print(f'File Created: {dir_path.name}')

else:

print('File Exists')

1

2

data_dir = Path('data/2020-10-14_supervised_learning_sklearn')

images_dir = Path('Images/2020-10-14_supervised_learning_sklearn')

Datasets

1

2

3

4

5

6

7

file_mpg = 'https://assets.datacamp.com/production/repositories/628/datasets/3781d588cf7b04b1e376c7e9dda489b3e6c7465b/auto.csv'

file_housing = 'https://assets.datacamp.com/production/repositories/628/datasets/021d4b9e98d0f9941e7bfc932a5787b362fafe3b/boston.csv'

file_diabetes = 'https://assets.datacamp.com/production/repositories/628/datasets/444cdbf175d5fbf564b564bd36ac21740627a834/diabetes.csv'

file_gapminder = 'https://assets.datacamp.com/production/repositories/628/datasets/a7e65287ebb197b1267b5042955f27502ec65f31/gm_2008_region.csv'

file_voting = 'https://assets.datacamp.com/production/repositories/628/datasets/35a8c54b79d559145bbeb5582de7a6169c703136/house-votes-84.csv'

file_wwine = 'https://assets.datacamp.com/production/repositories/628/datasets/2d9076606fb074c66420a36e06d7c7bc605459d4/white-wine.csv'

file_rwine = 'https://assets.datacamp.com/production/repositories/628/datasets/013936d2700e2d00207ec42100d448c23692eb6f/winequality-red.csv'

1

2

3

4

5

6

7

8

datasets = [file_mpg, file_housing, file_diabetes, file_gapminder, file_voting, file_wwine, file_rwine]

data_paths = list()

for data in datasets:

file_name = data.split('/')[-1].replace('?raw=true', '')

data_path = data_dir / file_name

create_dir_save_file(data_path, data)

data_paths.append(data_path)

1

2

3

4

5

6

7

8

9

10

11

12

13

14

Directory Exists

File Exists

Directory Exists

File Exists

Directory Exists

File Exists

Directory Exists

File Exists

Directory Exists

File Exists

Directory Exists

File Exists

Directory Exists

File Exists

DataFrames

Classification

In this chapter, you will be introduced to classification problems and learn how to solve them using supervised learning techniques. And you’ll apply what you learn to a political dataset, where you classify the party affiliation of United States congressmen based on their voting records.

Supervised learning

What is machine learning?

- The art and science of giving computers the ability to learn to make decisions from data without being explicitly programmed.

- Examples:

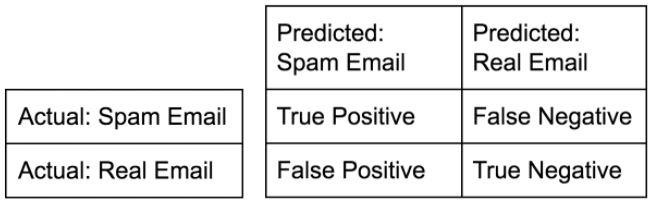

- Your computer can learn to predict whether an email is spam or not spam, given its content and sender.

- Your computer can learn to cluster, say, Wikipedia entries, into different categories based on the words they contain.

- It could then assign any new Wikipedia article to one of the existing clusters.

- Note that, in the first example, we are trying to predict a particular class label, the is, spam or not spam.

- In the second example, there is not such label.

- When there are labels present, we call it supervised learning.

- Where there are not labels present, we call it unsupervised learning.

Unsupervised learning

- In essence, is the machine learning task of uncovering hidden patterns and structures from unlabeled data.

- Example:

- A business may wish to group its customers into distinct categories (Clustering) based on their purchasing behavior without knowing in advance what these categories might be.

- This is known as clustering, one branch of unsupervised learning.

- A business may wish to group its customers into distinct categories (Clustering) based on their purchasing behavior without knowing in advance what these categories might be.

Reinforcement learning

- Machines or software agents interact with an environment.

- Reinforcement learning agents are able to automatically figure out how to optimize their behavior given a system of rewards and punishments.

- Reinforcement learning draws inspiration from behavioral psychology and has applications in many fields, such as, economics, genetics, as well as game playing.

- In 2016, reinforcement learning was used to train Google DeepMind’s AlphaGo, which was the first computer program to beat the world champion in Go.

Supervised learning

- In supervised learning, we have several data points or samples, described using predictor variables or features and a target variable.

- Out data is commonly represented in a tables structure such as the one below, in which there is a row for data point and a column for each feature.

1 2 3 4 5 6 7 8

| | Predictor Variables | Target | | | sepal_length | sepal_width | petal_length | petal_width | species | |---:|---------------:|--------------:|---------------:|--------------:|:----------| | 0 | 5.1 | 3.5 | 1.4 | 0.2 | setosa | | 1 | 4.9 | 3 | 1.4 | 0.2 | setosa | | 2 | 4.7 | 3.2 | 1.3 | 0.2 | setosa | | 3 | 4.6 | 3.1 | 1.5 | 0.2 | setosa | | 4 | 5 | 3.6 | 1.4 | 0.2 | setosa |

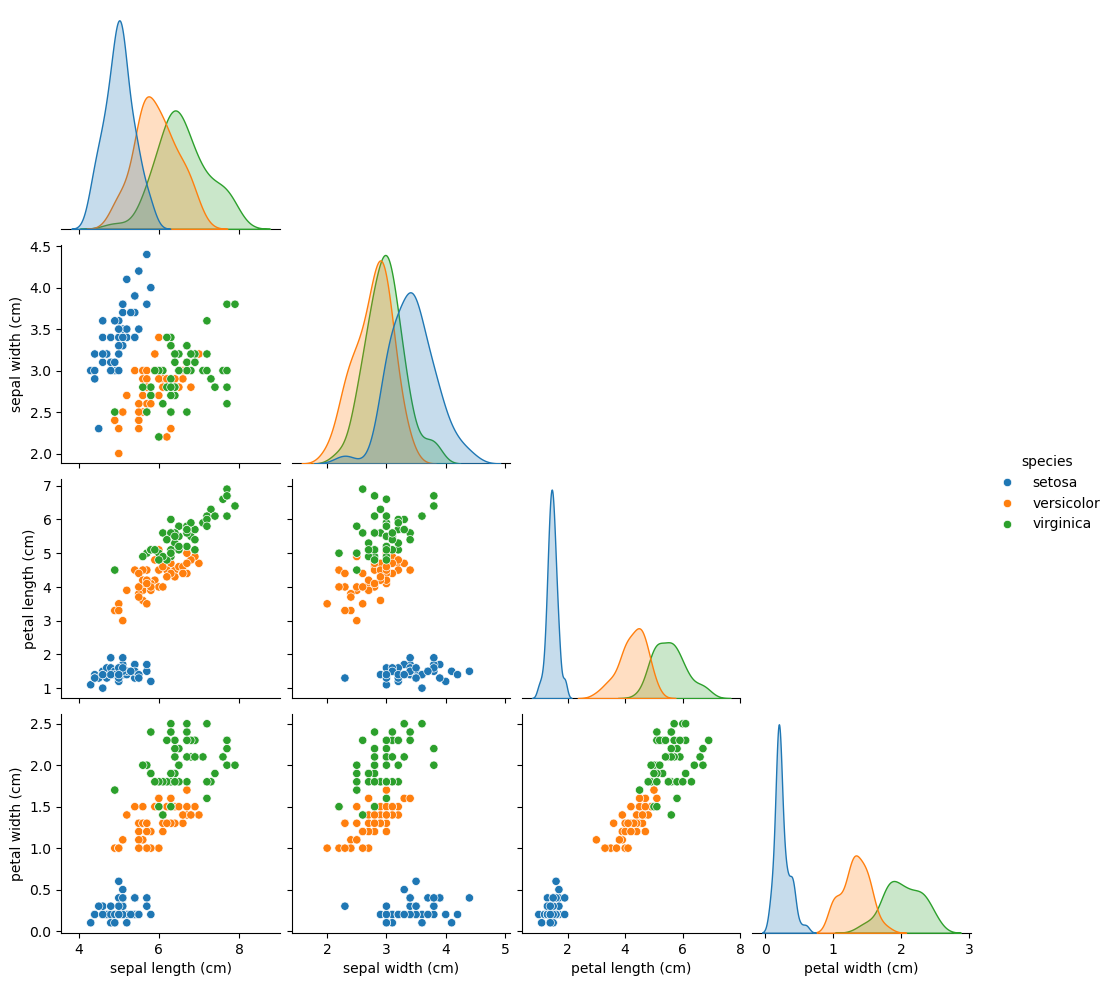

- Here, we see the iris dataset: each row represents measurements of a different flower and each column is a particular kind of measurement, like the width and length of a certain part of the flower.

- The aim of supervised learning is to build a model that is able to predict the target variable, here, the particular species of a flower, given the predictor variables, the physical measurements.

- If the target variable consists of categories, like

'click'or'no click','spam'or'not spam', or different species of flowers, we call the learning task, classification. - Alternatively, if the target is a continuously varying variable, the price of a house, it is a regression task.

- This chapter will focus on classification, the following, on regression.

- The goal of supervised learning is frequently to either automate a time-consuming, or expensive, manual task, such as a doctor’s diagnosis, or to make predictions about the future, say whether a customer will click on an add, or not.

- For supervised learning, you need labeled data and there are many ways to go get it: you can get historical data, which already has labels that you are interested in; you can perform experiments to get labeled data, such as A/B-testing to see how many clicks you get; or you can also use crowd-sourced labeling data, like reCAPTCHA does for text recognition.

- In any case, the goal is to learn from data, for which the right output is known, so that we can make predictions on new data from which we don’t know the output.

Supervised learning in python

- There are many ways to perform supervised learning in Python.

- In this course, we will use

scikit-learn, orsklearn, one of the most popular and use-friendly machine learning libraries for Python. - It also integrate very well with the

SciPystack, including libraries such asNumPy. - There are a number of other ML libraries out there, such as

TensorFlowandkeras, which are well worth checking out, once you get the basics down.

Naming conventions

- A note on naming conventions: out in the wild, you will find that what we call a feature, others may call a predictor variable, or independent variable, and what we call a target variable, others may call dependent variable, or response variable.

Which of these is a classification problem?

Once you decide to leverage supervised machine learning to solve a new problem, you need to identify whether your problem is better suited to classification or regression. This exercise will help you develop your intuition for distinguishing between the two.

Provided below are 4 example applications of machine learning. Which of them is a supervised classification problem?

Answer the question

- Using labeled financial data to predict whether the value of a stock will go up or go down next week.

- Exactly! In this example, there are two discrete, qualitative outcomes: the stock market going up, and the stock market going down. This can be represented using a binary variable, and is an application perfectly suited for classification.

Using labeled housing price data to predict the price of a new house based on various features.- Incorrect. The price of a house is a quantitative variable. This is not a classification problem.

Using unlabeled data to cluster the students of an online education company into different categories based on their learning styles.- Incorrect. When using unlabeled data, we enter the territory of unsupervised learning.

Using labeled financial data to predict what the value of a stock will be next week.- Incorrect. The value of a stock is a quantitative value. This is not a classification problem.

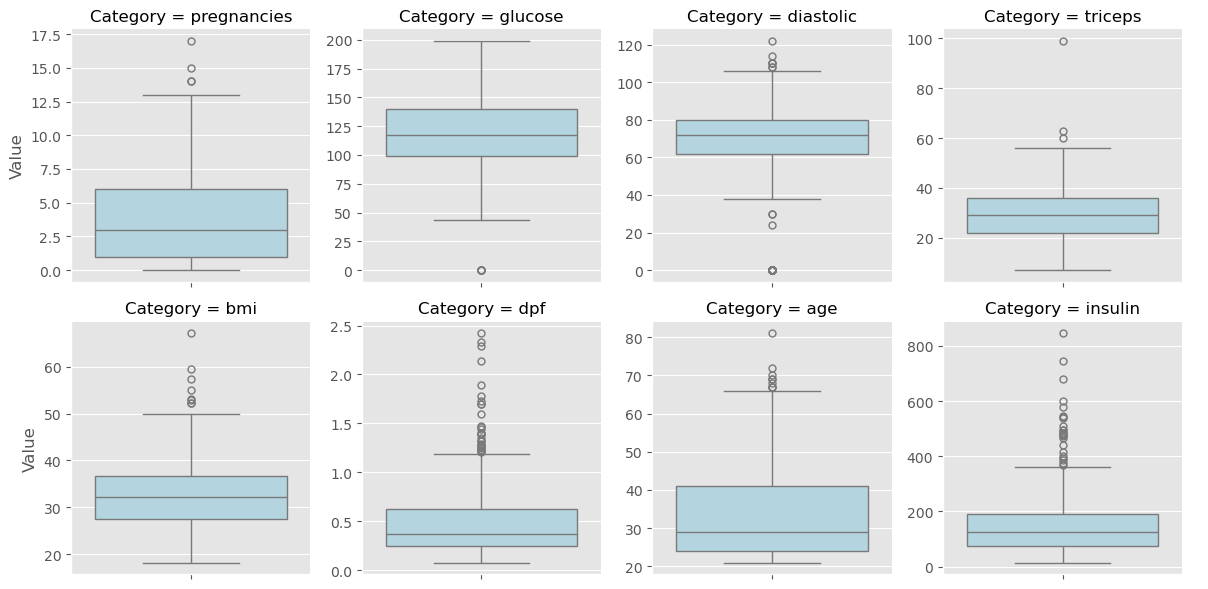

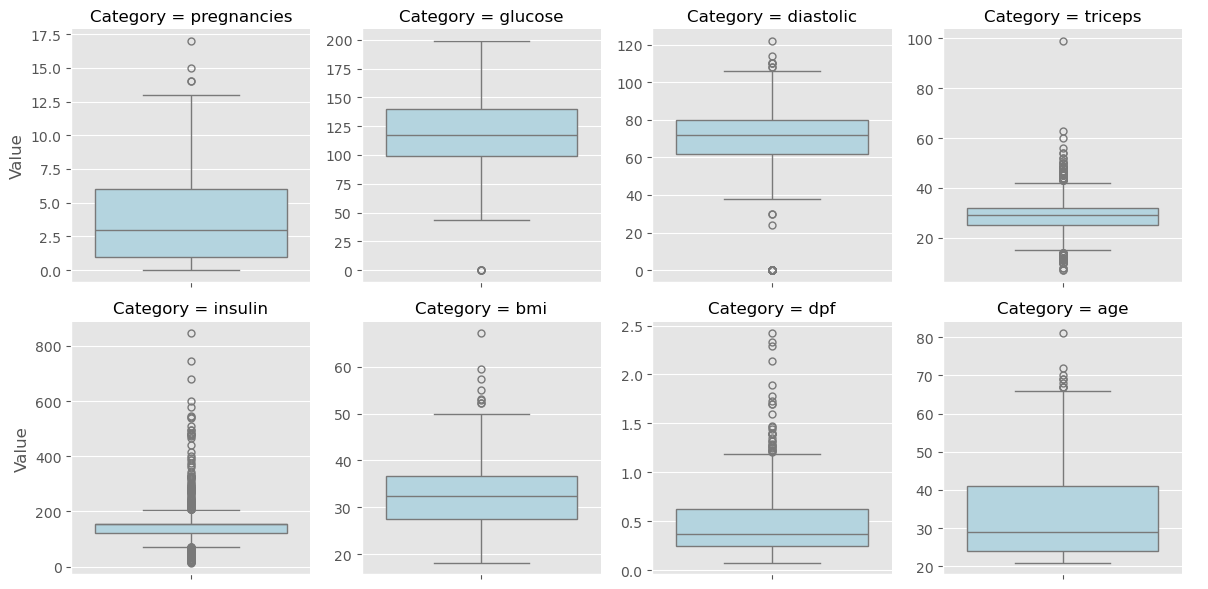

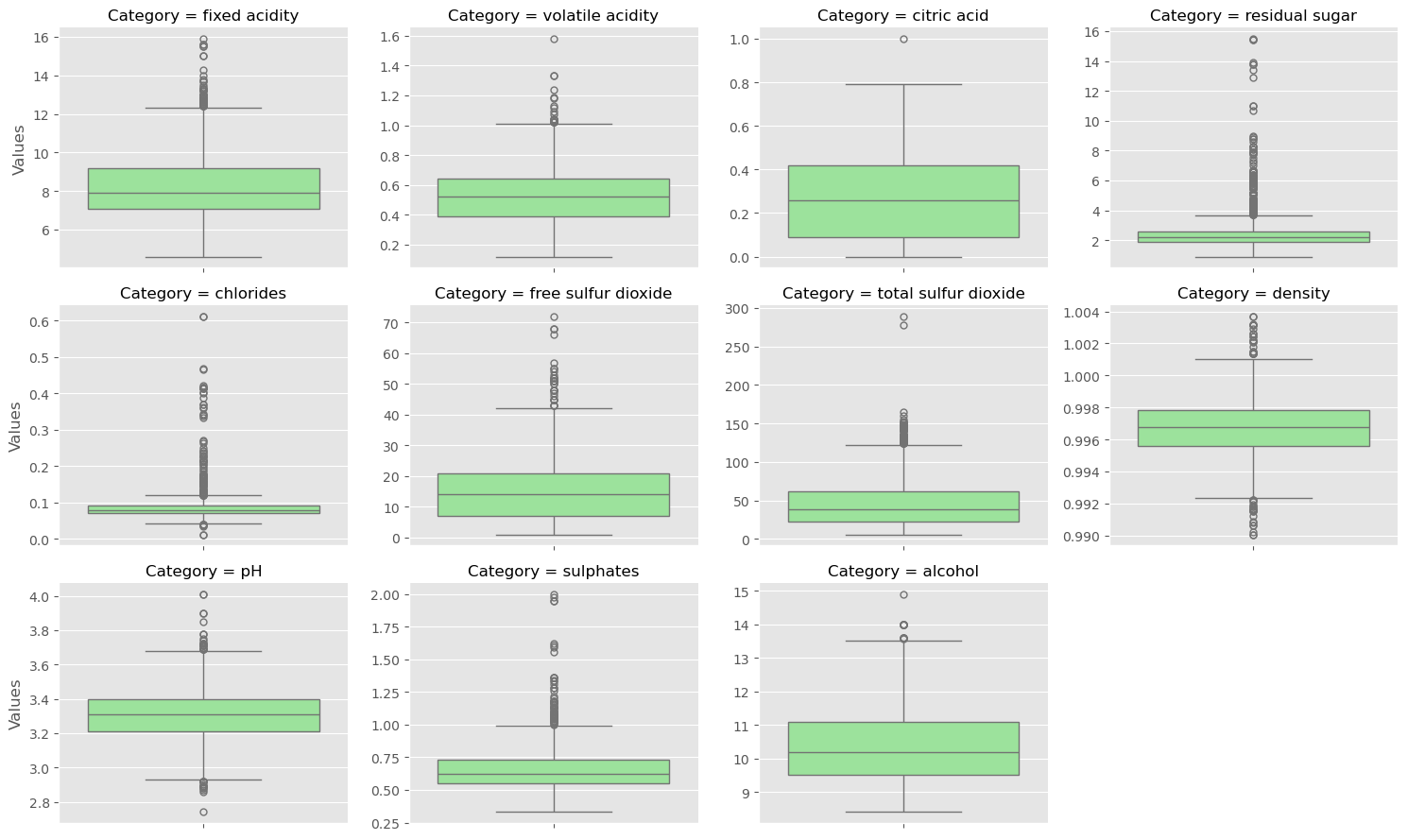

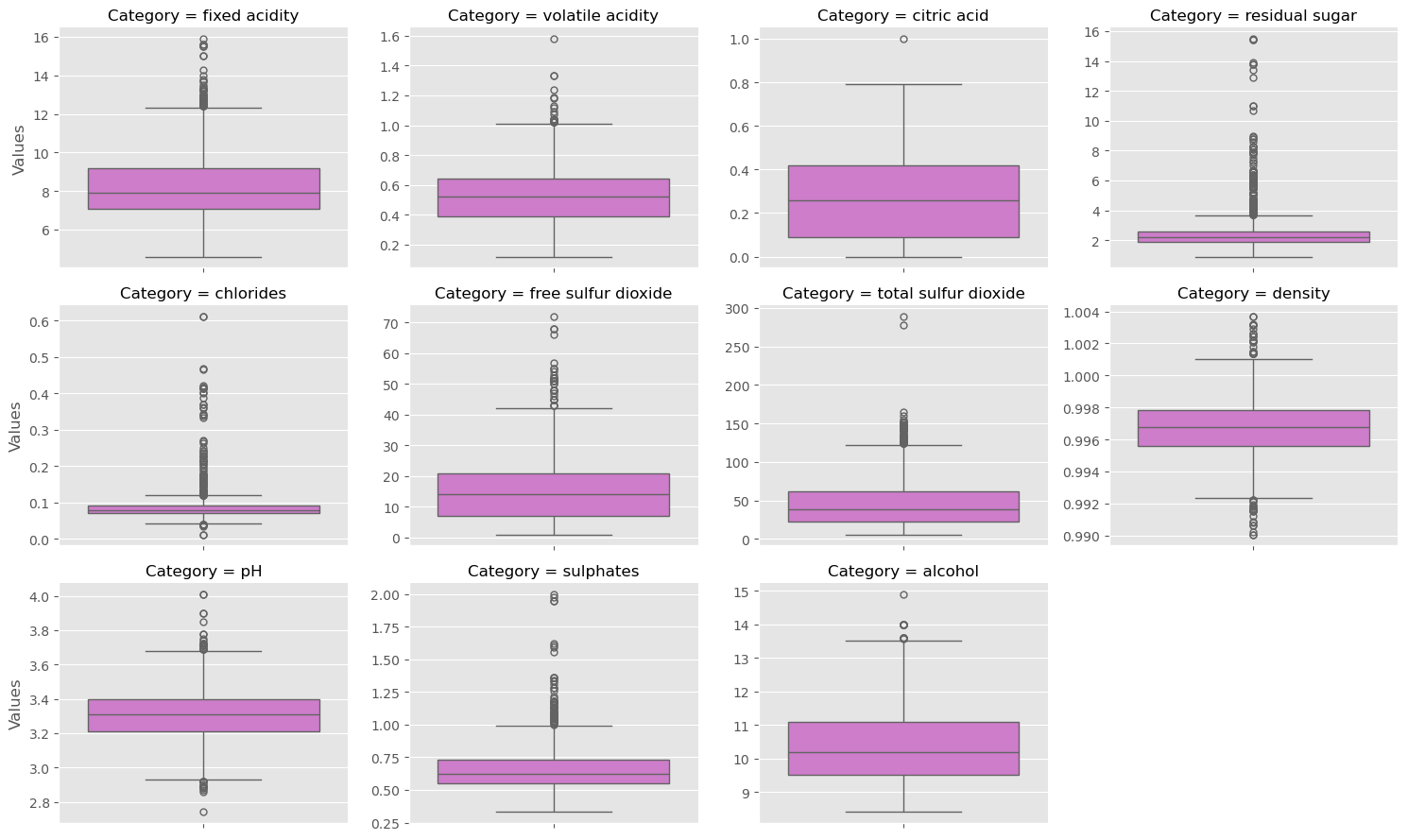

Exploratory data analysis

- Samples are in rows

- Features are in columns

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

iris = load_iris()

print(f'Type: {type(iris)}')

print(f'Keys: {iris.keys()}')

print(f'Data Type: {type(iris.data)}\nTarget Type: {type(iris.target)}')

print(f'Data Shape: {iris.data.shape}')

print(f'Target Names: {iris.target_names}')

X = iris.data

y = iris.target

df = pd.DataFrame(X, columns=iris.feature_names)

df['label'] = y

species_map = dict(zip(range(3), iris.target_names))

df['species'] = df.label.map(species_map)

df = df.reindex(['sepal length (cm)', 'sepal width (cm)', 'petal length (cm)', 'petal width (cm)', 'species', 'label'], axis=1)

display(df.head())

# pd.plotting.scatter_matrix(df, c=y, figsize=(12, 10))

ax = sns.pairplot(df.iloc[:, :5], hue='species', corner=True)

1

2

3

4

5

6

Type: <class 'sklearn.utils._bunch.Bunch'>

Keys: dict_keys(['data', 'target', 'frame', 'target_names', 'DESCR', 'feature_names', 'filename', 'data_module'])

Data Type: <class 'numpy.ndarray'>

Target Type: <class 'numpy.ndarray'>

Data Shape: (150, 4)

Target Names: ['setosa' 'versicolor' 'virginica']

| sepal length (cm) | sepal width (cm) | petal length (cm) | petal width (cm) | species | label | |

|---|---|---|---|---|---|---|

| 0 | 5.1 | 3.5 | 1.4 | 0.2 | setosa | 0 |

| 1 | 4.9 | 3.0 | 1.4 | 0.2 | setosa | 0 |

| 2 | 4.7 | 3.2 | 1.3 | 0.2 | setosa | 0 |

| 3 | 4.6 | 3.1 | 1.5 | 0.2 | setosa | 0 |

| 4 | 5.0 | 3.6 | 1.4 | 0.2 | setosa | 0 |

Numerical EDA

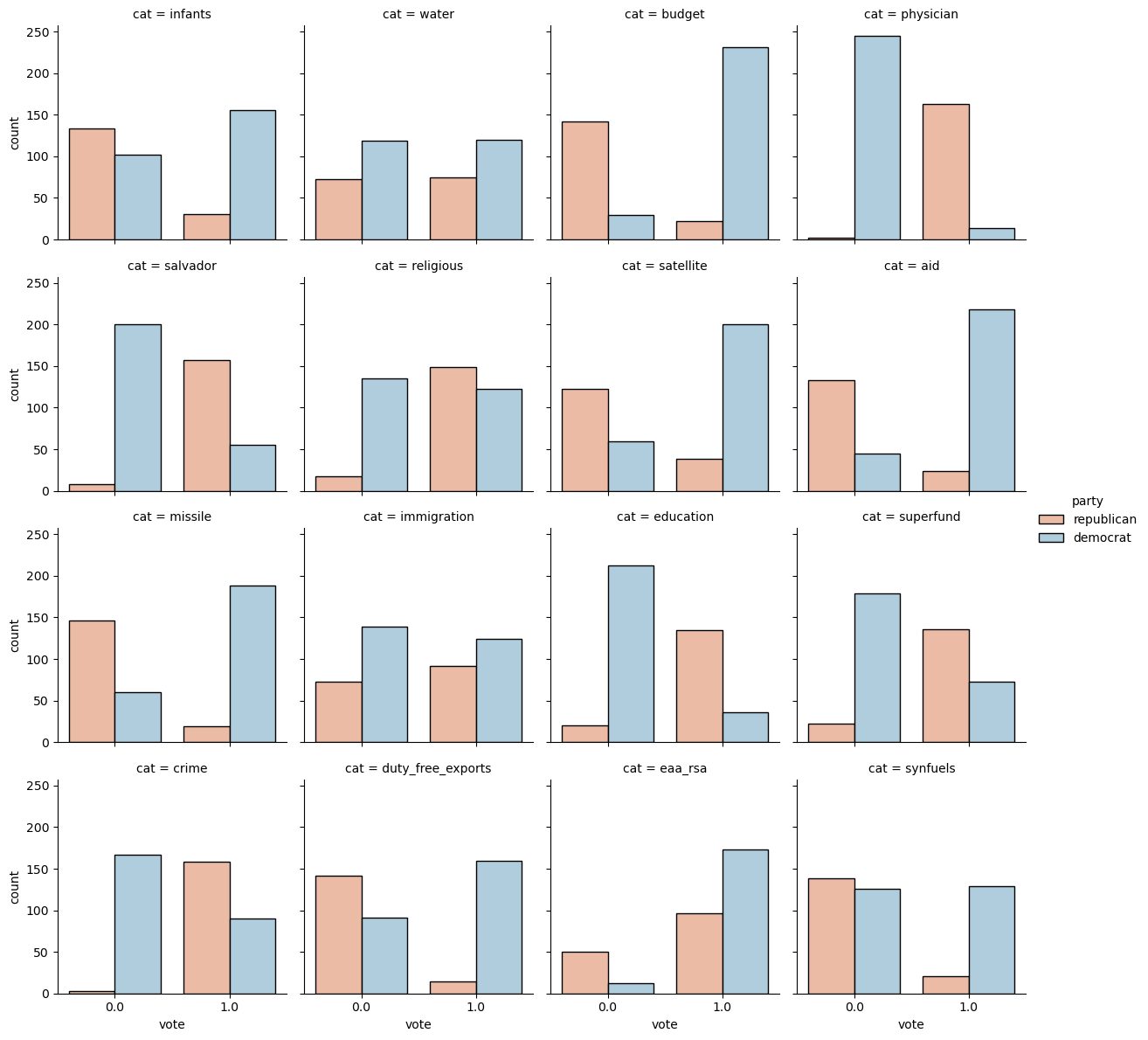

In this chapter, you’ll be working with a dataset obtained from the UCI Machine Learning Repository consisting of votes made by US House of Representatives Congressmen. Your goal will be to predict their party affiliation (‘Democrat’ or ‘Republican’) based on how they voted on certain key issues. Here, it’s worth noting that we have preprocessed this dataset to deal with missing values. This is so that your focus can be directed towards understanding how to train and evaluate supervised learning models. Once you have mastered these fundamentals, you will be introduced to preprocessing techniques in Chapter 4 and have the chance to apply them there yourself - including on this very same dataset!

Before thinking about what supervised learning models you can apply to this, however, you need to perform Exploratory data analysis (EDA) in order to understand the structure of the data. For a refresher on the importance of EDA, check out the first two chapters of Statistical Thinking in Python (Part 1).

Get started with your EDA now by exploring this voting records dataset numerically. It has been pre-loaded for you into a DataFrame called df. Use pandas’ .head(), .info(), and .describe() methods in the IPython Shell to explore the DataFrame, and select the statement below that is not true.

1

2

3

4

5

cols = ['party', 'infants', 'water', 'budget', 'physician', 'salvador', 'religious', 'satellite', 'aid',

'missile', 'immigration', 'synfuels', 'education', 'superfund', 'crime', 'duty_free_exports', 'eaa_rsa']

votes = pd.read_csv(data_paths[4], header=None, names=cols)

votes.iloc[:, 1:] = votes.iloc[:, 1:].replace({'?': None, 'n': 0, 'y': 1})

votes.head()

| party | infants | water | budget | physician | salvador | religious | satellite | aid | missile | immigration | synfuels | education | superfund | crime | duty_free_exports | eaa_rsa | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | republican | 0.0 | 1.0 | 0.0 | 1.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 1.0 | NaN | 1.0 | 1.0 | 1.0 | 0.0 | 1.0 |

| 1 | republican | 0.0 | 1.0 | 0.0 | 1.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 1.0 | 1.0 | 0.0 | NaN |

| 2 | democrat | NaN | 1.0 | 1.0 | NaN | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 1.0 | 1.0 | 0.0 | 0.0 |

| 3 | democrat | 0.0 | 1.0 | 1.0 | 0.0 | NaN | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 1.0 | 0.0 | 0.0 | 1.0 |

| 4 | democrat | 1.0 | 1.0 | 1.0 | 0.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | NaN | 1.0 | 1.0 | 1.0 | 1.0 |

Possible Answers

- The DataFrame has a total of

435rows and17columns. - Except for

'party', all of the columns are of typeint64. - The first two rows of the DataFrame consist of votes made by Republicans and the next three rows consist of votes made by Democrats.

There are 17 predictor variables, or features, in this DataFrame.- The number of columns in the DataFrame is not equal to the number of features. One of the columns -

'party'is the target variable.

- The number of columns in the DataFrame is not equal to the number of features. One of the columns -

- The target variable in this DataFrame is

'party'.

Visual EDA

The Numerical EDA you did in the previous exercise gave you some very important information, such as the names and data types of the columns, and the dimensions of the DataFrame. Following this with some visual EDA will give you an even better understanding of the data. In the video, Hugo used the scatter_matrix() function on the Iris data for this purpose. However, you may have noticed in the previous exercise that all the features in this dataset are binary; that is, they are either 0 or 1. So a different type of plot would be more useful here, such as seaborn.countplot.

Given on the right is a countplot of the 'education' bill, generated from the following code:

1

2

3

4

plt.figure()

sns.countplot(x='education', hue='party', data=df, palette='RdBu')

plt.xticks([0,1], ['No', 'Yes'])

plt.show()

In sns.countplot(), we specify the x-axis data to be 'education', and hue to be 'party'. Recall that 'party' is also our target variable. So the resulting plot shows the difference in voting behavior between the two parties for the 'education' bill, with each party colored differently. We manually specified the color to be 'RdBu', as the Republican party has been traditionally associated with red, and the Democratic party with blue.

It seems like Democrats voted resoundingly against this bill, compared to Republicans. This is the kind of information that our machine learning model will seek to learn when we try to predict party affiliation solely based on voting behavior. An expert in U.S politics may be able to predict this without machine learning, but probably not instantaneously - and certainly not if we are dealing with hundreds of samples!

In the IPython Shell, explore the voting behavior further by generating countplots for the 'satellite' and 'missile' bills, and answer the following question: Of these two bills, for which ones do Democrats vote resoundingly in favor of, compared to Republicans? Be sure to begin your plotting statements for each figure with plt.figure() so that a new figure will be set up. Otherwise, your plots will be overlayed onto the same figure.

1

2

3

4

# in order to use catplot, the dataframe needs to be in a tidy format

vl = votes.set_index('party').stack().reset_index().rename(columns={'level_1': 'cat', 0: 'vote'})

g = sns.catplot(data=vl, x='vote', col='cat', col_wrap=4, hue='party', kind='count', height=3, palette='RdBu')

Possible Answers

'satellite'.'missile'.- Both

'satellite'and'missile'. Neither'satellite'nor'missile'.

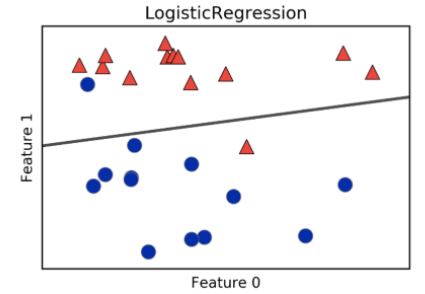

The classification challenge

- We have a set of labeled data and we want to build a classifier that takes unlabeled data as input and output a label.

- How do we construct this classifier?

- We first need to choose a type of classifier, and it needs to learn from the already labeled data.

- For this reason, we call the already labeled data, the training data.

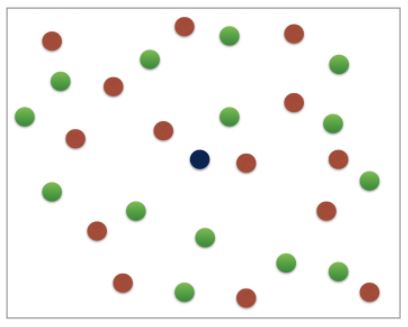

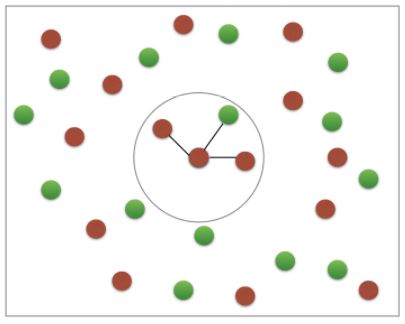

k-Nearest Neighbors (KNN)

- We’ll choose a simple algorithm call K-nearest neighbors.

- the basic idea of KNN, is to predict the label of any data point by looking at the K, for example, 3, closest labeled data points, and getting them to vote on what label the unlabeled point should have.

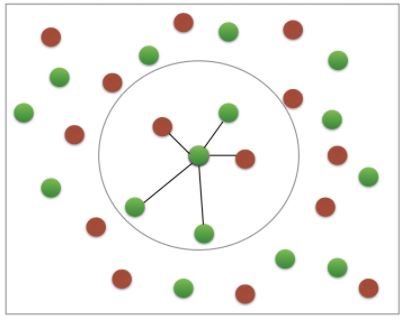

- In this image, there’s an example of KNN in two dimensions: how do you classify the data point in the middle?

- If

k=3, you would classify it as red

- If

- If

k=5, you would classify it as green

- If

KNN: Intuition

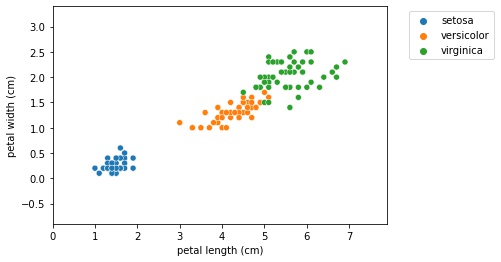

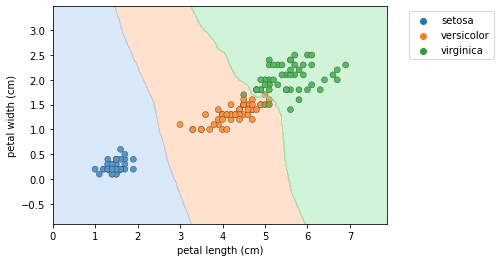

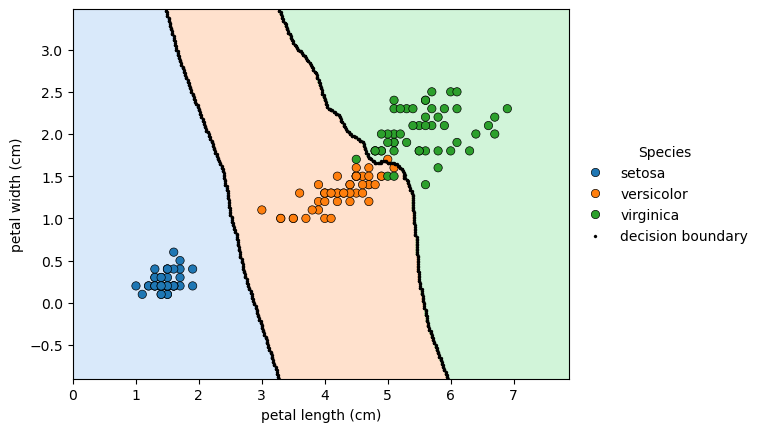

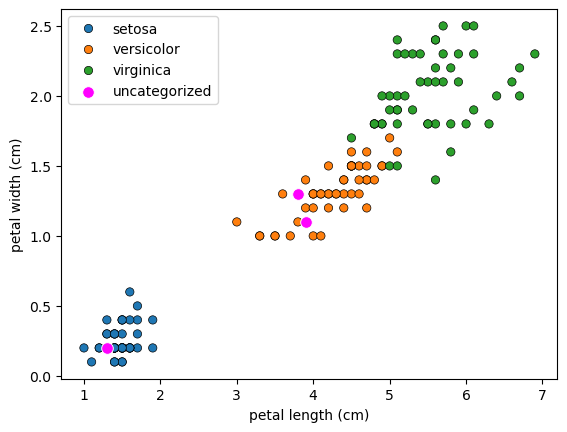

- To get a bit of intuition for KNN, let’s check out a scatter plot of two dimensions of the iris dataset, petal length and petal width.

- The following holds for higher dimensions, however, we’ll show thae 2D case for illustrative purposes.

- What the KNN algorithm essentially does, is create a set of decision boundaries and we visualized the 2D case here.

- Any new data point will have a species prediction based on the boundary.

scikit-learn fit and predict

- All machine learning models in

scikit-learnare implemented as python classes - These classes serve two purposes:

- They implement the algorithms for learning a model, and predicting

- Storing all the information that is learned from the data.

- Training a model on the data is also called fitting the model to the data.

- In

scikit-learnwe use the.fit()method to do this. - The

.predict()is used to predict the label of an unlabeled data point.

- In

Code to create boundary plot in the previous block

- See How to extract only the boundary values from k-nearest neighbors predict to see a method to extract the boundary values.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

# instantiate model

knn = KNeighborsClassifier(n_neighbors=6)

# predict for 'petal length (cm)' and 'petal width (cm)'

knn.fit(df.iloc[:, 2:4], df.label)

h = .02 # step size in the mesh

# create colormap for the contour plot

cmap_light = ListedColormap(list(sns.color_palette('pastel', n_colors=3)))

# Plot the decision boundary.

# For that, we will assign a color to each point in the mesh [x_min, x_max]x[y_min, y_max].

x_min, x_max = df['petal length (cm)'].min() - 1, df['petal length (cm)'].max() + 1

y_min, y_max = df['petal width (cm)'].min() - 1, df['petal width (cm)'].max() + 1

xx, yy = np.meshgrid(np.arange(x_min, x_max, h), np.arange(y_min, y_max, h))

Z = knn.predict(np.c_[xx.ravel(), yy.ravel()]).reshape(xx.shape)

# create plot

fig, ax = plt.subplots()

# add decision boundary countour map

ax.contourf(xx, yy, Z, cmap=cmap_light, alpha=0.4)

# add data points

sns.scatterplot(data=df, x='petal length (cm)', y='petal width (cm)', hue='species', ax=ax, edgecolor='k')

# use diff to create a mask

mask = np.diff(Z, axis=1) != 0

mask2 = np.diff(Z, axis=0) != 0

# apply mask against xx and yy

xd = np.concatenate((xx[:, 1:][mask], xx[1:, :][mask2]))

yd = np.concatenate((yy[:, 1:][mask], yy[1:, :][mask2]))

# plot the decision boundary

sns.scatterplot(x=xd, y=yd, color='k', edgecolor='k', s=5, ax=ax, label='decision boundary')

# legend

_ = ax.legend(title='Species', bbox_to_anchor=(1, 0.5), loc='center left', frameon=False)

Using scikit-learn to fit a classifier

from sklearn.neighbors import KNeighborsClassifier- The API requires data as a

pandas.DataFrameor as anumpy.array - The API features must take on continuous values, such as the price of a house, as opposed to categories, such as

'male'or'female'. - There should be no missing values in the data.

- All dataset we’ll work with, satisfy these properties.

- Dealing with categorical features and missing data will be discussed later in the course.

- The API requires that the features are in an array, where each column is a feature, and each row, a different observation or data point.

- There must be a label for each observation.

- Check out what’s returned when the classifier is fit

- It returns the classifier itself, and modifies it, to fit it to the data.

- Now that the classifier is fit, use it to predict on some unlabeled data.

1

2

3

4

5

6

# new data

X_new = np.array([[5.6, 2.8, 3.9, 1.1], [5.7, 2.6, 3.8, 1.3], [4.7, 3.2, 1.3, 0.2]])

fig, ax = plt.subplots()

sns.scatterplot(data=df, x='petal length (cm)', y='petal width (cm)', hue='species', ax=ax, edgecolor='k')

_ = sns.scatterplot(x=X_new[:, 2], y=X_new[:, 3], ax=ax, color='magenta', label='uncategorized', s=70)

1

2

3

4

5

6

7

8

9

10

# instantiate the model, and set the number of neighbors

knn = KNeighborsClassifier(n_neighbors=6)

# fit the model to the training set, the labeled data

knn.fit(df.iloc[:, :4], df.label)

# predit the label of the new data

pred = knn.predict(X_new)

spcies_pred = list(map(species_map.get, pred))

print(f'Predicted Label: {pred}\nSpecies: {spcies_pred}')

1

2

Predicted Label: [1 1 0]

Species: ['versicolor', 'versicolor', 'setosa']

k-Nearest Neighbors: Fit

Having explored the Congressional voting records dataset, it is time now to build your first classifier. In this exercise, you will fit a k-Nearest Neighbors classifier to the voting dataset, which has once again been pre-loaded for you into a DataFrame df.

In the video, Hugo discussed the importance of ensuring your data adheres to the format required by the scikit-learn API. The features need to be in an array where each column is a feature and each row a different observation or data point - in this case, a Congressman’s voting record. The target needs to be a single column with the same number of observations as the feature data. We have done this for you in this exercise. Notice we named the feature array X and response variable y: This is in accordance with the common scikit-learn practice.

Your job is to create an instance of a k-NN classifier with 6 neighbors (by specifying the n_neighbors parameter) and then fit it to the data. The data has been pre-loaded into a DataFrame called df.

Instructions

- Import

KNeighborsClassifierfromsklearn.neighbors. - Create arrays

Xandyfor the features and the target variable. Here this has been done for you. Note the use of.drop()to drop the target variable'party'from the feature arrayXas well as the use of the.valuesattribute to ensureXandyare NumPy arrays. Without using.values,Xandyare a DataFrame and Series respectively; the scikit-learn API will accept them in this form also as long as they are of the right shape. - Instantiate a

KNeighborsClassifiercalledknnwith6neighbors by specifying then_neighborsparameter. - Fit the classifier to the data using the

.fit()method.

1

2

v_na = votes.dropna().reset_index(drop=True)

v_na.head()

| party | infants | water | budget | physician | salvador | religious | satellite | aid | missile | immigration | synfuels | education | superfund | crime | duty_free_exports | eaa_rsa | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | democrat | 0.0 | 1.0 | 1.0 | 0.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 1.0 | 1.0 | 1.0 |

| 1 | republican | 0.0 | 1.0 | 0.0 | 1.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 1.0 | 1.0 | 0.0 | 1.0 |

| 2 | democrat | 1.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 1.0 | 1.0 | 1.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 1.0 | 1.0 |

| 3 | democrat | 1.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 1.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 1.0 |

| 4 | democrat | 1.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 1.0 | 1.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 1.0 |

1

2

3

4

5

# Create a k-NN classifier with 6 neighbors

knn = KNeighborsClassifier(n_neighbors=6)

# Fit the classifier to the data

knn.fit(v_na.iloc[:, 1:], v_na.party)

KNeighborsClassifier(n_neighbors=6)In a Jupyter environment, please rerun this cell to show the HTML representation or trust the notebook.

On GitHub, the HTML representation is unable to render, please try loading this page with nbviewer.org.

KNeighborsClassifier(n_neighbors=6)

Now that your k-NN classifier with 6 neighbors has been fit to the data, it can be used to predict the labels of new data points.

k-Nearest Neighbors: Predict

Having fit a k-NN classifier, you can now use it to predict the label of a new data point. However, there is no unlabeled data available since all of it was used to fit the model! You can still use the .predict() method on the X that was used to fit the model, but it is not a good indicator of the model’s ability to generalize to new, unseen data.

In the next video, Hugo will discuss a solution to this problem. For now, a random unlabeled data point has been generated and is available to you as X_new. You will use your classifier to predict the label for this new data point, as well as on the training data X that the model has already seen. Using .predict() on X_new will generate 1 prediction, while using it on X will generate 435 predictions: 1 for each sample.

The DataFrame has been pre-loaded as df. This time, you will create the feature array X and target variable array y yourself.

Instructions

- Create arrays for the features and the target variable from

df. As a reminder, the target variable is'party'. - Instantiate a

KNeighborsClassifierwith6neighbors. - Fit the classifier to the data.

- Predict the labels of the training data,

X. - Predict the label of the new data point

X_new.

1

2

X_new = np.array([[0.69646919, 0.28613933, 0.22685145, 0.55131477, 0.71946897, 0.42310646, 0.9807642 , 0.68482974,

0.4809319 , 0.39211752, 0.34317802, 0.72904971, 0.43857224, 0.0596779 , 0.39804426, 0.73799541]])

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

# Create arrays for the features and the response variable

y = v_na.party

X = v_na.iloc[:, 1:]

# Create a k-NN classifier with 6 neighbors: knn

knn = KNeighborsClassifier(n_neighbors=6)

# Fit the classifier to the data

knn.fit(X, y)

# Predict the labels for the training data X

y_pred = knn.predict(X)

# Predict and print the label for the new data point X_new

new_prediction = knn.predict(X_new)

print(f'Prediction: {new_prediction}')

1

Prediction: ['democrat']

Did your model predict ‘democrat’ or ‘republican’? How sure can you be of its predictions? In other words, how can you measure its performance? This is what you will learn in the next video.

Measuring model performance

- Now that we know how to fit a classifier and use it to predict the labels of previously unseen data, we need to figure out how to measure its performance. We need a metric.

- In classification problems, accuracy is a commonly-used metric.

- The accuracy of a classifier is defined as the number of correct predictions divided by the total number of data points.

- This begs the question though: which data do we use to compute accuracy?

- What we’re really interested in is how well out model will perform on new data; samples that the algorithm has never seen before.

- You could compute the accuracy on the data you used to fit the classifier.

- However, as this data was used to train it, the classifier’s performance will not be indicative of how well it can generalize to unseen data.

- For this reason, it is common practice to split the data into two sets, a training and test set.

- The classifier is trained or fit on the training set.

- Then predictions are made on the labeled test set, and compared with the known labels.

- The accuracy of the predictions is then computed.

Train Test Split

sklearn.model_selection.train_test_splitrandom_statesets a seed for the random number generator that splits the data into train and test, which allows for reproducing the exact split of the data.- returns four arrays: train data, test data, training labels and test labels.

- the default split is %75/%25, which is a good rule of thumb, and is specified by

test_size. - it is also best practice to perform the split so that the split reflects the labels on the data.

- That is, you want the labels to be distributed in train and test sets as they are in the original dataset, as is achieved by setting

stratify=y, whereyis the array or dataframe of labels.

- That is, you want the labels to be distributed in train and test sets as they are in the original dataset, as is achieved by setting

- See below that the accuracy of the model is approximately %96, which is pretty good for an out-of-the-box model.

Model complexity and over / underfitting

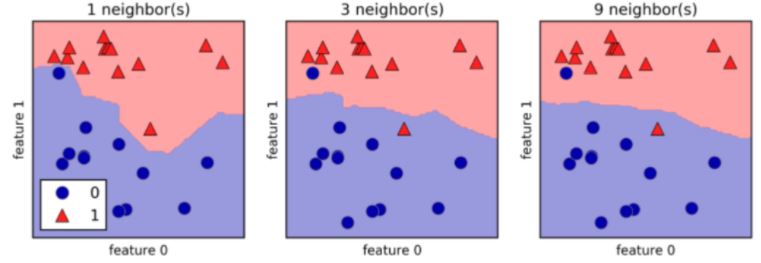

- Recall that we recently discussed the concept of a decision boundary.

- We visualized a decision boundary for several, increasing values of

Kin a KNN model. - As

Kincreases, the decision boundary get smoother and less curvy. - Therefore, we consider it to be a less complex model than those with a lower

K. - Generally, complex models run the risk of being sensitive to noise in the specific data that you have, rather than reflecting general trends in the data.

- This is known as overfitting.

- If you increase

Keven more, and make the model even simpler, then the model will perform less well on both test and training sets, as indicated in the following schematic figure, known as a model complexity curve. - We can see there is a sweet spot in the middle that gives us the best performance on the test set

1

df.head()

| sepal length (cm) | sepal width (cm) | petal length (cm) | petal width (cm) | species | label | |

|---|---|---|---|---|---|---|

| 0 | 5.1 | 3.5 | 1.4 | 0.2 | setosa | 0 |

| 1 | 4.9 | 3.0 | 1.4 | 0.2 | setosa | 0 |

| 2 | 4.7 | 3.2 | 1.3 | 0.2 | setosa | 0 |

| 3 | 4.6 | 3.1 | 1.5 | 0.2 | setosa | 0 |

| 4 | 5.0 | 3.6 | 1.4 | 0.2 | setosa | 0 |

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

# from sklearn.model_selection import train_test_split

# split the data

X_train, X_test, y_train, y_test = train_test_split(df.iloc[:, :4], df.species, test_size=0.3, random_state=21, stratify=df.species)

# instantiate the classifier

knn = KNeighborsClassifier(n_neighbors=8)

# fit it to the training data

knn.fit(X_train, y_train)

# make predictions on the test data

y_pred = knn.predict(X_test)

# check the accuracy using the score method of the model

score = knn.score(X_test, y_test)

# print the predictions and score

print(f'Test set score: {score:0.3f}\nTest set predictions:\n{y_pred}')

1

2

3

4

5

6

7

8

9

10

Test set score: 0.956

Test set predictions:

['virginica' 'versicolor' 'virginica' 'virginica' 'versicolor' 'setosa'

'versicolor' 'setosa' 'setosa' 'versicolor' 'setosa' 'virginica' 'setosa'

'virginica' 'virginica' 'setosa' 'setosa' 'setosa' 'versicolor' 'setosa'

'virginica' 'virginica' 'virginica' 'setosa' 'versicolor' 'versicolor'

'versicolor' 'setosa' 'setosa' 'versicolor' 'virginica' 'virginica'

'setosa' 'setosa' 'versicolor' 'virginica' 'virginica' 'versicolor'

'versicolor' 'virginica' 'versicolor' 'versicolor' 'setosa' 'virginica'

'versicolor']

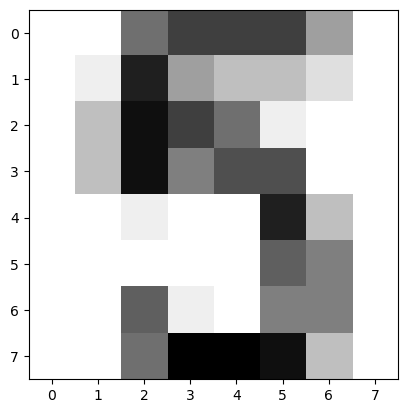

The digits recognition dataset

Up until now, you have been performing binary classification, since the target variable had two possible outcomes. Hugo, however, got to perform multi-class classification in the videos, where the target variable could take on three possible outcomes. Why does he get to have all the fun?! In the following exercises, you’ll be working with the MNIST digits recognition dataset, which has 10 classes, the digits 0 through 9! A reduced version of the MNIST dataset is one of scikit-learn’s included datasets, and that is the one we will use in this exercise.

Each sample in this scikit-learn dataset is an 8x8 image representing a handwritten digit. Each pixel is represented by an integer in the range 0 to 16, indicating varying levels of black. Recall that scikit-learn’s built-in datasets are of type Bunch, which are dictionary-like objects. Helpfully for the MNIST dataset, scikit-learn provides an 'images' key in addition to the 'data' and 'target' keys that you have seen with the Iris data. Because it is a 2D array of the images corresponding to each sample, this 'images' key is useful for visualizing the images, as you’ll see in this exercise (for more on plotting 2D arrays, see Chapter 2 of DataCamp’s course on Data Visualization with Python). On the other hand, the 'data' key contains the feature array - that is, the images as a flattened array of 64 pixels.

Notice that you can access the keys of these Bunch objects in two different ways: By using the . notation, as in digits.images, or the [] notation, as in digits['images'].

For more on the MNIST data, check out this exercise in Part 1 of DataCamp’s Importing Data in Python course. There, the full version of the MNIST dataset is used, in which the images are 28x28. It is a famous dataset in machine learning and computer vision, and frequently used as a benchmark to evaluate the performance of a new model.

Instructions

- Import

datasetsfromsklearnandmatplotlib.pyplotasplt. - Load the digits dataset using the

.load_digits()method ondatasets. - Print the keys and

DESCRof digits. - Print the shape of

imagesanddatakeys using the.notation. - Display the 1011th image using

plt.imshow(). This has been done for you, so hit ‘Submit Answer’ to see which handwritten digit this happens to be!

1

2

3

4

5

6

7

8

9

10

11

12

13

14

# Load the digits dataset: digits

digits = load_digits()

# Print the keys and DESCR of the dataset

print(digits.keys())

print(digits.DESCR)

# Print the shape of the images and data keys

print(digits.images.shape)

print(digits.data.shape)

# Display digit 1010

plt.imshow(digits.images[1010], cmap=plt.cm.gray_r, interpolation='nearest')

plt.show()

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

dict_keys(['data', 'target', 'frame', 'feature_names', 'target_names', 'images', 'DESCR'])

.. _digits_dataset:

Optical recognition of handwritten digits dataset

--------------------------------------------------

**Data Set Characteristics:**

:Number of Instances: 1797

:Number of Attributes: 64

:Attribute Information: 8x8 image of integer pixels in the range 0..16.

:Missing Attribute Values: None

:Creator: E. Alpaydin (alpaydin '@' boun.edu.tr)

:Date: July; 1998

This is a copy of the test set of the UCI ML hand-written digits datasets

https://archive.ics.uci.edu/ml/datasets/Optical+Recognition+of+Handwritten+Digits

The data set contains images of hand-written digits: 10 classes where

each class refers to a digit.

Preprocessing programs made available by NIST were used to extract

normalized bitmaps of handwritten digits from a preprinted form. From a

total of 43 people, 30 contributed to the training set and different 13

to the test set. 32x32 bitmaps are divided into nonoverlapping blocks of

4x4 and the number of on pixels are counted in each block. This generates

an input matrix of 8x8 where each element is an integer in the range

0..16. This reduces dimensionality and gives invariance to small

distortions.

For info on NIST preprocessing routines, see M. D. Garris, J. L. Blue, G.

T. Candela, D. L. Dimmick, J. Geist, P. J. Grother, S. A. Janet, and C.

L. Wilson, NIST Form-Based Handprint Recognition System, NISTIR 5469,

1994.

|details-start|

**References**

|details-split|

- C. Kaynak (1995) Methods of Combining Multiple Classifiers and Their

Applications to Handwritten Digit Recognition, MSc Thesis, Institute of

Graduate Studies in Science and Engineering, Bogazici University.

- E. Alpaydin, C. Kaynak (1998) Cascading Classifiers, Kybernetika.

- Ken Tang and Ponnuthurai N. Suganthan and Xi Yao and A. Kai Qin.

Linear dimensionalityreduction using relevance weighted LDA. School of

Electrical and Electronic Engineering Nanyang Technological University.

2005.

- Claudio Gentile. A New Approximate Maximal Margin Classification

Algorithm. NIPS. 2000.

|details-end|

(1797, 8, 8)

(1797, 64)

It looks like the image in question corresponds to the digit ‘5’. Now, can you build a classifier that can make this prediction not only for this image, but for all the other ones in the dataset? You’ll do so in the next exercise!

Train/Test Split + Fit/Predict/Accuracy

Now that you have learned about the importance of splitting your data into training and test sets, it’s time to practice doing this on the digits dataset! After creating arrays for the features and target variable, you will split them into training and test sets, fit a k-NN classifier to the training data, and then compute its accuracy using the .score() method.

Instructions

- Import

KNeighborsClassifierfromsklearn.neighborsandtrain_test_splitfromsklearn.model_selection. - Create an array for the features using

digits.dataand an array for the target usingdigits.target. - Create stratified training and test sets using

0.2for the size of the test set. Use a random state of42. Stratify the split according to the labels so that they are distributed in the training and test sets as they are in the original dataset. - Create a k-NN classifier with

7neighbors and fit it to the training data. - Compute and print the accuracy of the classifier’s predictions using the

.score()method.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

# Create feature and target arrays

X = digits.data

y = digits.target

# Split into training and test set

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42, stratify=y)

# Create a k-NN classifier with 7 neighbors: knn

knn = KNeighborsClassifier(n_neighbors=7)

# Fit the classifier to the training data

knn.fit(X_train, y_train)

# predict

pred = knn.predict(X_test)

result = list(zip(pred, y_test))

not_correct = [v for v in result if v[0] != v[1]]

num_correct = len(result) - len(not_correct)

# Print the accuracy

score = knn.score(X_test, y_test)

print(f'Incorrect Result: {not_correct}\nNumber Correct: {num_correct}\nScore: {score:0.2f}')

1

2

3

Incorrect Result: [(8, 6), (1, 8), (7, 8), (4, 9), (8, 9), (1, 8)]

Number Correct: 354

Score: 0.98

Incredibly, this out of the box k-NN classifier with 7 neighbors has learned from the training data and predicted the labels of the images in the test set with 98% accuracy, and it did so in less than a second! This is one illustration of how incredibly useful machine learning techniques can be.

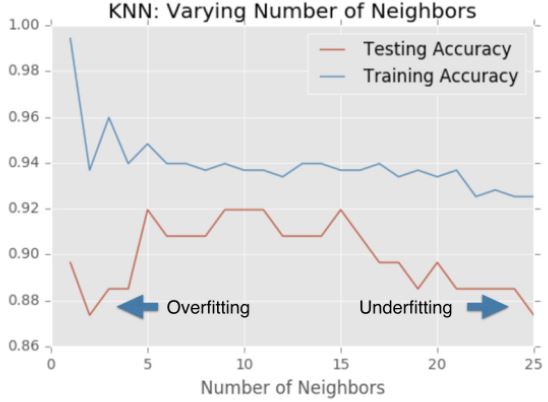

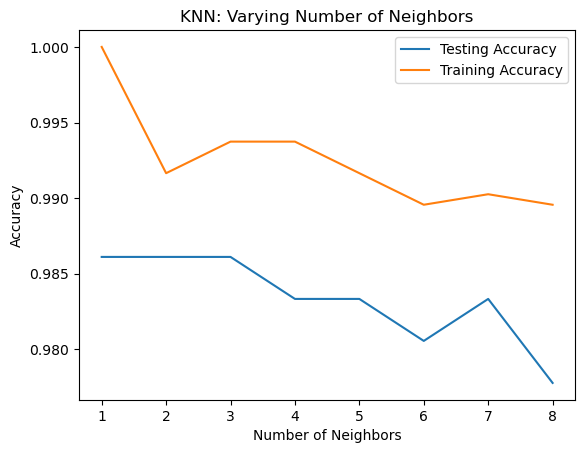

Overfitting and underfitting

Remember the model complexity curve that Hugo showed in the video? You will now construct such a curve for the digits dataset! In this exercise, you will compute and plot the training and testing accuracy scores for a variety of different neighbor values. By observing how the accuracy scores differ for the training and testing sets with different values of k, you will develop your intuition for overfitting and underfitting.

The training and testing sets are available to you in the workspace as X_train, X_test, y_train, y_test. In addition, KNeighborsClassifier has been imported from sklearn.neighbors.

Instructions

- Inside the for loop:

- Setup a k-NN classifier with the number of neighbors equal to

k. - Fit the classifier with

kneighbors to the training data. - Compute accuracy scores the training set and test set separately using the

.score()method and assign the results to thetrain_accuracyandtest_accuracyarrays respectively.

- Setup a k-NN classifier with the number of neighbors equal to

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

# Setup arrays to store train and test accuracies

neighbors = np.arange(1, 9)

train_accuracy = np.empty(len(neighbors))

test_accuracy = np.empty(len(neighbors))

# Loop over different values of k

for i, k in enumerate(neighbors):

# Setup a k-NN Classifier with k neighbors: knn

knn = knn = KNeighborsClassifier(n_neighbors=k)

# Fit the classifier to the training data

knn.fit(X_train, y_train)

#Compute accuracy on the training set

train_accuracy[i] = knn.score(X_train, y_train)

#Compute accuracy on the testing set

test_accuracy[i] = knn.score(X_test, y_test)

# Generate plot

plt.plot(neighbors, test_accuracy, label = 'Testing Accuracy')

plt.plot(neighbors, train_accuracy, label = 'Training Accuracy')

plt.legend()

plt.title('KNN: Varying Number of Neighbors')

plt.xlabel('Number of Neighbors')

_ = plt.ylabel('Accuracy')

It looks like the test accuracy is highest when using 3 and 5 neighbors. Using 8 neighbors or more seems to result in a simple model that underfits the data. Now that you’ve grasped the fundamentals of classification, you will learn about regression in the next chapter!

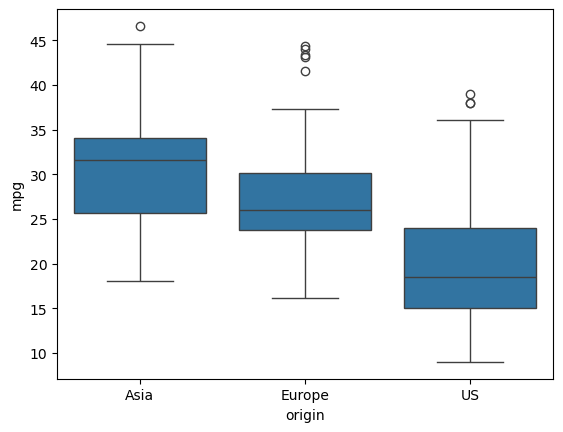

Regression

In the previous chapter, you used image and political datasets to predict binary and multiclass outcomes. But what if your problem requires a continuous outcome? Regression is best suited to solving such problems. You will learn about fundamental concepts in regression and apply them to predict the life expectancy in a given country using Gapminder data.

Introduction to regression

- In regression tasks, the target value is a continuously varying variable, such as a country’s GDP or the price of a house.

- The first regression task will be using the Boston housing dataset.

- The data can be loaded from a CSV or scikit-learn’s built-in datasets.

'CRIM'is per capita crime rate'NX'is nitric oxides concentration'RM'is average number of rooms per dwelling- The target variable,

'MEDV', is the median value of owner occupied homes in thousands of dollars

Creating feature and target arrays

- Recall that scikit-learn wants

featuresandtargetvalues in distinct arrays,Xandy. - Using the

.valuesattribute returns theNumPyarrays.pandasdocumentation recommends using.to_numpy

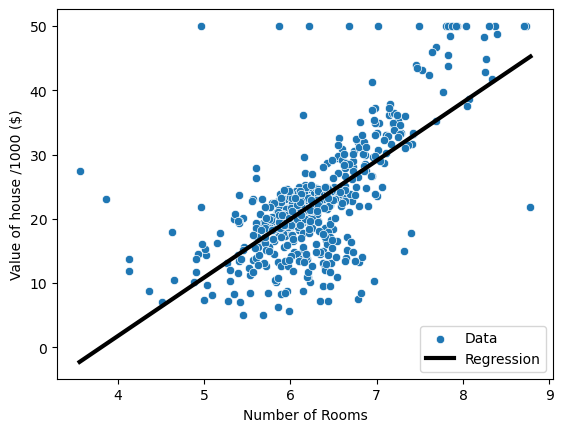

Predicting house value from a single feature

- As a first task, let’s try to predict the price from a single feature: the average number of rooms

- The 5th column is the average number of rooms,

'RM' - To reshape the arrays, use the

.reshapemethod to keep the first dimension, but add another dimension of size one toX.

Fitting a regression model

- Instantiate

sklearn.linear_model.LinearRegression - Fit the model by passing in the data and target

Check out the regressors predictions over the range of the data with

np.linspacebetween themaxandminvalue ofX_rooms.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

boston = pd.read_csv(data_paths[1])

display(boston.head())

# creating features and target arrays

X = boston.drop('MEDV', axis=1).to_numpy()

y = boston.MEDV.to_numpy()

# predict from a single feature

X_rooms = X[:, 5]

# check variable type

print(f'X_rooms type: {type(X_rooms)}, shape: {X_rooms.shape}\ny type: {type(y)}, shape: {y.shape}')

# reshape

X_rooms = X_rooms.reshape(-1, 1)

y = y.reshape(-1, 1)

print(f'X_rooms shape: {X_rooms.shape}\ny shape: {y.shape}')

# instantiate model

reg = LinearRegression()

# fit a linear model

reg.fit(X_rooms, y)

# data range variable

pred_space = np.linspace(min(X_rooms), max(X_rooms)).reshape(-1, 1)

# plot house value as a function of rooms

sns.scatterplot(data=boston, x='RM', y='MEDV', label='Data')

plt.plot(pred_space, reg.predict(pred_space), color='k', lw=3, label='Regression')

plt.legend(loc='lower right')

plt.xlabel('Number of Rooms')

plt.ylabel('Value of house /1000 ($)')

plt.show()

| CRIM | ZN | INDUS | CHAS | NX | RM | AGE | DIS | RAD | TAX | PTRATIO | B | LSTAT | MEDV | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 0.00632 | 18.0 | 2.31 | 0 | 0.538 | 6.575 | 65.2 | 4.0900 | 1 | 296.0 | 15.3 | 396.90 | 4.98 | 24.0 |

| 1 | 0.02731 | 0.0 | 7.07 | 0 | 0.469 | 6.421 | 78.9 | 4.9671 | 2 | 242.0 | 17.8 | 396.90 | 9.14 | 21.6 |

| 2 | 0.02729 | 0.0 | 7.07 | 0 | 0.469 | 7.185 | 61.1 | 4.9671 | 2 | 242.0 | 17.8 | 392.83 | 4.03 | 34.7 |

| 3 | 0.03237 | 0.0 | 2.18 | 0 | 0.458 | 6.998 | 45.8 | 6.0622 | 3 | 222.0 | 18.7 | 394.63 | 2.94 | 33.4 |

| 4 | 0.06905 | 0.0 | 2.18 | 0 | 0.458 | 7.147 | 54.2 | 6.0622 | 3 | 222.0 | 18.7 | 396.90 | 5.33 | 36.2 |

1

2

3

4

X_rooms type: <class 'numpy.ndarray'>, shape: (506,)

y type: <class 'numpy.ndarray'>, shape: (506,)

X_rooms shape: (506, 1)

y shape: (506, 1)

Which of the following is a regression problem?

Andy introduced regression to you using the Boston housing dataset. But regression models can be used in a variety of contexts to solve a variety of different problems.

Given below are four example applications of machine learning. Your job is to pick the one that is best framed as a regression problem.

Answer the question

An e-commerce company using labeled customer data to predict whether or not a customer will purchase a particular item.A healthcare company using data about cancer tumors (such as their geometric measurements) to predict whether a new tumor is benign or malignant.A restaurant using review data to ascribe positive or negative sentiment to a given review.- A bike share company using time and weather data to predict the number of bikes being rented at any given hour.

- The target variable here - the number of bike rentals at any given hour - is quantitative, so this is best framed as a regression problem.

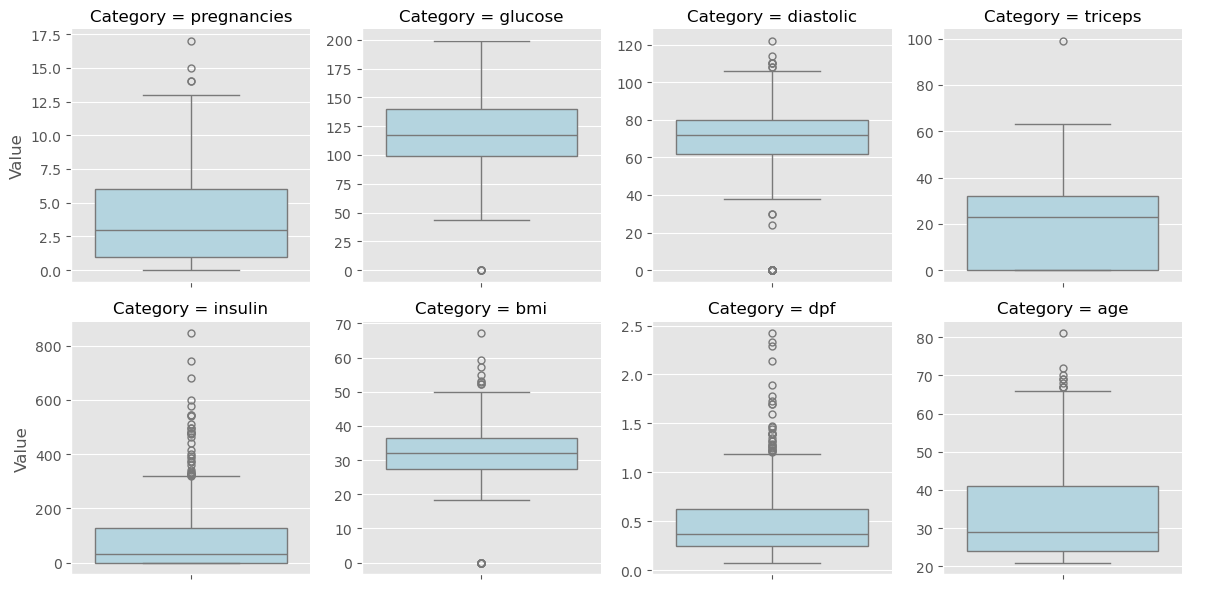

Importing data for supervised learning

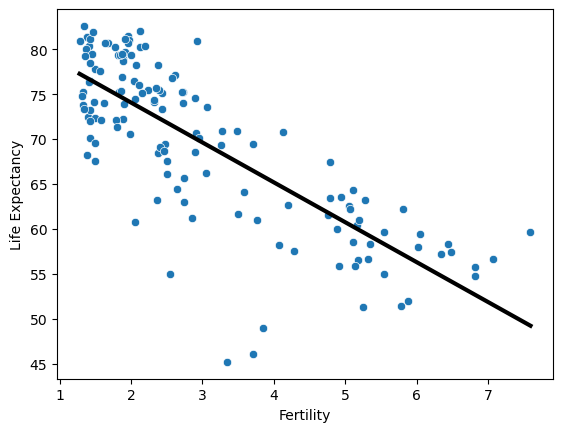

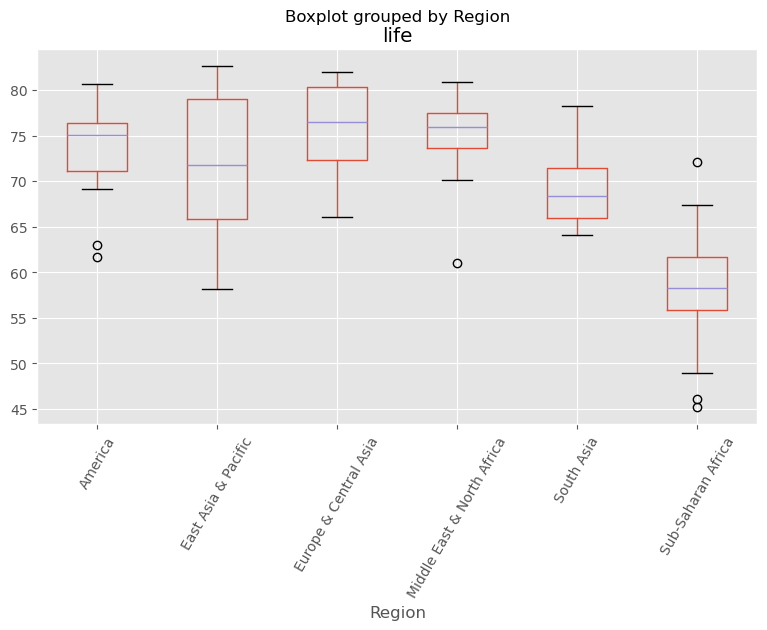

In this chapter, you will work with Gapminder data that we have consolidated into one CSV file available in the workspace as 'gapminder.csv'. Specifically, your goal will be to use this data to predict the life expectancy in a given country based on features such as the country’s GDP, fertility rate, and population. As in Chapter 1, the dataset has been preprocessed.

Since the target variable here is quantitative, this is a regression problem. To begin, you will fit a linear regression with just one feature: 'fertility', which is the average number of children a woman in a given country gives birth to. In later exercises, you will use all the features to build regression models.

Before that, however, you need to import the data and get it into the form needed by scikit-learn. This involves creating feature and target variable arrays. Furthermore, since you are going to use only one feature to begin with, you need to do some reshaping using NumPy’s .reshape() method. Don’t worry too much about this reshaping right now, but it is something you will have to do occasionally when working with scikit-learn so it is useful to practice.

Instructions

- Import

numpyandpandasas their standard aliases. - Read the file

'gapminder.csv'into a DataFramedfusing theread_csv()function. - Create array

Xfor the'fertility'feature and arrayyfor the'life'target variable. Reshape the arrays by using the

.reshape()method and passing in-1and1.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

# Read the CSV file into a DataFrame: df

df = pd.read_csv(data_paths[3])

# Create arrays for features and target variable

y = df.life.values

X = df.fertility.values

# Print the dimensions of X and y before reshaping

print("Dimensions of y before reshaping: {}".format(y.shape))

print("Dimensions of X before reshaping: {}".format(X.shape))

# Reshape X and y

y = y.reshape(-1, 1)

X = X.reshape(-1, 1)

# Print the dimensions of X and y after reshaping

print("Dimensions of y after reshaping: {}".format(y.shape))

print("Dimensions of X after reshaping: {}".format(X.shape))

1

2

3

4

Dimensions of y before reshaping: (139,)

Dimensions of X before reshaping: (139,)

Dimensions of y after reshaping: (139, 1)

Dimensions of X after reshaping: (139, 1)

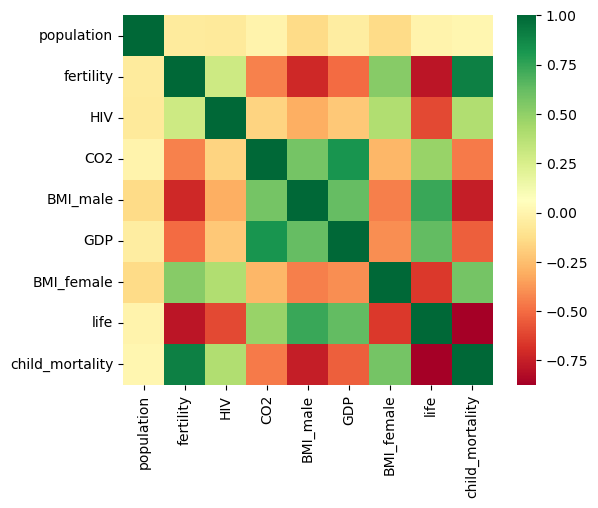

Exploring the Gapminder data

As always, it is important to explore your data before building models. On the right, we have constructed a heatmap showing the correlation between the different features of the Gapminder dataset, which has been pre-loaded into a DataFrame as df and is available for exploration in the IPython Shell. Cells that are in green show positive correlation, while cells that are in red show negative correlation. Take a moment to explore this: Which features are positively correlated with life, and which ones are negatively correlated? Does this match your intuition?

Then, in the IPython Shell, explore the DataFrame using pandas methods such as .info(), .describe(), .head().

In case you are curious, the heatmap was generated using Seaborn’s heatmap function and the following line of code, where df.corr() computes the pairwise correlation between columns:

sns.heatmap(df.corr(), square=True, cmap='RdYlGn')

Once you have a feel for the data, consider the statements below and select the one that is not true. After this, Hugo will explain the mechanics of linear regression in the next video and you will be on your way building regression models!

Instructions

- The DataFrame has

139samples (or rows) and9columns. lifeandfertilityare negatively correlated.- The mean of

lifeis69.602878. fertilityis of typeint64.GDPandlifeare positively correlated.

1

ax = sns.heatmap(df.select_dtypes(include=['number']).corr(), square=True, cmap='RdYlGn')

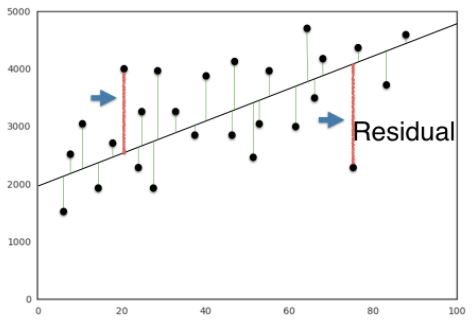

The basics of linear regression

- How does linear regression work?

Regression mechanics

- We want to ft a line to the data and a line in two dimensions is always of the form $y=a*x+b$, where $y$ is the target, $x$ is the single feature, and $a$ and $b$ are the parameters of the model that we want to learn.

- The question of fitting is reduced to: how do we choose $a$ and $b$?

- A common method is to define an error function for any given line, and then choose the line that minimizes the error function.

- Such an error function is also called a loss or a cost function.

The loss function

- What will our loss function be?

- We want the line to be as close to the actual data points as possible.

- For this reason, we wish to minimize the vertical distance between the fit, and the data.

- For each data point, calculate the vertical distance between it and the line.

- This distance is called a residual.

- We could try to minimize the sum of the residuals, but then a large positive residual would cancel out a large negative residual.

- For this reason, we minimize the sum of the squares of the residuals.

- This will be the loss function, and using this loss function is commonly called ordinary least squares (OLS).

- Note this is the same as minimizing the mean squared error of the predictions on the training set.

- See the statistic curriculum for more detail.

- When

.fitis called on a linear regression model in scikit-learn, it performs this OLS, under the hood.

Linear regression in higher dimensions

- When we have two features and one target, a line is in the form $y=a_{1}x_{1}+a_{2}x_{2}+b$, so to fit a linear regression model, is to specify three variables, $a_{1}$, $a_{2}$, and $b$.

- In higher dimensions, with more than one or two features, a line is of this form, $y=a_{1}x_{1}+a_{2}x_{2}+a_{3}x_{3}+…+a_{n}x_{n}+b$, so fitting a linear regression model is to specify a coefficient, $a_{i}$, for each features, as well as the variable $b$.

- The scikit-learn API works exactly the same in this case: pass two arrays to the

.fitmethod, one containing the features, the other is the target variable.

Linear regression on all Boston Housing features

- The default scoring method for linear regression is called $R^2$.

- This metric quantifies the amount of variance in the target variable that is predicted from the feature variables.

- See the scikit-learn documentation, and the DataCamp statistics curriculum for more details.

- To compute $R^2$, apply the .score method to the model, and pass it two arguments, the features and target data.

- This metric quantifies the amount of variance in the target variable that is predicted from the feature variables.

- Generally, linear regression will never be used out of the box, like this; you will mostly likely wish to use regularization, which we’ll see soon, and which places further constraints on the model coefficients.

Learning about linear regression and how to use it in scikit-learn, is an essential first step toward using regularized linear models.

1

boston.head()

| CRIM | ZN | INDUS | CHAS | NX | RM | AGE | DIS | RAD | TAX | PTRATIO | B | LSTAT | MEDV | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 0.00632 | 18.0 | 2.31 | 0 | 0.538 | 6.575 | 65.2 | 4.0900 | 1 | 296.0 | 15.3 | 396.90 | 4.98 | 24.0 |

| 1 | 0.02731 | 0.0 | 7.07 | 0 | 0.469 | 6.421 | 78.9 | 4.9671 | 2 | 242.0 | 17.8 | 396.90 | 9.14 | 21.6 |

| 2 | 0.02729 | 0.0 | 7.07 | 0 | 0.469 | 7.185 | 61.1 | 4.9671 | 2 | 242.0 | 17.8 | 392.83 | 4.03 | 34.7 |

| 3 | 0.03237 | 0.0 | 2.18 | 0 | 0.458 | 6.998 | 45.8 | 6.0622 | 3 | 222.0 | 18.7 | 394.63 | 2.94 | 33.4 |

| 4 | 0.06905 | 0.0 | 2.18 | 0 | 0.458 | 7.147 | 54.2 | 6.0622 | 3 | 222.0 | 18.7 | 396.90 | 5.33 | 36.2 |

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

# split the data

X_train, X_test, y_train, y_test = train_test_split(boston.drop('MEDV', axis=1), boston.MEDV, test_size=0.3, random_state=42)

# instantiate the regressor

reg_all = LinearRegression()

# fit on the training set

reg_all.fit(X_train, y_train)

# predict on the test set

y_pred = reg_all.predict(X_test)

# score the model

score = reg_all.score(X_test, y_test)

print(f'Model Score: {score:0.3f}')

1

Model Score: 0.711

Fit & predict for regression

Now, you will fit a linear regression and predict life expectancy using just one feature. You saw Andy do this earlier using the 'RM' feature of the Boston housing dataset. In this exercise, you will use the 'fertility' feature of the Gapminder dataset. Since the goal is to predict life expectancy, the target variable here is 'life'. The array for the target variable has been pre-loaded as y and the array for 'fertility' has been pre-loaded as X_fertility.

A scatter plot with 'fertility' on the x-axis and 'life' on the y-axis has been generated. As you can see, there is a strongly negative correlation, so a linear regression should be able to capture this trend. Your job is to fit a linear regression and then predict the life expectancy, overlaying these predicted values on the plot to generate a regression line. You will also compute and print the $R^2$ score using sckit-learn’s .score() method.

Instructions

- Import

LinearRegressionfromsklearn.linear_model. - Create a

LinearRegressionregressor calledreg. - Set up the prediction space to range from the minimum to the maximum of

X_fertility. This has been done for you. - Fit the regressor to the data (

X_fertilityandy) and compute its predictions using the.predict()method and theprediction_spacearray. - Compute and print the $R^2$ score using the

.score()method. - Overlay the plot with your linear regression line. This has been done for you, so hit ‘Submit Answer’ to see the result!

1

df.head()

| population | fertility | HIV | CO2 | BMI_male | GDP | BMI_female | life | child_mortality | Region | |

|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 34811059.0 | 2.73 | 0.1 | 3.328945 | 24.59620 | 12314.0 | 129.9049 | 75.3 | 29.5 | Middle East & North Africa |

| 1 | 19842251.0 | 6.43 | 2.0 | 1.474353 | 22.25083 | 7103.0 | 130.1247 | 58.3 | 192.0 | Sub-Saharan Africa |

| 2 | 40381860.0 | 2.24 | 0.5 | 4.785170 | 27.50170 | 14646.0 | 118.8915 | 75.5 | 15.4 | America |

| 3 | 2975029.0 | 1.40 | 0.1 | 1.804106 | 25.35542 | 7383.0 | 132.8108 | 72.5 | 20.0 | Europe & Central Asia |

| 4 | 21370348.0 | 1.96 | 0.1 | 18.016313 | 27.56373 | 41312.0 | 117.3755 | 81.5 | 5.2 | East Asia & Pacific |

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

X_fertility = df.fertility.to_numpy().reshape(-1, 1)

y = df.life.to_numpy().reshape(-1, 1)

# Create the regressor: reg

reg = LinearRegression()

# Create the prediction space

prediction_space = np.linspace(df.fertility.max(), df.fertility.min()).reshape(-1,1)

# Fit the model to the data

reg.fit(X_fertility, y)

# Compute predictions over the prediction space: y_pred

y_pred = reg.predict(prediction_space)

# Print R^2

score = reg.score(X_fertility, y)

print(f'Score: {score}')

# Plot regression line

sns.scatterplot(data=df, x='fertility', y='life')

plt.xlabel('Fertility')

plt.ylabel('Life Expectancy')

plt.plot(prediction_space, y_pred, color='black', linewidth=3)

plt.show()

1

Score: 0.6192442167740035

Notice how the line captures the underlying trend in the data. And the performance is quite decent for this basic regression model with only one feature.

Train/test split for regression

As you learned in Chapter 1, train and test sets are vital to ensure that your supervised learning model is able to generalize well to new data. This was true for classification models, and is equally true for linear regression models.

In this exercise, you will split the Gapminder dataset into training and testing sets, and then fit and predict a linear regression over all features. In addition to computing the $R^2$ score, you will also compute the Root Mean Squared Error (RMSE), which is another commonly used metric to evaluate regression models. The feature array X and target variable array y have been pre-loaded for you from the DataFrame df.

Instructions

- Import

LinearRegressionfromsklearn.linear_model,mean_squared_errorfromsklearn.metrics, andtrain_test_splitfromsklearn.model_selection. - Using

Xandy, create training and test sets such that 30% is used for testing and 70% for training. Use a random state of42. - Create a linear regression regressor called

reg_all, fit it to the training set, and evaluate it on the test set. - Compute and print the $R^2$ score using the

.score()method on the test set. Compute and print the RMSE. To do this, first compute the Mean Squared Error using the

mean_squared_error()function with the argumentsy_testandy_pred, and then take its square root usingnp.sqrt().

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

X = df.drop(['life', 'Region'], axis=1).to_numpy()

y = df.life.to_numpy()

# Create training and test sets

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size = 0.3, random_state=42)

# Create the regressor: reg_all

reg_all = LinearRegression()

# Fit the regressor to the training data

reg_all.fit(X_train, y_train)

# Predict on the test data: y_pred

y_pred = reg_all.predict(X_test)

# Compute and print R^2 and RMSE

print(f"R^2: {reg_all.score(X_test, y_test):0.3f}")

rmse = np.sqrt(mean_squared_error(y_test, y_pred))

print(f"Root Mean Squared Error: {rmse:0.3f}")

1

2

R^2: 0.838

Root Mean Squared Error: 3.248

Using all features has improved the model score. This makes sense, as the model has more information to learn from. However, there is one potential pitfall to this process. Can you spot it? You’ll learn about this, as well how to better validate your models, in the next section.

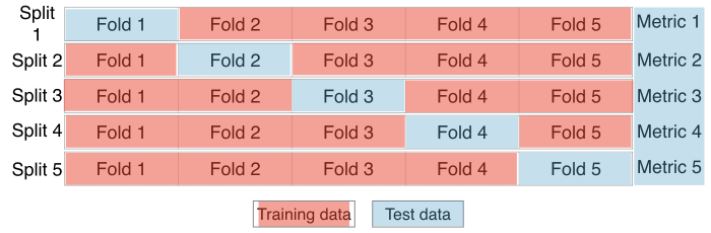

Cross-validation

- You’re now also becoming more acquainted with train test split, and computing model performance metrics on the test set.

- Can you spot a potential pitfall of this process?

- If you’re computing $R^2$ on your test set, the $R^2$ returned, is dependent on the way the data is split.

- The data points in the test set may have some peculiarities that mean the $R^2$ computed on it, is not representative of the model’s ability to generalize to unseen data.

- To combat this dependence on what is essentially an arbitrary split, we use a technique call cross-validation.

- Begin by splitting the dataset into five groups, or folds.

- Hold out the first fold as a test set, fit the model on the remaining 4 folds, predict on the test test set, and compute the metric of interest.

- Next, hold out the second fold as the test set, fit on the remaining data, predict on the test set, and compute the metric of interest.

- Then, similarly, with the third, fourth and fifth fold.

- As a result, there are five values of $R^2$ from which statistics of interest can be computed, such as mean, median, and 95% confidence interval.

- As the dataset is split into 5 folds, this process is called 5-fold cross validation.

- 10 folds would be 10-fold cross validation.

- Generally, if k folds are used, it is called k-fold cross validation or k-fold CV.

- The trade-off is, more folds are computationally more expensive, because there is more fitting and predicting.

- This method avoids the problem of the metric of choice being dependent on the train test split.

Cross-validation in scikit-learn

sklearn.model_selection.cross_val_score- This returns an array of cross-validation scores, which are assigned to

cv_results - The length of the array is the number of folds specified by the

cvparameter. - The reported score is $R^2$, the default score for linear regression

We can also compute the

mean

1

2

3

4

5

6

7

8

# instantiate the model

reg = LinearRegression()

# call cross_val_score

cv_results = cross_val_score(reg, boston.drop('MEDV', axis=1), boston.MEDV, cv=5)

print(f'Scores: {np.round(cv_results, 3)}')

print(f'Scores mean: {np.round(np.mean(cv_results), 3)}')

1

2

Scores: [ 0.639 0.714 0.587 0.079 -0.253]

Scores mean: 0.353

5-fold cross-validation

Cross-validation is a vital step in evaluating a model. It maximizes the amount of data that is used to train the model, as during the course of training, the model is not only trained, but also tested on all of the available data.

In this exercise, you will practice 5-fold cross validation on the Gapminder data. By default, scikit-learn’s cross_val_score() function uses $R^2$ as the metric of choice for regression. Since you are performing 5-fold cross-validation, the function will return 5 scores. Your job is to compute these 5 scores and then take their average.

The DataFrame has been loaded as df and split into the feature/target variable arrays X and y. The modules pandas and numpy have been imported as pd and np, respectively.

Instructions

- Import

LinearRegressionfromsklearn.linear_modelandcross_val_scorefromsklearn.model_selection. - Create a linear regression regressor called

reg. - Use the

cross_val_score()function to perform 5-fold cross-validation onXandy. - Compute and print the average cross-validation score. You can use NumPy’s

mean()function to compute the average.

1

2

3

4

5

6

7

8

9

10

# Create a linear regression object: reg

reg = LinearRegression()

# Compute 5-fold cross-validation scores: cv_scores

cv_scores = cross_val_score(reg, X, y, cv=5)

# Print the 5-fold cross-validation scores

print(f'Scores: {np.round(cv_scores, 3)}')

print(f'Scores mean: {np.round(np.mean(cv_scores), 3)}')

1

2

Scores: [0.817 0.829 0.902 0.806 0.945]

Scores mean: 0.86

Now that you have cross-validated your model, you can more confidently evaluate its predictions.

K-Fold CV comparison

Cross validation is essential but do not forget that the more folds you use, the more computationally expensive cross-validation becomes. In this exercise, you will explore this for yourself. Your job is to perform 3-fold cross-validation and then 10-fold cross-validation on the Gapminder dataset.

In the IPython Shell, you can use %timeit to see how long each 3-fold CV takes compared to 10-fold CV by executing the following cv=3 and cv=10:

%timeit cross_val_score(reg, X, y, cv = ____)

pandas and numpy are available in the workspace as pd and np. The DataFrame has been loaded as df and the feature/target variable arrays X and y have been created.

Instructions

- Import

LinearRegressionfromsklearn.linear_modelandcross_val_scorefromsklearn.model_selection. - Create a linear regression regressor called

reg. - Perform 3-fold CV and then 10-fold CV. Compare the resulting mean scores.

1

2

3

4

5

6

7

8

9

10

# Create a linear regression object: reg

reg = LinearRegression()

# Perform 3-fold CV

cvscores_3 = cross_val_score(reg, X, y, cv=3)

print(f'cv=3 scores mean: {np.round(np.mean(cvscores_3), 3)}')

# Perform 10-fold CV

cvscores_10 = cross_val_score(reg, X, y, cv=10)

print(f'cv=10 scores mean: {np.round(np.mean(cvscores_10), 3)}')

1

2

cv=3 scores mean: 0.872

cv=10 scores mean: 0.844

1

2

3

4

cv3 = %timeit -n10 -r3 -q -o cross_val_score(reg, X, y, cv=3)

cv10 = %timeit -n10 -r3 -q -o cross_val_score(reg, X, y, cv=10)

print(f'cv=3 time: {cv3}\ncv=10 time: {cv10}')

1

2

cv=3 time: 3.16 ms ± 413 µs per loop (mean ± std. dev. of 3 runs, 10 loops each)

cv=10 time: 8.98 ms ± 1.91 ms per loop (mean ± std. dev. of 3 runs, 10 loops each)

Regularized regression

Why regularize?

- Recall that what a linear regression does, is minimize a loss function, to choose a coefficient, $a_{i}$, for each feature variable.

- If we allow these coefficients, or parameters, to be super large, we can get overfitting.

- It isn’t easy to see in two dimensions, but when there are many features, this is, if the data sit in a high-dimensional space with large coefficients, it gets easy to predict nearly anything.

- For this reason, it’s common practice to alter the loss function, so it penalizes for large coefficients.

- This is called Regularization.

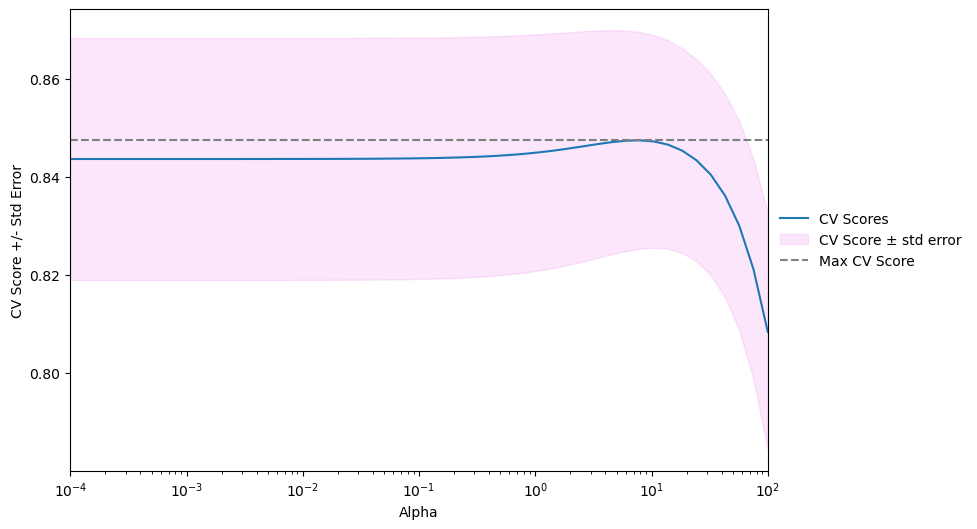

Ridge regression

- The first type of regularized regression that we’ll look at, is called ridge regression, in which out loss function is the standard OLS loss function, plus the squared value of each coefficient, multiplied by some constant, $\alpha$

- $\text{Loss function}=\text{OLS loss function}+\alpha*\sum_{i=1}^n a_{i}^2$

- Thus, when minimizing the loss function to fit to our data, models are penalized for coefficients with a large magnitude: large positive and large negative coefficients.

- Note, $\alpha$ is a parameter we need to choose in order to fit and predict.

- Essentially, we can select the $\alpha$ for which our model performs best.

- Picking $\alpha$ for ridge regression is similar to picking

kinKNN.

- This is called hyperparameter tuning, and we’ll see much more of this in section 3.

- This $\alpha$, which you may also see called $\lambda$ in the wild, can be thought of as a parameter that controls the model complexity.

- Notice when $\alpha = 0$, we get back $\text{OLS}$, which can lead to overfitting.

- Large coefficients, in this case, are not penalized, and the overfitting problem is not accounted for.

- A very high $\alpha$ means large coefficients are significantly penalized, which can lead to a model that’s too simple, and end up underfitting the data.

- The method of performing ridge regression with scikit-learn, mirrors the other models we have seen.

Ridge regression in scikit-learn

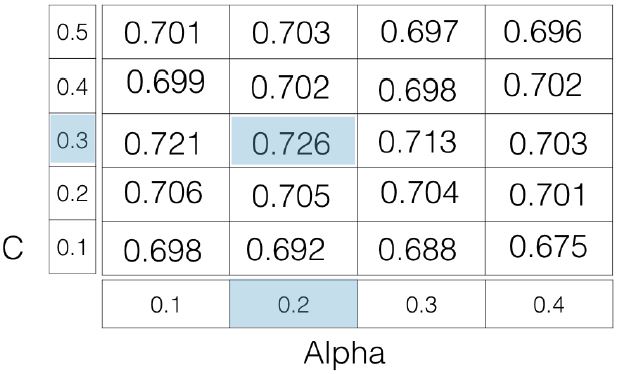

sklearn.linear_model.Ridge- Set $\alpha$ with the

alphaparameter. - Setting the

normalizeparameter toTrue, ensures all the variables are on the same scale, which will be covered later in more depth.

Lasso regression

- There is another type of regularized regression called lasso regression, in which our loss function is the standard OLS loss function, plus the absolute value of each coefficient, multiplied by some constant, $\alpha$.

$\text{Loss function}=\text{OLS loss function}+\alpha*\sum_{i=1}^n a_{i} $

Lasso regression in scikit-learn

sklearn.linear_model.Lasso- Lasso regression in scikit-learn, mirrors ridge regression.

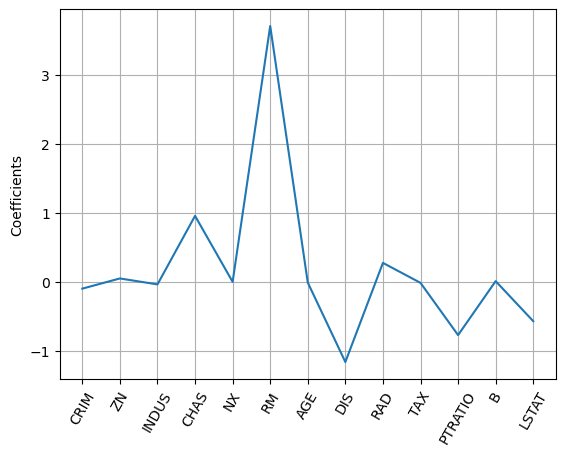

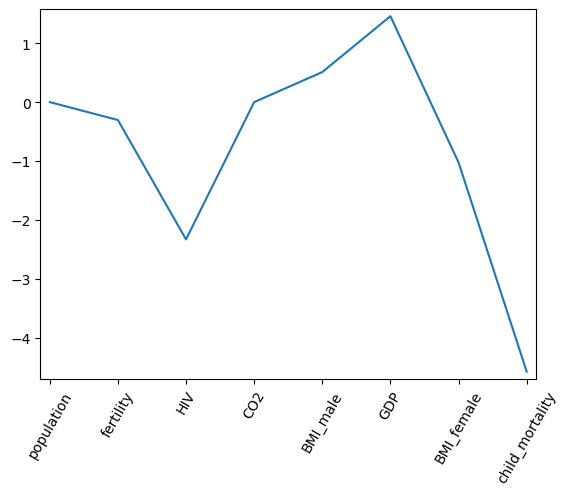

Lasso regression for feature selection

- One of the useful aspects of lasso regression is it can be used to select important features of a dataset.

- This is because it tends to reduce the coefficients of less important features to be exactly zero.

- The features whose coefficients are not shrunk to zero, are ‘selected’ by the

LASSOalgorithm.

- Plotting the coefficients as a function of feature name, yields graph below, and you can see directly, the most important predictor for our target variable,

housing price, is number of rooms,'RM'. - This is not surprising, and is a great sanity check.

- This type of feature selection is very important for machine learning in an industry or business setting, because it allows you, as the Data Scientist, to communicate important results to non-technical colleagues.

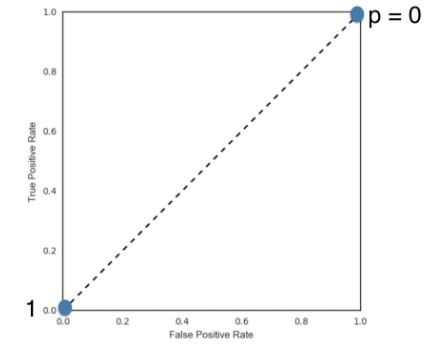

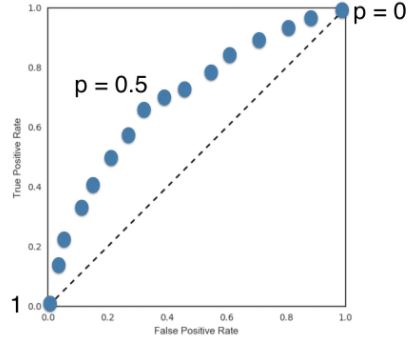

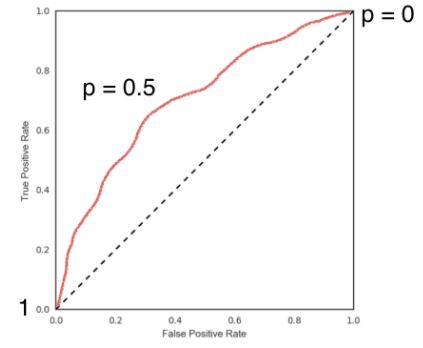

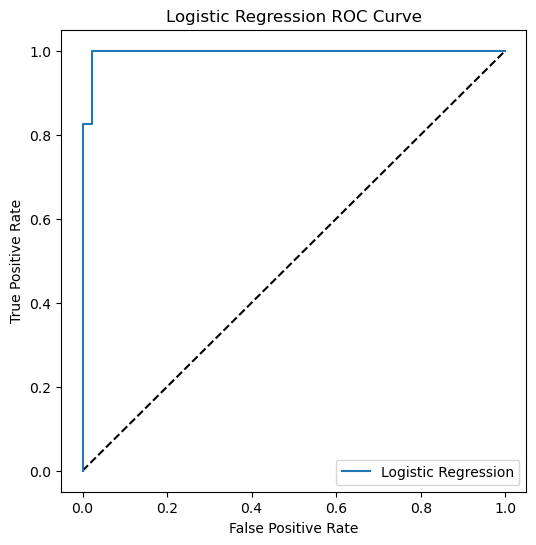

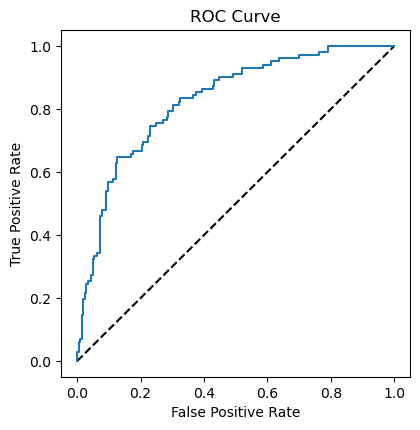

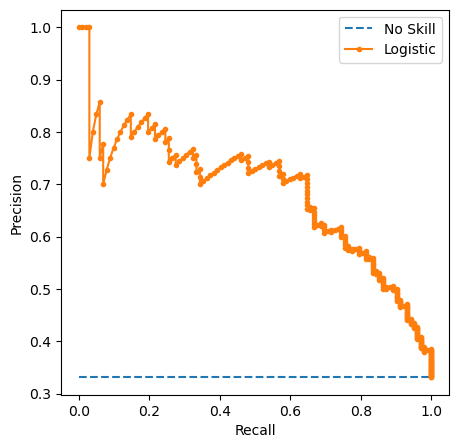

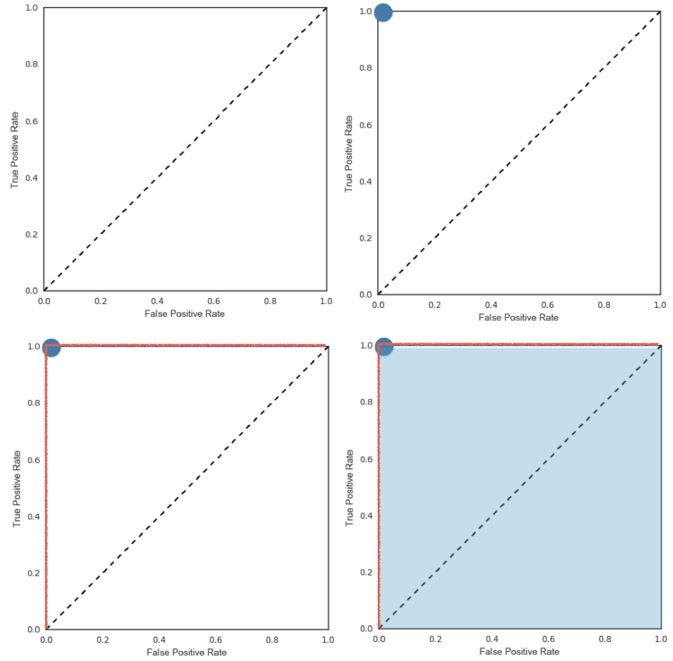

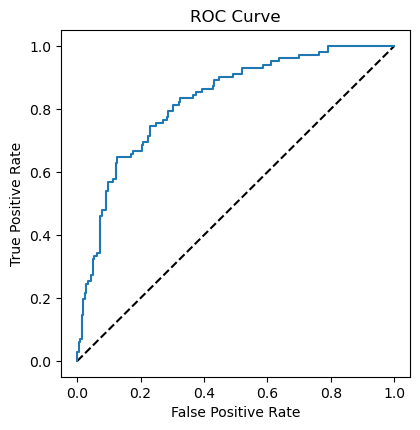

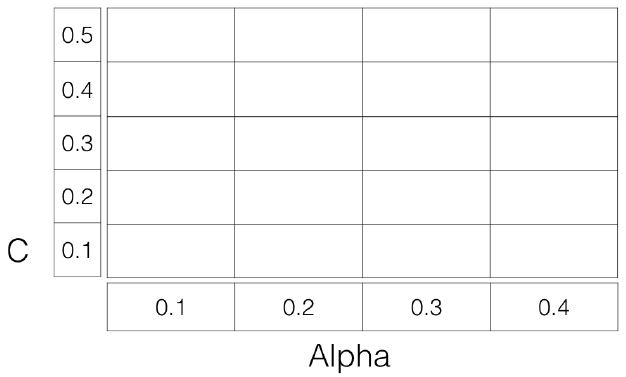

- The power of reporting important features from a linear model, cannot be overestimated.