- Course: DataCamp: Unsupervised Learning in Python

- This notebook was created as a reproducible reference.

- The material is from the course

- The course website uses

scikit-learn v0.19.2, pandas v0.19.2, and numpy v1.17.4 - If you find the content beneficial, consider a DataCamp Subscription.

- I added a function (

create_dir_save_file) to automatically download and save the required data (data/course_name) and image (Images/course_name) files. - Package Versions:

- Pandas version: 2.2.1

- Matplotlib version: 3.8.1

- Seaborn version: 0.13.2

- SciPy version: 1.12.0

- Scikit-Learn version: 1.3.2

- NumPy version: 1.26.4

Summary

The post delves into a variety of machine learning topics, specifically focusing on unsupervised learning techniques. It starts with an introduction to unsupervised learning, explaining its purpose and how it differs from supervised learning.

The post then explores specific unsupervised learning techniques such as clustering and dimension reduction. It elucidates how clustering is employed to group similar data points together, with a spotlight on K-Means clustering. It also covers hierarchical clustering and DBSCAN.

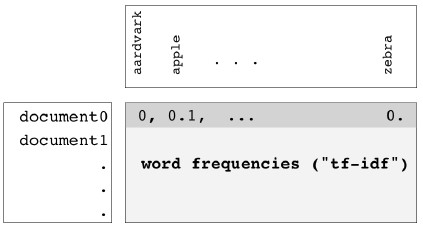

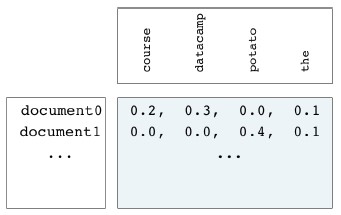

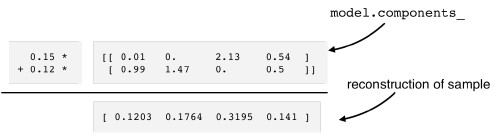

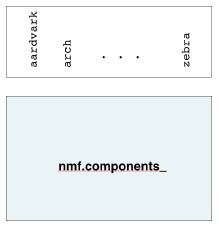

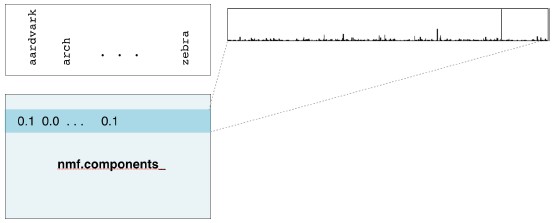

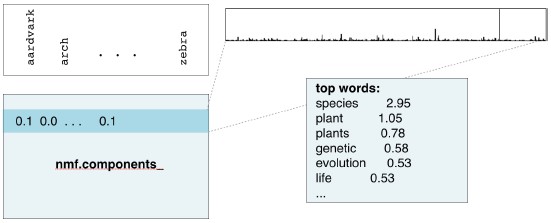

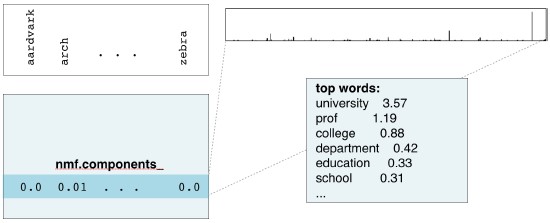

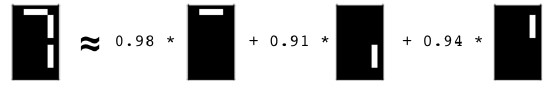

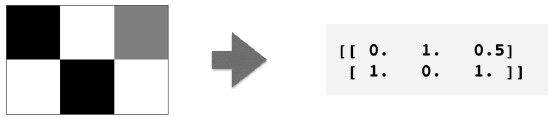

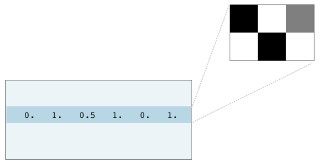

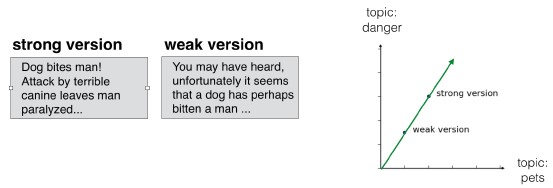

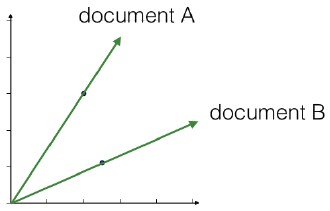

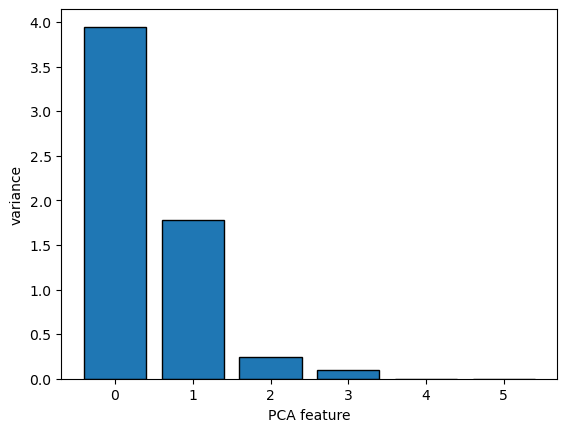

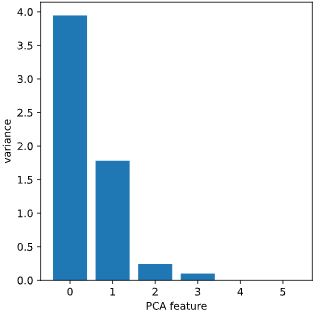

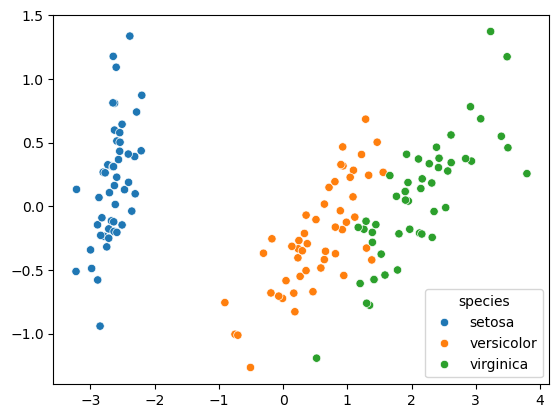

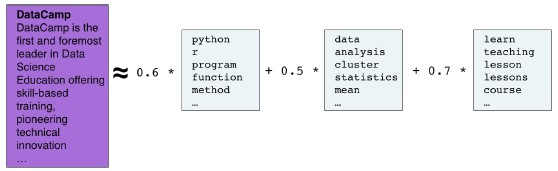

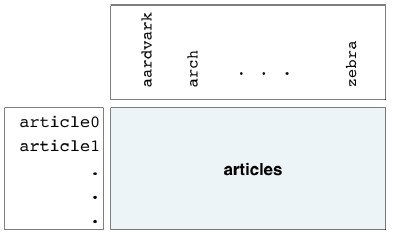

In the section on dimension reduction, the post clarifies the concept of Principal Component Analysis (PCA) and its usage in reducing the dimensionality of data while preserving its structure and relationships. It also introduces Non-negative Matrix Factorization (NMF) as a method to reduce dimensionality and find interpretable parts in the data.

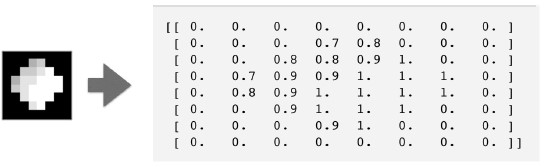

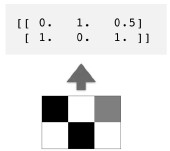

The post further discusses the application of these techniques in real-world scenarios. It demonstrates how to use PCA and NMF for image recognition and text mining, and how to construct recommender systems using NMF.

The post concludes with a brief discussion on the limitations and considerations when using unsupervised learning techniques, emphasizing that these methods should be employed as part of a larger data analysis pipeline.

Throughout the post, code snippets and examples are provided to illustrate the concepts, primarily using Python libraries such as scikit-learn and pandas. The post serves as a comprehensive guide for anyone looking to understand and apply unsupervised learning techniques in their data analysis projects.

Description

Say you have a collection of customers with a variety of characteristics such as age, location, and financial history, and you wish to discover patterns and sort them into clusters. Or perhaps you have a set of texts, such as Wikipedia pages, and you wish to segment them into categories based on their content. This is the world of unsupervised learning, called as such because you are not guiding, or supervising, the pattern discovery by some prediction task, but instead uncovering hidden structure from unlabeled data. Unsupervised learning encompasses a variety of techniques in machine learning, from clustering to dimension reduction to matrix factorization. In this course, you’ll learn the fundamentals of unsupervised learning and implement the essential algorithms using scikit-learn and scipy. You will learn how to cluster, transform, visualize, and extract insights from unlabeled datasets, and end the course by building a recommender system to recommend popular musical artists.

Imports

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

| import pandas as pd

from pprint import pprint as pp

from itertools import combinations

from zipfile import ZipFile

import matplotlib.pyplot as plt

from mpl_toolkits.mplot3d import Axes3D

import seaborn as sns

import numpy as np

from pathlib import Path

import requests

import sys

from scipy.sparse import csr_matrix

from scipy.cluster.hierarchy import linkage, dendrogram, fcluster

from scipy.stats import pearsonr

from sklearn.cluster import KMeans

from sklearn.preprocessing import StandardScaler, Normalizer, normalize, MaxAbsScaler

from sklearn.pipeline import make_pipeline

from sklearn.manifold import TSNE

from sklearn.decomposition import PCA, TruncatedSVD, NMF

from sklearn.feature_extraction.text import TfidfVectorizer

|

1

2

| import warnings

warnings.simplefilter(action="ignore", category=UserWarning)

|

Configuration Options

1

2

3

4

| pd.set_option('display.max_columns', 200)

pd.set_option('display.max_rows', 300)

pd.set_option('display.expand_frame_repr', True)

plt.rcParams["patch.force_edgecolor"] = True

|

Functions

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

| def create_dir_save_file(dir_path: Path, url: str):

"""

Check if the path exists and create it if it does not.

Check if the file exists and download it if it does not.

"""

if not dir_path.parents[0].exists():

dir_path.parents[0].mkdir(parents=True)

print(f'Directory Created: {dir_path.parents[0]}')

else:

print('Directory Exists')

if not dir_path.exists():

r = requests.get(url, allow_redirects=True)

open(dir_path, 'wb').write(r.content)

print(f'File Created: {dir_path.name}')

else:

print('File Exists')

|

1

2

| data_dir = Path('data/2021-03-29_unsupervised_learning_python')

images_dir = Path('Images/2021-03-29_unsupervised_learning_python')

|

Datasets

1

2

3

4

5

6

7

8

9

10

11

12

13

| # csv files

base = 'https://assets.datacamp.com/production/repositories/655/datasets'

file_spm = base + '/1304e66b1f9799e1a5eac046ef75cf57bb1dd630/company-stock-movements-2010-2015-incl.csv'

file_ev = base + '/2a1f3ab7bcc76eef1b8e1eb29afbd54c4ebf86f2/eurovision-2016.csv'

file_fish = base + '/fee715f8cf2e7aad9308462fea5a26b791eb96c4/fish.csv'

file_lcd = base + '/effd1557b8146ab6e620a18d50c9ed82df990dce/lcd-digits.csv'

file_wine = base + '/2b27d4c4bdd65801a3b5c09442be3cb0beb9eae0/wine.csv'

file_artists_sparse = 'https://raw.githubusercontent.com/trenton3983/DataCamp/master/data/2021-03-29_unsupervised_learning_python/artists_sparse.csv'

# zip files

file_grain = base + '/bb87f0bee2ac131042a01307f7d7e3d4a38d21ec/Grains.zip'

file_musicians = base + '/c974f2f2c4834958cbe5d239557fbaf4547dc8a3/Musical%20artists.zip'

file_wiki = base + '/8e2fbb5b8240c06602336f2148f3c42e317d1fdb/Wikipedia%20articles.zip'

|

1

2

3

4

5

6

7

8

| file_links = [file_spm, file_ev, file_fish, file_lcd, file_wine, file_grain, file_musicians, file_wiki, file_artists_sparse]

file_paths = list()

for file in file_links:

file_name = file.split('/')[-1].replace('?raw=true', '').replace('%20', '_')

data_path = data_dir / file_name

create_dir_save_file(data_path, file)

file_paths.append(data_path)

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

| Directory Exists

File Exists

Directory Exists

File Exists

Directory Exists

File Exists

Directory Exists

File Exists

Directory Exists

File Exists

Directory Exists

File Exists

Directory Exists

File Exists

Directory Exists

File Exists

Directory Exists

File Exists

|

1

2

3

4

5

| # unzip the zipped files

zip_files = [v for v in file_paths if v.suffix == '.zip']

for file in zip_files:

with ZipFile(file, 'r') as zip_:

zip_.extractall(data_dir)

|

1

2

| dp = [v for v in data_dir.rglob('*') if v.suffix in ['.csv', '.txt']]

dp

|

1

2

3

4

5

6

7

8

9

10

11

12

| [WindowsPath('data/2021-03-29_unsupervised_learning_python/artists_sparse.csv'),

WindowsPath('data/2021-03-29_unsupervised_learning_python/company-stock-movements-2010-2015-incl.csv'),

WindowsPath('data/2021-03-29_unsupervised_learning_python/eurovision-2016.csv'),

WindowsPath('data/2021-03-29_unsupervised_learning_python/fish.csv'),

WindowsPath('data/2021-03-29_unsupervised_learning_python/lcd-digits.csv'),

WindowsPath('data/2021-03-29_unsupervised_learning_python/wine.csv'),

WindowsPath('data/2021-03-29_unsupervised_learning_python/Grains/seeds-width-vs-length.csv'),

WindowsPath('data/2021-03-29_unsupervised_learning_python/Grains/seeds.csv'),

WindowsPath('data/2021-03-29_unsupervised_learning_python/Musical artists/artists.csv'),

WindowsPath('data/2021-03-29_unsupervised_learning_python/Musical artists/scrobbler-small-sample.csv'),

WindowsPath('data/2021-03-29_unsupervised_learning_python/Wikipedia articles/wikipedia-vectors.csv'),

WindowsPath('data/2021-03-29_unsupervised_learning_python/Wikipedia articles/wikipedia-vocabulary-utf8.txt')]

|

DataFrames

stk: Company Stock Movements 2010 - 2015

1

2

| stk = pd.read_csv(dp[1], index_col=[0])

stk.iloc[:2, :5]

|

| 2010-01-04 | 2010-01-05 | 2010-01-06 | 2010-01-07 | 2010-01-08 |

|---|

| Apple | 0.580000 | -0.220005 | -3.409998 | -1.17 | 1.680011 |

|---|

| AIG | -0.640002 | -0.650000 | -0.210001 | -0.42 | 0.710001 |

|---|

euv: Eurovision 2016

1

2

| euv = pd.read_csv(dp[2])

euv.head(2)

|

| From country | To country | Jury A | Jury B | Jury C | Jury D | Jury E | Jury Rank | Televote Rank | Jury Points | Televote Points |

|---|

| 0 | Albania | Belgium | 20 | 16 | 24 | 22 | 24 | 25 | 14 | NaN | NaN |

|---|

| 1 | Albania | Czech Republic | 21 | 15 | 25 | 23 | 16 | 22 | 22 | NaN | NaN |

|---|

fsh: Fish

1

2

| fsh = pd.read_csv(dp[3], header=None)

fsh.head(2)

|

| 0 | 1 | 2 | 3 | 4 | 5 | 6 |

|---|

| 0 | Bream | 242.0 | 23.2 | 25.4 | 30.0 | 38.4 | 13.4 |

|---|

| 1 | Bream | 290.0 | 24.0 | 26.3 | 31.2 | 40.0 | 13.8 |

|---|

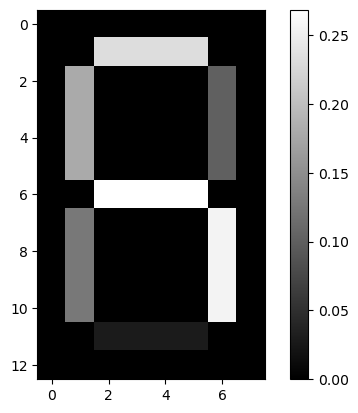

lcd: LCD Digits

1

2

| lcd = pd.read_csv(dp[4], header=None)

lcd.iloc[:2, :5]

|

| 0 | 1 | 2 | 3 | 4 |

|---|

| 0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |

|---|

| 1 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |

|---|

win: Wine

1

2

| win = pd.read_csv(dp[5])

win.head(2)

|

| class_label | class_name | alcohol | malic_acid | ash | alcalinity_of_ash | magnesium | total_phenols | flavanoids | nonflavanoid_phenols | proanthocyanins | color_intensity | hue | od280 | proline |

|---|

| 0 | 1 | Barolo | 14.23 | 1.71 | 2.43 | 15.6 | 127 | 2.80 | 3.06 | 0.28 | 2.29 | 5.64 | 1.04 | 3.92 | 1065 |

|---|

| 1 | 1 | Barolo | 13.20 | 1.78 | 2.14 | 11.2 | 100 | 2.65 | 2.76 | 0.26 | 1.28 | 4.38 | 1.05 | 3.40 | 1050 |

|---|

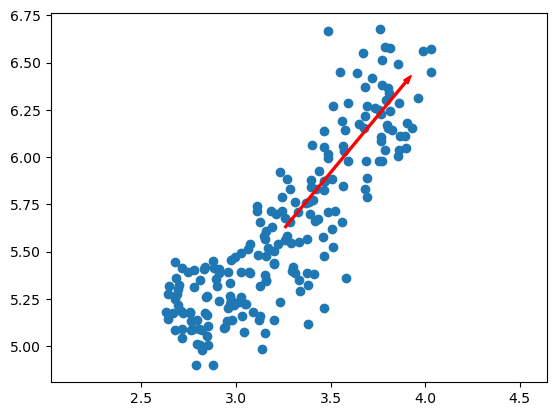

swl: Seeds Width vs. Length

1

2

3

| swl = pd.read_csv(dp[6], header=None)

swl.columns = ['width', 'length']

swl.head(2)

|

| width | length |

|---|

| 0 | 3.312 | 5.763 |

|---|

| 1 | 3.333 | 5.554 |

|---|

sed: Seeds

1

2

3

4

5

| sed = pd.read_csv(dp[7], header=None)

sed['varieties'] = sed[7].map({1: 'Kama wheat', 2: 'Rosa wheat', 3: 'Canadian wheat'})

sed.head(2)

|

| 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | varieties |

|---|

| 0 | 15.26 | 14.84 | 0.8710 | 5.763 | 3.312 | 2.221 | 5.220 | 1 | Kama wheat |

|---|

| 1 | 14.88 | 14.57 | 0.8811 | 5.554 | 3.333 | 1.018 | 4.956 | 1 | Kama wheat |

|---|

mus1: Musical Artists

1

2

| mus1 = pd.read_csv(dp[8])

mus1.head(2)

|

| Massive Attack |

|---|

| 0 | Sublime |

|---|

| 1 | Beastie Boys |

|---|

mus2: Musical Artists - Scrobbler Small Sample

1

2

| mus2 = pd.read_csv(dp[9])

mus2.head(2)

|

| user_offset | artist_offset | playcount |

|---|

| 0 | 1 | 79 | 58 |

|---|

| 1 | 1 | 84 | 80 |

|---|

artists_sparse

1

2

3

| artist_df = pd.read_csv(dp[0], header=None, index_col=[0])

artist_names = artist_df.index.tolist()

artists_sparse = csr_matrix(artist_df)

|

wik1: Wikipedia Vectors

1

2

| wik1 = pd.read_csv(dp[10], index_col=0).T

wik1.iloc[:4, :10]

|

| 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |

|---|

| HTTP 404 | 0.0 | 0.0 | 0.000000 | 0.0 | 0.0 | 0.0 | 0.0 | 0.000000 | 0.0 | 0.0 |

|---|

| Alexa Internet | 0.0 | 0.0 | 0.029607 | 0.0 | 0.0 | 0.0 | 0.0 | 0.000000 | 0.0 | 0.0 |

|---|

| Internet Explorer | 0.0 | 0.0 | 0.000000 | 0.0 | 0.0 | 0.0 | 0.0 | 0.003772 | 0.0 | 0.0 |

|---|

| HTTP cookie | 0.0 | 0.0 | 0.000000 | 0.0 | 0.0 | 0.0 | 0.0 | 0.000000 | 0.0 | 0.0 |

|---|

1

2

| wik1_sparse = csr_matrix(wik1)

wik1_sparse

|

1

2

| <60x13125 sparse matrix of type '<class 'numpy.float64'>'

with 42091 stored elements in Compressed Sparse Row format>

|

wik2: Wikipedia Vocabulary

1

2

| wik2 = pd.read_csv(dp[11], header=None)

wik2.head(2)

|

Memory Usage

1

2

3

4

5

| # These are the usual ipython objects, including this one you are creating

ipython_vars = ['In', 'Out', 'exit', 'quit', 'get_ipython', 'ipython_vars'] # list a variables

# Get a sorted list of the objects and their sizes

sorted([(x, sys.getsizeof(globals().get(x))) for x in dir() if not x.startswith('_') and x not in sys.modules and x not in ipython_vars], key=lambda x: x[1], reverse=True)[:11]

|

1

2

3

4

5

6

7

8

9

10

11

| [('wik1', 6303933),

('wik2', 740775),

('stk', 465818),

('artist_df', 450773),

('euv', 198170),

('lcd', 83364),

('mus2', 69620),

('win', 30222),

('sed', 26274),

('fsh', 8817),

('mus1', 6841)]

|

Clustering for dataset exploration

Learn how to discover the underlying groups (or “clusters”) in a dataset. By the end of this chapter, you’ll be clustering companies using their stock market prices, and distinguishing different species by clustering their measurements.

Unsupervised Learning

- We’re here to learn about unsupervised learning in Python.

- Unsupervised learning is a class of machine learning techniques for discovering patterns in data. For instance, finding the natural “clusters” of customers based on their purchase histories, or searching for patterns and correlations among these purchases, and using these patterns to express the data in a compressed form. These are examples of unsupervised learning techniques called “clustering” and “dimension reduction”.

- Supervised vs unsupervised learning

- Unsupervised learning is defined in opposition to supervised learning.

- An example of supervised learning is using the measurements of tumors to classify them as benign or cancerous.

- In this case, the pattern discovery is guided, or “supervised”, so that the patterns are as useful as possible for predicting the label: benign or cancerous.

- Unsupervised learning, in contrast, is learning without labels.

- It is pure pattern discovery, unguided by a prediction task. You’ll start by learning about clustering.

- Iris dataset

- The iris dataset consists of the measurements of many iris plants of three different species.

- setosa

- versicolor

- virginica

- There are four measurements: petal length, petal width, sepal length and sepal width. These are the features of the dataset.

- Arrays, features & samples

- Throughout this course, datasets like this will be written as two-dimensional numpy arrays.

- The columns of the array will correspond to the features.

- The measurements for individual plants are the samples of the dataset. These correspond to rows of the array.

- Iris data is 4-dimensional

- The samples of the iris dataset have four measurements, and so correspond to points in a four-dimensional space.

- This is the dimension of the dataset.

- We can’t visualize four dimensions directly, but using unsupervised learning techniques we can still gain insight.

- k-means clustering

- In this chapter, we’ll cluster these samples using k-means clustering.

- k-means finds a specified number of clusters in the samples.

- It’s implemented in the scikit-learn or “sklearn” library. Let’s see

kmeans in action on some samples from the iris dataset.

- k-means clustering with scikit-learn

- The iris samples are represented as an array. To start, import kmeans from scikit-learn.

- Then create a kmeans model, specifying the number of clusters you want to find.

- Let’s specify 3 clusters, since there are three species of iris.

- Now call the fit method of the model, passing the array of samples.

- This fits the model to the data, by locating and remembering the regions where the different clusters occur.

- Then we can use the predict method of the model on these same samples.

- This returns a cluster label for each sample, indicating to which cluster a sample belongs.

- Let’s assign the result to labels, and print it out.

- Cluster labels for new samples

- If someone comes along with some new iris samples, k-means can determine to which clusters they belong without starting over.

- k-means does this by remembering the mean of the samples in each cluster.

- These are called the “centroids”.

- New samples are assigned to the cluster whose centroid is closest.

- Suppose you’ve got an array of new samples.

- To assign the new samples to the existing clusters, pass the array of new samples to the predict method of the kmeans model.

- This returns the cluster labels of the new samples.

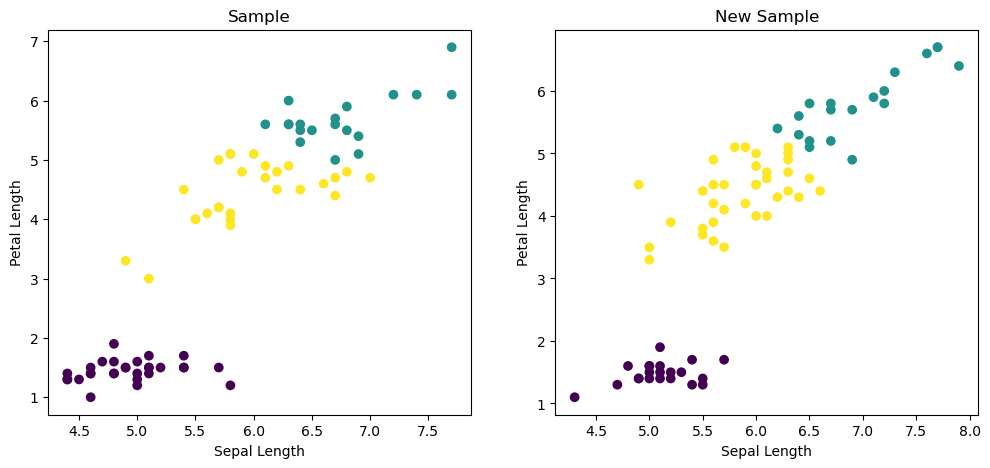

- Scatter plots

- In the next video, you’ll learn how to evaluate the quality of your clustering.

- Let’s visualize our clustering of the iris samples using scatter plots.

- Here is a scatter plot of the sepal length vs petal length of the iris samples. Each point represents an iris sample, and is colored according to the cluster of the sample.

- To create a scatter plot like this, use PyPlot.

- Firstly, import PyPlot. It is conventionally imported as plt.

- Now get the x- and y- co-ordinates of each sample.

- Sepal length is in the 0th column of the array, while petal length is in the 2nd column.

- Now call the plt.scatter function, passing the x- and y- co-ordinates and specifying c=labels to color by cluster label.

- When you are ready to show your plot, call plt.show().

1

2

3

4

| iris = sns.load_dataset('iris')

iris_samples = iris.sample(n=75, replace=False, random_state=3)

X_iris = iris_samples.iloc[:, :4]

y_iris = iris_samples.species

|

| sepal_length | sepal_width | petal_length | petal_width | species |

|---|

| 47 | 4.6 | 3.2 | 1.4 | 0.2 | setosa |

|---|

| 3 | 4.6 | 3.1 | 1.5 | 0.2 | setosa |

|---|

| 31 | 5.4 | 3.4 | 1.5 | 0.4 | setosa |

|---|

| 25 | 5.0 | 3.0 | 1.6 | 0.2 | setosa |

|---|

| 15 | 5.7 | 4.4 | 1.5 | 0.4 | setosa |

|---|

1

2

3

4

5

| iris_model = KMeans(n_clusters=3, n_init=10)

iris_model.fit(X_iris)

iris_labels = iris_model.predict(X_iris)

iris_labels

|

1

2

3

4

| array([0, 0, 0, 0, 0, 1, 2, 0, 1, 2, 2, 0, 2, 2, 1, 0, 2, 1, 2, 0, 2, 1,

1, 2, 0, 1, 1, 2, 2, 2, 0, 0, 1, 2, 0, 0, 1, 0, 1, 2, 1, 2, 0, 0,

2, 2, 0, 2, 2, 2, 0, 0, 2, 2, 2, 0, 1, 0, 1, 2, 0, 0, 1, 2, 0, 0,

2, 1, 1, 0, 1, 2, 0, 0, 1])

|

1

2

3

4

5

6

| iris_new_samples = iris[~iris.index.isin(iris_samples.index)].copy()

X_iris_new = iris_new_samples.iloc[:, :4]

y_iris_new = iris_new_samples.species

iris_new_labels = iris_model.predict(X_iris_new)

iris_new_labels

|

1

2

3

4

| array([0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1,

2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2,

2, 2, 2, 2, 1, 1, 1, 2, 1, 1, 1, 2, 1, 1, 2, 1, 2, 1, 2, 1, 1, 1,

1, 2, 2, 1, 1, 2, 1, 1, 2])

|

1

2

3

4

5

| iris_new_samples['pred_labels'] = iris_new_labels

iris_samples['pred_labels'] = iris_labels

pred_labels = pd.concat([iris_new_samples[['species', 'pred_labels']], iris_samples[['species', 'pred_labels']]]).sort_index()

pred_labels.head(2)

|

| species | pred_labels |

|---|

| 0 | setosa | 0 |

|---|

| 1 | setosa | 0 |

|---|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

| fig, (ax1, ax2) = plt.subplots(nrows=1, ncols=2, figsize=(12, 5))

xs = X_iris.sepal_length

ys = X_iris.petal_length

xs_new = X_iris_new.sepal_length

ys_new = X_iris_new.petal_length

ax1.scatter(xs, ys, c=iris_labels)

ax1.set_ylabel('Petal Length')

ax1.set_xlabel('Sepal Length')

ax1.set_title('Sample')

ax2.scatter(xs_new, ys_new, c=iris_new_labels)

ax2.set_ylabel('Petal Length')

ax2.set_xlabel('Sepal Length')

ax2.set_title('New Sample')

plt.show()

|

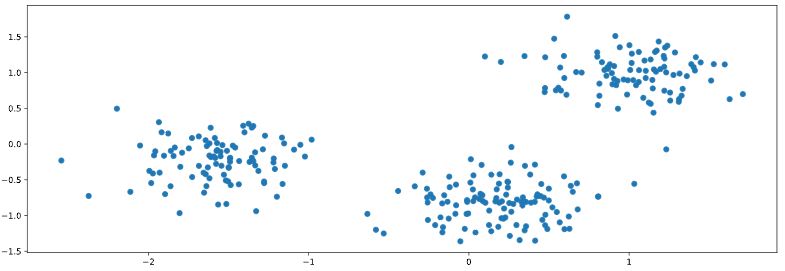

How many clusters?

You are given an array points of size 300x2, where each row gives the (x, y) co-ordinates of a point on a map. Make a scatter plot of these points, and use the scatter plot to guess how many clusters there are.

matplotlib.pyplot has already been imported as plt. In the IPython Shell:

- Create an array called

xs that contains the values of points[:,0] - that is, column 0 of points. - Create an array called

ys that contains the values of points[:,1] - that is, column 1 of points. - Make a scatter plot by passing

xs and ys to the plt.scatter() function. - Call the

plt.show() function to show your plot.

How many clusters do you see?

Possible Answers

1

| pen = sns.load_dataset('penguins').dropna()

|

| species | island | bill_length_mm | bill_depth_mm | flipper_length_mm | body_mass_g | sex |

|---|

| 0 | Adelie | Torgersen | 39.1 | 18.7 | 181.0 | 3750.0 | Male |

|---|

| 1 | Adelie | Torgersen | 39.5 | 17.4 | 186.0 | 3800.0 | Female |

|---|

| 2 | Adelie | Torgersen | 40.3 | 18.0 | 195.0 | 3250.0 | Female |

|---|

| 4 | Adelie | Torgersen | 36.7 | 19.3 | 193.0 | 3450.0 | Female |

|---|

| 5 | Adelie | Torgersen | 39.3 | 20.6 | 190.0 | 3650.0 | Male |

|---|

| ... | ... | ... | ... | ... | ... | ... | ... |

|---|

| 338 | Gentoo | Biscoe | 47.2 | 13.7 | 214.0 | 4925.0 | Female |

|---|

| 340 | Gentoo | Biscoe | 46.8 | 14.3 | 215.0 | 4850.0 | Female |

|---|

| 341 | Gentoo | Biscoe | 50.4 | 15.7 | 222.0 | 5750.0 | Male |

|---|

| 342 | Gentoo | Biscoe | 45.2 | 14.8 | 212.0 | 5200.0 | Female |

|---|

| 343 | Gentoo | Biscoe | 49.9 | 16.1 | 213.0 | 5400.0 | Male |

|---|

333 rows × 7 columns

1

2

| points = pen.iloc[:, 2:4]

points.head()

|

| bill_length_mm | bill_depth_mm |

|---|

| 0 | 39.1 | 18.7 |

|---|

| 1 | 39.5 | 17.4 |

|---|

| 2 | 40.3 | 18.0 |

|---|

| 4 | 36.7 | 19.3 |

|---|

| 5 | 39.3 | 20.6 |

|---|

1

2

3

4

5

6

7

| xs = points.bill_length_mm

ys = points.bill_depth_mm

sns.scatterplot(x=xs, y=ys, hue=pen.species)

plt.legend(title='Species', bbox_to_anchor=(1.05, 1), loc='upper left')

plt.title('Species With Real Labels')

plt.show()

|

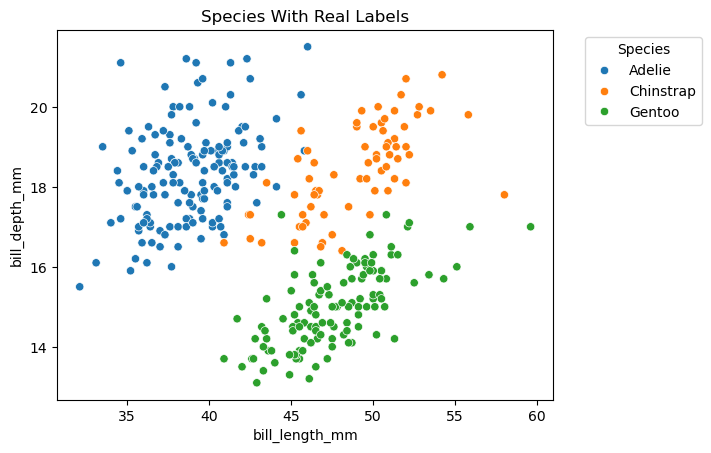

Clustering 2D points

From the scatter plot of the previous exercise, you saw that the points seem to separate into 3 clusters. You’ll now create a KMeans model to find 3 clusters, and fit it to the data points from the previous exercise. After the model has been fit, you’ll obtain the cluster labels for some new points using the .predict() method.

You are given the array points from the previous exercise, and also an array new_points.

Instructions

- Import

KMeans from sklearn.cluster. - Using

KMeans(), create a KMeans instance called model to find 3 clusters. To specify the number of clusters, use the n_clusters keyword argument. - Use the

.fit() method of model to fit the model to the array of points points. - Use the

.predict() method of model to predict the cluster labels of new_points, assigning the result to labels. - Hit ‘Submit Answer’ to see the cluster labels of

new_points.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

| # create points

points = pen.iloc[:, 2:4].sample(n=177, random_state=3)

new_points = pen[~pen.index.isin(points.index)].iloc[:, 2:4]

# Import KMeans

# from sklearn.cluster import KMeans

# Create a KMeans instance with 3 clusters: model

model = KMeans(n_clusters=3, n_init=10)

# Fit model to points

model.fit(points)

labels = model.predict(points)

# Determine the cluster labels of new_points: labels

new_labels = model.predict(new_points)

# Print cluster labels of new_points

print(new_labels)

|

1

2

3

4

5

| [2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 1 2 2 2 2 2 2 2

2 2 1 2 2 2 2 2 2 2 2 2 2 2 2 2 2 1 2 2 2 2 2 2 2 2 2 0 0 0 1 1 1 1 0 0 0

0 1 0 0 0 1 2 1 1 1 0 1 0 0 0 0 1 1 1 0 1 0 0 0 0 1 1 1 1 1 1 0 1 1 1 0 0

1 0 1 1 1 1 0 1 0 1 0 1 1 1 0 1 1 1 0 1 0 0 0 1 1 1 1 1 0 0 0 1 0 0 0 0 0

1 0 1 1 1 0 1 0]

|

| bill_length_mm | bill_depth_mm |

|---|

| 124 | 35.2 | 15.9 |

|---|

| 159 | 51.3 | 18.2 |

|---|

| 309 | 52.1 | 17.0 |

|---|

| 20 | 37.8 | 18.3 |

|---|

| 90 | 35.7 | 18.0 |

|---|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

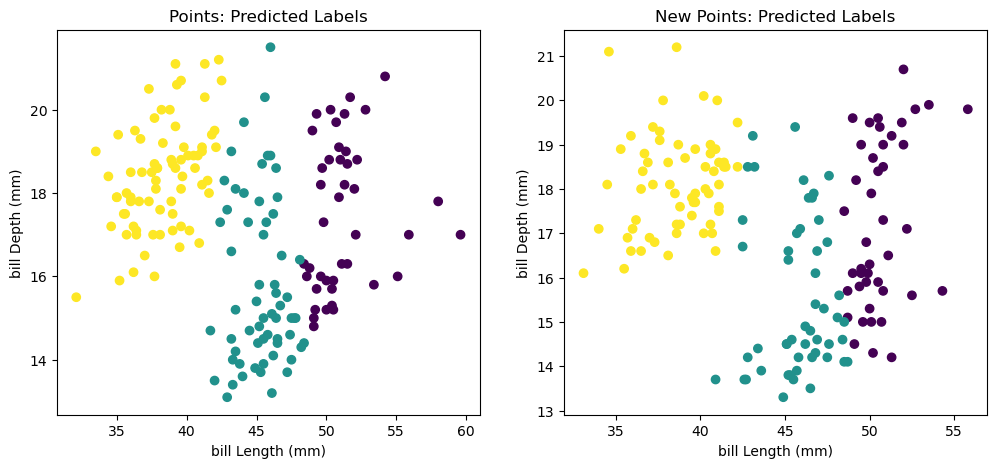

17

| fig, (ax1, ax2) = plt.subplots(nrows=1, ncols=2, figsize=(12, 5))

xs = points.bill_length_mm

ys = points.bill_depth_mm

xs_new = new_points.bill_length_mm

ys_new = new_points.bill_depth_mm

ax1.scatter(xs, ys, c=labels)

ax1.set_ylabel('bill Depth (mm)')

ax1.set_xlabel('bill Length (mm)')

ax1.set_title('Points: Predicted Labels')

ax2.scatter(xs_new, ys_new, c=new_labels)

ax2.set_ylabel('bill Depth (mm)')

ax2.set_xlabel('bill Length (mm)')

ax2.set_title('New Points: Predicted Labels')

plt.show()

|

You’ve successfully performed k-Means clustering and predicted the labels of new points. But it is not easy to inspect the clustering by just looking at the printed labels. A visualization would be far more useful. In the next exercise, you’ll inspect your clustering with a scatter plot!

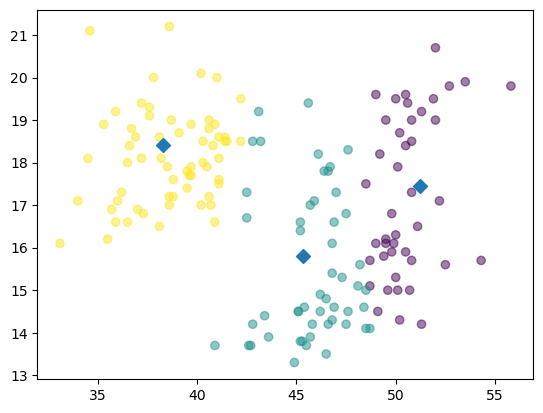

Inspect your clustering

Let’s now inspect the clustering you performed in the previous exercise!

A solution to the previous exercise has already run, so new_points is an array of points and labels is the array of their cluster labels.

Instructions

- Import

matplotlib.pyplot as plt. - Assign column

0 of new_points to xs, and column 1 of new_points to ys. - Make a scatter plot of

xs and ys, specifying the c=labels keyword arguments to color the points by their cluster label. Also specify alpha=0.5. - Compute the coordinates of the centroids using the

.cluster_centers_ attribute of model. - Assign column

0 of centroids to centroids_x, and column 1 of centroids to centroids_y. - Make a scatter plot of

centroids_x and centroids_y, using 'D' (a diamond) as a marker by specifying the marker parameter. Set the size of the markers to be 50 using s=50.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

| # Import pyplot

# import matplotlib.pyplot as plt

new_points = new_points.to_numpy()

# Assign the columns of new_points: xs and ys

xs = new_points[:, 0]

ys = new_points[:, 1]

# Make a scatter plot of xs and ys, using labels to define the colors

plt.scatter(xs, ys, c=new_labels, alpha=0.5)

# Assign the cluster centers: centroids

centroids = model.cluster_centers_

# Assign the columns of centroids: centroids_x, centroids_y

centroids_x = centroids[:,0]

centroids_y = centroids[:,1]

# Make a scatter plot of centroids_x and centroids_y

plt.scatter(centroids_x, centroids_y, marker='D', s=50)

plt.show()

|

The clustering looks great! But how can you be sure that 3 clusters is the correct choice? In other words, how can you evaluate the quality of a clustering? Tune into the next video in which Ben will explain how to evaluate a clustering!

Evaluating a clustering

- In the previous video, we used k-means to cluster the iris samples into three clusters.

- But how can we evaluate the quality of this clustering?

- Evaluating a clustering

- A direct approach is to compare the clusters with the iris species.

- You’ll learn about this first, before considering the problem of how to measure the quality of a clustering in a way that doesn’t require our samples to come pre-grouped into species.

- This measure of quality can then be used to make an informed choice about the number of clusters to look for.

- Iris: clusters vs species

- Firstly, let’s check whether the 3 clusters of iris samples have any correspondence to the iris species.

- The correspondence is described by this table.

- There is one column for each of the three species of iris: setosa, versicolor and virginica, and one row for each of the three cluster labels: 0, 1 and 2.

- The table shows the number of samples that have each possible cluster label/species combination.

- For example, we see that cluster 1 corresponds perfectly with the species setosa.

- On the other hand, while cluster 0 contains mainly virginica samples, there are also some virginica samples in cluster 2.

- Cross tabulation with pandas

- Tables like these are called “cross-tabulations”.

- To construct one, we are going to use the pandas library.

- Let’s assume the species of each sample is given as a list of strings.

- Aligning labels and species

- Import pandas, and then create a two-column DataFrame, where the first column is cluster labels and the second column is the iris species, so that each row gives the cluster label and species of a single sample.

- Crosstab of labels and species

- Now use the pandas crosstab function to build the cross tabulation, passing the two columns of the DataFrame.

- Cross tabulations like these provide great insights into which sort of samples are in which cluster.

- But in most datasets, the samples are not labeled by species.

- How can the quality of a clustering be evaluated in these cases?

- Measuring clustering quality

- We need a way to measure the quality of a clustering that uses only the clusters and the samples themselves.

- A good clustering has tight clusters, meaning that the samples in each cluster are bunched together, not spread out.

- Inertia measures clustering quality

- How spread out the samples within each cluster are can be measured by the “inertia”.

- Intuitively, inertia measures how far samples are from their centroids.

- You can find the precise definition in the scikit-learn documentation.

- We want clusters that are not spread out, so lower values of the inertia are better.

- The inertia of a kmeans model is measured automatically when any of the

.fit() methods are called, and is available afterwards as the .inertia_ attribute. - In fact, kmeans aims to place the clusters in a way that minimizes the inertia.

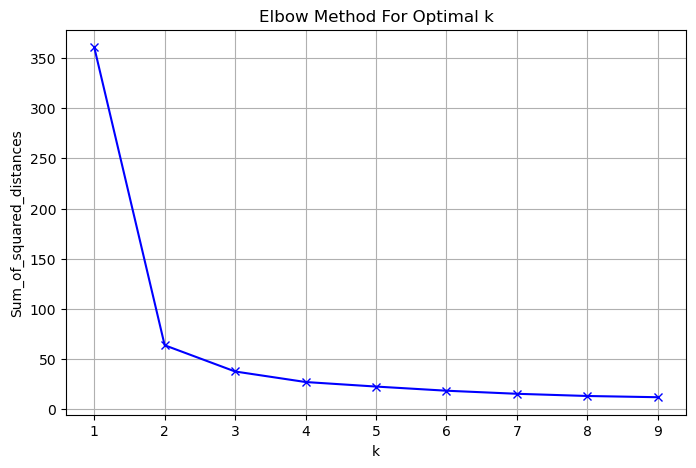

- The number of clusters

- Here is a plot of the inertia values of clusterings of the iris dataset with different numbers of clusters.

- Our kmeans model with 3 clusters has relatively low inertia, which is great.

- But notice that the inertia continues to decrease slowly.

- So what’s the best number of clusters to choose?

- How many clusters to choose?

- Ultimately, this is a trade-off.

- A good clustering has tight clusters (meaning low inertia).

- But it also doesn’t have too many clusters.

- A good rule of thumb is to choose an elbow in the inertia plot, that is, a point where the inertia begins to decrease more slowly.

- For example, by this criterion, 3 is a good number of clusters for the iris dataset.

1

2

| ct = pd.crosstab(pred_labels.pred_labels, pred_labels.species)

ct

|

| species | setosa | versicolor | virginica |

|---|

| pred_labels | | | |

|---|

| 0 | 50 | 0 | 0 |

|---|

| 1 | 0 | 2 | 36 |

|---|

| 2 | 0 | 48 | 14 |

|---|

1

2

3

4

5

6

| Sum_of_squared_distances = list()

K = range(1, 10)

for k in K:

km = KMeans(n_clusters=k, n_init=10)

km = km.fit(X_iris)

Sum_of_squared_distances.append(km.inertia_)

|

1

2

3

4

5

6

7

| plt.figure(figsize=(8, 5))

plt.plot(K, Sum_of_squared_distances, 'bx-')

plt.xlabel('k')

plt.ylabel('Sum_of_squared_distances')

plt.title('Elbow Method For Optimal k')

plt.grid()

plt.show()

|

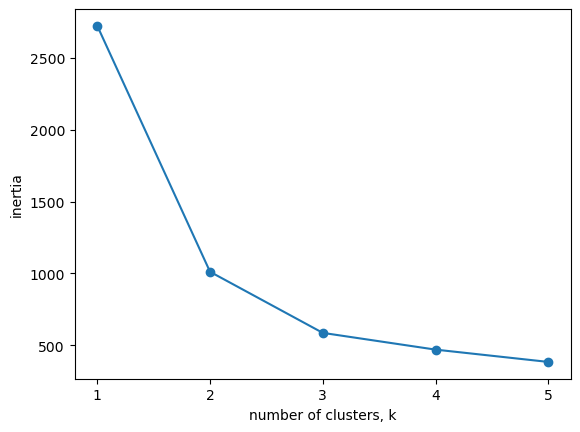

How many clusters of grain?

In the video, you learned how to choose a good number of clusters for a dataset using the k-means inertia graph. You are given an array samples containing the measurements (such as area, perimeter, length, and several others) of samples of grain. What’s a good number of clusters in this case?

KMeans and PyPlot (plt) have already been imported for you.

This dataset was sourced from the UCI Machine Learning Repository.

Instructions

- For each of the given values of

k, perform the following steps: - Create a

KMeans instance called model with k clusters. - Fit the model to the grain data

samples. - Append the value of the

inertia_ attribute of model to the list inertias. - The code to plot

ks vs inertias has been written for you, so hit ‘Submit Answer’ to see the plot!

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

| samples = sed.iloc[:, :-2]

ks = range(1, 6)

inertias = list()

for k in ks:

# Create a KMeans instance with k clusters: model

model = KMeans(n_clusters=k, n_init=10)

# Fit model to samples

model.fit(samples)

# Append the inertia to the list of inertias

inertias.append(model.inertia_)

# Plot ks vs inertias

plt.plot(ks, inertias, '-o')

plt.xlabel('number of clusters, k')

plt.ylabel('inertia')

plt.xticks(ks)

plt.show()

|

The inertia decreases very slowly from 3 clusters to 4, so it looks like 3 clusters would be a good choice for this data.

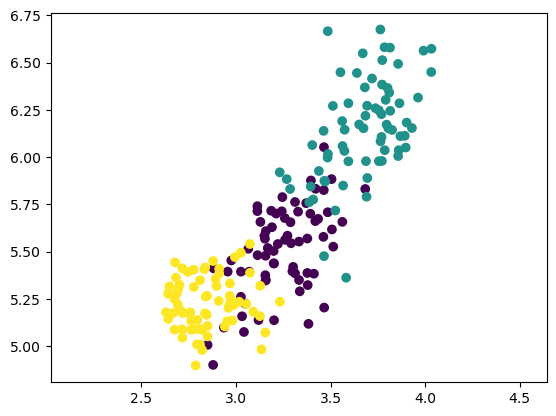

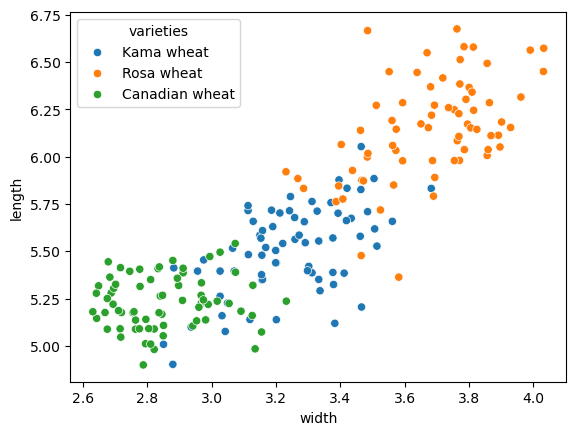

Evaluating the grain clustering

In the previous exercise, you observed from the inertia plot that 3 is a good number of clusters for the grain data. In fact, the grain samples come from a mix of 3 different grain varieties: “Kama”, “Rosa” and “Canadian”. In this exercise, cluster the grain samples into three clusters, and compare the clusters to the grain varieties using a cross-tabulation.

You have the array samples of grain samples, and a list varieties giving the grain variety for each sample. Pandas (pd) and KMeans have already been imported for you.

Instructions

- Create a

KMeans model called model with 3 clusters. - Use the

.fit_predict() method of model to fit it to samples and derive the cluster labels. Using .fit_predict() is the same as using .fit() followed by .predict(). - Create a DataFrame

df with two columns named 'labels' and 'varieties', using labels and varieties, respectively, for the column values. This has been done for you. - Use the

pd.crosstab() function on df['labels'] and df['varieties'] to count the number of times each grain variety coincides with each cluster label. Assign the result to ct. - Hit ‘Submit Answer’ to see the cross-tabulation!

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

| varieties = sed.varieties

# Create a KMeans model with 3 clusters: model

model = KMeans(n_clusters=3, n_init=10)

# Use fit_predict to fit model and obtain cluster labels: labels

labels = model.fit_predict(samples)

# Create a DataFrame with labels and varieties as columns: df

df = pd.DataFrame({'labels': labels, 'varieties': varieties})

# Create crosstab: ct

ct = pd.crosstab(df.labels, df.varieties)

# Display ct

ct

|

| varieties | Canadian wheat | Kama wheat | Rosa wheat |

|---|

| labels | | | |

|---|

| 0 | 0 | 1 | 60 |

|---|

| 1 | 68 | 9 | 0 |

|---|

| 2 | 2 | 60 | 10 |

|---|

The cross-tabulation shows that the 3 varieties of grain separate really well into 3 clusters. But depending on the type of data you are working with, the clustering may not always be this good. Is there anything you can do in such situations to improve your clustering?

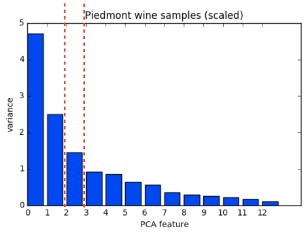

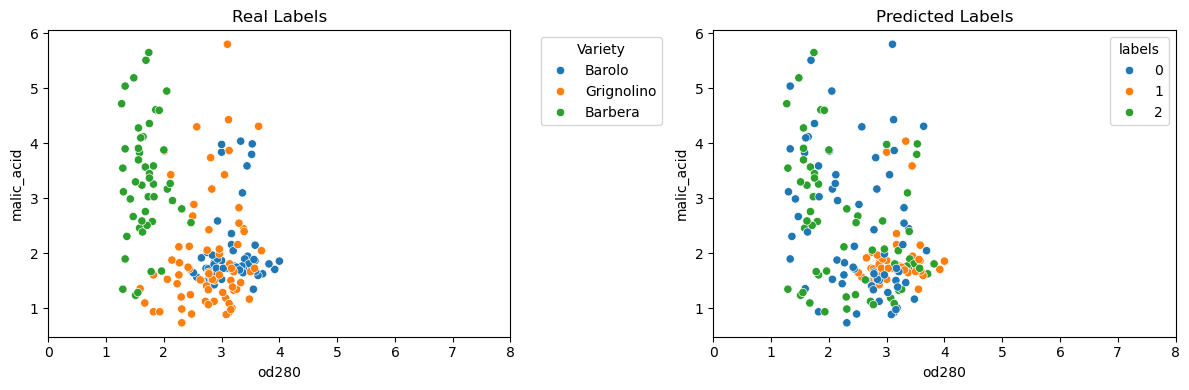

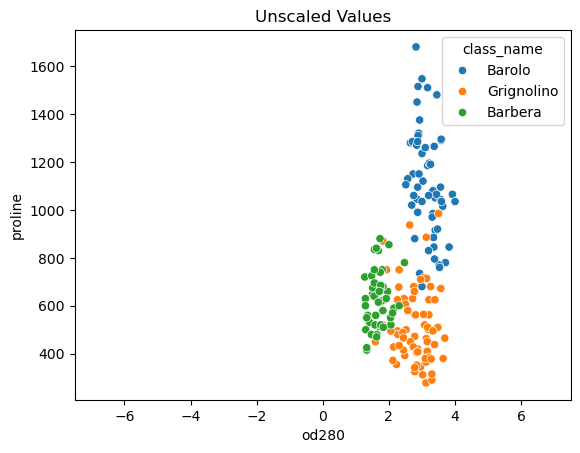

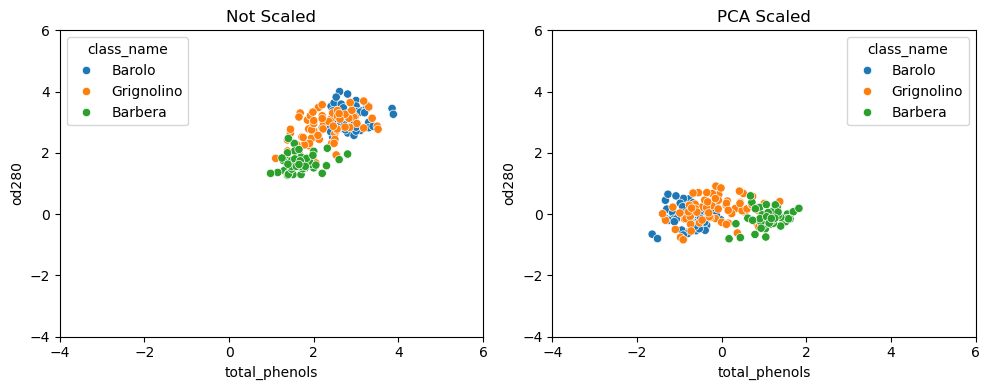

- Piedmont wines dataset

- The Piedmont wines dataset.

- We have 178 samples of red wine from the Piedmont region of Italy.

- The features measure chemical composition (like alcohol content) and visual properties like color intensity.

- The samples come from 3 distinct varieties of wine.

- Clustering the wines

- Let’s take the array of samples and use KMeans to find 3 clusters.

- Clusters vs. varieties

- There are three varieties of wine, so let’s use pandas crosstab to check the cluster label - wine variety correspondence.

- As you can see, this time things haven’t worked out so well.

- The KMeans clusters don’t correspond well with the wine varieties.

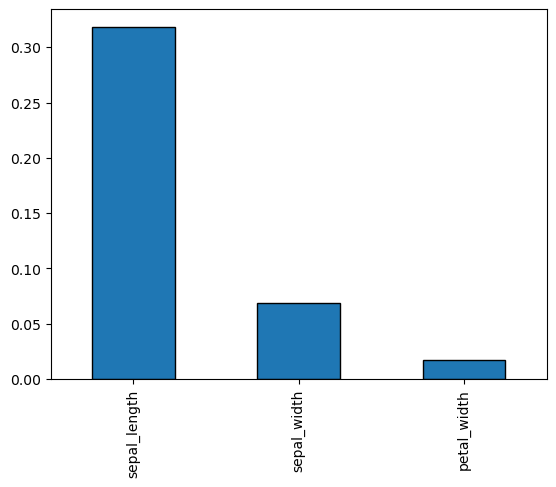

- Feature variances

- The problem is that the features of the wine dataset have very different variances.

- The variance of a feature measures the spread of its values.

- For example, the malic acid feature has a higher variance than the od280 feature, and this can also be seen in their scatter plot.

- The differences in some of the feature variances is enormous, as seen here, for example, in the scatter plot of the od280 and proline features.

- StandardScaler

- In KMeans clustering, the variance of a feature corresponds to its influence on the clustering algorithm.

- To give every feature a chance, the data needs to be transformed so that features have equal variance.

- This can be achieved with the StandardScaler from scikit-learn.

- It transforms every feature to have mean 0 and variance 1.

- The resulting “standardized” features can be very informative.

- Using standardized od280 and proline, for example, the three wine varieties are much more distinct.

- sklearn StandardScaler

- Let’s see the StandardScaler in action.

- First, import StandardScaler from sklearn.preprocessing.

- Then create a StandardScaler object, and fit it to the samples.

- The transform method can now be used to standardize any samples, either the same ones, or completely new ones.

- Similar methods

- The APIs of StandardScaler and KMeans are similar, but there is an important difference.

- StandardScaler transforms data, and so has a transform method.

- KMeans, in contrast, assigns cluster labels to samples, and this done using the predict method.

- StandardScaler, then KMeans

- Let’s return to the problem of clustering the wines.

- We need to perform two steps.

- Firstly, to standardize the data using StandardScaler, and secondly to take the standardized data and cluster it using KMeans.

- This can be conveniently achieved by combining the two steps using a scikit-learn pipeline.

- Data then flows from one step into the next, automatically.

- Pipelines combine multiple steps

- The first steps are the same: creating a StandardScaler and a KMeans object.

- After that, import the make_pipeline function from sklearn.pipeline.

- Apply the make_pipeline function to the steps that you want to compose in this case, the scaler and the kmeans objects.

- Now use the fit method of the pipeline to fit both the scaler and kmeans, and use its predict method to obtain the cluster labels.

- Feature standardization improves clustering

- Checking the correspondence between the cluster labels and the wine varieties reveals that this new clustering, incorporating standardization, is fantastic.

- Its three clusters correspond almost exactly to the three wine varieties.

- This is a huge improvement on the clustering without standardization.

- sklearn preprocessing steps

- StandardScaler is an example of a “preprocessing” step.

- There are several of these available in scikit-learn, for example MaxAbsScaler and Normalizer.

| class_label | class_name | alcohol | malic_acid | ash | alcalinity_of_ash | magnesium | total_phenols | flavanoids | nonflavanoid_phenols | proanthocyanins | color_intensity | hue | od280 | proline |

|---|

| 0 | 1 | Barolo | 14.23 | 1.71 | 2.43 | 15.6 | 127 | 2.80 | 3.06 | 0.28 | 2.29 | 5.64 | 1.04 | 3.92 | 1065 |

|---|

| 1 | 1 | Barolo | 13.20 | 1.78 | 2.14 | 11.2 | 100 | 2.65 | 2.76 | 0.26 | 1.28 | 4.38 | 1.05 | 3.40 | 1050 |

|---|

1

2

3

4

5

6

7

| wine_samples = win.iloc[:, 2:]

wine_model = KMeans(n_clusters=3, n_init=10)

wine_labels = wine_model.fit_predict(wine_samples)

wine_pred = pd.DataFrame({'labels': wine_labels, 'varieties': win.class_name})

wine_ct = pd.crosstab(wine_pred.labels, wine_pred.varieties)

wine_ct

|

| varieties | Barbera | Barolo | Grignolino |

|---|

| labels | | | |

|---|

| 0 | 19 | 0 | 50 |

|---|

| 1 | 0 | 46 | 1 |

|---|

| 2 | 29 | 13 | 20 |

|---|

1

| wine_samples.var().round(3)

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

| alcohol 0.659

malic_acid 1.248

ash 0.075

alcalinity_of_ash 11.153

magnesium 203.989

total_phenols 0.392

flavanoids 0.998

nonflavanoid_phenols 0.015

proanthocyanins 0.328

color_intensity 5.374

hue 0.052

od280 0.504

proline 99166.717

dtype: float64

|

1

2

3

4

5

6

7

8

9

10

11

| fig, (ax1, ax2) = plt.subplots(ncols=2, figsize=(12, 4))

sns.scatterplot(data=win, x='od280', y='malic_acid', hue='class_name', ax=ax1)

ax1.legend(title='Variety', bbox_to_anchor=(1.05, 1), loc='upper left')

ax1.set_xlim(0, 8)

ax1.set_title('Real Labels')

sns.scatterplot(data=win, x='od280', y='malic_acid', hue=wine_pred.labels, palette="tab10", ax=ax2)

ax2.set_xlim(0, 8)

ax2.set_title('Predicted Labels')

plt.tight_layout()

|

1

2

3

| p1 = sns.scatterplot(data=win, x='od280', y='proline', hue='class_name')

p1.set_xlim(-7.5, 7.5)

p1.set_title('Unscaled Values');

|

1

2

3

4

| wine_scaler = StandardScaler()

wine_scaler.fit(wine_samples)

StandardScaler(copy=True, with_mean=True, with_std=True)

wine_samples_scaled = wine_scaler.transform(wine_samples)

|

1

2

| wine_samples_scaled = pd.DataFrame(wine_samples_scaled, columns=win.columns[2:])

wine_samples_scaled.head(2)

|

| alcohol | malic_acid | ash | alcalinity_of_ash | magnesium | total_phenols | flavanoids | nonflavanoid_phenols | proanthocyanins | color_intensity | hue | od280 | proline |

|---|

| 0 | 1.518613 | -0.562250 | 0.232053 | -1.169593 | 1.913905 | 0.808997 | 1.034819 | -0.659563 | 1.224884 | 0.251717 | 0.362177 | 1.847920 | 1.013009 |

|---|

| 1 | 0.246290 | -0.499413 | -0.827996 | -2.490847 | 0.018145 | 0.568648 | 0.733629 | -0.820719 | -0.544721 | -0.293321 | 0.406051 | 1.113449 | 0.965242 |

|---|

1

2

3

| p2 = sns.scatterplot(data=wine_samples_scaled, x='od280', y='proline', hue=win.class_name)

p2.set_xlim(-7.5, 7.5)

p2.set_title('Scaled Values');

|

1

2

3

4

5

| scaler = StandardScaler()

kmeans = KMeans(n_clusters=3, n_init=10)

pipeline = make_pipeline(scaler, kmeans)

pipeline.fit(wine_samples_scaled)

wine_scaled_labels = pipeline.predict(wine_samples_scaled)

|

1

2

3

| wine_pred_scaled = pd.DataFrame({'labels': wine_scaled_labels, 'varieties': win.class_name})

wine_scaled_ct = pd.crosstab(wine_pred_scaled.labels, wine_pred_scaled.varieties)

wine_scaled_ct

|

| varieties | Barbera | Barolo | Grignolino |

|---|

| labels | | | |

|---|

| 0 | 48 | 0 | 3 |

|---|

| 1 | 0 | 0 | 65 |

|---|

| 2 | 0 | 59 | 3 |

|---|

Scaling fish data for clustering

You are given an array samples giving measurements of fish. Each row represents an individual fish. The measurements, such as weight in grams, length in centimeters, and the percentage ratio of height to length, have very different scales. In order to cluster this data effectively, you’ll need to standardize these features first. In this exercise, you’ll build a pipeline to standardize and cluster the data.

These fish measurement data were sourced from the Journal of Statistics Education.

Instructions

- Import:

make_pipeline from sklearn.pipeline.StandardScaler from sklearn.preprocessing.KMeans from sklearn.cluster.

- Create an instance of

StandardScaler called scaler. - Create an instance of

KMeans with 4 clusters called kmeans. - Create a pipeline called

pipeline that chains scaler and kmeans. To do this, you just need to pass them in as arguments to make_pipeline().

1

2

3

4

5

6

7

8

9

10

11

12

13

| # Perform the necessary imports

# from sklearn.pipeline import make_pipeline

# from sklearn.preprocessing import StandardScaler

# from sklearn.cluster import KMeans

# Create scaler: scaler

scaler = StandardScaler()

# Create KMeans instance: kmeans

kmeans = KMeans(n_clusters=4, n_init=10)

# Create pipeline: pipeline

pipeline = make_pipeline(scaler, kmeans)

|

Now that you’ve built the pipeline, you’ll use it in the next exercise to cluster the fish by their measurements.

Clustering the fish data

You’ll now use your standardization and clustering pipeline from the previous exercise to cluster the fish by their measurements, and then create a cross-tabulation to compare the cluster labels with the fish species.

As before, samples is the 2D array of fish measurements. Your pipeline is available as pipeline, and the species of every fish sample is given by the list species.

Instructions

- Import

pandas as pd. - Fit the pipeline to the fish measurements

samples. - Obtain the cluster labels for

samples by using the .predict() method of pipeline. - Using

pd.DataFrame(), create a DataFrame df with two columns named 'labels' and 'species', using labels and species, respectively, for the column values. - Using

pd.crosstab(), create a cross-tabulation ct of df['labels'] and df['species']

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

| samples = fsh.iloc[:, 1:]

species = fsh[0]

# Fit the pipeline to samples

pipeline.fit(samples)

# Calculate the cluster labels: labels

labels = pipeline.predict(samples)

# Create a DataFrame with labels and species as columns: df

df = pd.DataFrame({'labels': labels, 'species': species})

# Create crosstab: ct

ct = pd.crosstab(df.labels, df.species)

# Display ct

ct

|

| species | Bream | Pike | Roach | Smelt |

|---|

| labels | | | | |

|---|

| 0 | 1 | 0 | 19 | 1 |

|---|

| 1 | 0 | 17 | 0 | 0 |

|---|

| 2 | 33 | 0 | 1 | 0 |

|---|

| 3 | 0 | 0 | 0 | 13 |

|---|

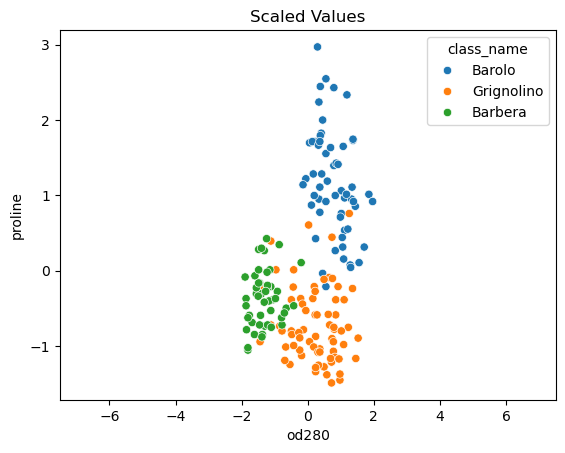

Clustering stocks using KMeans

In this exercise, you’ll cluster companies using their daily stock price movements (i.e. the dollar difference between the closing and opening prices for each trading day). You are given a NumPy array movements of daily price movements from 2010 to 2015 (obtained from Yahoo! Finance), where each row corresponds to a company, and each column corresponds to a trading day.

Some stocks are more expensive than others. To account for this, include a Normalizer at the beginning of your pipeline. The Normalizer will separately transform each company’s stock price to a relative scale before the clustering begins.

Note that Normalizer() is different to StandardScaler(), which you used in the previous exercise. While StandardScaler() standardizes features (such as the features of the fish data from the previous exercise) by removing the mean and scaling to unit variance, Normalizer() rescales each sample - here, each company’s stock price - independently of the other.

KMeans and make_pipeline have already been imported for you.

Instructions

- Import

Normalizer from sklearn.preprocessing. - Create an instance of

Normalizer called normalizer. - Create an instance of

KMeans called kmeans with 10 clusters. - Using

make_pipeline(), create a pipeline called pipeline that chains normalizer and kmeans. - Fit the pipeline to the

movements array.

1

2

| movements = stk.to_numpy()

companies = stk.index.to_list()

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

| # Import Normalizer

# from sklearn.preprocessing import Normalizer

# Create a normalizer: normalizer

normalizer = Normalizer()

# Create a KMeans model with 10 clusters: kmeans

kmeans = KMeans(n_clusters=10, random_state=12, n_init=10)

# Make a pipeline chaining normalizer and kmeans: pipeline

pipeline = make_pipeline(normalizer, kmeans)

# Fit pipeline to the daily price movements

pipeline.fit(movements)

|

Pipeline(steps=[('normalizer', Normalizer()),

('kmeans', KMeans(n_clusters=10, n_init=10, random_state=12))])In a Jupyter environment, please rerun this cell to show the HTML representation or trust the notebook.

On GitHub, the HTML representation is unable to render, please try loading this page with nbviewer.org.Now that your pipeline has been set up, you can find out which stocks move together in the next exercise!

Which stocks move together?

In the previous exercise, you clustered companies by their daily stock price movements. So which company have stock prices that tend to change in the same way? You’ll now inspect the cluster labels from your clustering to find out.

Your solution to the previous exercise has already been run. Recall that you constructed a Pipeline pipeline containing a KMeans model and fit it to the NumPy array movements of daily stock movements. In addition, a list companies of the company names is available.

Instructions

- Import

pandas as pd. - Use the

.predict() method of the pipeline to predict the labels for movements. - Align the cluster labels with the list of company names

companies by creating a DataFrame df with labels and companies as columns. This has been done for you. - Use the

.sort_values() method of df to sort the DataFrame by the 'labels' column, and print the result. - Hit ‘Submit Answer’ and take a moment to see which companies are together in each cluster!

1

2

3

4

5

6

7

8

9

| # Predict the cluster labels: labels

labels = pipeline.predict(movements)

# Create a DataFrame aligning labels and companies: df

df = pd.DataFrame({'labels': labels, 'companies': companies})

# Display df sorted by cluster label

df = df.sort_values('labels')

df

|

| labels | companies |

|---|

| 0 | 0 | Apple |

|---|

| 32 | 0 | 3M |

|---|

| 35 | 0 | Navistar |

|---|

| 13 | 0 | DuPont de Nemours |

|---|

| 8 | 0 | Caterpillar |

|---|

| 51 | 0 | Texas instruments |

|---|

| 30 | 1 | MasterCard |

|---|

| 23 | 1 | IBM |

|---|

| 43 | 1 | SAP |

|---|

| 47 | 1 | Symantec |

|---|

| 50 | 1 | Taiwan Semiconductor Manufacturing |

|---|

| 17 | 1 | Google/Alphabet |

|---|

| 56 | 2 | Wal-Mart |

|---|

| 28 | 2 | Coca Cola |

|---|

| 27 | 2 | Kimberly-Clark |

|---|

| 38 | 2 | Pepsi |

|---|

| 40 | 2 | Procter Gamble |

|---|

| 9 | 2 | Colgate-Palmolive |

|---|

| 41 | 2 | Philip Morris |

|---|

| 48 | 3 | Toyota |

|---|

| 58 | 3 | Xerox |

|---|

| 34 | 3 | Mitsubishi |

|---|

| 45 | 3 | Sony |

|---|

| 15 | 3 | Ford |

|---|

| 7 | 3 | Canon |

|---|

| 21 | 3 | Honda |

|---|

| 55 | 4 | Wells Fargo |

|---|

| 18 | 4 | Goldman Sachs |

|---|

| 5 | 4 | Bank of America |

|---|

| 26 | 4 | JPMorgan Chase |

|---|

| 16 | 4 | General Electrics |

|---|

| 1 | 4 | AIG |

|---|

| 3 | 4 | American express |

|---|

| 54 | 5 | Walgreen |

|---|

| 36 | 5 | Northrop Grumman |

|---|

| 29 | 5 | Lookheed Martin |

|---|

| 4 | 5 | Boeing |

|---|

| 44 | 6 | Schlumberger |

|---|

| 10 | 6 | ConocoPhillips |

|---|

| 12 | 6 | Chevron |

|---|

| 53 | 6 | Valero Energy |

|---|

| 39 | 6 | Pfizer |

|---|

| 25 | 6 | Johnson & Johnson |

|---|

| 57 | 6 | Exxon |

|---|

| 42 | 7 | Royal Dutch Shell |

|---|

| 20 | 7 | Home Depot |

|---|

| 52 | 7 | Unilever |

|---|

| 19 | 7 | GlaxoSmithKline |

|---|

| 46 | 7 | Sanofi-Aventis |

|---|

| 49 | 7 | Total |

|---|

| 6 | 7 | British American Tobacco |

|---|

| 37 | 7 | Novartis |

|---|

| 31 | 7 | McDonalds |

|---|

| 2 | 8 | Amazon |

|---|

| 59 | 8 | Yahoo |

|---|

| 33 | 9 | Microsoft |

|---|

| 22 | 9 | HP |

|---|

| 24 | 9 | Intel |

|---|

| 11 | 9 | Cisco |

|---|

| 14 | 9 | Dell |

|---|

Take a look at the clusters. Are you surprised by any of the results? In the next chapter, you’ll learn about how to communicate results such as this through visualizations.

1

2

3

| stk_t = stk.T.copy()

stk_t.index = pd.to_datetime(stk_t.index)

stk_t = stk_t.rolling(30).mean()

|

1

2

3

4

5

6

7

8

9

10

11

12

| fig, axes = plt.subplots(nrows=5, ncols=2, figsize=(16, 16))

axes = axes.ravel()

for i, (g, d) in enumerate(df.groupby('labels')):

cols = d.companies.tolist()

sns.lineplot(data=stk_t[cols], ax=axes[i])

axes[i].legend(bbox_to_anchor=(1.05, 1), loc='upper left')

axes[i].set_title(f'30-Day Rolling Mean: Group {g}')

axes[i].set_ylim(-3, 3)

fig.autofmt_xdate(rotation=90, ha='center')

plt.tight_layout()

plt.show()

|

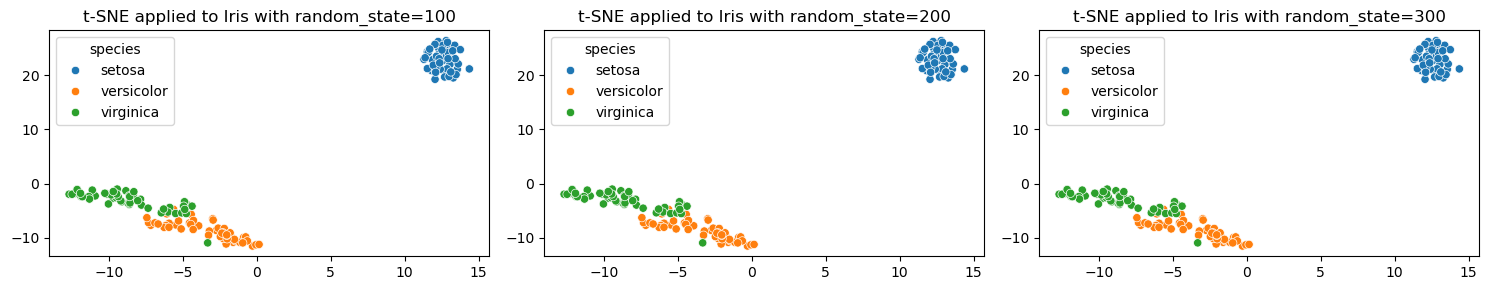

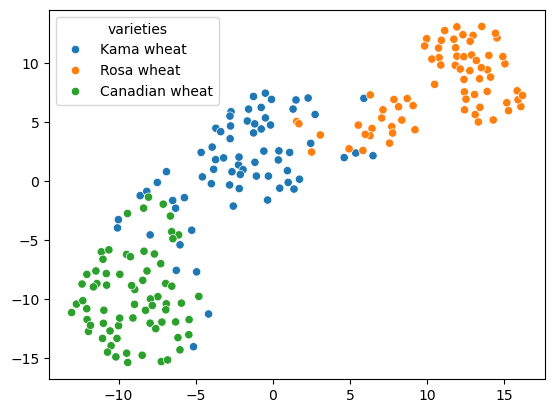

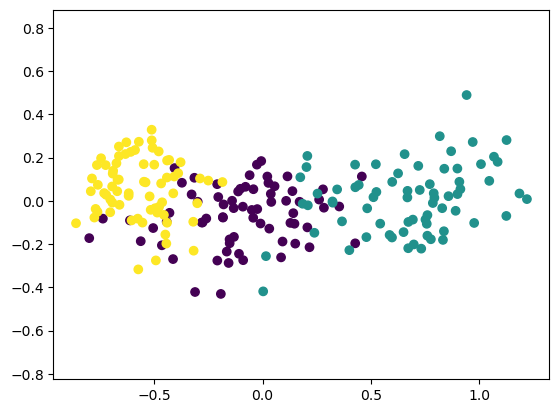

Visualization with hierarchical clustering and t-SNE

In this chapter, you’ll learn about two unsupervised learning techniques for data visualization, hierarchical clustering and t-SNE. Hierarchical clustering merges the data samples into ever-coarser clusters, yielding a tree visualization of the resulting cluster hierarchy. t-SNE maps the data samples into 2d space so that the proximity of the samples to one another can be visualized.

Visualizing hierarchies

- A huge part of your work as a data scientist will be the communication of your insights to other people.

- Visualizations communicate insight

- Visualizations are an excellent way to share your findings, particularly with a non-technical audience.

- In this chapter, you’ll learn about two unsupervised learning techniques for visualization: t-SNE and hierarchical clustering.

- t-SNE, which we’ll consider later, creates a 2d map of any dataset, and conveys useful information about the proximity of the samples to one another.

- First up, however, let’s learn about hierarchical clustering.

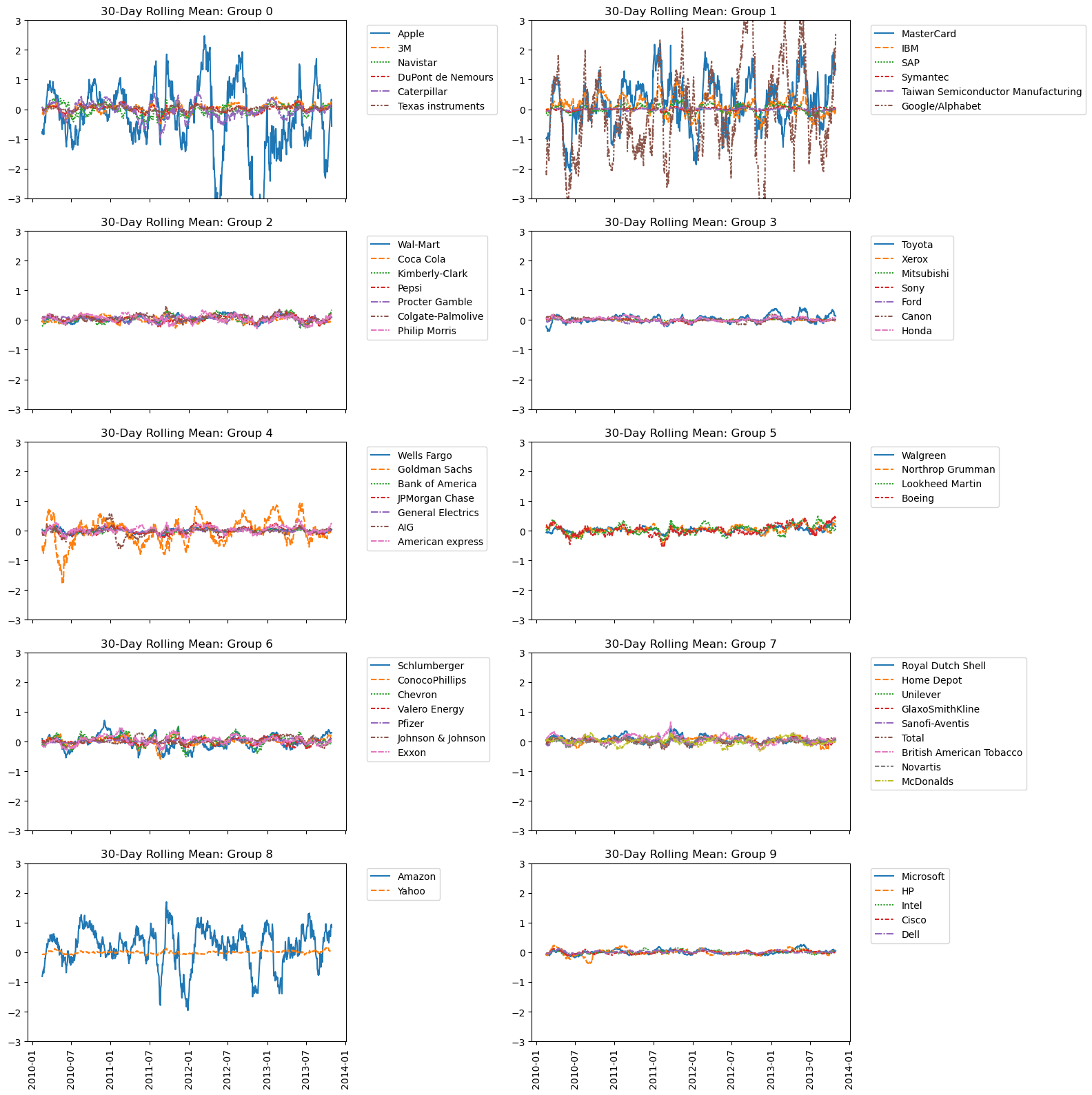

- A hierarchy of groups

- You’ve already seen many hierarchical clusterings in the real world.

- For example, living things can be organized into small narrow groups, like humans, apes, snakes and lizards, or into larger, broader groups like mammals and reptiles, or even broader groups like animals and plants.

- These groups are contained in one another, and form a hierarchy.

- Analogously, hierarchical clustering arranges samples into a hierarchy of clusters.

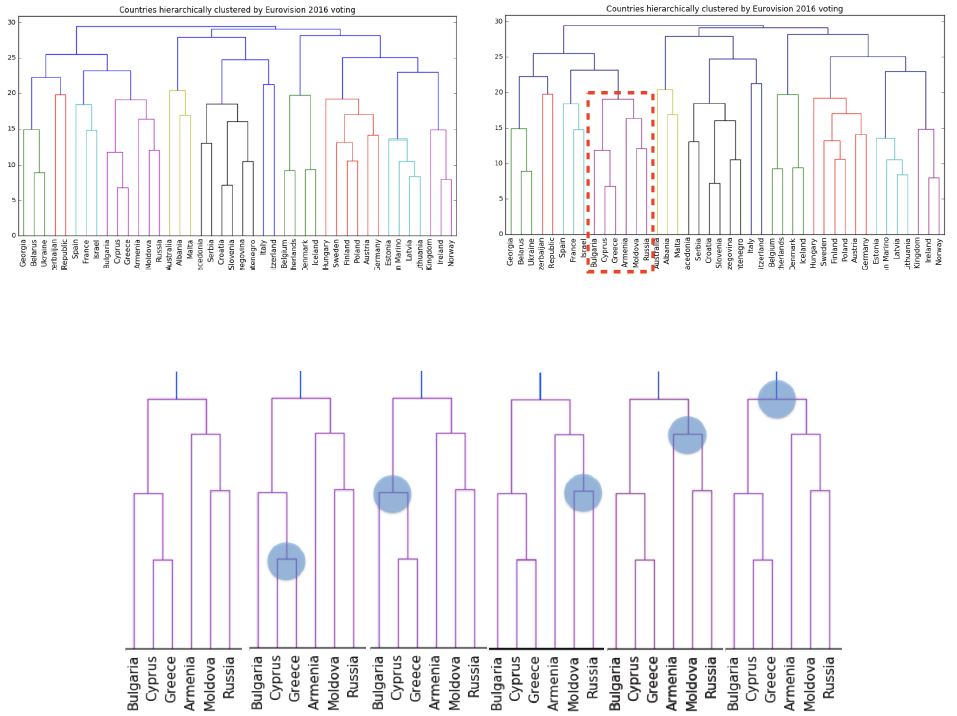

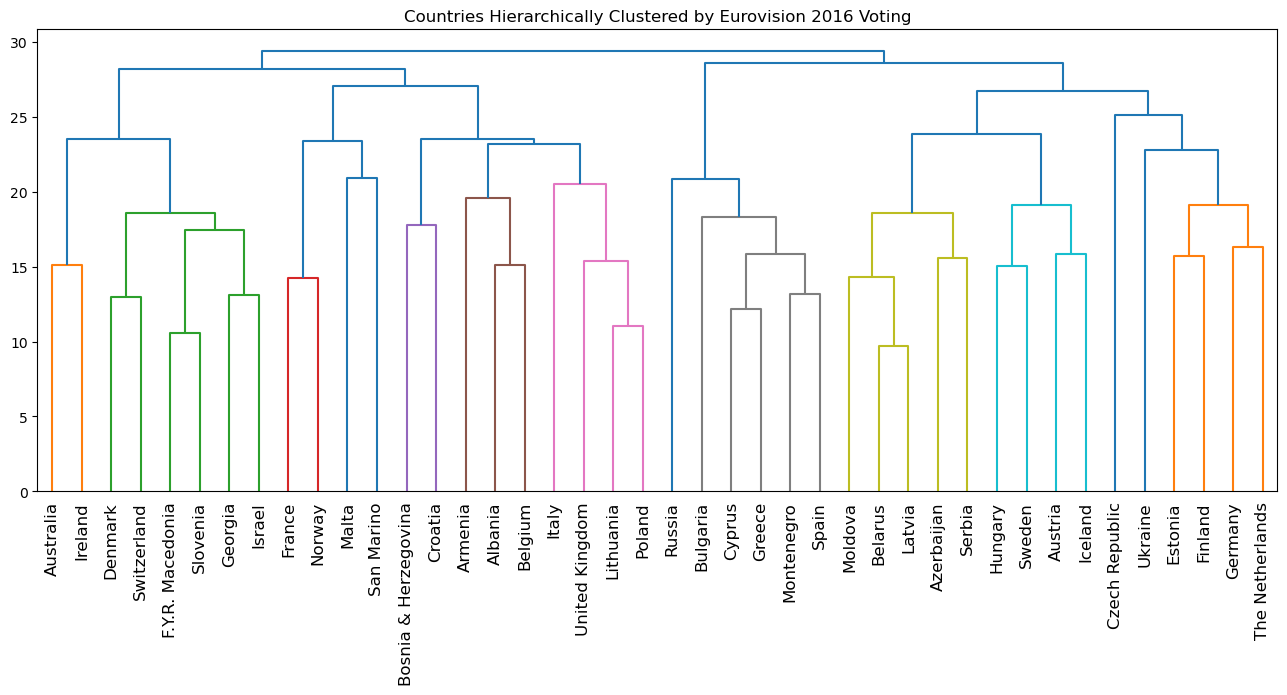

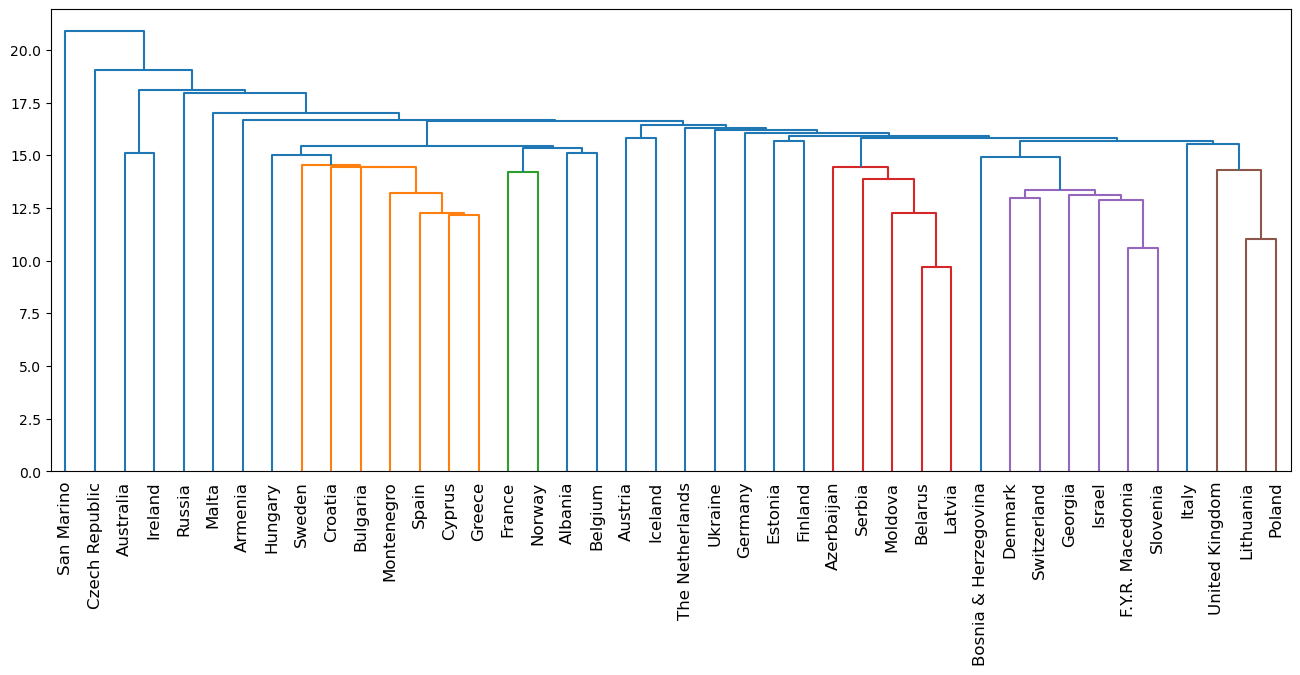

- Eurovision scoring dataset

- Hierarchical clustering can organize any sort of data into a hierarchy, not just samples of plants and animals.

- Let’s consider a new type of dataset, describing how countries scored performances at the Eurovision 2016 song contest.

- The data is arranged in a rectangular array, where the rows of the array show how many points a country gave to each song.

- The “samples” in this case are the countries.

- Hierarchical clustering of voting countries

- The result of applying hierarchical clustering to the Eurovision scores can be visualized as a tree-like diagram called a “dendrogram”.

- This single picture reveals a great deal of information about the voting behavior of countries at the Eurovision.

- The dendrogram groups the countries into larger and larger clusters, and many of these clusters are immediately recognizable as containing countries that are close to one another geographically, or that have close cultural or political ties, or that belong to single language group.

- So hierarchical clustering can produce great visualizations. But how does it work?

- Hierarchical clustering

- Hierarchical clustering proceeds in steps.

- In the beginning, every country is its own cluster - so there are as many clusters as there are countries!

- At each step, the two closest clusters are merged.

- This decreases the number of clusters, and eventually, there is only one cluster left, and it contains all the countries.

- This process is actually a particular type of hierarchical clustering called “agglomerative clustering” - there is also “divisive clustering”, which works the other way around.

- We haven’t defined yet what it means for two clusters to be close, but we’ll revisit that later on.

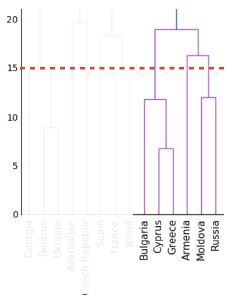

- The dendrogram of a hierarchical clustering

scipy.cluster.hierarchy.dendrogram- The entire process of the hierarchical clustering is encoded in the dendrogram.

- At the bottom, each country is in a cluster of its own.

- The clustering then proceeds from the bottom up.

- Clusters are represented as vertical lines, and a joining of vertical lines indicates a merging of clusters.

- To understand better, let’s zoom in and look at just one part of this dendrogram.

- Dendrograms, step-by-step

- In the beginning, there are six clusters, each containing only one country.

- The first merging is here, where the clusters containing Cyprus and Greece are merged together in a single cluster.

- Later on, this new cluster is merged with the cluster containing Bulgaria.

- Shortly after that, the clusters containing Moldova and Russia are merged, which later is in turn merged with the cluster containing Armenia.

- Later still, the two big composite clusters are merged together. This process continues

- until there is only one cluster left, and it contains all the countries.

- Hierarchical clustering with SciPy

- We’ll use functions from scipy to perform a hierarchical clustering on the array of scores.

- For the dendrogram, we’ll also need a list of country names.

- Firstly, import the linkage and dendrogram functions.

- Then, apply the linkage function to the sample array.

- Its the linkage function that performs the hierarchical clustering.

- Notice there is an extra method parameter - we’ll cover that in the next video.

- Now pass the output of linkage to the dendrogram function, specifying the list of country names as the labels parameter.

- In the next video, you’ll learn how to extract information from a hierarchical clustering.

A Note Regarding the Data

- The Eurovision data,

euv, is used for the lecture and some of the following exercises. - The

.shape of the Eurovision samples is (42, 26) - The Eurovision DataFrame must be pivoted to achieve the correct shape

'From country' is index'To country' is columns'Jury Points' is values- In

samples produced by DataCamp, they have changed the order of the values for every row, so that the correct data point does not correctly correspond to 'To country' - Other than copying

samples from the iPython shell, there isn’t an automated way, that I can see, to sort the rows to match the DataCamp example, so the Dendrogram will not look the same

1

2

| euvp = euv.pivot(index='From country', columns='To country', values='Jury Points').fillna(0)

euv_samples = euvp.to_numpy()

|

| To country | Armenia | Australia | Austria | Azerbaijan | Belgium |

|---|

| From country | | | | | |

|---|

| Albania | 0.0 | 12.0 | 0.0 | 0.0 | 0.0 |

|---|

| Armenia | 0.0 | 5.0 | 0.0 | 0.0 | 4.0 |

|---|

| Australia | 0.0 | 0.0 | 0.0 | 0.0 | 12.0 |

|---|

| Austria | 2.0 | 12.0 | 0.0 | 0.0 | 5.0 |

|---|

| Azerbaijan | 0.0 | 7.0 | 0.0 | 0.0 | 0.0 |

|---|

1

2

3

4

5

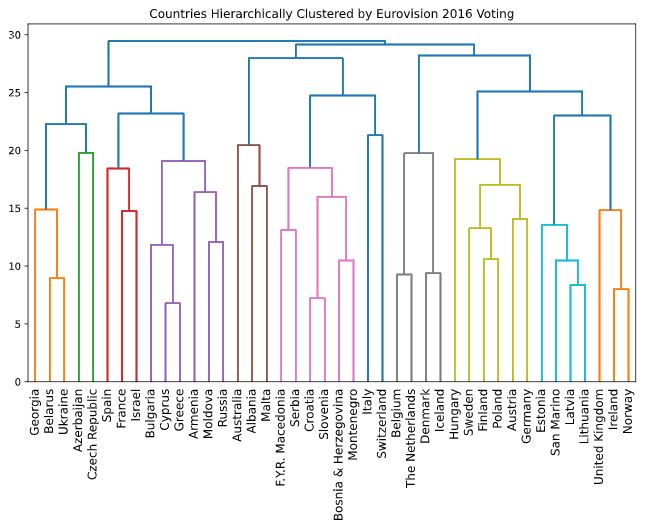

| plt.figure(figsize=(16, 6))

euv_mergings = linkage(euv_samples, method='complete')

dendrogram(euv_mergings, labels=euvp.index, leaf_rotation=90, leaf_font_size=12)

plt.title('Countries Hierarchically Clustered by Eurovision 2016 Voting')

plt.show()

|

How many merges?

If there are 5 data samples, how many merge operations will occur in a hierarchical clustering?

(To help answer this question, think back to the video, in which Ben walked through an example of hierarchical clustering using 6 countries.)

Possible Answers

- 4 merges.

- With 5 data samples, there would be 4 merge operations, and with 6 data samples, there would be 5 merges, and so on.

3 merges.This can’t be known in advance.

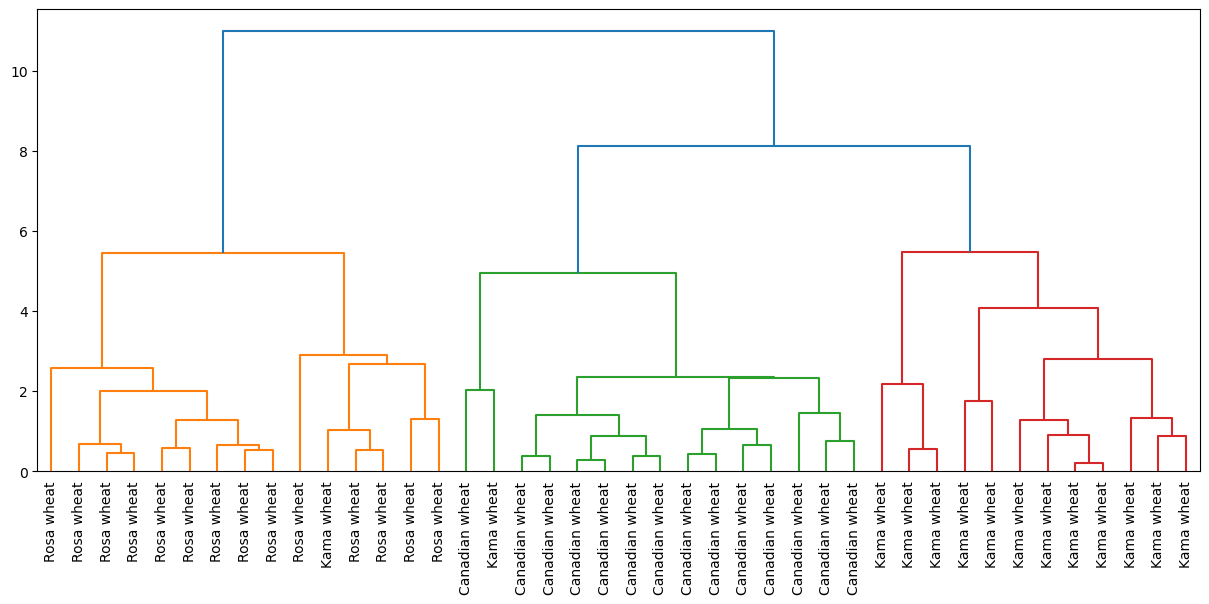

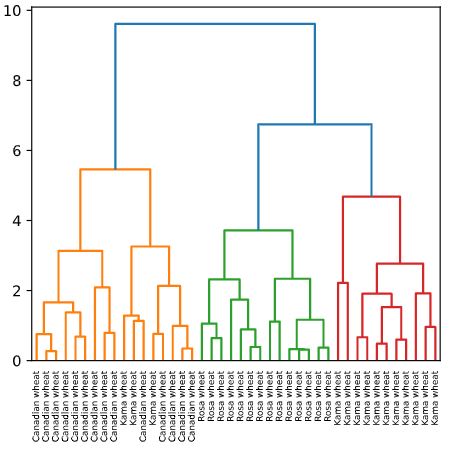

Hierarchical clustering of the grain data

In the video, you learned that the SciPy linkage() function performs hierarchical clustering on an array of samples. Use the linkage() function to obtain a hierarchical clustering of the grain samples, and use dendrogram() to visualize the result. A sample of the grain measurements is provided in the array samples, while the variety of each grain sample is given by the list varieties.

Instructions

- Import:

linkage and dendrogram from scipy.cluster.hierarchy.matplotlib.pyplot as plt.

- Perform hierarchical clustering on

samples using the linkage() function with the method='complete' keyword argument. Assign the result to mergings. - Plot a dendrogram using the

dendrogram() function on mergings. Specify the keyword arguments labels=varieties, leaf_rotation=90, and leaf_font_size=6.

1

2

3

4

| # the DataCamp sample uses a subset of the seed data; the linkage result is very dependant upon the random_state

seed_sample = sed.groupby('varieties').sample(n=14, random_state=250)

samples = seed_sample.iloc[:, :7]

varieties = seed_sample.varieties.tolist()

|

1

2

3

4

5

6

7

8

9

10

11

| # Perform the necessary imports

# from scipy.cluster.hierarchy import linkage, dendrogram

# import matplotlib.pyplot as plt

# Calculate the linkage: mergings

mergings = linkage(samples, method='complete')

# Plot the dendrogram, using varieties as labels

plt.figure(figsize=(15, 6))

dendrogram(mergings, labels=varieties, leaf_rotation=90, leaf_font_size=10)

plt.show()

|

Dendrograms are a great way to illustrate the arrangement of the clusters produced by hierarchical clustering.

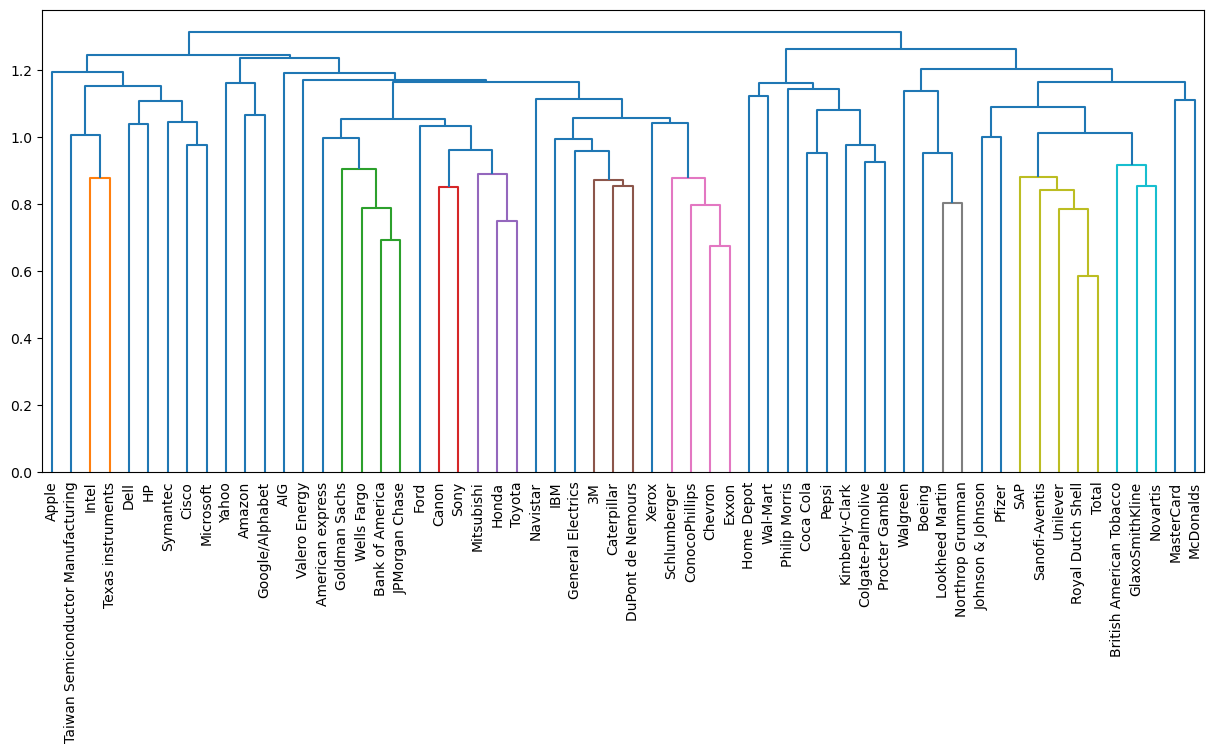

Hierarchies of stocks

In chapter 1, you used k-means clustering to cluster companies according to their stock price movements. Now, you’ll perform hierarchical clustering of the companies. You are given a NumPy array of price movements movements, where the rows correspond to companies, and a list of the company names companies. SciPy hierarchical clustering doesn’t fit into a sklearn pipeline, so you’ll need to use the normalize() function from sklearn.preprocessing instead of Normalizer.

linkage and dendrogram have already been imported from scipy.cluster.hierarchy, and PyPlot has been imported as plt.

Instructions

- Import

normalize from sklearn.preprocessing. - Rescale the price movements for each stock by using the

normalize() function on movements. - Apply the

linkage() function to normalized_movements, using 'complete' linkage, to calculate the hierarchical clustering. Assign the result to mergings. - Plot a dendrogram of the hierarchical clustering, using the list

companies of company names as the labels. In addition, specify the leaf_rotation=90, and leaf_font_size=6 keyword arguments as you did in the previous exercise.

1

2

3

4

5

6

7

8

9

10

11

12

13

| # Import normalize

# from sklearn.preprocessing import normalize

# Normalize the movements: normalized_movements

normalized_movements = normalize(stk)

# Calculate the linkage: mergings

mergings = linkage(normalized_movements, method='complete')

# Plot the dendrogram

plt.figure(figsize=(15, 6))

dendrogram(mergings, labels=stk.index, leaf_rotation=90, leaf_font_size=10)

plt.show()

|

Cluster labels in hierarchical clustering

- Cluster labels in hierarchical clustering

- To create a great visualization of the voting behavior at the Eurovision.

- But hierarchical clustering is not only a visualization tool.

- In this video, you’ll learn how to extract the clusters from intermediate stages of a hierarchical clustering.

- The cluster labels for these intermediate clusterings can then be used in further computations, such as cross tabulations, just like the cluster labels from k-means.

- Intermediate clusterings & height on dendrogram

- An intermediate stage in the hierarchical clustering is specified by choosing a height on the dendrogram.

- For example, choosing a height of 15 defines a clustering in which Bulgaria, Cyprus and Greece are in one cluster, Russia and Moldova are in another, and Armenia is in a cluster on its own.

- But what is the meaning of the height?

- Dendrograms show cluster distances

- The y-axis of the dendrogram encodes the distance between merging clusters.

- For example, the distance between the cluster containing Cyprus and the cluster containing Greece was approximately 6 when they were merged into a single cluster.

- When this new cluster was merged with the cluster containing Bulgaria, the distance between them was 12.

- Intermediate clusterings & height on dendrogram

- So the height that specifies an intermediate clustering corresponds to a distance.

- This specifies that the hierarchical clustering should stop merging clusters when all clusters are at least this far apart.

- Distance between clusters

- The distance between two clusters is measured using a “linkage method”.

- In our example, we used “complete” linkage, where the distance between two clusters is the maximum of the distances between their samples.

- This was specified via the “method” parameter.

- There are many other linkage methods, and you’ll see in the exercises that different linkage methods give different hierarchical clusterings!

- Extracting cluster labels

- The cluster labels for any intermediate stage of the hierarchical clustering can be extracted using the fcluster function.

- Let’s try it out, specifying the height of 15.

- Extracting cluster labels using fcluster

- After performing the hierarchical clustering of the Eurovision data, import the fcluster function.

- Then pass the result of the linkage function to the fcluster function, specifying the height as the second argument.

- This returns a numpy array containing the cluster labels for all the countries.

- Aligning cluster labels with country names

- To inspect cluster labels, let’s use a DataFrame to align the labels with the country names.

- Firstly, import pandas, then create the data frame, and then sort by cluster label, printing the result.

- As expected, the cluster labels group Bulgaria, Greece and Cyprus in the same cluster.

- But do note that the scipy cluster labels start at 1, not at 0 like they do in scikit-learn.

1

2

3

| mergings = linkage(euv_samples, method='complete')

labels = fcluster(mergings, 15, criterion='distance')

print(labels)

|

1

2

| [11 13 1 26 22 21 12 9 19 10 17 33 3 28 4 29 6 5 30 17 24 27 2 5

16 21 14 7 21 18 6 14 20 8 23 4 18 25 3 31 32 15]

|

1

2

| pairs = pd.DataFrame({'labels': labels, 'countries': euvp.index}).sort_values('labels')

pairs

|

| labels | countries |

|---|

| 2 | 1 | Australia |

|---|

| 22 | 2 | Ireland |

|---|

| 38 | 3 | Switzerland |

|---|

| 12 | 3 | Denmark |

|---|

| 35 | 4 | Slovenia |

|---|

| 14 | 4 | F.Y.R. Macedonia |

|---|

| 23 | 5 | Israel |

|---|

| 17 | 5 | Georgia |

|---|

| 16 | 6 | France |

|---|

| 30 | 6 | Norway |

|---|

| 27 | 7 | Malta |

|---|

| 33 | 8 | San Marino |

|---|

| 7 | 9 | Bosnia & Herzegovina |

|---|

| 9 | 10 | Croatia |

|---|

| 0 | 11 | Albania |

|---|

| 6 | 12 | Belgium |

|---|

| 1 | 13 | Armenia |

|---|

| 31 | 14 | Poland |

|---|

| 26 | 14 | Lithuania |

|---|

| 41 | 15 | United Kingdom |

|---|

| 24 | 16 | Italy |

|---|

| 10 | 17 | Cyprus |

|---|

| 19 | 17 | Greece |

|---|

| 36 | 18 | Spain |

|---|

| 29 | 18 | Montenegro |

|---|

| 8 | 19 | Bulgaria |

|---|

| 32 | 20 | Russia |

|---|

| 25 | 21 | Latvia |

|---|

| 5 | 21 | Belarus |

|---|

| 28 | 21 | Moldova |

|---|

| 4 | 22 | Azerbaijan |

|---|

| 34 | 23 | Serbia |

|---|

| 20 | 24 | Hungary |

|---|

| 37 | 25 | Sweden |

|---|

| 3 | 26 | Austria |

|---|

| 21 | 27 | Iceland |

|---|

| 13 | 28 | Estonia |

|---|

| 15 | 29 | Finland |

|---|

| 18 | 30 | Germany |

|---|

| 39 | 31 | The Netherlands |

|---|

| 40 | 32 | Ukraine |

|---|

| 11 | 33 | Czech Republic |

|---|

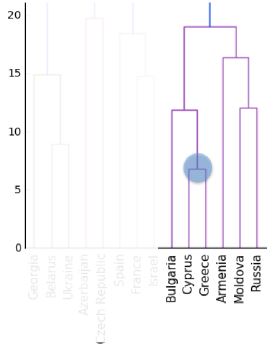

Which clusters are closest?

In the video, you learned that the linkage method defines how the distance between clusters is measured. In complete linkage, the distance between clusters is the distance between the furthest points of the clusters. In single linkage, the distance between clusters is the distance between the closest points of the clusters.

Consider the three clusters in the diagram. Which of the following statements are true?

A. In single linkage, Cluster 3 is the closest to Cluster 2.

B. In complete linkage, Cluster 1 is the closest to Cluster 2.

Possible Answers

Neither A nor B.A only.- Both A and B.

Different linkage, different hierarchical clustering

In the video, you saw a hierarchical clustering of the voting countries at the Eurovision song contest using 'complete' linkage. Now, perform a hierarchical clustering of the voting countries with 'single' linkage, and compare the resulting dendrogram with the one in the video. Different linkage, different hierarchical clustering!

You are given an array samples. Each row corresponds to a voting country, and each column corresponds to a performance that was voted for. The list country_names gives the name of each voting country. This dataset was obtained from Eurovision.

Instructions

- Import

linkage and dendrogram from scipy.cluster.hierarchy. - Perform hierarchical clustering on

samples using the linkage() function with the method='single' keyword argument. Assign the result to mergings. - Plot a dendrogram of the hierarchical clustering, using the list

country_names as the labels. In addition, specify the leaf_rotation=90, and leaf_font_size=6 keyword arguments as you have done earlier.

1

| country_names = euv['From country'].unique()

|

1

2

3

4

5

6

7

8

9

10

11

| # Perform the necessary imports

# import matplotlib.pyplot as plt

# from scipy.cluster.hierarchy import linkage, dendrogram

# Calculate the linkage: mergings

mergings = linkage(euv_samples, method='single')

# Plot the dendrogram

plt.figure(figsize=(16, 6))

dendrogram(mergings, labels=country_names, leaf_rotation=90, leaf_font_size=12)

plt.show()

|

As you can see, performing single linkage hierarchical clustering produces a different dendrogram!

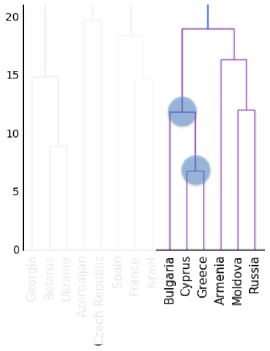

Displayed on the right is the dendrogram for the hierarchical clustering of the grain samples that you computed earlier. If the hierarchical clustering were stopped at height 6 on the dendrogram, how many clusters would there be?

Possible Answers

1- 3

As many as there were at the beginning.

In the previous exercise, you saw that the intermediate clustering of the grain samples at height 6 has 3 clusters. Now, use the fcluster() function to extract the cluster labels for this intermediate clustering, and compare the labels with the grain varieties using a cross-tabulation.

The hierarchical clustering has already been performed and mergings is the result of the linkage() function. The list varieties gives the variety of each grain sample.

Instructions

- Import:

pandas as pd.fcluster from scipy.cluster.hierarchy.

- Perform a flat hierarchical clustering by using the

fcluster() function on mergings. Specify a maximum height of 6 and the keyword argument criterion='distance'. - Create a DataFrame

df with two columns named 'labels' and 'varieties', using labels and varieties, respectively, for the column values. This has been done for you. - Create a cross-tabulation

ct between df['labels'] and df['varieties'] to count the number of times each grain variety coincides with each cluster label.

1

2

3

4

5

6

7

| # the DataCamp sample uses a subset of the seed data; the linkage result is very dependant upon the random_state

seed_sample = sed.groupby('varieties').sample(n=14, random_state=250)

samples = seed_sample.iloc[:, :7]

varieties = seed_sample.varieties.tolist()

# Calculate the linkage: mergings

mergings = linkage(samples, method='complete')

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

| # Perform the necessary imports

# import pandas as pd

# from scipy.cluster.hierarchy import fcluster

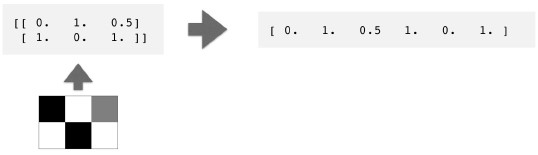

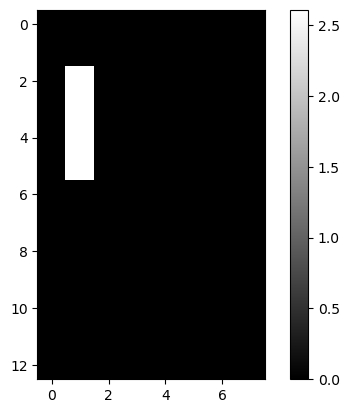

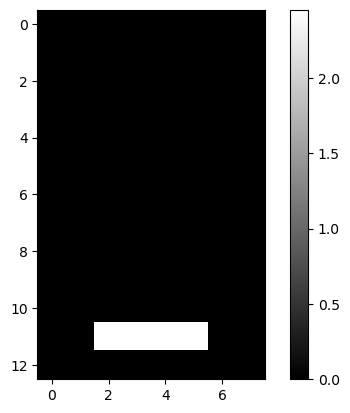

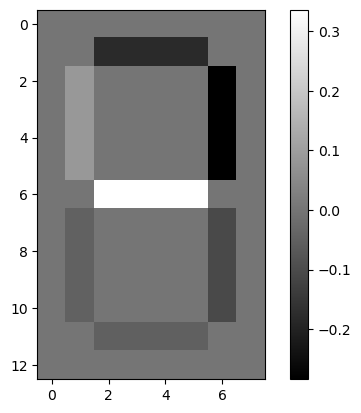

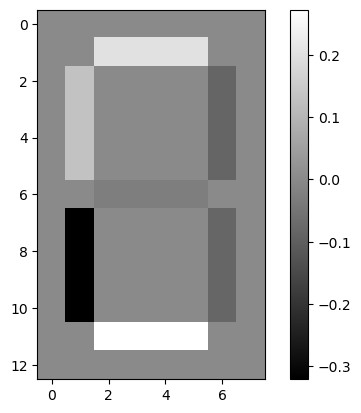

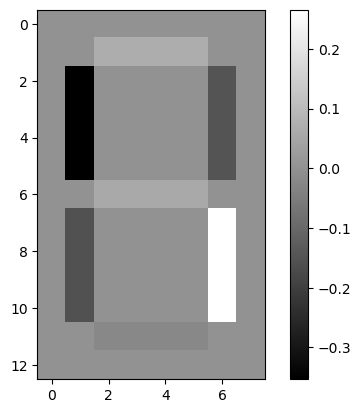

# Use fcluster to extract labels: labels